SEO case study: How TemplateMonster found 3 million orphaned pages that were regularly visited by the Google search bot

We’ve recently applied this Tech SEO Checklist to help TemplateMonster migrate to a new UI and new CMS without legacy SEO troubles.

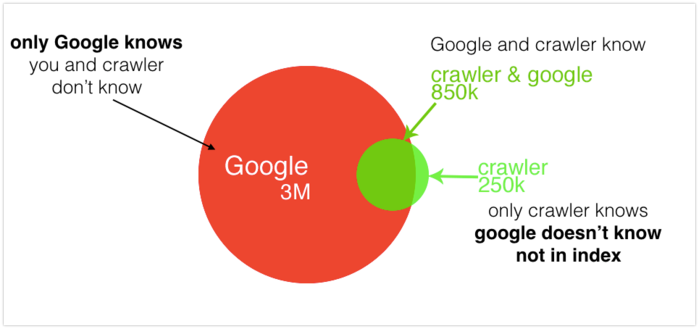

We found 3 million pages outside the site structure on which crawl budget had been wasted for a long time. We also surfaced another 250K pages that were in the site structure but would never have been visited by search bots without our recommendations. As a result, an effective and smooth site migration led TemplateMonster to a new era of SEO.

Numerous pitfalls await unsuspecting SEOs when they start working on websites with a history. Typically, older websites have complex structures that were developed by several generations of webmasters. As a result, the eventual site structure might be non-optimal and overcomplicated. Most commonly, fresh SEO teams are not aware of secrets that such sites have in store. If you are the one who has to deal with it, puzzling things out is the number one task.

Another challenge is redesigns and migrations. On the one hand, businesses have to keep up with current web standards and UX/UI trends. On the other hand, this can be overwhelmingly tricky, as every modification affects SEO to some extent. This matters not only to SEO geeks but also to the business: once traffic is affected, revenue suffers. Missed organic visitors mean missed conversions and lower income.

The silver lining is that a site migration is a great opportunity to resolve all the SEO surprises and address current issues. Our client, Template Monster, is a perfect example.

What were the customer’s goals?

Template Monster first went online in 2002. As of 2018, Template Monster had a million URLs and 3 million monthly visits.

The client’s main goals at that point were:

- Redesign;

- CMS Migration.

The process was expected to be flawless, with no drops in organic traffic and no revenue loss.

How did we help the customer to meet these needs?

After evaluating our client’s goals, we designed a traffic-oriented strategy which included two major steps:

- To carry out a complete crawl of the current website with JetOctopus in a bid to understand site structure on a granular level, and determine technical SEO issues.

- To look at the website through Google’s ‘eyes’ with log analysis to see how the search bot scans Templatemonster.com.
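To make the second step more concrete, here is a minimal sketch of pulling Googlebot requests out of a server access log. It assumes the log uses the common "combined" format and filters on the user-agent string only; the sample lines, IPs, and paths are made up for illustration, and this is not a description of how JetOctopus itself processes logs.

```python
import re

# Assumed layout (combined log format):
# ip - - [time] "METHOD path HTTP/x" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Yield (path, status) for each request whose user agent mentions Googlebot."""
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            yield match.group("path"), int(match.group("status"))


# Hypothetical sample lines, for illustration only.
sample = [
    '66.249.66.1 - - [10/Oct/2018:13:55:36 +0000] "GET /old-template-123.html HTTP/1.1" '
    '200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2018:13:55:40 +0000] "GET /wordpress-themes/ HTTP/1.1" '
    '200 8300 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '66.249.66.1 - - [10/Oct/2018:13:56:02 +0000] "GET /broken-page HTTP/1.1" '
    '500 310 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

hits = list(googlebot_hits(sample))
print(hits)
```

A user-agent string can be spoofed, so a production pipeline would usually also verify that the requesting IP really belongs to Google (for example via a reverse DNS lookup) before trusting these hits.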

The main idea was to find and fix technical issues in advance, prior to the website’s migration.

2D analysis is a lifesaver

Crawl data combined with log file data reveals the site’s true structure and crawl ratio. For the first time, the TemplateMonster team was able to see their site as Googlebot perceives it.

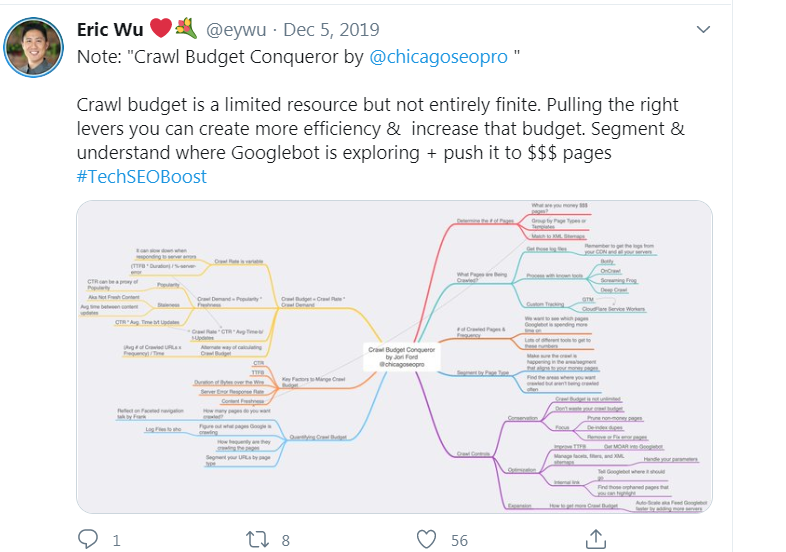

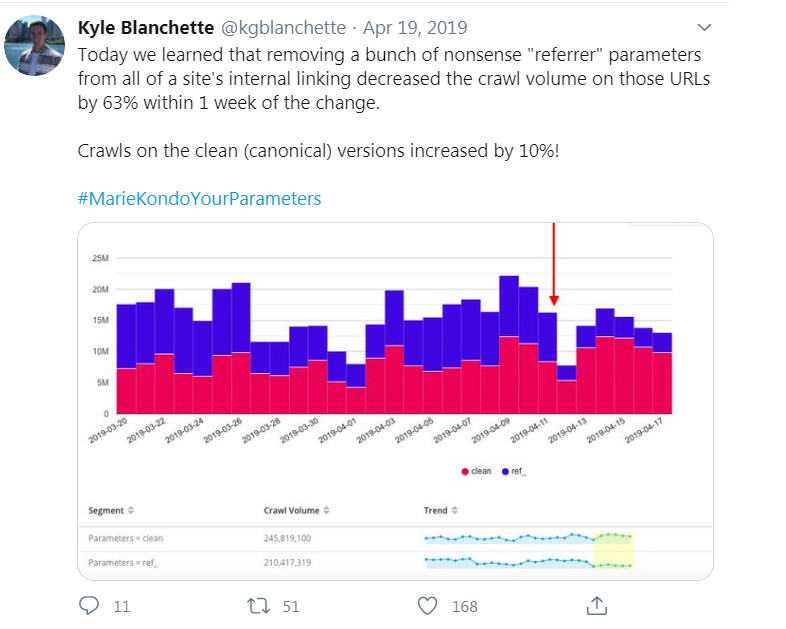

Firstly, let’s recap how Google crawls and indexes websites, and why the understanding of Googlebot behavior on your website is essential. Crawling is the process by which Google and other search bots discover new and updated pages to be added to the index. Bots’ crawling capacity is always limited, and Googlebot defines a so-called ‘crawl budget’ for every single website. The more pages Googlebot crawls, the better. But the number of crawled URLs does not necessarily correlate with healthy indexation. Sometimes Google continues to crawl outdated pages and doesn’t care that much about valuable and relevant URLs.

That was the case for Template Monster. The log analysis was a revelation:

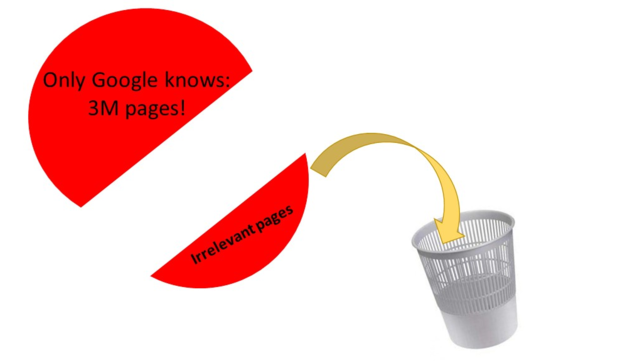

We discovered 3 million unknown orphaned pages crawled by Googlebot, and 250,000 pages that were not crawled.

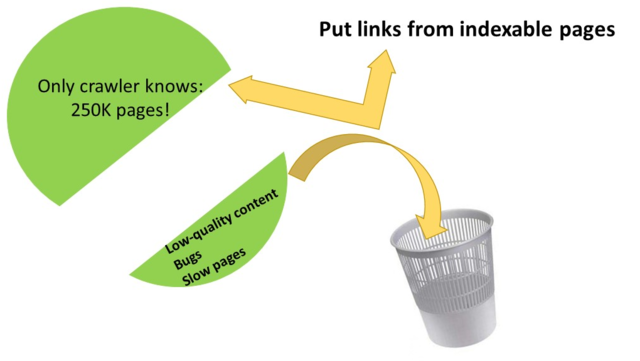

Among these 250k URLs were valuable pages that Google had never crawled. This means these pages had never been ranked in Google, so potential clients could not find them.
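The two groups of pages described above can be thought of as set differences between what the crawler found and what the logs show Googlebot requesting. The sketch below is a simplified illustration under that assumption; the function name, the sample URLs, and the exact definition of "crawl ratio" (share of in-structure pages that Googlebot visited) are our own choices for the example.

```python
def compare_crawl_and_logs(crawled_urls, bot_visited_urls):
    """Compare URLs found by a site crawl with URLs requested by Googlebot.

    Returns (orphaned, not_visited, crawl_ratio), where:
      orphaned    - visited by the bot but absent from the site structure
      not_visited - present in the site structure but never requested by the bot
      crawl_ratio - assumed definition: visited in-structure pages / all in-structure pages
    """
    crawled = set(crawled_urls)
    visited = set(bot_visited_urls)
    orphaned = visited - crawled
    not_visited = crawled - visited
    crawl_ratio = len(crawled & visited) / len(crawled) if crawled else 0.0
    return orphaned, not_visited, crawl_ratio


# Hypothetical URLs, for illustration only.
crawled = ["/", "/wordpress-themes/", "/new-landing/", "/about/"]
visited = ["/", "/wordpress-themes/", "/old-template-123.html"]

orphaned, not_visited, ratio = compare_crawl_and_logs(crawled, visited)
print(sorted(orphaned))     # ['/old-template-123.html']
print(sorted(not_visited))  # ['/about/', '/new-landing/']
print(ratio)                # 0.5
```

In practice both URL lists would need to be normalized the same way (scheme, host, trailing slashes, query parameters) before comparison, otherwise the same page can show up in both difference sets.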

There was plenty of room for improvement!


What recommendations did we give?

With 3 million pages in the black box, the Template Monster team had a lot of work to do:

- To find out useful and commercial pages and include them in the site structure.

- To get rid of useless orphaned pages.

Another important milestone was the pages that were not visited by Googlebot. The SEO team had to analyze 250,000 URLs to identify the relevant pages and improve their indexability:

- Link these pages from indexable pages.

- Add these pages to sitemap.xml.
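As an illustration of the sitemap step, here is a minimal sketch that builds sitemap XML from a list of URLs using only the Python standard library. The example.com URLs are placeholders. Because the sitemap protocol allows at most 50,000 URLs per file, a list of 250,000 pages would have to be split across several files, so a small chunking helper is included.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # limit defined by the sitemap protocol


def chunk(urls, size=MAX_URLS_PER_FILE):
    """Split a list of URLs into pieces small enough for one sitemap file each."""
    for start in range(0, len(urls), size):
        yield urls[start:start + size]


def build_sitemap(urls):
    """Return a sitemap XML document (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped by ElementTree
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs, for illustration only.
pages = ["https://example.com/new-landing/", "https://example.com/about/"]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Each chunk would be written to its own file (for example by looping over `chunk(all_urls)`), and the resulting files are then typically referenced from a sitemap index file.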

Also, it was important to remove useless and low-quality pages from the site structure.

Server log analysis also revealed dead and 5xx pages which were frequently visited by the search bot, wasting the crawl budget. These pages were either fixed or deleted.
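A simple way to prioritize such pages is to count how often the bot hit each failing URL. The sketch below assumes the log has already been reduced to `(path, status)` pairs for Googlebot requests; the function name and sample data are hypothetical.

```python
from collections import Counter


def wasted_bot_hits(hits, top=10):
    """Count bot requests that ended in a 4xx or 5xx response, most frequent first."""
    errors = Counter()
    for path, status in hits:
        if status >= 400:  # 4xx covers dead pages (404, 410), 5xx covers server errors
            errors[(path, status)] += 1
    return errors.most_common(top)


# Hypothetical (path, status) pairs, for illustration only.
bot_hits = [
    ("/broken-page", 500),
    ("/deleted-template", 404),
    ("/broken-page", 500),
    ("/", 200),
    ("/broken-page", 500),
]

report = wasted_bot_hits(bot_hits)
print(report)
# [(('/broken-page', 500), 3), (('/deleted-template', 404), 1)]
```

URLs at the top of this report are the ones where fixing or removing the page recovers the most crawl budget.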

Reap the results

The migration would never have succeeded without taking into account how Googlebot perceives the site structure. With the help of server log analysis and JetOctopus crawl data, Template Monster managed to improve the site’s overall health and optimize its structure, which resulted in better indexation and rankings as well as increased organic traffic and performance.

This case clearly demonstrates how a comprehensive SEO strategy can unleash the site’s true potential and turn the flaws into profitable business outcomes.

Read another case in which a site migration even led to a slight increase in SEO traffic.