The Log Analyzer you’ve been looking for

The most affordable log analyzer on the market. Easy to use, with many preset issue reports and a live log stream at hand. Two-click integration. No limits on log lines.

Identify Crawl Budget Waste

Crawl budget waste is a significant issue. Instead of valuable, profitable pages, Googlebot often crawls irrelevant and outdated ones.

The Most Important Problems in Logs

Log analysis gives you the opportunity to spot problems at an early stage, before they hurt your organic traffic.

JetOctopus runs various checks on logs: increased load time, unintended redirects, trash page generation, AJAX URLs, etc.
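To make two of these checks concrete, here is a minimal sketch of flagging redirects and slow responses in log data. It is not how JetOctopus implements its checks. It assumes the log lines are already parsed into dictionaries with hypothetical `path`, `status` and `seconds` fields, and the 2-second threshold is an arbitrary illustration.

```python
def find_issues(entries, slow_seconds=2.0):
    """Return (path, issue) pairs for redirects and slow responses.

    `entries` is a list of dicts with hypothetical keys:
    path (str), status (int), seconds (float response time).
    """
    issues = []
    for entry in entries:
        # Any 3xx status means the bot was sent somewhere else.
        if 300 <= entry["status"] < 400:
            issues.append((entry["path"], "redirect"))
        # The threshold is an assumption; tune it to your own site.
        if entry["seconds"] > slow_seconds:
            issues.append((entry["path"], "slow"))
    return issues


# Made-up sample records, for illustration only.
SAMPLE = [
    {"path": "/", "status": 200, "seconds": 0.3},
    {"path": "/old-page", "status": 301, "seconds": 0.1},
    {"path": "/catalog", "status": 200, "seconds": 3.4},
]

print(find_issues(SAMPLE))
```

Response time is not part of the default access log format on most web servers, so in practice it has to be enabled in the server's log configuration first.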

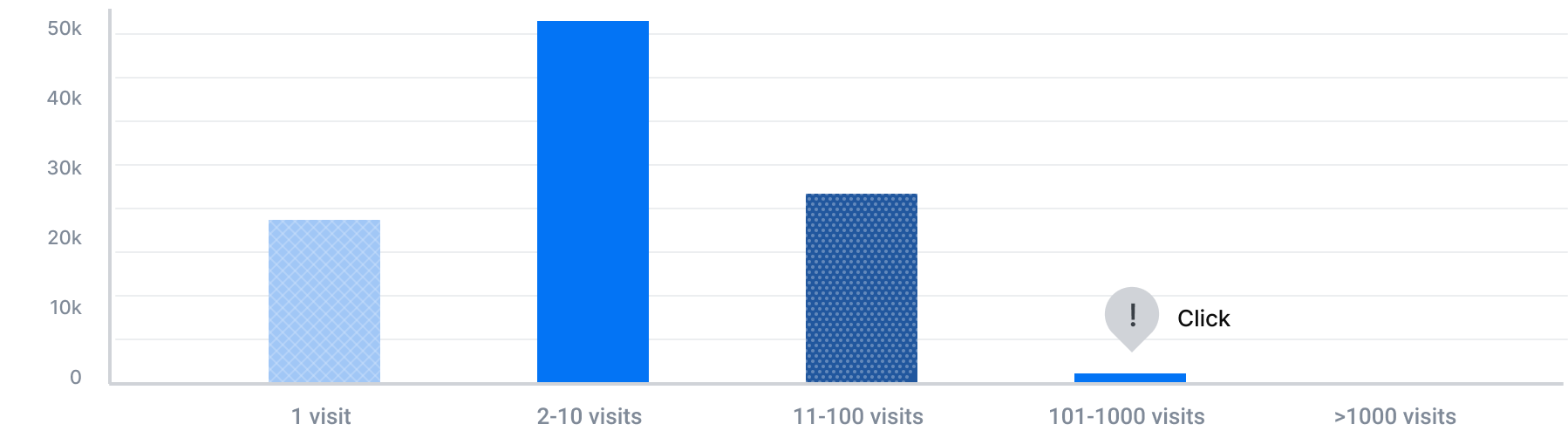

Define the most visited pages

"URLs that are more popular on the Internet tend to be crawled more often to keep them fresher in our index." (Google)

- The pages that Googlebot visits most often are considered the most important ones

- Pay the closest attention to these URLs, keeping them evergreen and accessible

- You can find the most visited URLs by clicking the chart in the Pages by Bot Visits report
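If you want to reproduce this kind of ranking from raw logs yourself, the idea can be sketched in a few lines of Python. This is an illustration only, not the product's implementation: it assumes access logs in the common "combined" format and identifies Googlebot by user-agent string alone, which does not filter out fake bots. The sample lines are made up.

```python
import re
from collections import Counter

# Combined Log Format:
# ip - user [time] "METHOD path PROTO" status size "referer" "user-agent"
LINE_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def googlebot_hits(lines):
    """Count requests per path whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


# Made-up sample lines, for illustration only.
SAMPLE = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /products/a HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2023:14:02:10 +0000] "GET /products/a HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2023:14:05:44 +0000] "GET /about HTTP/1.1" 200 128 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Oct/2023:14:06:01 +0000] "GET /about HTTP/1.1" 200 128 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
]

# Print paths from most to least visited by Googlebot.
for path, count in googlebot_hits(SAMPLE).most_common():
    print(path, count)
```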

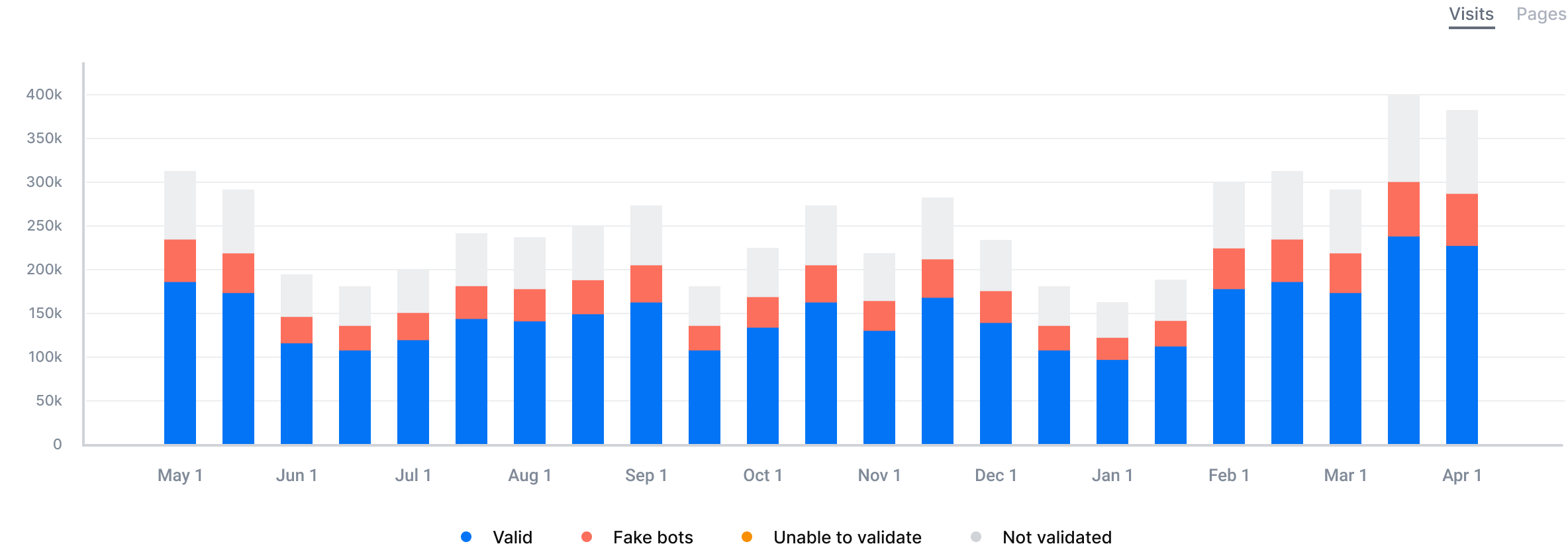

Analyze Crawl Budget Dynamics

Changes on a website can influence Googlebot's behavior, in particular how often it visits the site. An increase in Googlebot's visits is not always a good thing: it can be harmful, and it can also be the opposite.

The Bot Dynamics report helps you understand changes in Googlebot's behavior and identify critical issues as soon as they emerge. With logs at hand, you can avoid unexpected drops in SEO traffic.
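The underlying idea of tracking bot dynamics can be sketched as counting bot requests per day and flagging sharp day-over-day changes. The sketch below is an assumption-laden illustration, not the Bot Dynamics report itself: it takes timestamps in the access log format `10/Oct/2023:13:55:36 +0000`, and the 50% threshold and function names are hypothetical.

```python
from collections import Counter
from datetime import datetime


def daily_counts(timestamps):
    """Count bot requests per calendar day from access-log timestamps."""
    days = Counter()
    for ts in timestamps:
        day = datetime.strptime(ts, "%d/%b/%Y:%H:%M:%S %z").date()
        days[day] += 1
    # Sort by date so consecutive entries are consecutive observed days.
    return dict(sorted(days.items()))


def flag_changes(counts, threshold=0.5):
    """Return (day, previous, current) where the relative change exceeds threshold."""
    flagged = []
    days = list(counts)
    for prev_day, cur_day in zip(days, days[1:]):
        before, after = counts[prev_day], counts[cur_day]
        # Flag both spikes and drops; either can signal a problem.
        if before and abs(after - before) / before > threshold:
            flagged.append((cur_day, before, after))
    return flagged


# Made-up timestamps: 4 visits, then 4 visits, then a drop to 1.
SAMPLE_TS = (
    ["10/Oct/2023:13:55:36 +0000"] * 4
    + ["11/Oct/2023:09:12:00 +0000"] * 4
    + ["12/Oct/2023:18:30:05 +0000"]
)

print(flag_changes(daily_counts(SAMPLE_TS)))
```

Days with no bot visits at all do not appear in the counts, so a real implementation would probably want to fill in missing dates with zero before comparing.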

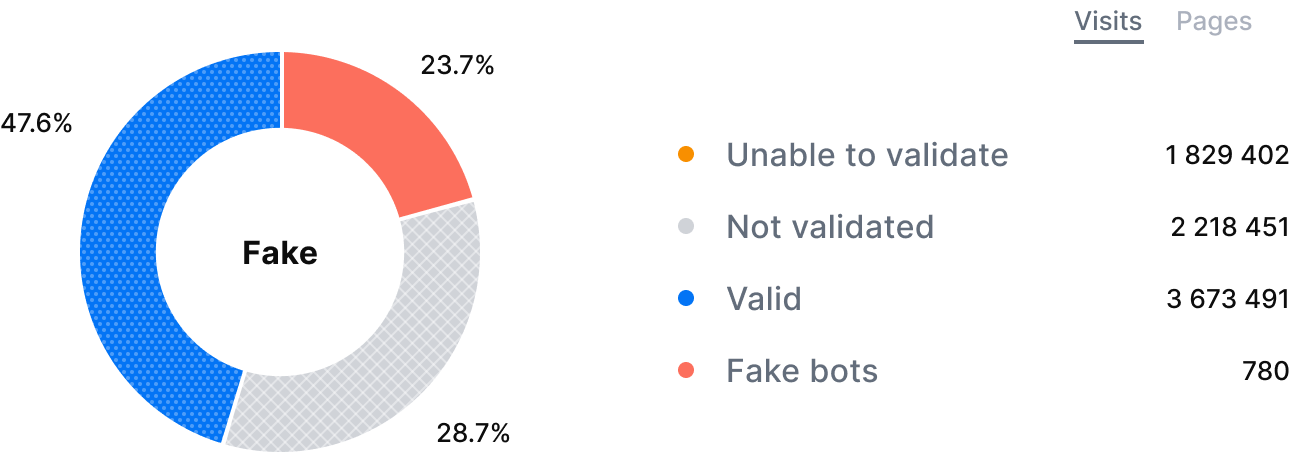

Dynamics of fake bots

SEO opportunities with log file analysis

Improve your website’s visibility

Check that your website is accessible for proper indexation. Get found online when your prospects search for products and services like yours.

Boost Organic Traffic

Eliminate technical errors and fix other on-site SEO issues to get more SEO traffic. Tech SEO is always predictable.

Increase your website’s indexation

Optimize site structure, improve interlinking, enrich content, fix duplicates, check your indexation management tags and get SEO traffic uplift.

Secure your SEO

Keep your raw logs at hand and be the first to spot errors on your website, so you can fix them right away, before Googlebot encounters them.

Horror #1

Solution #1

Horror #2

Horror #3
