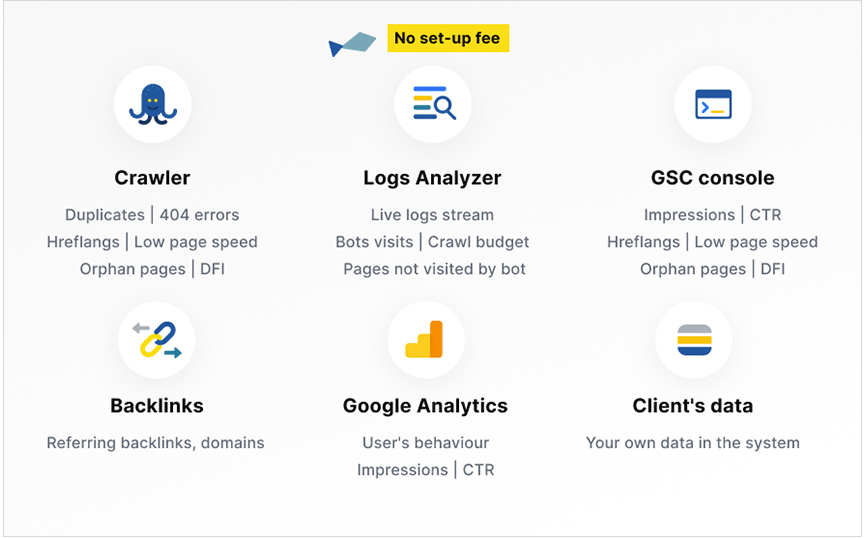

JetOctopus is the best fit for

E-commerce websites

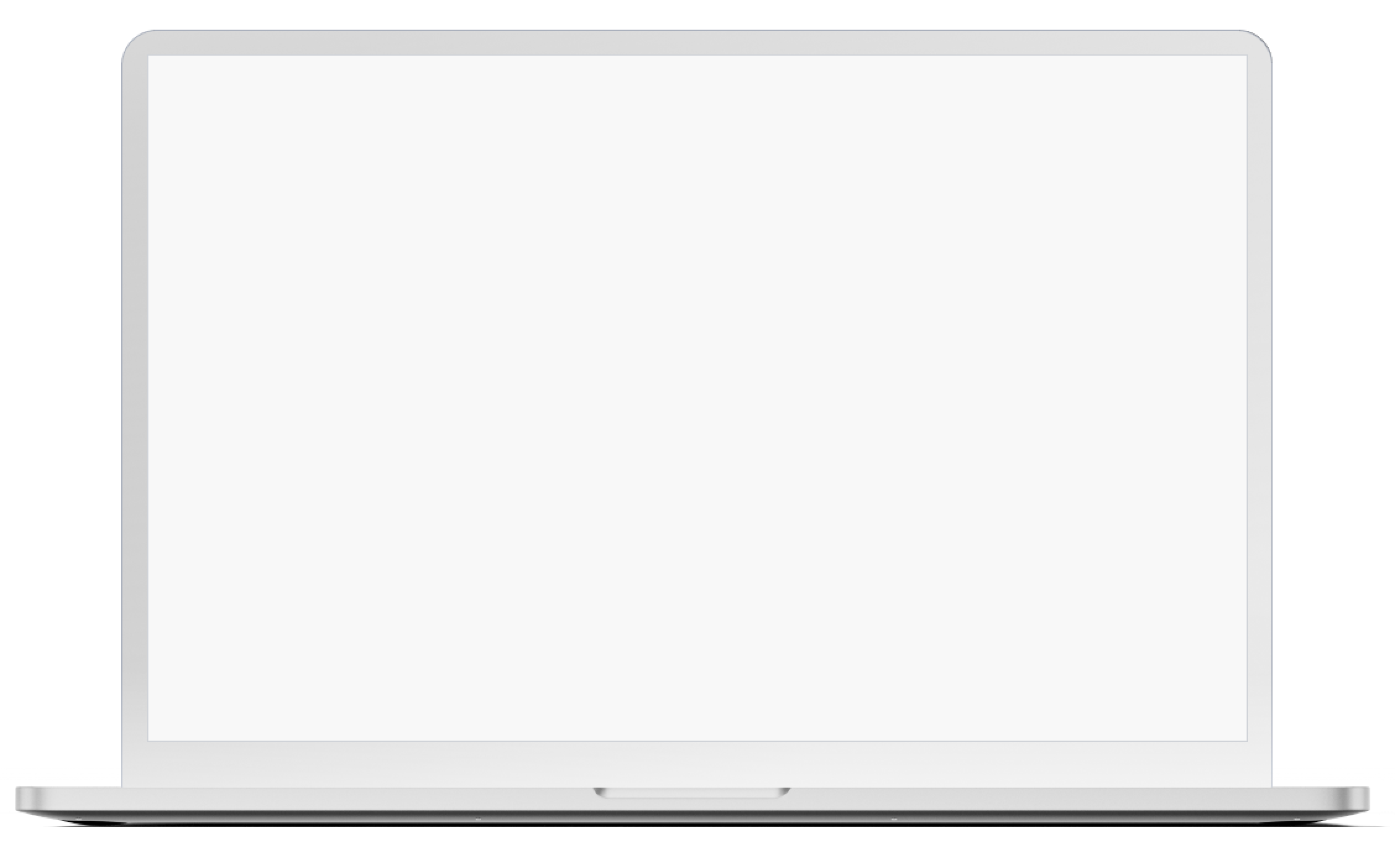

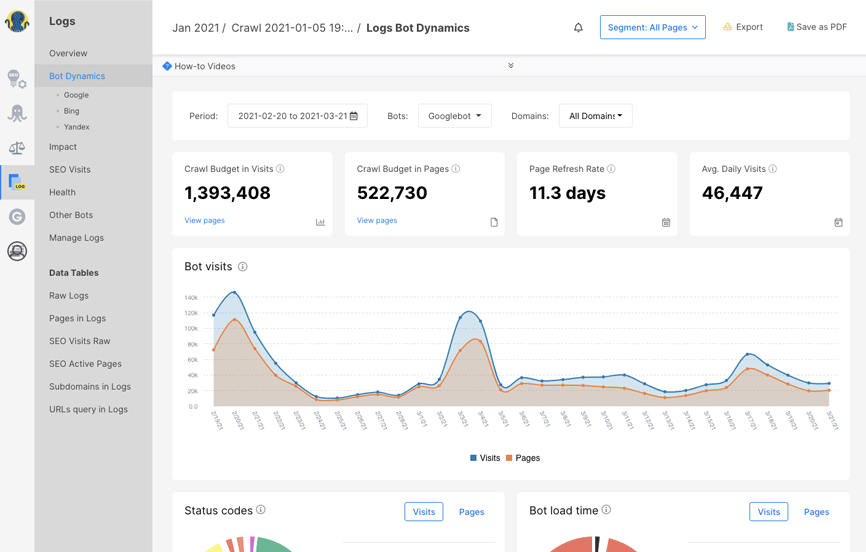

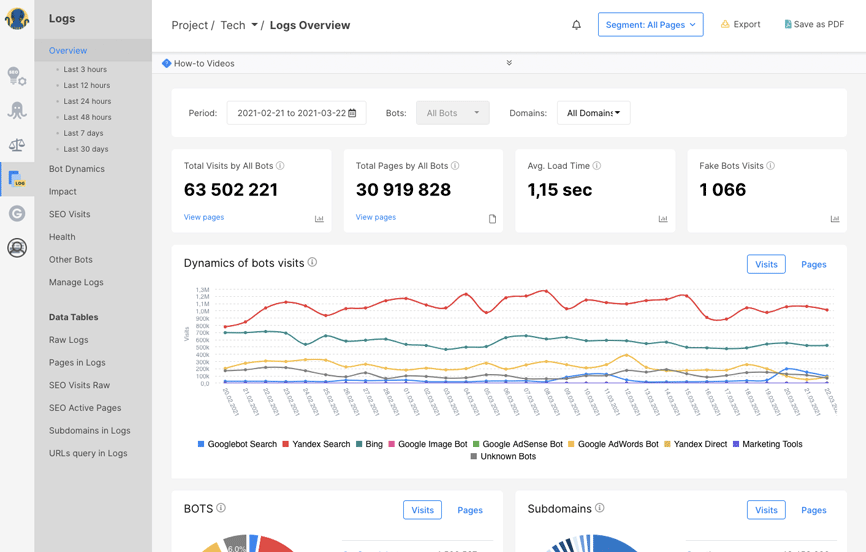

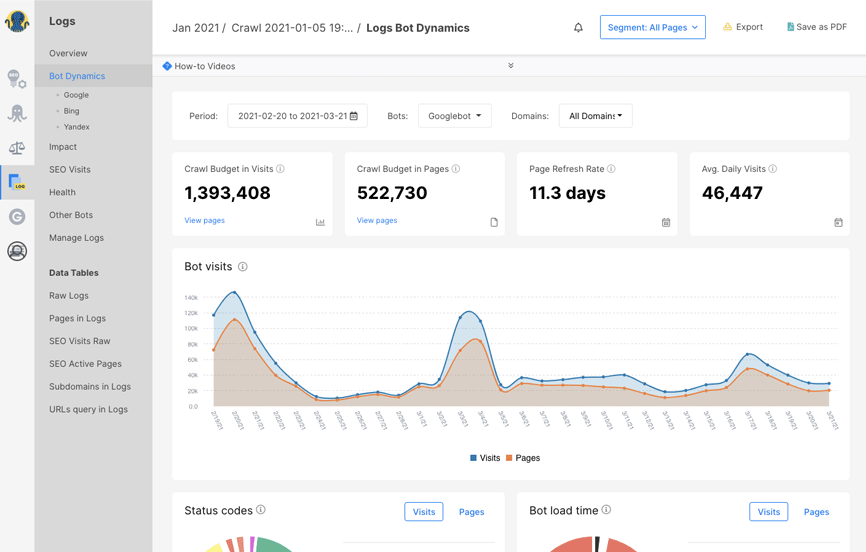

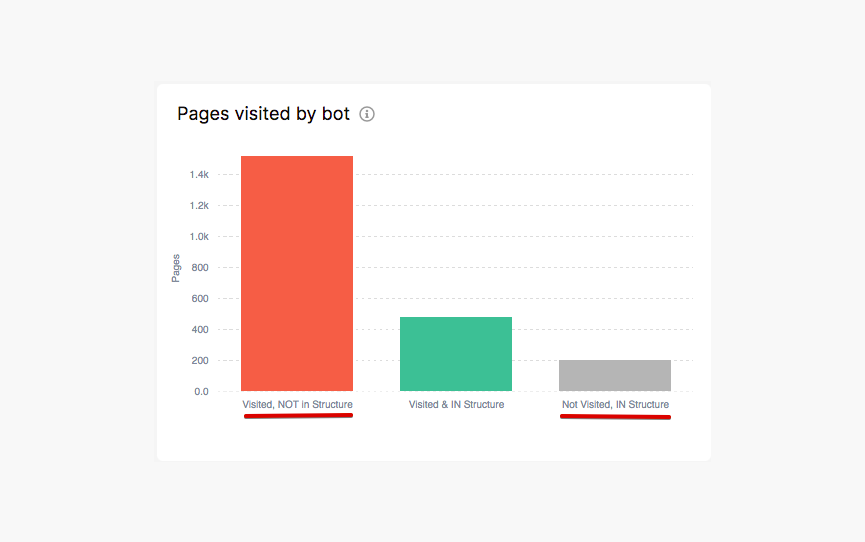

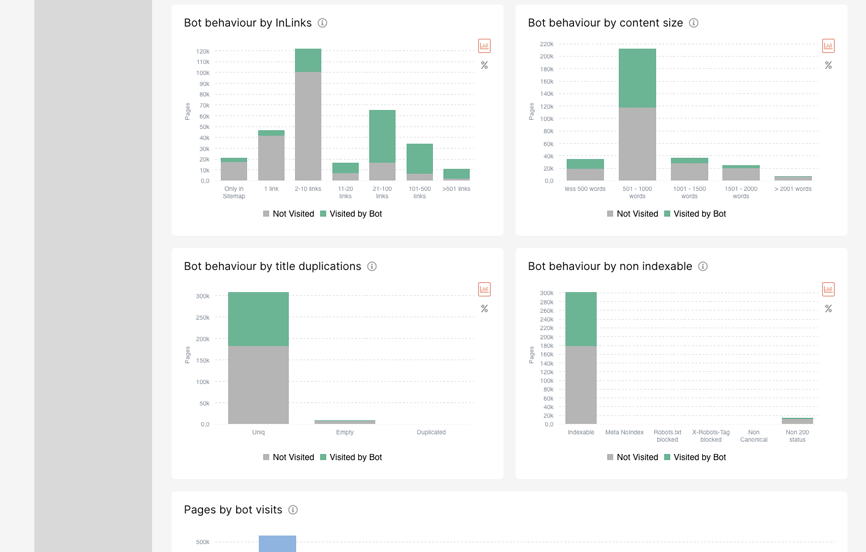

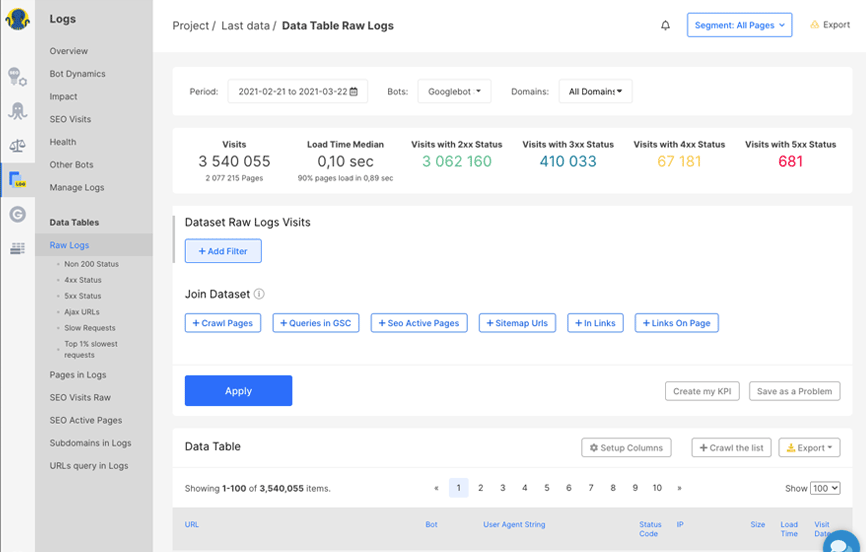

- When a website has 10K+ pages, site visibility for Googlebot is the number one priority.

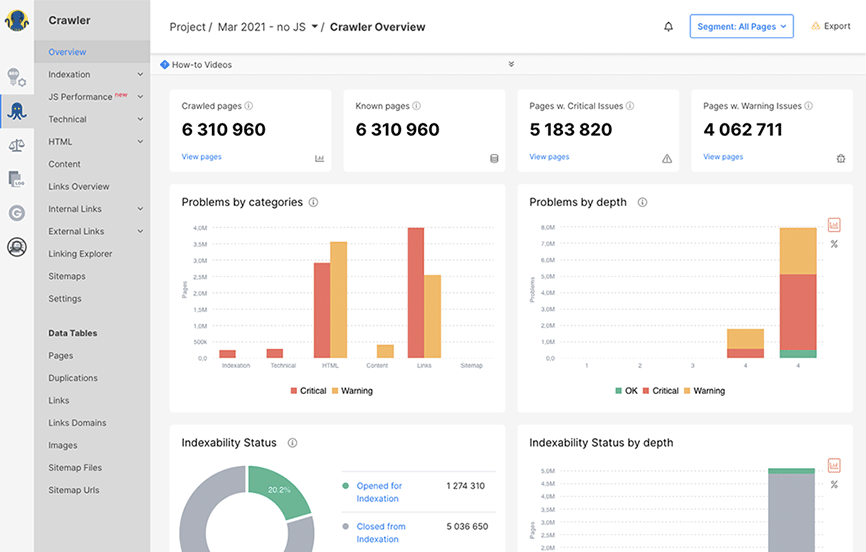

- The log analyzer is well suited to this task.

- Checking how each SEO optimization affects Googlebot's behavior on your website makes your strategy a winning one.

- The fastest crawler delivers fresh, up-to-date data on the fly without paralyzing your PC.

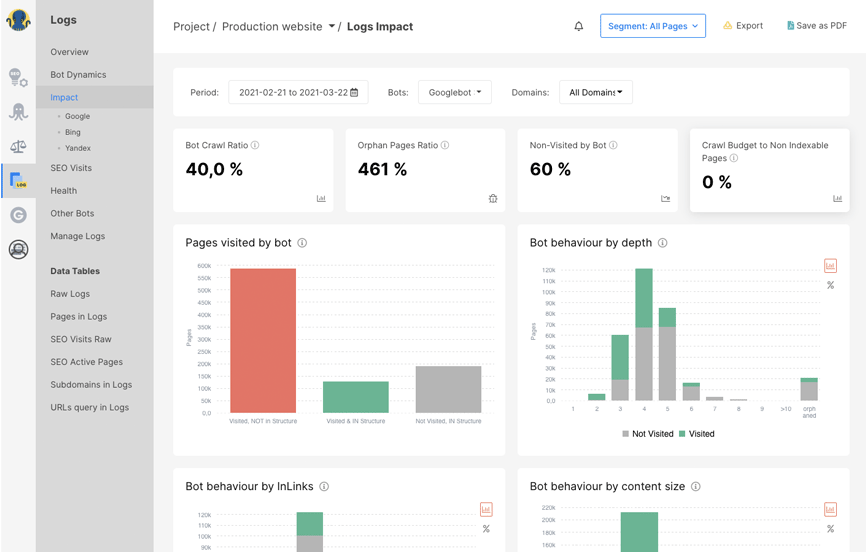

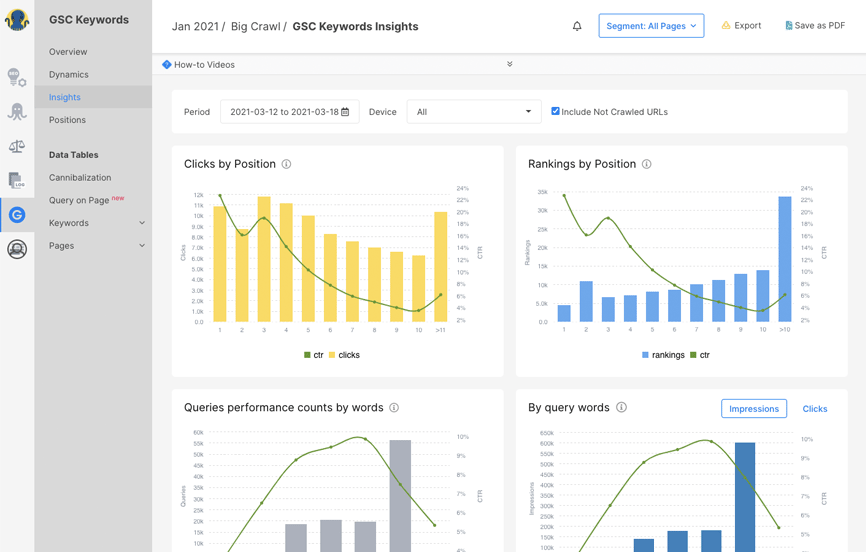

- GSC on Steroids reveals a ton of SERP efficiency insights and SEO opportunities. We store 16+ months of GSC data in your account.

- Alerts provide you with SEO security.

Digital agencies

- All your SEO customers in one account, always with fresh data (no extra charge for domains)

- Preset charts with SEO ideas and impactful opportunities (a true time saver)

- Alerts that provide SEO security to all your customers, making you a strategic partner

- Always work with full, fresh data without paralyzing your PC (cloud-based, with an option to schedule crawls)

- Expert SEO support and thorough onboarding

How JetOctopus helps you increase SEO traffic
