Log Analysis in the Age of AI Crawlers

How Search Works in 2026

Search has fundamentally changed. In 2026, visibility is no longer determined only by Google. Websites are now discovered, evaluated, and surfaced by:

- Googlebot (Search & Mobile)

- AI-driven search systems (ChatGPT Search, Perplexity, Bing Deep Search)

- Autonomous LLM crawlers (ChatGPT-User, OpenAI SearchBot, Claude-User, Perplexity-User)

- RAG-based engines that fetch content in real time

Modern SEO requires understanding how all these systems access your site and ensuring that your content is discoverable for both search engines and AI models.

Logs have become the single most reliable source of truth for understanding your real visibility across the entire search ecosystem.

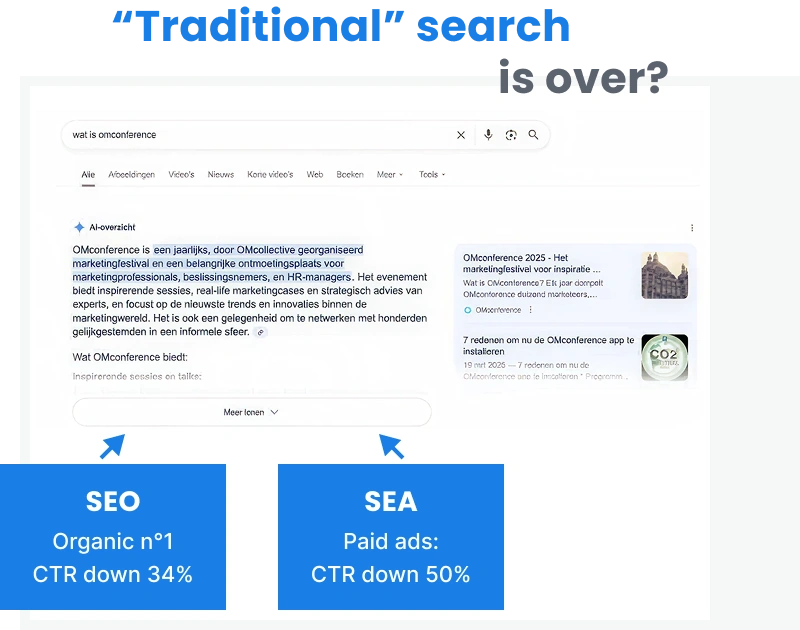

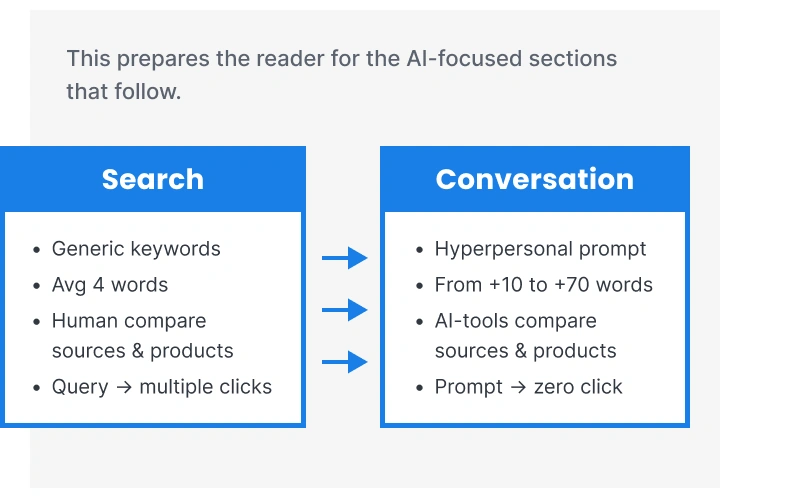

Key Shifts in the Search Ecosystem (Google + AI Search)

The search landscape is undergoing rapid transformation. The biggest shifts impacting your visibility include:

1. AI search is now mainstream

LLMs and AI-driven engines fetch live content for answer generation, training and contextual retrieval. They depend heavily on your site’s crawlability and technical health.

2. Content is evaluated beyond rankings

Your pages may influence AI answers even when they don’t rank in Google.

3. Crawl behavior is no longer Google-only

Each AI crawler has its own patterns, priorities and limitations.

4. Logs reveal the complete discovery reality

Only log file analysis shows:

– which systems crawl your site

– which URLs they hit

– where visibility breaks down

The Ultimate Guide to Log Analysis – a 21-Point Checklist for the Age of AI Crawlers

This checklist is designed for SEOs who want to strengthen their website’s visibility, improve indexation and grow organic traffic using data-driven insights from log files. Today, log analysis is not only about understanding Googlebot – it also helps you monitor AI user-bots, autonomous crawlers and LLM-driven traffic sources that increasingly rely on your content.

With this guide, you get a clear and actionable roadmap: how logs work, what signals to focus on, how to detect crawling issues early and how to optimize your site for both search engines and AI systems. You’ll also learn which tools can help you analyze logs efficiently and uncover new opportunities for visibility.

What Is Log File Analysis and Why You Need It in the AI Era

Log file analysis gives SEOs a complete, unbiased picture of how search engines, AI bots and automated systems actually interact with a website. It reveals technical issues, uncovers crawl waste, highlights indexation gaps and shows how both Googlebot and AI user-bots access your content.

Today, logs are essential not only for classic technical SEO but also for understanding how LLMs, generative search systems and AI-driven crawlers use your pages as data sources.

Most importantly, log data provides hard evidence to support your SEO strategy, helping you justify priorities, secure development resources, and validate experiments with real user-bot behavior.

With log insights, you can confidently detect issues early, optimize your site structure, reduce crawl budget waste, and even improve conversion paths by understanding how humans and bots move through your site.

AI Bots: A New Traffic Source in Your Logs

AI crawlers such as ChatGPT-User, GPTBot, OpenAI SearchBot, PerplexityBot and Anthropic's ClaudeBot are now actively visiting websites.

Unlike traditional search engine crawlers, AI bots collect content for:

- LLM training

- AI search engines (RAG systems)

- Answer generation

- Content summarization

- Knowledge base expansion

Why It Matters

AI crawlers affect your site in new ways:

- They consume server resources just like search bots.

- They can discover URLs Google does not crawl.

- They may cause crawl budget waste, especially on large sites.

- Your content may be used by AI systems even if Google doesn’t rank it.

What to Check

- Which AI bots visit your site most often

- Which pages they crawl

- Whether they hit thin, orphan or non-indexable pages

- Load impact on server performance
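The first two checks can be approximated directly from raw access logs. The sketch below is a minimal illustration, not a JetOctopus feature: it assumes your server writes the Combined Log Format and that bots can be recognised by a substring of their user-agent. The token list, the sample lines and the function names are all illustrative assumptions. User-agent strings can be spoofed, so treat the counts as a first approximation.

```python
import re
from collections import Counter

# Assumed format: Combined Log Format. Adjust the pattern to your server's format.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# User-agent substrings to look for (illustrative; extend for other crawlers).
BOT_TOKENS = [
    "ChatGPT-User", "OAI-SearchBot", "GPTBot", "ClaudeBot", "Claude-User",
    "Perplexity-User", "PerplexityBot", "Googlebot", "bingbot",
]


def classify_agent(agent):
    """Return the first bot token found in the user-agent, or None."""
    lowered = agent.lower()
    for token in BOT_TOKENS:
        if token.lower() in lowered:
            return token
    return None


def summarize(lines):
    """Count hits per bot and per (bot, path) pair."""
    per_bot, per_page = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that do not fit the assumed format
        bot = classify_agent(match["agent"])
        if bot:
            per_bot[bot] += 1
            per_page[(bot, match["path"])] += 1
    return per_bot, per_page


# Made-up sample lines: documentation IP ranges and simplified user agents.
SAMPLE = [
    '192.0.2.1 - - [10/Jan/2026:10:00:00 +0000] "GET /products/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '192.0.2.2 - - [10/Jan/2026:10:00:05 +0000] "GET /blog/post HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '192.0.2.2 - - [10/Jan/2026:10:00:09 +0000] "GET /blog/post HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '192.0.2.3 - - [10/Jan/2026:10:01:00 +0000] "GET /missing HTTP/1.1" 404 0 "-" "Mozilla/5.0 (compatible; ChatGPT-User/1.0)"',
    '192.0.2.9 - - [10/Jan/2026:10:02:00 +0000] "GET / HTTP/1.1" 200 900 "-" "Mozilla/5.0 (Windows NT 10.0) Firefox/120.0"',
]

per_bot, per_page = summarize(SAMPLE)
for bot, hits in per_bot.most_common():
    print(bot, hits)
```

Sorting `per_page` by count gives a quick list of the URLs each bot requests most, which you can then compare against your list of thin, orphan or non-indexable pages.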

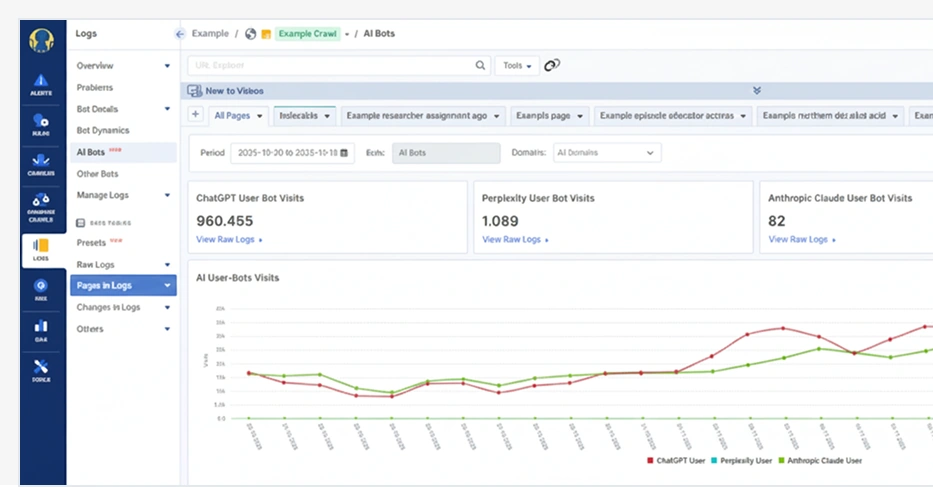

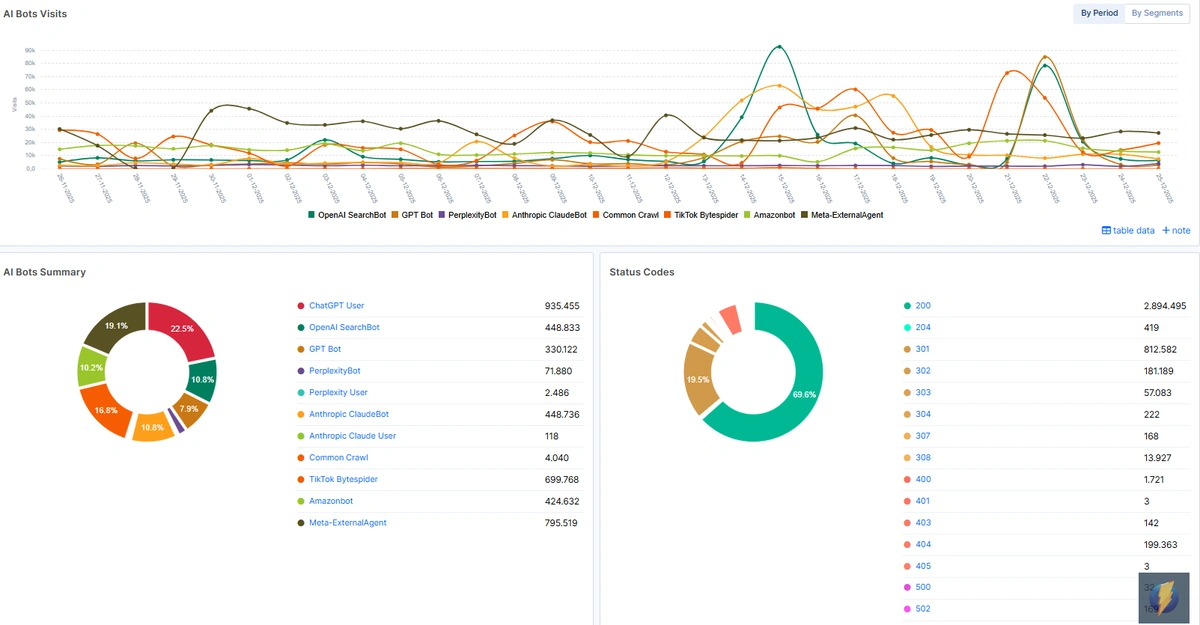

AI Bot Trends in JetOctopus

The AI Bots dashboard displays:

- Daily visits from ChatGPT User bots

- Perplexity User bot behavior

- Anthropic Claude visits

- Combined AI bot traffic dynamics

- Status codes AI bots encounter

This helps identify unusual spikes or potential issues.

Key Insights You Can Gain:

- AI bots often revisit popular pages – this signals which content LLMs find valuable.

- Low-quality pages may still be heavily crawled → fix internal linking & remove junk URLs.

- AI bots frequently hit 404/500 pages → this may degrade LLM understanding of your site.

- High load times for AI crawlers can indicate deeper performance issues.

- Different AI bots crawl different URL groups → useful for prioritizing content optimization.

Why Should SEO Teams Care?

- AI systems increasingly drive referral traffic (Perplexity, Bing Deep Search, ChatGPT Search).

- Improving pages heavily crawled by AI bots may boost AI visibility, not only Google rankings.

Monitoring AI bot patterns helps protect your content and manage server load effectively.

What Is Crawl Budget and Why Does It Matter Even More?

Crawl budget is the number of URLs that Googlebot, and now also AI-driven crawlers, can and want to request on your website within a given period. It’s finite, it’s different for every site and it directly affects how quickly your important pages get discovered, indexed and surfaced in search or AI-generated answers.

When a website produces more URLs than its crawl capacity can handle, both search engines and AI bots may simply ignore the “extra” pages. As a result, key content stays undiscovered longer, limiting your visibility across SERPs and LLM-powered platforms.

Major factors that waste crawl budget include:

- Faceted navigation and session-based URLs

- Duplicate or thin content

- Soft 404s and other pseudo-valid pages

- Security-compromised or hacked URLs

- Infinite crawl spaces (calendars, filters, parameters)

- Low-quality, auto-generated or spam content

These pages drain server resources and distract both Googlebot and AI crawlers from the URLs that actually matter: your high-value content.
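Some of these waste patterns can be spotted from the URL alone. The sketch below is one rough way to tag bot-requested URLs by likely waste type. The parameter names, the threshold and the sample URLs are hypothetical and should be replaced with the ones your site actually generates.

```python
from collections import Counter
from urllib.parse import parse_qs, urlsplit

# Hypothetical parameter names; replace with the ones used on your site.
SESSION_PARAMS = {"sessionid", "sid", "phpsessid"}
FACET_PARAMS = {"color", "size", "sort", "filter"}
MAX_PARAMS = 2  # assumed threshold for "too many parameters"


def waste_reason(url):
    """Return a label for a likely crawl-waste URL, or None if it looks clean."""
    query = urlsplit(url).query
    params = {name.lower() for name in parse_qs(query, keep_blank_values=True)}
    if params & SESSION_PARAMS:
        return "session parameter"
    if params & FACET_PARAMS:
        return "faceted navigation"
    if len(params) > MAX_PARAMS:
        return "many parameters"
    return None


# Made-up URLs as they might appear in bot requests.
REQUESTED = [
    "/shoes",
    "/shoes?color=red&size=9",
    "/shoes?sid=abc123",
    "/shoes?page=2",
    "/events?year=2031&month=4&day=2",
]

reasons = Counter(r for r in map(waste_reason, REQUESTED) if r)
print(reasons)
```

URL patterns only catch the structural kinds of waste. Thin content, soft 404s and hacked pages still need crawl data or manual review.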

The good news: crawl budget can be optimized and expanded, especially when you use log analysis and AI-powered diagnostics to identify and eliminate waste.

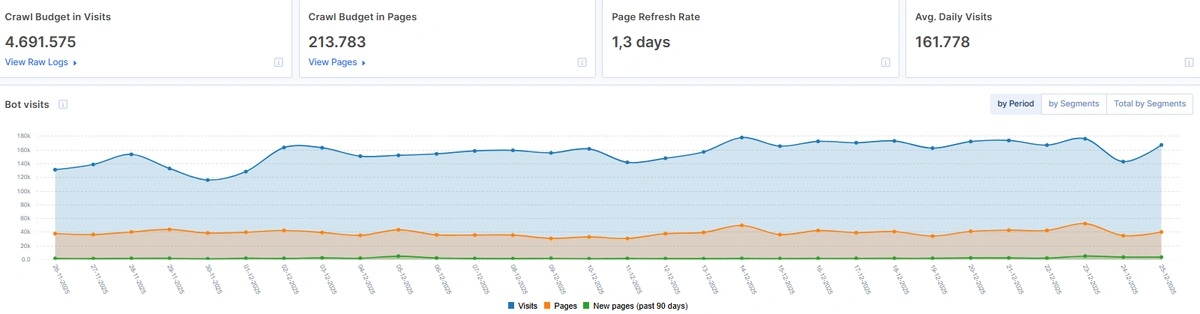

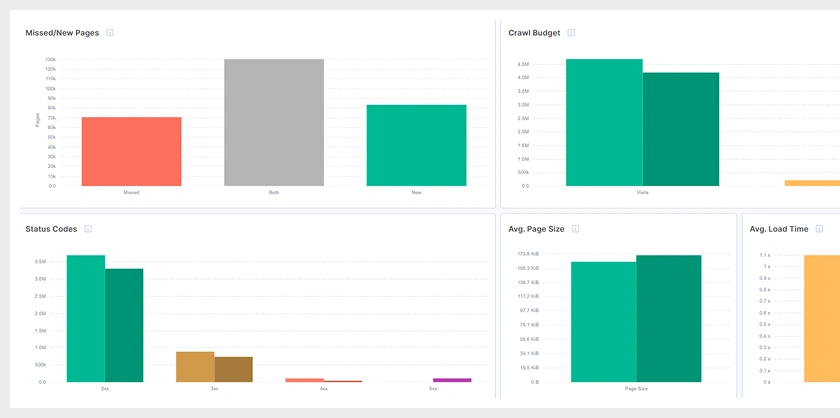

Define Your Crawl Budget

The first step in understanding and optimizing your crawl budget is evaluating how both search engines and AI crawlers interact with your website. The updated Log Overview panel gives you a clear, real-time picture of this activity.

Here’s what you’ll find inside:

Bot Visit Dynamics: an interactive chart showing how frequently Googlebot, Bingbot and AI bots like ChatGPT User, OpenAI SearchBot, ClaudeBot and others request your pages – and how many URLs they crawl each day.

Subdomain Crawl Distribution: a breakdown of how bots allocate their crawl activity across subdomains, helping you detect uneven or wasteful crawling.

Together, these insights reveal your effective crawl budget per bot type. They’re essential for spotting anomalies such as unexpected spikes, crawl drops or sudden changes caused by technical issues, deployments or AI traffic surges.
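If you want to reproduce a simplified version of these numbers from your own logs, the idea is to group bot hits by day and count both requests and distinct URLs. The sketch below assumes hits have already been parsed into `(timestamp, bot, path)` tuples with Combined Log Format timestamps. The function name and the sample data are illustrative, and this is not how the dashboard itself is implemented.

```python
from collections import defaultdict
from datetime import date, datetime

TIME_FORMAT = "%d/%b/%Y:%H:%M:%S %z"  # timestamp layout of the Combined Log Format


def daily_dynamics(hits):
    """hits: iterable of (timestamp, bot, path) tuples.

    Returns {(day, bot): {"visits": n, "unique_urls": m}}.
    """
    visits = defaultdict(int)
    urls = defaultdict(set)
    for timestamp, bot, path in hits:
        day = datetime.strptime(timestamp, TIME_FORMAT).date()
        visits[(day, bot)] += 1
        urls[(day, bot)].add(path)
    return {
        key: {"visits": visits[key], "unique_urls": len(urls[key])}
        for key in visits
    }


# Made-up sample hits.
HITS = [
    ("10/Jan/2026:10:00:00 +0000", "Googlebot", "/"),
    ("10/Jan/2026:10:05:00 +0000", "Googlebot", "/"),
    ("10/Jan/2026:11:00:00 +0000", "Googlebot", "/products/"),
    ("11/Jan/2026:09:00:00 +0000", "GPTBot", "/blog/"),
]

dynamics = daily_dynamics(HITS)
for (day, bot), stats in sorted(dynamics.items()):
    print(day, bot, stats)
```

Comparing each day's figures with the previous week's average is a simple way to notice the spikes and drops mentioned above.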

But this dashboard is only the starting point. To truly understand your crawl efficiency and the impact of AI bots, you need to go deeper into log patterns, crawl waste and page-level behavior.

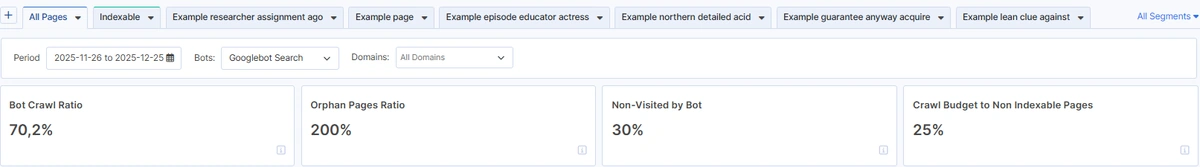

Identify Crawl Budget Waste for Googlebot & AI Crawlers

Crawl budget waste affects both search engines and AI crawlers. When bots spend their time on irrelevant, outdated or low-value pages, your important content becomes harder to discover – whether for Googlebot or for AI systems like ChatGPT, Claude and Perplexity.

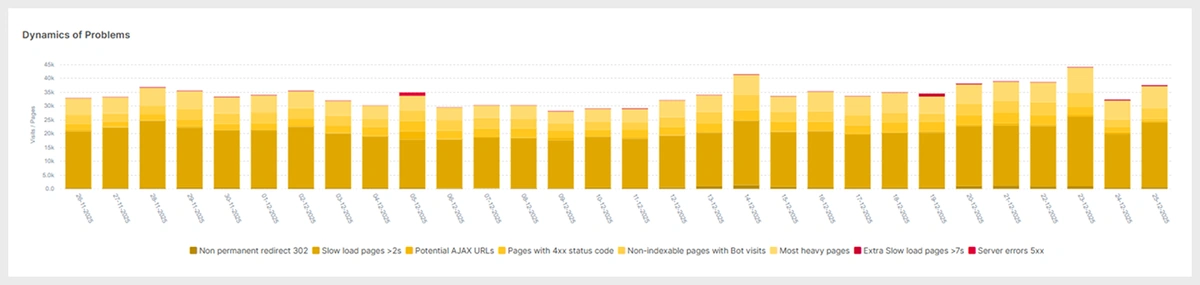

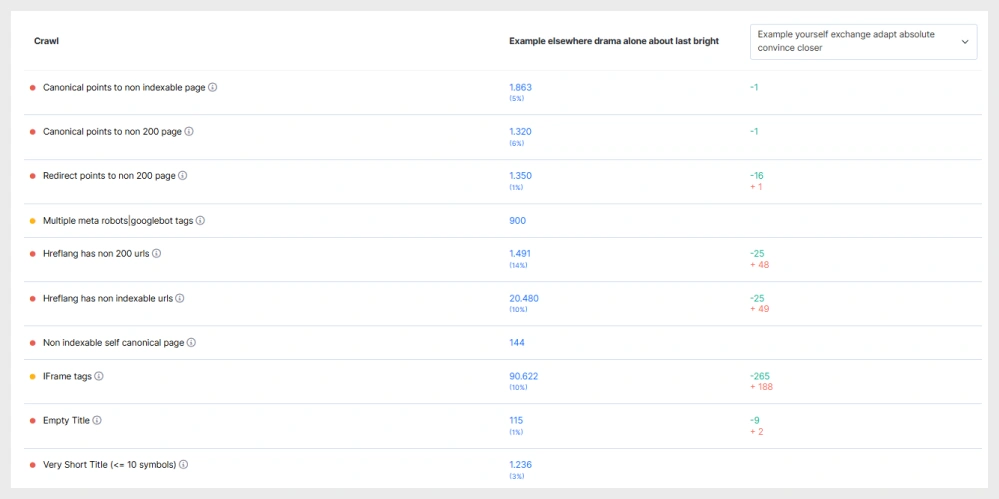

The Impact report helps you quickly identify where your crawl budget is being lost.

Take Action on Crawl Budget Waste

Once you identify crawl budget waste, it’s time to take action!

Each column in this report is fully clickable and opens a detailed list of URLs that drain your crawl resources or remain undiscovered.

JetOctopus analyzes both search engine bots and AI crawlers, helping you understand:

- which pages attract irrelevant or low-value bot activity,

- which important pages are not crawled at all,

- where crawl signals conflict with your site structure.

With these insights, you can fix the issues that prevent Googlebot and AI bots (like ChatGPT, Claude and Perplexity) from reaching your key business pages – improving discoverability and overall visibility.

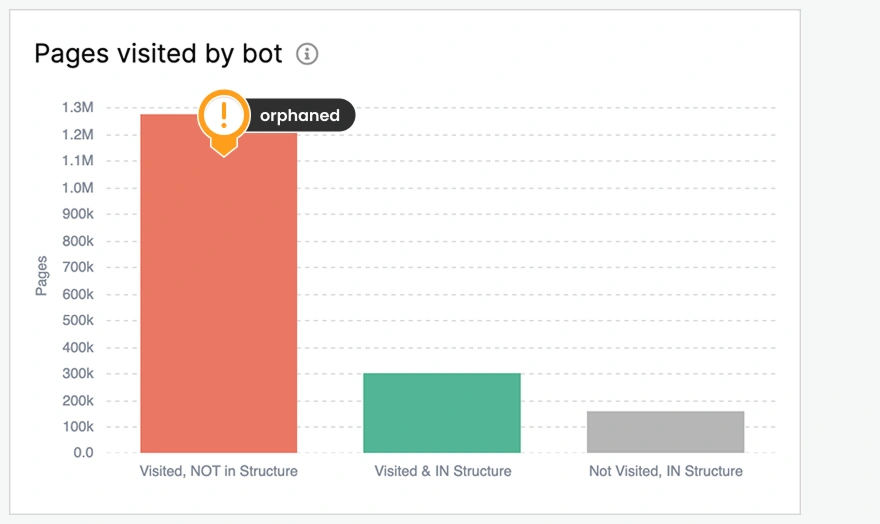

Orphaned Pages

Orphaned pages are URLs that receive no internal links – which means neither Googlebot nor AI crawlers (like ChatGPT, Claude or Perplexity) can easily discover or evaluate them.

Some of these pages are old or irrelevant, but others may contain valuable content that never gets visibility simply because it’s isolated from your site structure.

To fix orphaned pages, follow these steps:

- Review the list of orphaned URLs and group them by type (directory, category, template).

- Identify which pages are valuable and should be reintegrated into the site structure.

- Add internal links from relevant pages to restore discoverability.

- For outdated or low-value content, apply noindex or remove it completely.

- Monitor bot activity – after reintegration, both search bots and AI bots should begin crawling these URLs again.

If a valuable page is orphaned by mistake, reintegrating it into your internal linking graph can dramatically improve its crawlability, visibility and overall performance.

Pages Not Visited by Search & AI Crawlers

When crawl budget is wasted on low-value or irrelevant URLs, important pages may never be visited – not only by Googlebot, but also by AI crawlers like ChatGPT, Claude, Perplexity, OpenAI SearchBot and others.

Unvisited pages stay invisible to both search engines and modern AI systems that rely on live crawling for generating answers.

Pages are often skipped because of:

- High Distance From Index (DFI) – too many clicks from key entry points.

- Weak or missing internal links – bots cannot discover the page.

- Large or complex content – slow rendering, heavy JS, or poor performance.

- Duplicate or near-duplicate content – bots deprioritize low-unique URLs.

- Technical issues – redirects, 4xx/5xx errors, canonical conflicts, blocked resources.

Analyzing crawl logs helps you understand why these pages are ignored and how to make them discoverable again. Fixing these issues improves crawlability, indexability and visibility – both in Google and in AI-generated search experiences.

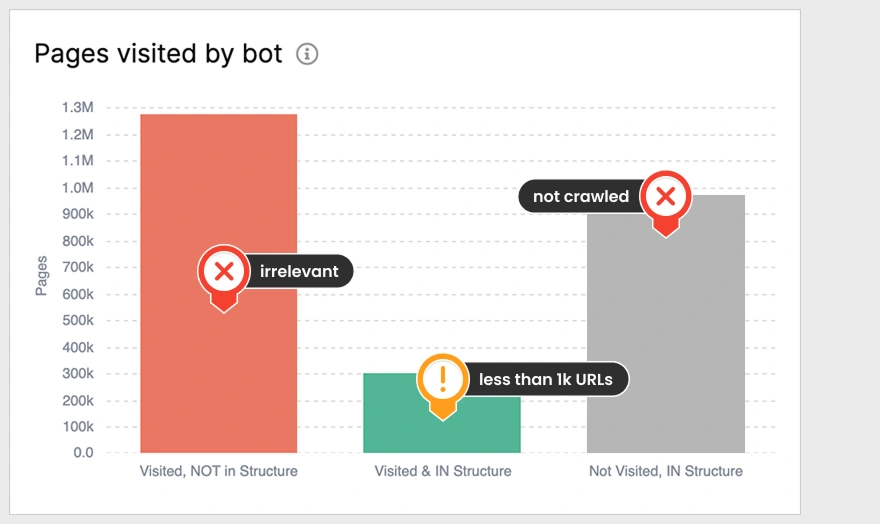

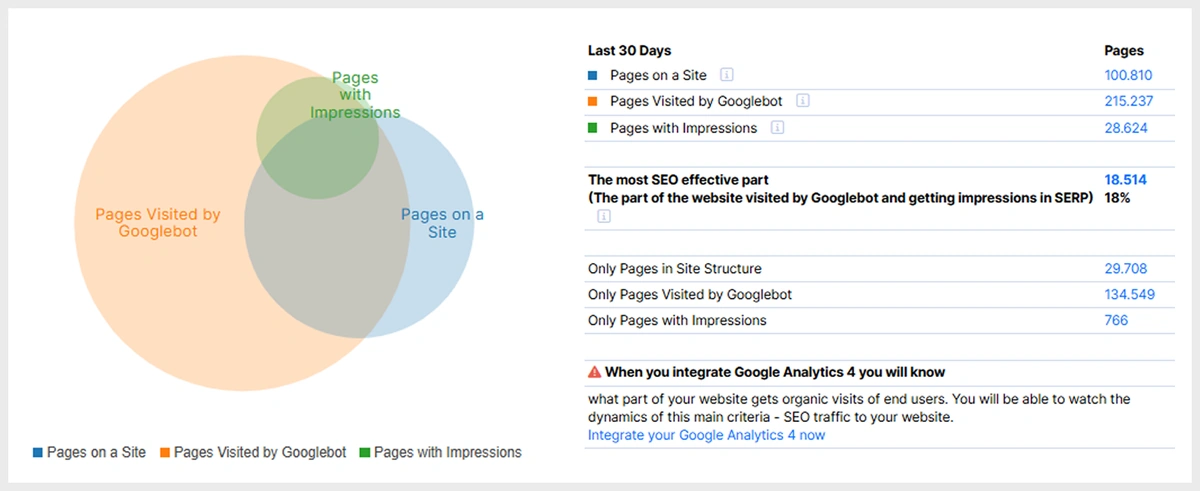

By combining these three datasets – Crawl, Logs, and GSC – you can clearly understand how bots interact with your pages, which URLs are included in your site structure and which pages are receiving impressions.

Here are several SEO insights you can use to improve your website:

1. Crawl Budget Waste

These are pages in the orange area that do not overlap with the site structure (blue). Bots crawl these pages even though they don’t belong to your structural hierarchy, which means your crawl budget is being wasted. Decide whether to remove these URLs or properly integrate them into your site structure.

2. Invisible Pages in Your Site Structure

These are the pages in the blue area that don’t overlap with the orange area. They exist in your site structure but are never visited by bots. Review these pages to understand why Googlebot or AI crawlers ignore them and how to make them more discoverable.

3. Pages Crawled but Not Receiving Impressions

The intersection of the orange and blue circles shows pages that are included in your structure and crawled by bots, but they receive no impressions. These URLs may lack internal links, have weak content, or suffer from technical problems. Improving these pages can enhance visibility and rankings.
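The three segments above are plain set operations once each dataset is reduced to a set of URLs. The sketch below assumes you have exported the URLs found by the crawler, the URLs requested by bots in logs and the URLs with impressions in GSC. The variable names and sample URLs are made up for illustration.

```python
def segment(crawl_urls, log_urls, gsc_urls):
    """Split URLs into the three segments described above."""
    return {
        # Requested by bots but not part of the site structure.
        "crawl_budget_waste": log_urls - crawl_urls,
        # Part of the site structure but never requested by bots.
        "invisible_in_structure": crawl_urls - log_urls,
        # In the structure and crawled, but with no impressions.
        "crawled_no_impressions": (crawl_urls & log_urls) - gsc_urls,
    }


# Made-up sample datasets.
crawl_urls = {"/", "/products/", "/blog/", "/about/"}
log_urls = {"/", "/products/", "/blog/", "/old-promo/"}
gsc_urls = {"/", "/products/"}

segments = segment(crawl_urls, log_urls, gsc_urls)
for name, urls in segments.items():
    print(name, sorted(urls))
```

Normalize URLs before comparing them (protocol, trailing slashes, letter case), otherwise the same page can land in two different segments.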

Bot Behaviour by DFI (Distance From Index)

Distance From Index (DFI) shows how many clicks separate a page from the homepage. It remains one of the strongest signals influencing how both search crawlers and AI bots prioritize your content.

Pages that sit too deep in the structure receive fewer visits from Googlebot and may be ignored entirely by AI crawlers that rely on fast, high-confidence data sources.

Ideally, your most important pages should be 2–3 clicks from the homepage.

Pages located 4+ clicks deep are typically treated as lower-value and are crawled less frequently or skipped.

WHAT TO DO

- Identify whether the unvisited or rarely visited pages are valuable for users or SEO.

- If they are important, reduce their DFI by adding internal links from higher-authority, shallow-depth pages.

- Strengthen their internal linking to improve discoverability for both search engines and AI crawlers.
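Click depth can be computed from an internal link graph with a breadth-first search starting at the homepage. The sketch below is a minimal illustration that assumes you have a mapping of each page to the pages it links to. The graph, the function name and the cut-off constant are illustrative, with the cut-off taken from the 4+ clicks guideline above.

```python
from collections import deque

DEEP_THRESHOLD = 4  # pages this many clicks deep (or more) are treated as deep


def click_depth(links, home="/"):
    """Breadth-first search over internal links.

    links: {source_url: [target_urls]}.
    Returns {url: clicks from home} for every reachable page.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depth:  # first visit is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Made-up link graph.
LINKS = {
    "/": ["/cat/"],
    "/cat/": ["/cat/sub/", "/"],
    "/cat/sub/": ["/cat/sub/list/"],
    "/cat/sub/list/": ["/cat/sub/list/item"],
}

depths = click_depth(LINKS)
deep_pages = sorted(url for url, d in depths.items() if d >= DEEP_THRESHOLD)
print(deep_pages)
```

Pages that exist in your crawl data but are missing from `depths` are not reachable from the homepage at all, which makes them candidates for the orphaned pages review.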

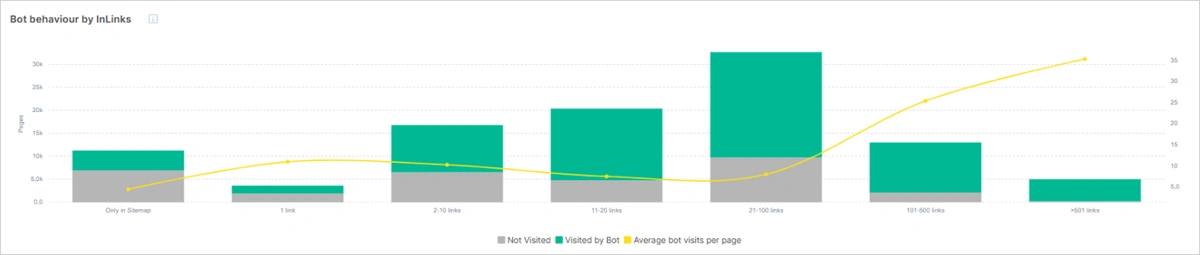

Bot Behavior by Inlinks

Both Googlebot and modern AI crawlers prioritize pages with strong internal and external linking. Pages with more inlinks typically receive:

- higher crawl frequency,

- deeper analysis from AI bots,

- better visibility and ranking potential.

Inlinks serve as a signal of importance – for search engines and AI models alike. Pages with poor internal linking often remain under-crawled, misunderstood or ignored entirely.

JetOctopus helps you reveal how linking depth affects crawl behavior so you can strengthen your internal architecture and ensure your key pages are fully discoverable.

WHAT TO DO

- Review pages with low inlink counts and determine if they provide value.

- If they are important, increase internal links, especially from pages with strong crawl activity.

- Ensure linking is logical and helpful for both users and bots – AI crawlers reward well-structured hierarchies.

- Avoid adding random links; focus on relevance and contextual signals.
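To start the review in the first step, you can count internal inlinks per page and put the result next to bot visit counts from logs. The sketch below assumes a link graph as a mapping of source page to target pages and a dictionary of bot visits per URL. Both the data and the `max_inlinks` threshold are hypothetical.

```python
from collections import Counter


def inlink_counts(links):
    """links: {source_url: [target_urls]}.

    Returns a Counter of distinct linking pages per target (self-links ignored).
    """
    counts = Counter({page: 0 for page in links})
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


def weakly_linked(links, bot_visits, max_inlinks=1):
    """Return (url, inlinks, bot_visits) for pages with few internal inlinks."""
    counts = inlink_counts(links)
    return sorted(
        (page, n, bot_visits.get(page, 0))
        for page, n in counts.items()
        if n <= max_inlinks
    )


# Made-up link graph and visit counts.
LINKS = {"/": ["/a", "/b"], "/a": ["/b"], "/b": ["/"], "/c": ["/a"]}
BOT_VISITS = {"/": 50, "/a": 10, "/b": 7}

weak = weakly_linked(LINKS, BOT_VISITS)
print(weak)
```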

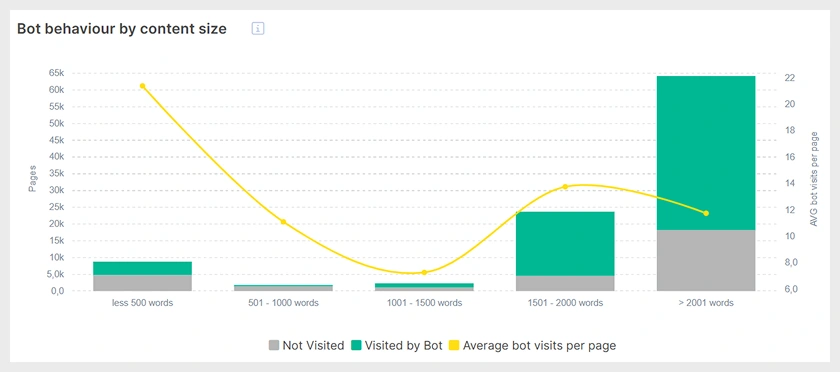

Content Size

Content remains one of the strongest signals for both search engines and modern AI crawlers. High-quality, well-structured content helps bots understand your pages faster, improves their crawlability and increases the chances your content will be used in AI-generated answers.

Pages with very little meaningful content – usually under about 500 words or lacking real value – tend to be crawled less frequently and are viewed as low-quality by both Googlebot and AI-driven systems.

AI models prioritize pages that are:

– comprehensive,

– helpful and trustworthy,

– structured in a way that is easy to parse and reuse.

WHAT TO DO

- First, determine whether these low-content or unvisited pages are still needed.

- If they are important, expand the content using Google’s and AI content-quality guidelines (E-E-A-T, helpful content standards).

- Strengthen internal linking from pages with high crawl frequency to improve discoverability.

- Ensure the content provides real value, not just extra words – AI bots increasingly filter out fluff.

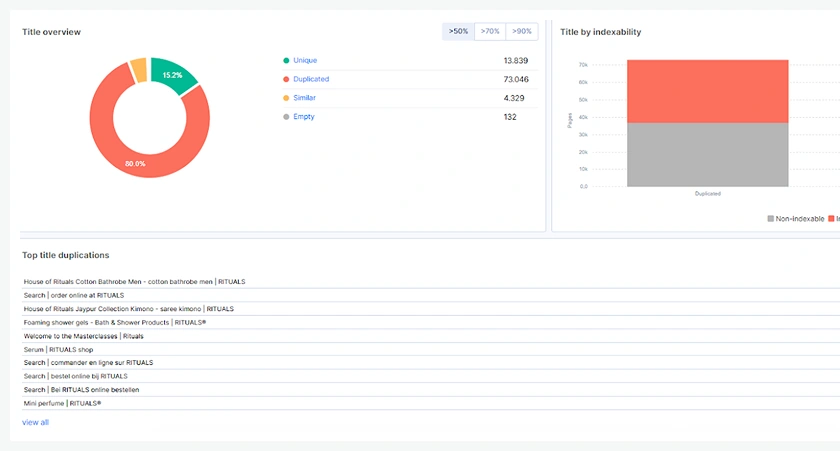

Title Tags & Bot Visibility

Title tags play a crucial role in helping both users and crawlers – including Googlebot and AI bots such as ChatGPT, Claude and Perplexity – understand what your page is about. Clear, unique and keyword-relevant titles improve crawl prioritization, indexation and overall visibility in both search engines and AI-driven answer systems.

Two major issues reduce visibility and waste crawl resources:

Duplicate titles – make it harder for bots to understand which page is the primary one.

Empty titles – leave bots with no signal about relevance or topic.

WHAT TO DO

- Review pages with empty or duplicated title tags and decide whether they should remain in your site structure.

- For pages you want indexed, create unique and descriptive titles aligned with Google’s Guidelines and AI-friendly content principles.

- Ensure titles clearly communicate relevance – this helps both search engines and AI crawlers surface your content more often.
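Both title issues are easy to detect once you have a URL-to-title export from a crawl. The sketch below assumes such a mapping. The sample data and the normalization rule (collapse whitespace, ignore case) are illustrative choices, not a fixed standard.

```python
from collections import defaultdict


def title_issues(titles):
    """titles: {url: title or None}.

    Returns (urls with empty titles, {normalized title: [urls]} for duplicates).
    """
    empty = []
    groups = defaultdict(list)
    for url, title in titles.items():
        normalized = " ".join((title or "").split()).lower()
        if normalized:
            groups[normalized].append(url)
        else:
            empty.append(url)
    duplicates = {t: sorted(urls) for t, urls in groups.items() if len(urls) > 1}
    return sorted(empty), duplicates


# Made-up crawl export.
TITLES = {
    "/a": "Red Shoes",
    "/b": "red  shoes ",
    "/c": "",
    "/d": None,
    "/e": "Blue Shoes",
}

empty_titles, duplicate_titles = title_issues(TITLES)
print(empty_titles)
print(duplicate_titles)
```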

Your Most Valuable Pages for Google & AI Crawlers

“URLs that are more popular on the Internet tend to be crawled more often to keep them fresher in our index.” – Google.

Pages that receive the most visits from Googlebot and AI crawlers (such as ChatGPT-User, Claude-User, PerplexityBot) are treated as your highest-value pages. These URLs are seen as the most authoritative, most relevant and most useful – which is why bots revisit them frequently.

These pages should stay:

- evergreen and frequently updated,

- easily accessible,

- technically clean,

- free of crawling barriers.

You can find these top-priority URLs in the ‘Pages by Bot Visits’ report.

Add internal links from your most-visited pages to relevant but weaker pages.

This significantly increases:

– crawl frequency for underperforming URLs,

– their visibility in Google,

– and their likelihood of being surfaced in AI-generated answers.

Identify Low-Visited Pages

Using the ‘Pages by Bot Visits’ report, you can quickly identify the URLs that receive the fewest visits from both Googlebot and AI crawlers (such as ChatGPT-User, Claude-User, PerplexityBot).

Although low visit frequency doesn’t always indicate poor rankings, it often highlights pages that bots struggle to reach or consider low-value. Among these low-visited URLs, you may still find important or profitable pages that deserve more visibility.

Analyzing these pages helps you uncover why bots ignore them and build a clear, data-driven plan for improvement.

To improve low-visited pages:

- Reduce DFI (Distance From Index) by moving pages closer to your homepage or strong hubs.

- Strengthen internal linking, especially from high-authority or frequently crawled pages.

- Improve content quality to increase relevance and bot engagement.

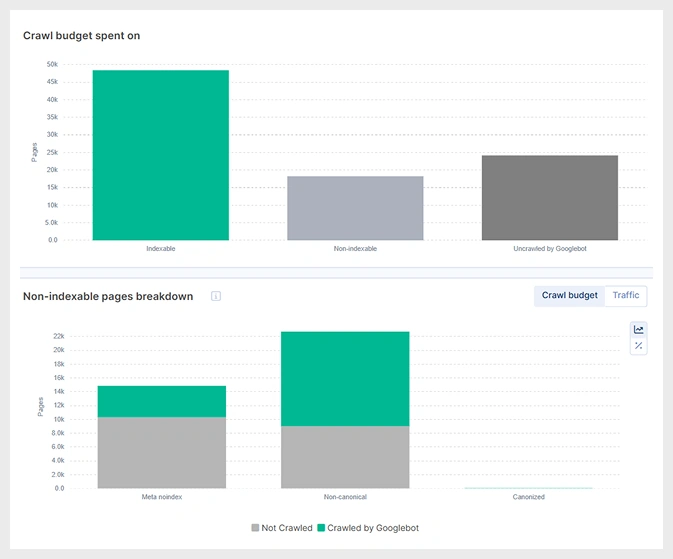

Non-Indexable Pages Visited by Googlebot & AI Crawlers

Both Googlebot and AI crawlers (like ChatGPT-User, Claude-User, PerplexityBot) often crawl non-indexable pages, including:

- Pages returning non-200 status codes

- Non-canonical URLs

- Pages blocked via meta robots or X-Robots-Tag

These visits usually happen because internal links point to these pages or because other URLs mistakenly reference them as canonical or hreflang versions.

As a result, bots waste crawl resources on pages that shouldn’t be crawled – creating unnecessary crawl-budget waste and reducing visibility of important content.

What to fix

To prevent search and AI bots from crawling non-indexable pages:

- Remove all internal links pointing to these pages

- Ensure they are not set as canonical targets

- Make sure they are not included in hreflang clusters

- Review templates and navigation to avoid accidental references

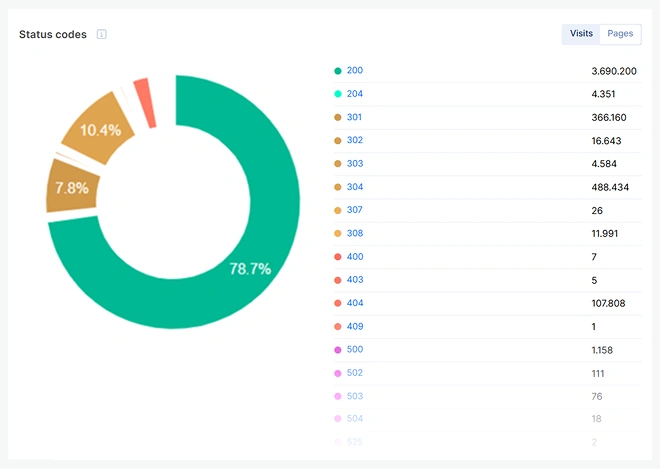

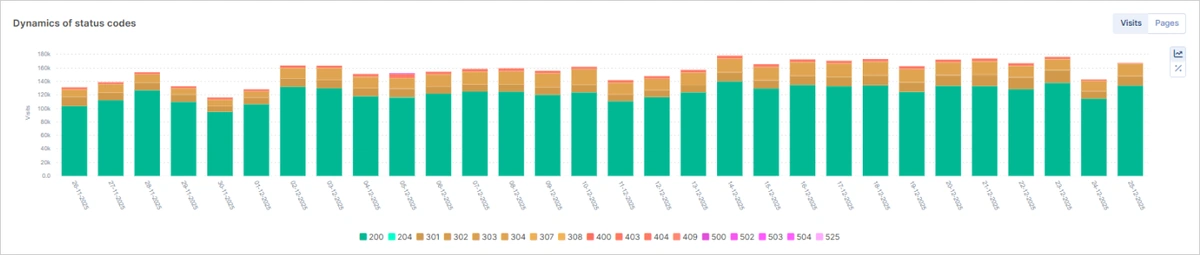

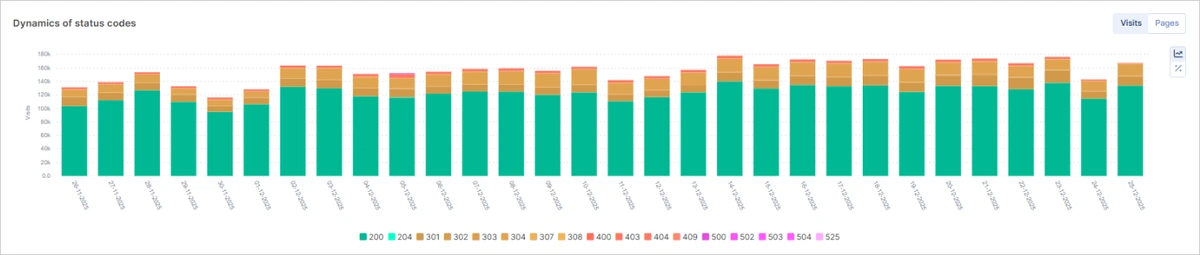

HTTP Status Codes: How They Impact SEO & AI Crawling

HTTP status codes directly affect how Googlebot and modern AI crawlers (such as ChatGPT-User, Claude-User, PerplexityBot) interact with your website.

When status code issues accumulate, bots waste their crawl resources on pages that:

- return errors (5xx),

- redirect excessively (3xx),

- or cannot be accessed (4xx).

This leads to crawl-budget waste, slower discovery of important pages and reduced visibility in both search and AI-generated answers.
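A quick way to see how serious the problem is for each crawler is to compute the share of each status class per bot. The sketch below assumes hits have already been parsed into `(bot, status)` pairs. The function name and sample data are illustrative.

```python
from collections import Counter, defaultdict


def status_breakdown(hits):
    """hits: iterable of (bot, status_code).

    Returns {bot: {"2xx": share, "3xx": share, ...}} with shares between 0 and 1.
    """
    counts = defaultdict(Counter)
    for bot, status in hits:
        counts[bot][f"{status // 100}xx"] += 1
    return {
        bot: {cls: n / sum(per_class.values()) for cls, n in per_class.items()}
        for bot, per_class in counts.items()
    }


# Made-up sample hits.
HITS = [
    ("Googlebot", 200), ("Googlebot", 200), ("Googlebot", 301), ("Googlebot", 503),
    ("GPTBot", 404), ("GPTBot", 429),
]

breakdown = status_breakdown(HITS)
for bot, shares in breakdown.items():
    print(bot, shares)
```

Tracking these shares per day rather than in total makes intermittent problems, such as short bursts of 5xx responses, much easier to spot.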

5xx Errors

5xx errors are some of the most damaging issues for your website’s crawlability. When your server returns 5xx responses or times out, both search engine bots and AI crawlers (ChatGPT-User, Claude-User, PerplexityBot, etc.) lose access to your content entirely.

A spike in 5xx errors means:

- Googlebot reduces crawl frequency to protect its resources

- AI systems can’t retrieve your information for training or response generation

- your content becomes undiscoverable

Because 5xx errors are often intermittent, you may not notice them immediately – but bots do. Consistent monitoring is critical.

Using the Health and Bot Dynamics dashboards, JetOctopus detects server-side failures in real time so you can react before they cause ranking loss or visibility drops.

WHAT TO DO

- Investigate and fix every 5xx error as soon as it appears – no exceptions.

- Review server logs, CDN performance, hosting limits and firewall rules.

- If 5xx errors happen repeatedly, increase server capacity, optimize infrastructure or apply caching strategies.

- Ensure API endpoints and dynamic pages are stable under load, especially during traffic or bot-crawl peaks.

3xx Status Codes & AI Crawlers

3xx status codes indicate redirections – permanent (301, 308) and temporary (302, 307). While redirects are a normal part of any website, excessive or misconfigured 3xx pages can waste crawl budget for both search bots and AI crawlers (ChatGPT, Claude, Perplexity, etc.).

When Googlebot or AI systems spend time crawling redirect chains or outdated redirects, your high-value pages may receive fewer visits and reduced visibility in both search results and AI-generated answers.

WHAT TO DO

Investigate all 3xx pages to understand why they are being crawled:

- Verify internal links: ensure important pages aren’t pointing to long redirect chains.

- Check canonical tags: make sure they don’t reference redirected URLs.

- Review hreflang tags: redirected URLs should not be used as language alternates.

- Audit XML Sitemaps: remove any 3xx pages still included.

- Identify redirect chains & loops: simplify them to reduce wasted crawling.

By fixing redirect issues, you protect your crawl budget and improve how both Googlebot and AI crawlers discover your key content.
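Redirect chains and loops can be found by following a source-to-target redirect map until it ends or repeats. The sketch below assumes you have such a map from a crawl or from 3xx responses with their `Location` targets. The `max_hops` limit, the labels and the sample data are illustrative.

```python
def trace_redirect(start, redirects, max_hops=10):
    """Follow redirects from `start`.

    redirects: {source_url: target_url}.
    Returns (chain, label), where label is one of
    "no redirect", "single redirect", "chain" or "loop".
    Chains longer than max_hops are cut off and reported as "chain".
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects and len(chain) <= max_hops:
        current = redirects[current]
        chain.append(current)
        if current in seen:  # we came back to a URL already visited
            return chain, "loop"
        seen.add(current)
    hops = len(chain) - 1
    if hops == 0:
        return chain, "no redirect"
    if hops == 1:
        return chain, "single redirect"
    return chain, "chain"


# Made-up redirect map.
REDIRECTS = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x", "/old": "/new"}

for url in ["/a", "/x", "/old", "/c"]:
    print(url, trace_redirect(url, REDIRECTS))
```

For every URL labelled "chain", internal links, canonicals, hreflang tags and sitemaps should point at the last URL in the chain rather than the first.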

4xx Status Codes

The most common 4xx errors include:

- 404 Not Found – the page or file wasn’t found by the server. This doesn’t indicate whether it’s missing temporarily or permanently.

- 410 Gone – the page is permanently removed and will not return.

- 429 Too Many Requests – this is the most harmful of all 4xx status codes.

It signals to both AI crawlers and search engine bots that your server is overloaded or limiting requests. As a result, bots slow down or temporarily stop crawling your site. This can cause important pages to be discovered later, crawled less frequently, or missed entirely – which directly impacts indexation and visibility.

Both Googlebot and modern AI crawlers still spend crawl budget on 4xx URLs, even though they provide no value and slow down the discovery of important content.

WHAT TO DO

Investigate 4xx pages to understand why they are crawled:

- Check internal links pointing to these URLs

- Ensure they are not included in hreflang and canonical tags

- Make sure they are not listed in XML sitemaps

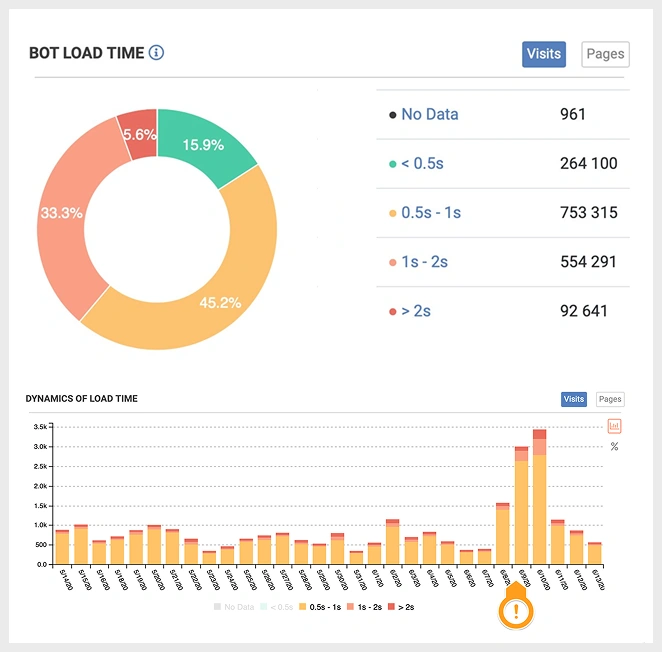

Load Time

Site speed plays a crucial role not only for Googlebot but also for AI crawlers. Fast-responding pages enable bots to fetch more content per crawl session and reduce the likelihood of timeouts or skipped URLs.

A slow server or unstable response times can cause:

- reduced crawl frequency

- incomplete crawls

- delayed indexation

- poorer understanding of your site by both search engines and AI systems

Monitor HTML load time for all bots using the Bots Dynamics dashboard to identify spikes, slowdowns or sudden performance drops.
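If your access logs record response time, you can compute a comparable per-bot summary yourself. The sketch below assumes hits have been parsed into `(bot, response_time_ms)` pairs. Response time is not part of the default Combined Log Format, so this field must be enabled in your server's log configuration. The 1000 ms threshold and the sample values are hypothetical.

```python
from collections import defaultdict
from statistics import median

SLOW_MS = 1000  # hypothetical threshold for a "slow" response


def load_time_summary(hits):
    """hits: iterable of (bot, response_time_ms).

    Returns {bot: {"median_ms": value, "slow_share": share of hits above SLOW_MS}}.
    """
    times = defaultdict(list)
    for bot, ms in hits:
        times[bot].append(ms)
    return {
        bot: {
            "median_ms": median(values),
            "slow_share": sum(ms > SLOW_MS for ms in values) / len(values),
        }
        for bot, values in times.items()
    }


# Made-up sample hits.
HITS = [
    ("Googlebot", 200), ("Googlebot", 300), ("Googlebot", 1500),
    ("GPTBot", 800), ("GPTBot", 1200),
]

summary = load_time_summary(HITS)
for bot, stats in summary.items():
    print(bot, stats)
```

The median is used instead of the mean so that a few very slow responses do not hide the typical experience, while `slow_share` keeps those outliers visible.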

WHAT TO DO

Improving site speed often requires deep technical changes. Work closely with your development team to:

- optimize server performance

- reduce response time

- eliminate blockages and heavy scripts

- ensure the site remains fast under load

Even small improvements in page speed can significantly increase how much content bots (both search engines and AI systems) can successfully crawl.

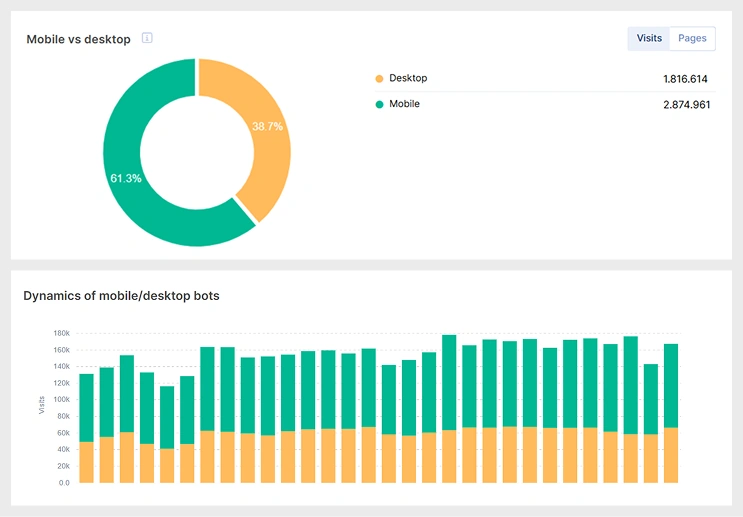

Mobile-First Indexing

Google began rolling out mobile-first indexing in 2018 and has since completed the move, meaning the mobile version of your site is now the primary source for crawling, indexing and ranking. Today, both Googlebot Smartphone and AI-powered crawlers (such as ChatGPT, Claude and Perplexity bots) rely heavily on mobile-optimized content to understand, evaluate, and surface your pages.

If your site still performs poorly on mobile, both search engines and AI systems may struggle to interpret your structure, rendering or content.

WHAT TO DO

- Ensure your website is fully mobile-friendly and follows Google’s mobile-first recommendations.

- Validate rendering, content parity, lazy loading and mobile UX issues.

- Monitor Googlebot Smartphone and AI crawler behavior in JetOctopus to identify pages that fail mobile crawling or rendering.

- Use Google’s Guidelines and mobile testing tools to fix discovered issues.

User Experience

A detailed log file analysis reveals not only how search engines crawl your site but also how real organic users interact with it. Understanding these patterns is crucial for improving conversions, prioritizing content updates, and aligning your site structure with actual user behavior.

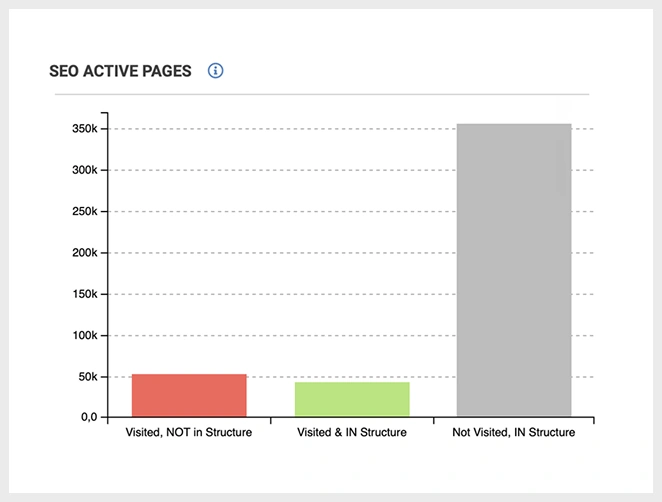

1. Analyze how organic visitors navigate your website using the SEO Active Pages report in JetOctopus.

– The red bar highlights orphaned pages that still receive organic visits. These pages often contain valuable content but lack internal links. Integrating them into your site structure can boost visibility, rankings, and user engagement.

– The grey bar shows pages that are fully part of your site structure but receive no organic traffic. These pages should be reviewed for content quality, intent match, UX issues, and technical relevance. Based on data, decide whether to optimize, merge or remove them.

Modern AI crawlers and ranking systems (like Google’s AI models) increasingly rely on behavioral patterns. Ensuring your site architecture reflects how users actually browse improves overall discoverability and performance.

2. Identify technical issues that your visitors encounter – and resolve them quickly.

Fixing these issues not only improves user experience but also ensures that search engines and AI systems (such as ChatGPT, Perplexity and Claude) can properly access and evaluate your pages. Faster, more stable pages lead to higher engagement and improved conversion rates.

SEO Efficiency in the Age of AI Crawlers

Conclusion

A log file analyzer is an essential tool for improving your website’s crawlability, indexability and overall search performance. When combined with crawl data and Google Search Console insights, log file analysis gives you a full, data-backed view of how search bots actually interact with your site – so you can turn technical insights into measurable organic growth.

With in-depth log analysis in JetOctopus, you can:

- Monitor server health and detect critical errors in real time

- Optimize and redistribute your crawl budget

- Identify technical issues affecting crawling and indexing

- Detect and clean up orphaned or low-value pages

- Improve site architecture and internal linking

- Find thin, outdated, or duplicated content

The fastest way to see these insights in action is to experience JetOctopus on real data.

👉 Request a demo to see how JetOctopus log file analysis works for your website, explore key reports and learn how to turn raw logs into clear SEO decisions.