Why are there missing pages in the crawl results?

While checking the crawl results, you may notice that some pages are missing. It is important to understand why JetOctopus did not find them, because search engines are likely to have the same difficulty finding these pages when crawling your site.

Why JetOctopus didn’t find all the pages

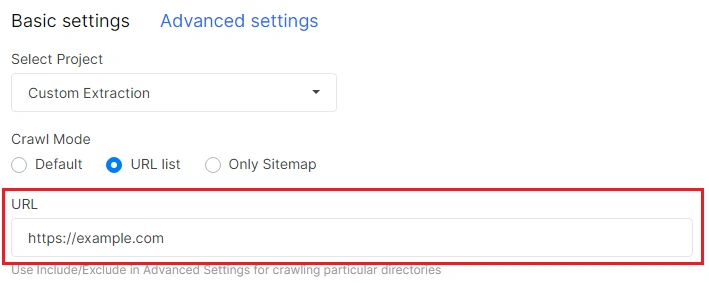

To understand why JetOctopus did not find all the pages, you need to understand how our crawler works. A crawl of your website starts from the home page (or from the URL you entered in the URL field when setting up the crawl).

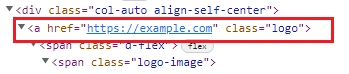

Our crawler looks for URLs in <a href> tags in the code of the start page. Googlebot scans your website in a similar way.

Then the crawler follows the links it found on the first page and looks for <a href> links in the code of those pages, and so on, until it either reaches the limit set in the “Page Limit” field or finds no new <a href> links on the website.
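The discovery process described above behaves like a breadth-first traversal of the site's link graph. The sketch below is a simplified illustration, not JetOctopus's actual implementation: it assumes the site is available as a hypothetical in-memory dictionary (`SITE`) mapping each URL to the <a href> targets found on it, and the `crawl` function is likewise hypothetical. It stops when the page limit is reached or no new links remain.

```python
from collections import deque

# Hypothetical link graph: each URL maps to the <a href> targets in its HTML.
SITE = {
    "/": ["/catalog", "/blog"],
    "/catalog": ["/catalog/shoes", "/"],
    "/blog": ["/blog/post-1"],
    "/catalog/shoes": [],
    "/blog/post-1": ["/blog"],
    "/landing-promo": ["/catalog"],  # nothing links here, so it is never found
}


def crawl(start, page_limit):
    """Breadth-first crawl from `start`.

    Returns (crawled, known): the pages actually processed, and every URL
    discovered in links, whether or not it was processed before the limit.
    """
    crawled = []
    known = {start}
    queue = deque([start])
    while queue and len(crawled) < page_limit:
        url = queue.popleft()
        crawled.append(url)
        for link in SITE.get(url, []):
            if link not in known:  # queue each URL only once
                known.add(link)
                queue.append(link)
    return crawled, known


if __name__ == "__main__":
    crawled, known = crawl("/", page_limit=100)
    print("crawled:", crawled)
    crawled, known = crawl("/", page_limit=3)
    print("known but not crawled:", sorted(known - set(crawled)))
```

With a generous limit, every linked page is crawled, but `/landing-promo` never appears because no page links to it. With `page_limit=3`, `/catalog/shoes` and `/blog/post-1` are discovered in links but not processed, which mirrors the gap between “Known pages” and “Crawled pages”.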

Why are there missing URLs in crawl results?

Now that you know how JetOctopus scans your website, you can see why some pages may be missing from the crawl results:

- the missing URLs are not linked from other pages on your website, so they are not part of the website structure;

- the crawl configuration does not allow these pages to be scanned.

The cause can be either the page limit being reached or the crawl settings. Check whether you have activated the “Respect robots rules” checkbox and whether you have disallowed crawling of pages that are closed to indexing. If so, our crawler follows all the crawling directives intended for search engines, including “nofollow” and the robots.txt file.
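To see how robots.txt rules keep pages out of a crawl that respects them, here is a small sketch using Python's standard `urllib.robotparser` module. The robots.txt content and URLs are hypothetical examples, and JetOctopus's own rule matching may differ in details such as wildcard handling.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /search
"""


def allowed(url, user_agent="*"):
    """Return True if the robots.txt rules above let `user_agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in [
        "https://example.com/catalog/shoes",
        "https://example.com/private/report",
        "https://example.com/search?q=boots",
    ]:
        print(url, "->", "crawl" if allowed(url) else "skip")
```

Only the catalog URL is crawlable here; the other two match `Disallow` prefixes, so a crawler that respects robots rules skips them, along with any pages that are reachable only through them.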

If you have a JavaScript website, make sure that links with <a href> are present in the code when JavaScript is disabled. To check this, open Chrome DevTools, open the Command Menu (Ctrl + Shift + P) and select “Disable JavaScript”. Then reload the page.

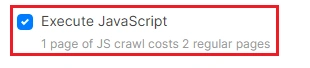
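A similar check can be approximated outside the browser by parsing the raw HTML, which is what a crawler sees before any JavaScript runs. This sketch uses Python's built-in `html.parser`; the sample HTML and the `LinkExtractor`/`extract_links` names are hypothetical. Only real <a href> elements are collected, so a link that exists only as a JavaScript click handler is not found. Links marked `rel="nofollow"` are kept in a separate list, on the assumption that a crawler respecting robots rules does not follow them.

```python
from html.parser import HTMLParser

# Hypothetical raw HTML as served before JavaScript runs.
RAW_HTML = """
<nav>
  <a href="/catalog">Catalog</a>
  <a href="/login" rel="nofollow">Log in</a>
  <span onclick="location.href='/sale'">Sale</span>
</nav>
<div id="app"></div>
"""


class LinkExtractor(HTMLParser):
    """Collect href values of <a> tags, split into followable and nofollow."""

    def __init__(self):
        super().__init__()
        self.follow = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return  # an <a> without href is not a crawlable link
        rel = (attributes.get("rel") or "").lower().split()
        (self.nofollow if "nofollow" in rel else self.follow).append(href)


def extract_links(html):
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.follow, parser.nofollow


if __name__ == "__main__":
    follow, nofollow = extract_links(RAW_HTML)
    print("followable:", follow)
    print("nofollow:", nofollow)
```

The `/sale` link never shows up, because in the raw HTML it is only a JavaScript `onclick` handler, not an <a href> element.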

If internal links are generated only by JavaScript, you need to crawl the site with the “Execute JavaScript” checkbox activated. Note that 1 page crawled with JavaScript costs as much as 2 regular pages.
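Because a JavaScript-rendered page costs 2 regular pages, the same page budget covers roughly half as many pages when “Execute JavaScript” is on. A rough estimate, assuming the 2:1 ratio applies uniformly to every crawled page (`pages_covered` is a hypothetical helper):

```python
JS_PAGE_COST = 2  # 1 page crawled with JavaScript costs 2 regular pages


def pages_covered(budget_in_regular_pages, execute_javascript):
    """Estimate how many pages a budget, expressed in regular pages, can crawl."""
    cost = JS_PAGE_COST if execute_javascript else 1
    return budget_in_regular_pages // cost


if __name__ == "__main__":
    print(pages_covered(10_000, execute_javascript=False))  # 10000
    print(pages_covered(10_000, execute_javascript=True))   # 5000
```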

Note that JetOctopus scans a JavaScript website the same way search engines do: first the regular HTML code is processed, and then the crawler renders the JavaScript. If the regular HTML does not contain <a href> links to other pages of your website, search engines need additional resources and time to crawl your website and find all the pages, so your pages take longer to get indexed.

Why should you pay attention to missing pages?

Analyzing missing pages is a good way to improve internal linking and identify weaknesses in the website structure. If pages are not included in the website structure, users cannot navigate to them, useful content is effectively lost, and as a result you may lose traffic.

Internal linking also matters for search engines, because following links is how they find and index pages. Search engines also use the number of internal links pointing to a page as a ranking factor.
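To spot weakly linked pages, you can count inbound internal links per URL over a link graph. A minimal sketch, assuming a hypothetical in-memory graph (`LINKS`) that maps each page to the internal URLs it links to; repeated links from the same page and self-links are counted once and zero times, respectively:

```python
from collections import Counter

# Hypothetical internal link graph: page -> internal URLs it links to.
LINKS = {
    "/": ["/catalog", "/blog", "/about"],
    "/catalog": ["/catalog/shoes", "/", "/catalog/shoes"],
    "/blog": ["/blog/post-1", "/catalog"],
    "/catalog/shoes": ["/catalog"],
    "/blog/post-1": [],
    "/about": [],
}


def inlink_counts(links):
    """Count how many distinct pages link to each URL, ignoring self-links."""
    counts = Counter({url: 0 for url in links})
    for source, targets in links.items():
        for target in set(targets):  # one link per source page is enough
            if target != source:
                counts[target] += 1
    return counts


if __name__ == "__main__":
    # Print pages from least to most linked.
    for url, n in sorted(inlink_counts(LINKS).items(), key=lambda item: item[1]):
        print(n, url)
```

Pages at the top of the output receive the fewest internal links and are the first candidates for better linking.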

Where can you find missing URLs in the crawl?

You can analyze the results of the crawl to see which pages are missing. There are several ways to find them.

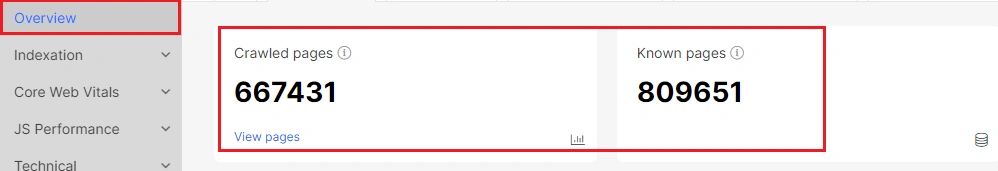

- Go to the crawl results and open the “Overview” report. Compare the numbers of “Crawled pages” and “Known pages”. If “Known pages” is larger, JetOctopus found all these URLs in the code but did not crawl all of them, most likely because of the limits or settings of your crawl. Don't worry: these pages can be scanned during the next crawl, since they are available to crawlers and search robots.

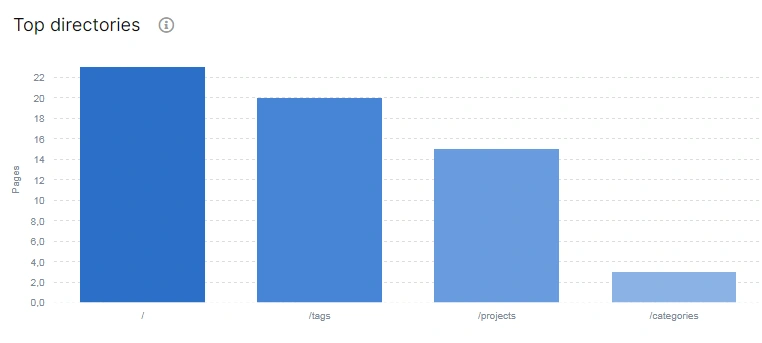

- The “Overview” report also has a “Top directories” chart that shows how URLs are distributed across directories. For example, if your website has two language versions in different directories and every page should exist in both languages, check that the numbers of URLs in the two language directories match. If they do not, some pages may be missing their counterpart in the other language. This chart helps you understand the site structure and spot problems with missing URLs.
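The language-directory check can be sketched with a list of crawled URLs: count URLs per top-level directory, then list the paths that exist under one language directory but not the other. The URLs, the `/en/` and `/de/` directory names and the helper functions are all hypothetical; the sketch also assumes both versions use identical paths after the language prefix.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical crawled URLs for a site with /en/ and /de/ language directories.
URLS = [
    "https://example.com/en/",
    "https://example.com/en/shoes",
    "https://example.com/en/boots",
    "https://example.com/de/",
    "https://example.com/de/shoes",
]


def top_directory(url):
    """Return the first path segment, e.g. 'en' for /en/shoes ('' for the root)."""
    return urlparse(url).path.strip("/").split("/")[0]


def directory_counts(urls):
    """Distribution of URLs by top-level directory."""
    return Counter(top_directory(u) for u in urls)


def missing_counterparts(urls, source="en", target="de"):
    """Paths that exist under /source/ but have no match under /target/."""

    def paths_in(lang):
        prefix = f"/{lang}/"
        paths = (urlparse(u).path for u in urls)
        return {p[len(prefix):] for p in paths if p.startswith(prefix)}

    return sorted(paths_in(source) - paths_in(target))


if __name__ == "__main__":
    print(directory_counts(URLS))
    print("missing in /de/:", missing_counterparts(URLS))
```

Here `/en/` has 3 URLs and `/de/` only 2, and the comparison points to `boots` as the page without a German counterpart.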

The main reasons for missing pages in crawl results

To sum up, these are the main reasons why some URLs may be missing from the crawl results:

- crawl settings;

- internal links are generated by JavaScript;

- links to the URLs are not marked up with <a href>;

- no links to the pages were found in the code; such pages are called orphan pages (read the article “How to find orphan pages”);

- pagination, filters or sections of the website cannot be scanned because their links are not available in the code;

- “nofollow” is applied to URLs that contain links to useful pages.
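One way to find orphan candidates is to compare a list of URLs you expect to exist, for example from your XML sitemap, with the URLs the crawler actually discovered through links. A minimal sketch with hypothetical data: it parses a sitemap string with the standard `xml.etree.ElementTree` module, assumes the sitemaps.org namespace, and uses a hypothetical `LINKED_URLS` set in place of real crawl results. Pages that are missing from the sitemap as well will not show up this way.

```python
import xml.etree.ElementTree as ET

# Hypothetical sitemap content.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/catalog</loc></url>
  <url><loc>https://example.com/landing-promo</loc></url>
</urlset>"""

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical set of URLs found via <a href> links during the crawl.
LINKED_URLS = {"https://example.com/", "https://example.com/catalog"}


def sitemap_urls(xml_text):
    """Extract <loc> values from a sitemap <urlset>."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)}


def orphan_candidates(xml_text, linked):
    """URLs listed in the sitemap that no crawled page links to."""
    return sorted(sitemap_urls(xml_text) - linked)


if __name__ == "__main__":
    for url in orphan_candidates(SITEMAP_XML, LINKED_URLS):
        print(url)
```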