Why do JavaScript and HTML versions differ in crawl results?

When you crawl a JavaScript website, expect the JavaScript (rendered) and HTML (source) versions of its pages to differ. These differences can affect how your pages are indexed. However, as long as the most important SEO elements are the same in both versions, some difference between JS and HTML is normal. Below we explain why crawl results for the JS version and the plain HTML version differ, and what to check when comparing JS and non-JS versions so that your website is indexed without problems.

Why JS code and HTML versions are different

Regular HTML is the original page source, before any of its JavaScript has been executed. Roughly speaking, it is the code your server returns to the client's browser, which the browser then renders.

The JS version is the code after the client browser has processed it: the result of executing the page's JavaScript.

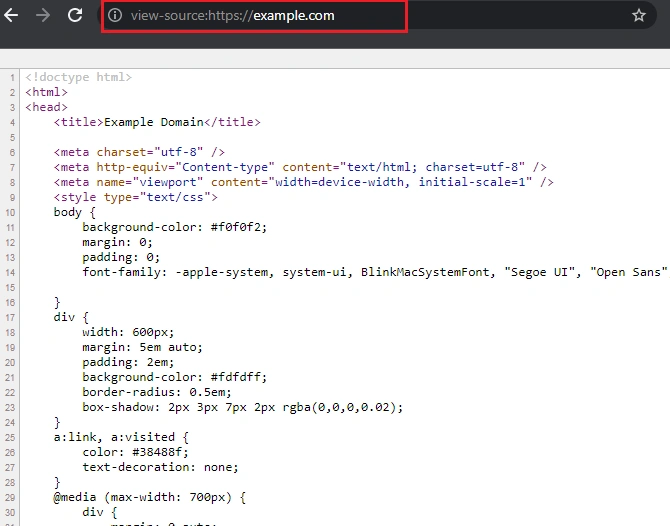

You can view a page's original source code by pressing Ctrl + U or by typing “view-source:” before the page address in the browser's address bar.

You can also disable JavaScript in your browser to see the page without rendering.

In short, regular HTML is what your server returns, while the JS version is the result of processing by the client browser. The client can be a search engine bot or any visitor.
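To make the difference concrete, here is a minimal Python sketch that extracts a few SEO elements (title, meta robots, canonical) from an HTML string using only the standard library, then compares the raw and rendered versions. It assumes you have already saved both versions as strings, for example the server response and the DOM from a headless browser; `SeoExtractor`, `extract_seo`, and the sample markup are hypothetical and not part of any crawler's API.

```python
from html.parser import HTMLParser


class SeoExtractor(HTMLParser):
    """Collects the <title>, meta robots value and canonical link of a document."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.robots = None
        self.canonical = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.robots = attrs.get("content")
        elif tag == "link" and "canonical" in (attrs.get("rel") or "").lower().split():
            self.canonical = attrs.get("href")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_seo(html_text: str) -> dict:
    parser = SeoExtractor()
    parser.feed(html_text)
    parser.close()
    return {"title": parser.title.strip(), "robots": parser.robots, "canonical": parser.canonical}


# Hypothetical example: the server sends an empty app shell, JavaScript fills it in.
raw_html = """<html><head><title>Loading...</title>
<meta name="robots" content="index, follow"></head>
<body><div id="app"></div><script src="/app.js"></script></body></html>"""

rendered_html = """<html><head><title>Red Running Shoes | Example Store</title>
<meta name="robots" content="index, follow">
<link rel="canonical" href="https://example.com/shoes/red"></head>
<body><div id="app"><h1>Red Running Shoes</h1></div></body></html>"""

raw, rendered = extract_seo(raw_html), extract_seo(rendered_html)
for field in raw:
    if raw[field] != rendered[field]:
        print(f"{field}: {raw[field]!r} -> {rendered[field]!r}")
```

For these sample strings, the script reports that the title and the canonical differ, while the robots rule is the same in both versions, which is a typical pattern for a client-rendered page.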

What to look for when comparing JS and non-JS versions

When crawling your website, search engines first process the original source of each page, and they may use its non-JS titles, metadata, and content when indexing your pages. Therefore, pay attention to all of the points described below.

- The non-JS version must contain the same indexing rules as the JS version. If the rules differ, search engines will apply the more restrictive one, which can prevent your pages from being indexed.

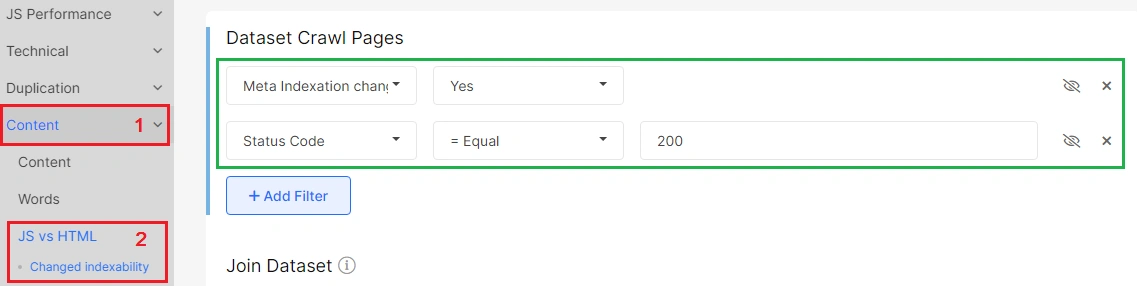

To see whether your indexing rules have changed, open the JS crawl results and go to the “Content” – “JS vs HTML” – “Changed indexability” report. This data table lists the pages whose indexing rules differ between the original page source and the rendered JS.
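The “more restrictive rule wins” behavior can be sketched as follows. This is a simplification that assumes the indexing rules come only from the meta robots value in each version; real search engines also consider X-Robots-Tag headers, robots.txt, and other signals. The function names are hypothetical.

```python
from __future__ import annotations

# Assumption: indexing rules come only from the meta robots value of each version.
# X-Robots-Tag headers and robots.txt are ignored in this sketch.


def parse_robots(content: str | None) -> set[str]:
    """Split a value such as 'noindex, nofollow' into lowercase directives."""
    if not content:
        return set()
    return {token.strip().lower() for token in content.split(",") if token.strip()}


def effective_rules(raw_content: str | None, rendered_content: str | None) -> dict[str, bool]:
    """Merge both versions so that a restriction found in either one wins."""
    directives = parse_robots(raw_content) | parse_robots(rendered_content)
    return {
        "indexable": not (directives & {"noindex", "none"}),
        "followable": not (directives & {"nofollow", "none"}),
    }


def indexability_changed(raw_content: str | None, rendered_content: str | None) -> bool:
    """True when the raw HTML and the rendered JS disagree about indexing."""
    return effective_rules(raw_content, None) != effective_rules(None, rendered_content)


print(effective_rules("index, follow", "noindex"))
print(indexability_changed("index, follow", "noindex"))
```

Here `effective_rules("index, follow", "noindex")` returns `{'indexable': False, 'followable': True}`: a `noindex` in either version is enough to keep the page out of the index in this model.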

- Search engines take the title in the page's original source code into account during indexing. Therefore, check whether the titles in the two versions match.

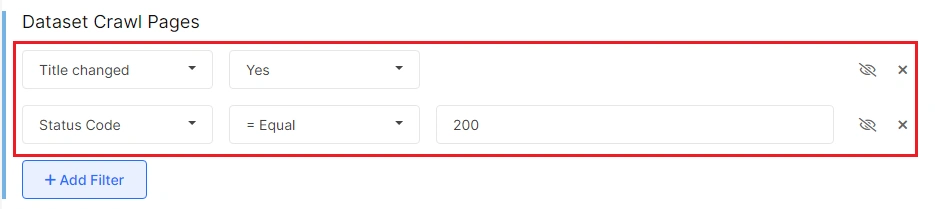

To do this, open the “Content” – “JS vs HTML” – “Changed titles” report to see a list of pages whose titles differ between the JS and non-JS versions.
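A comparison like the “Changed titles” report can be approximated in a few lines of Python. This sketch assumes a hypothetical `pages` dictionary mapping each URL to its raw and rendered titles, and it treats titles that differ only in whitespace as equal, which is a design choice for illustration rather than a documented rule of any crawler.

```python
from __future__ import annotations


def normalize_title(title: str | None) -> str:
    """Collapse runs of whitespace so formatting-only differences are ignored."""
    return " ".join((title or "").split())


def changed_titles(pages: dict[str, tuple[str | None, str | None]]) -> list[str]:
    """Return the URLs whose raw-HTML title differs from the rendered-JS title."""
    return sorted(
        url
        for url, (raw_title, rendered_title) in pages.items()
        if normalize_title(raw_title) != normalize_title(rendered_title)
    )


# Hypothetical crawl data: URL -> (title in raw HTML, title after rendering).
pages = {
    "https://example.com/": ("Example Store", "Example Store"),
    "https://example.com/shoes": ("Loading...", "Running Shoes | Example Store"),
    "https://example.com/about": ("About  us\n", "About us"),
    "https://example.com/blog": (None, "Blog | Example Store"),
}
print(changed_titles(pages))
```

For this sample data the function returns `['https://example.com/blog', 'https://example.com/shoes']`; the “About” page is not flagged because its titles differ only in whitespace.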

- Also check the other important SEO elements:

  - Canonical tags;

  - Hreflang attributes;

  - Main content;

  - Headings;

  - Internal links.

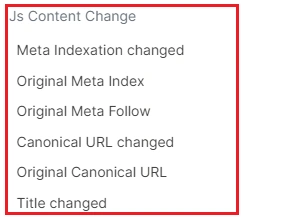

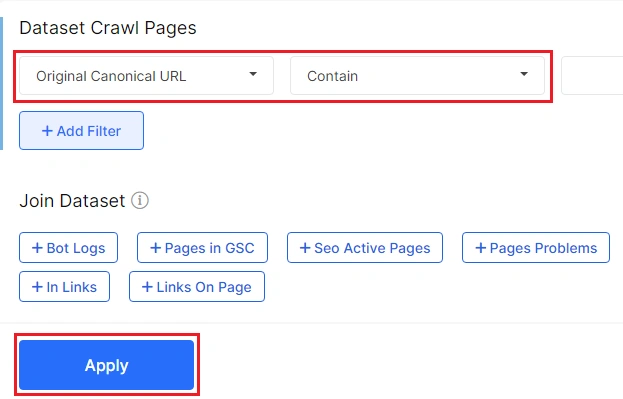

To check whether this data differs, go to “Data Tables” – “Pages”, click “+ Add filter”, and select the relevant item from the “JavaScript Content change” filters.

You can also filter by elements found in the non-JS version. All of these elements must be valid for search bots to process them correctly. For example, to check whether canonical URLs are absolute, select “Original canonical URL” – “Is absolute”.
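The “Is absolute” check can be sketched with Python's `urllib.parse`. Here a canonical counts as absolute only if it includes an `http` or `https` scheme and a host; this definition, like the hypothetical `is_absolute` and `check_canonical` helpers, is an assumption for illustration and may not match the tool's exact filter logic.

```python
from __future__ import annotations

from urllib.parse import urljoin, urlparse


def is_absolute(url: str) -> bool:
    """Assumed rule: absolute means an http(s) scheme plus a host, e.g. https://example.com/page."""
    parsed = urlparse(url)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


def check_canonical(page_url: str, canonical: str | None) -> str:
    """Classify a canonical value found in the non-JS version of a page."""
    if not canonical:
        return "missing"
    if is_absolute(canonical):
        return "ok"
    # A relative canonical is resolved against the page URL,
    # which may not be the URL you intended to declare.
    return f"relative (resolves to {urljoin(page_url, canonical)})"


print(check_canonical("https://example.com/shoes/red?color=1", "/shoes/red"))
print(check_canonical("https://example.com/shoes/red", "https://example.com/shoes/red"))
```

The first call returns `relative (resolves to https://example.com/shoes/red)` and the second returns `ok`.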

Also make sure that the links in the JS version are the same for robots and users. For search engines to crawl your URLs, the URLs must appear in `<a>` elements with an `href` attribute (`<a href="...">`). Search engines do not crawl other URLs, including those that appear on the page only after a user action (for example, when the user moves the cursor over a page element). As a result, search engines may not discover or index these URLs.
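The link rule above can be approximated with a small parser that accepts only URLs from `<a>` elements with an `href`. This is a simplified sketch: the `LinkExtractor` class and `crawlable_links` function are hypothetical, and skipping fragment-only and `javascript:` links is an assumption about what a crawler would ignore. Running it on both the raw and the rendered HTML and comparing the two sets shows which links exist in only one version.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects URLs from <a href="..."> only; onclick handlers and other attributes are ignored."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links = set()  # absolute URLs found in <a href>

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = (dict(attrs).get("href") or "").strip()
        # Assumption: empty hrefs, fragment-only links and javascript: pseudo-URLs are not crawlable.
        if not href or href.startswith("#") or href.lower().startswith("javascript:"):
            return
        self.links.add(urljoin(self.base_url, href))


def crawlable_links(html_text: str, base_url: str) -> set:
    parser = LinkExtractor(base_url)
    parser.feed(html_text)
    parser.close()
    return parser.links


sample_html = """
<a href="/shoes">Shoes</a>
<a href="https://example.com/sale">Sale</a>
<span onclick="location.href='/hidden'">Hidden</span>
<a onclick="goTo('/also-hidden')">Also hidden</a>
<a href="javascript:void(0)">Menu</a>
"""
print(sorted(crawlable_links(sample_html, "https://example.com/")))
```

This prints `['https://example.com/sale', 'https://example.com/shoes']`; the URLs attached through `onclick` handlers are not collected, mirroring how a crawler that follows only `<a href>` links would miss them.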