AI Bots & SEO in 2026: Everything You Need to Know

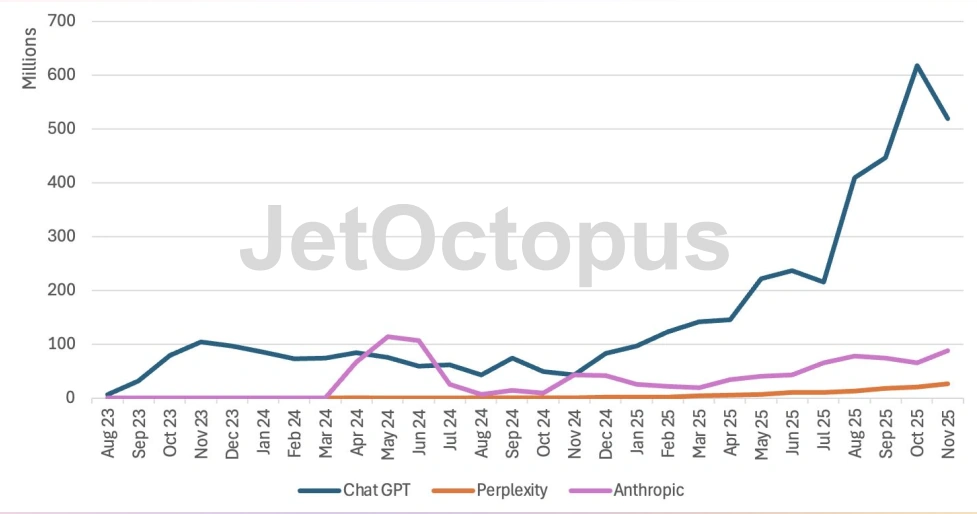

AI bots now account for roughly 50% of Googlebot activity, and 75% of AI bot traffic comes from user searches, not just training crawls, according to Stan Dashevskyi, Head of SEO and Customer Success at JetOctopus. If your site isn’t optimized for AI visibility, you’re already losing ground, he said.

Speaking at a recent JetOctopus webinar, Stan explained what SEO specialists need to know about AI bots in 2026, based on analysis of server log data from thousands of websites.

The AI bot landscape

Three major players dominate AI-powered search: OpenAI’s ChatGPT, Perplexity and Anthropic’s Claude. These tools aren’t just scraping the web for training data anymore. They’re actively researching on behalf of real users, breaking down complex prompts into search queries and compiling answers from multiple sources.

Unlike traditional search engines, AI bots don’t maintain their own search index. Instead, they rely on large language models (LLMs) that need continual training and real-time fact-checking against trusted sources. When users ask questions, AI agents conduct live research, verify information across multiple pages and synthesize responses with citations.

Three types of AI bot activity

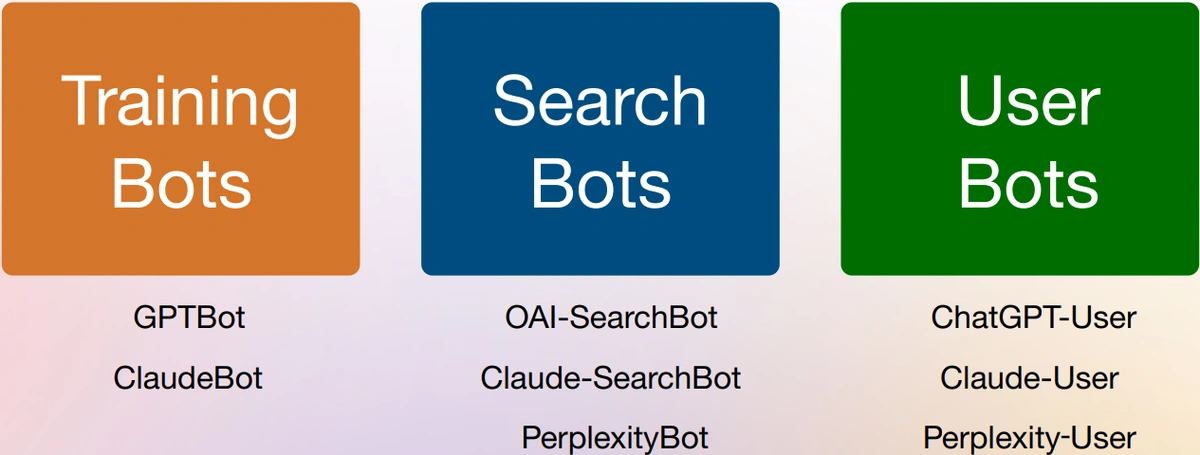

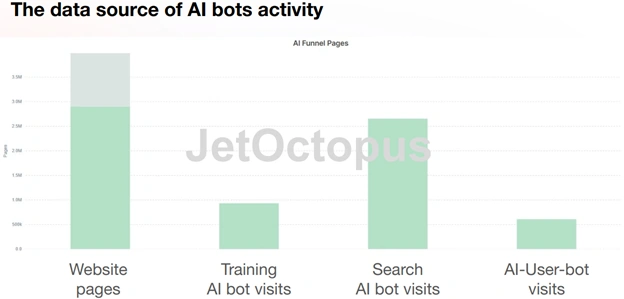

AI bots visit your site for three distinct reasons:

• Training bots collect information to educate their underlying models.

• Search bots crawl for new data to expand their knowledge base.

• User bots conduct research on behalf of real users performing searches.

The first takeaway from the webinar is that 75% of all AI bot activity is generated by user searches. In other words, three-quarters of what AI bots fetch on the web is fetched on behalf of users. Optimizing for AI bots, then, isn’t mainly about training data; it’s about winning real user queries right now.

According to JetOctopus data, user-generated bot traffic represents the majority of AI engagement with website content. People use AI tools to find information; the AI bots search Google or Bing on their behalf, then compile what they find into answers in the tool’s interface.

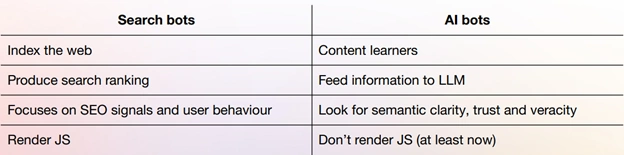

How AI bots differ from search bots

AI crawlers and traditional search bots share some behaviors. Both crawl the web, follow links and consume visible content. But the similarities end there.

Search engines like Google focus on technical SEO signals: page speed, mobile-friendliness, internal linking structure. They render JavaScript, build a search index and rank pages based on hundreds of factors. Their goal is to organize information for retrieval.

AI bots prioritize different signals, he explained. They don’t render JavaScript, at least not yet. They trust server-rendered HTML and evaluate content using mathematical models such as cosine similarity and entity graphs. Cosine similarity measures how closely the vector representation of your content matches that of the user’s query (a proxy for intent), while entity graphs map relationships between topics.
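To make cosine similarity concrete, here is a minimal Python sketch. It uses plain word counts as vectors, which is a simplifying assumption: AI systems compare dense embeddings produced by a language model, but the formula is the same, cos θ = (A · B) / (‖A‖ ‖B‖). The query and page texts are made up for illustration.

```python
import math
import re
from collections import Counter


def term_vector(text):
    """Lowercased word counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """cos(theta) = (a . b) / (|a| * |b|); returns 0.0 if either vector is empty."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical query and candidate pages.
query = term_vector("how to fix slow page speed")
page_a = term_vector("How to fix slow page speed: compress images and cache assets")
page_b = term_vector("Our company history and team photos")

print(round(cosine_similarity(query, page_a), 3))
print(round(cosine_similarity(query, page_b), 3))
```

A page that shares the query’s vocabulary scores close to 1, while an unrelated page scores 0.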

Instead of ranking pages, AI bots verify information against trusted sources and determine whether your content deserves to be cited in an answer shown to millions of users.

This fundamental difference changes optimization strategy. Single-page application (SPA) frameworks and other dynamic content that rely on client-side rendering may be invisible to AI bots. If your site loads content via JavaScript, AI tools might not see it at all.
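One quick way to check for this problem is to look at the raw HTML your server returns, before any JavaScript runs, and confirm that key text is in it. The sketch below uses only Python’s standard-library `html.parser`; the function name `visible_without_js` and the sample pages are hypothetical.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text from raw HTML, ignoring <script> and <style> contents.

    No JavaScript is executed, which approximates what a non-rendering
    bot receives from the server.
    """

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_without_js(raw_html, phrase):
    """True if `phrase` appears in the server-rendered text (case-insensitive)."""
    parser = VisibleTextParser()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return phrase.lower() in text


# Hypothetical pages: one server-rendered, one that injects text with JavaScript.
ssr_page = "<html><body><h1>Pricing</h1><p>Plans start at $10 per month.</p></body></html>"
csr_page = (
    '<html><body><div id="root"></div>'
    '<script>document.getElementById("root").textContent = "Plans start at $10 per month.";</script>'
    "</body></html>"
)

print(visible_without_js(ssr_page, "plans start at"))  # True
print(visible_without_js(csr_page, "plans start at"))  # False
```

In practice, feed it the body of a plain HTTP response (for example from `urllib.request.urlopen`), not the DOM shown in a browser’s developer tools, which already reflects executed JavaScript.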

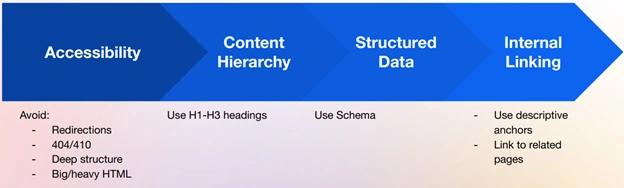

Site structure matters more than ever

AI systems can’t browse like humans. They rely on HTML structure and markup to understand context. Without JavaScript rendering, clean architecture becomes critical.

Excessive redirects, forced errors, excessive click depth and bloated HTML all reduce your chances of appearing in AI-generated answers. Each technical friction point makes it harder for bots to access and parse your content. Pages need to be fast, their HTML needs to be clean, their DOM node count needs to be small, and valuable pages need to be as close to the homepage as possible.
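Click depth, the minimum number of clicks from the homepage to a page, can be computed with a breadth-first search over your internal-link graph. This sketch assumes you already have that graph (for example from a crawler export) as a dictionary; the URLs and the `MAX_DEPTH` threshold are hypothetical.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search from the homepage; depth = minimum clicks to reach a page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph: page -> pages it links to.
site = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/page-2/"],
    "/blog/page-2/": ["/blog/page-3/"],
    "/blog/page-3/": ["/blog/ai-bots-guide/"],
    "/products/": ["/products/widget/"],
    "/orphan/": ["/"],  # links out, but nothing links to it
}

depths = click_depths(site)
MAX_DEPTH = 3  # assumed threshold; choose one that suits your site
too_deep = sorted(p for p, d in depths.items() if d > MAX_DEPTH)
print(too_deep)  # ['/blog/ai-bots-guide/']
```

Pages that never appear in the result (like `/orphan/` here) are orphans: no internal link path from the homepage reaches them at all.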

Content hierarchy through proper heading usage helps AI bots identify main topics and supporting details. According to Stan, AI bots trust the headings on your pages and parse them to distinguish main topics from secondary sections.
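To see the outline a bot could extract, you can pull the h1 to h6 headings out of raw HTML. This is a minimal standard-library sketch; flagging skipped levels (an h2 followed directly by an h4) is an assumed heuristic for a broken hierarchy, not a rule stated in the webinar.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class HeadingOutline(HTMLParser):
    """Collects (level, text) pairs for h1-h6 headings in document order."""

    LEVELS = {f"h{i}": i for i in range(1, 7)}

    def __init__(self):
        super().__init__()
        self.headings: List[Tuple[int, str]] = []
        self._level: Optional[int] = None
        self._buf: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.LEVELS:
            self._level = self.LEVELS[tag]
            self._buf = []

    def handle_endtag(self, tag):
        if tag in self.LEVELS and self._level is not None:
            # Collapse whitespace, including text from nested inline tags.
            text = " ".join("".join(self._buf).split())
            self.headings.append((self._level, text))
            self._level = None

    def handle_data(self, data):
        if self._level is not None:
            self._buf.append(data)


def skipped_levels(headings):
    """Headings that jump more than one level deeper than the previous one."""
    problems = []
    previous = 0
    for level, text in headings:
        if level > previous + 1:
            problems.append((level, text))
        previous = level
    return problems


# Hypothetical page fragment.
page = (
    "<h1>AI Bots and SEO</h1><h2>Site structure</h2>"
    "<h4>Schema markup</h4><h2>Content <em>requirements</em></h2>"
)
parser = HeadingOutline()
parser.feed(page)
parser.close()
print(parser.headings)
print(skipped_levels(parser.headings))  # [(4, 'Schema markup')]
```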

Schema markup acts as a cheat sheet, explicitly stating where to find article authors, publication dates, product prices and stock status. For large sites with thousands of similar pages, structured data becomes essential for helping bots understand what each page contains.
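As an illustration, structured data is usually embedded as JSON-LD in a `<script type="application/ld+json">` tag in the server-rendered HTML. The sketch below builds schema.org `Article` and `Product` objects covering the fields mentioned above (author, publication date, price and stock status). All values are placeholders, and real markup should be validated with a tool such as Google’s Rich Results Test.

```python
import json

SCRIPT_OPEN = '<script type="application/ld+json">'


def article_jsonld(headline, author, published, modified):
    """schema.org Article with author and publication dates (ISO 8601 strings)."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,
        "dateModified": modified,
    }


def product_jsonld(name, price, currency, in_stock):
    """schema.org Product with a single Offer carrying price and stock status."""
    availability = "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": availability,
        },
    }


def script_tag(data):
    """Serialize to JSON-LD; escaping "</" keeps the JSON from closing the tag early."""
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return SCRIPT_OPEN + body + "</script>"


# Placeholder values for illustration only.
print(script_tag(article_jsonld("AI Bots & SEO in 2026", "Jane Doe", "2026-01-15", "2026-02-01")))
print(script_tag(product_jsonld("Example Widget", 19.9, "USD", in_stock=True)))
```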

Internal linking with descriptive anchors shows AI systems how pages relate to each other. Good internal linking distributes topical authority and reinforces the connections between related pages.
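A simple audit is to list internal links whose anchor text says nothing about the target page. This sketch treats root-relative URLs (starting with a single `/`) as internal and uses a hypothetical list of generic phrases; both are assumptions to adapt to your site.

```python
from html.parser import HTMLParser

# Assumed list of generic anchor phrases; extend it for your own site.
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more", "more", "this page"}


class AnchorCollector(HTMLParser):
    """Collects (href, anchor text) pairs for every <a href="..."> on a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._buf = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._buf = []

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Image-only links end up with empty text; their alt text is not collected.
            text = " ".join("".join(self._buf).split())
            self.links.append((self._href, text))
            self._href = None

    def handle_data(self, data):
        if self._href is not None:
            self._buf.append(data)


def is_internal(href):
    """Assumption: root-relative URLs ("/path") are internal; "//host" is not."""
    return href.startswith("/") and not href.startswith("//")


def weak_internal_anchors(raw_html):
    """Internal links whose anchor text is empty or generic."""
    parser = AnchorCollector()
    parser.feed(raw_html)
    parser.close()
    return [
        (href, text)
        for href, text in parser.links
        if is_internal(href) and (not text or text.lower() in GENERIC_ANCHORS)
    ]


# Hypothetical page fragment.
page = (
    '<p>See our <a href="/guides/schema-markup/">schema markup guide</a>, '
    '<a href="/blog/">read more</a> or <a href="https://example.com/">here</a>.</p>'
)
print(weak_internal_anchors(page))  # [('/blog/', 'read more')]
```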

Content requirements for AI visibility

AI agents select content based on core qualities:

Concise and direct. Content should get straight to the point; vague content without clear takeaways gets skipped. Since ChatGPT was trained primarily on user-generated content, Stan said, natural language, Q&A formats and conversational queries perform well.

Deep and clear. AI prefers comprehensive articles that thoroughly address a topic, with definitions, examples and simple explanations. Content quality is the key for AI.

Structured and factual. LLMs can tell whether your claims are consistent with what they already know. If your content contradicts established information, it gets ignored. New facts or unique insights increase visibility, but the foundation must be accurate.

Authoritative. Google’s AI Mode has access to more credibility signals than other AI tools. Strong backlink profiles and brand reputation contribute to trustworthiness evaluations. Unlike traditional Google search, AI agents also take citations into account.

Safe and appropriate. Controversial, unclear or hostile content gets deprioritized, especially for sensitive topics.

Fresh. Content should be up to date, and that doesn’t mean just changing the publication date or the year in the title. Because AI bots respond to real-time user demand, freshness matters much more than in old-school SEO. Outdated content is simply skipped.

The AI ranking algorithm

AI ranking combines traditional SEO fundamentals with new optimization signals. Your content must be reachable: fast pages, clean HTML, minimal DOM node count, proximity to the homepage. Technical accessibility remains foundational.
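For the node-count signal, a rough proxy is the number of elements in the raw HTML a bot downloads. The sketch counts start tags with Python’s `html.parser` and reports the byte size alongside; the `MAX_ELEMENTS` threshold is an assumption, not a documented limit for AI bots.

```python
from html.parser import HTMLParser

MAX_ELEMENTS = 1500  # assumed threshold, not a documented AI-bot limit


class ElementCounter(HTMLParser):
    """Counts elements in raw HTML as a rough proxy for DOM size."""

    def __init__(self):
        super().__init__()
        self.elements = 0

    # HTMLParser's default handle_startendtag calls handle_starttag,
    # so self-closing tags such as <br/> are counted once here too.
    def handle_starttag(self, tag, attrs):
        self.elements += 1


def html_weight(raw_html):
    """Element count and byte size of the HTML a bot downloads."""
    counter = ElementCounter()
    counter.feed(raw_html)
    counter.close()
    return {"elements": counter.elements, "bytes": len(raw_html.encode("utf-8"))}


# Hypothetical page: html, body, 3 x (div, span), br = 9 elements.
page = "<html><body>" + "<div><span>x</span></div>" * 3 + "<br/></body></html>"
weight = html_weight(page)
print(weight)
print("too heavy" if weight["elements"] > MAX_ELEMENTS else "ok")
```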

Correctness matters. Facts must be accurate, and you need to add new information beyond what AI systems already know: rather than just repeating known points, contribute something new. Content can’t be informal, overly promotional or unprofessional.

Well-structured content requires relevant terms in headings, internal links pointing to related pages with descriptive anchors and comprehensive answers. Use schema markup where applicable – prioritize Article, FAQPage, HowTo and Product schemas.

Vary your content format with lists, tables and PDFs. Don’t publish articles made only of paragraphs and images; include lists, and tables where possible. AI bots parse PDFs easily, and Google can rank PDF files separately in search results, creating additional traffic opportunities.

Authority signals like backlink profiles and citations influence AI perception. According to Stan, Google’s AI Mode also considers behavioral factors such as dwell time and scroll depth, which indicate genuine user engagement.

Research shows a strong correlation between ranking in traditional search results and appearing in AI-generated answers. To perform well in AI, you still need to keep doing classic SEO: those practices remain effective, and AI optimization adds new requirements on top.

Measuring AI bot activity

Server logs are the primary source of data on AI bot activity. Without log analysis, AI-driven visibility stays hidden, and you can’t optimize what you can’t measure.

JetOctopus parses logs to identify AI crawlers by user agent and IP address, categorizing hits as training bots, search bots, or user-generated bots. This data reveals which parts of your site are indexable, what content interests AI systems and when user-driven research visits occur.
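Here is a minimal sketch of that kind of classification for a single combined-format access log line. The user-agent tokens reflect what OpenAI, Anthropic and Perplexity have published at the time of writing and may change, so treat the mapping as an assumption and check each vendor’s documentation. User-agent strings can be spoofed; a production tool would also verify the request IP against the vendors’ published IP ranges, which this sketch omits.

```python
import re

# Apache/Nginx "combined" log format:
# ip ident user [time] "METHOD path PROTOCOL" status size "referer" "user-agent"
LOG_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<ua>[^"]*)"'
)

# Assumed token -> category mapping; verify against current vendor docs.
BOT_CATEGORIES = {
    "GPTBot": "training",
    "ClaudeBot": "training",
    "OAI-SearchBot": "search",
    "Claude-SearchBot": "search",
    "PerplexityBot": "search",
    "ChatGPT-User": "user",
    "Claude-User": "user",
    "Perplexity-User": "user",
}


def classify(line):
    """Return (category, bot, ip, path) for an AI-bot hit, or None."""
    m = LOG_RE.match(line)
    if not m:
        return None
    for token, category in BOT_CATEGORIES.items():
        if token in m["ua"]:
            return category, token, m["ip"], m["path"]
    return None


# Hypothetical log lines (documentation IP ranges).
ai_line = (
    '203.0.113.7 - - [12/Jan/2026:14:03:11 +0000] "GET /pricing/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko); compatible; ChatGPT-User/1.0; +https://openai.com/bot"'
)
google_line = (
    '198.51.100.4 - - [12/Jan/2026:14:05:00 +0000] "GET / HTTP/1.1" 200 8000 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)

print(classify(ai_line))      # ('user', 'ChatGPT-User', '203.0.113.7', '/pricing/')
print(classify(google_line))  # None
```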

While training and search bot visits don’t have a direct impact on visibility, they indicate whether AI systems are aware of your content. User bot visits can be treated as impressions in AI tool interfaces. By checking which pages user-generated AI bots visited, you can discover which content your audience is searching for and then scale it.
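Given hits already classified by bot type, ranking pages by user-bot visits takes a few lines. The records below are hypothetical. The second function lists pages that training or search bots have fetched but no user bot has requested yet, which is one possible reading of “AI systems are aware of it, but it isn’t being shown to users.”

```python
from collections import Counter

# Hypothetical pre-classified hits: (category, path). In practice these
# come from your parsed and classified server logs.
hits = [
    ("user", "/guides/schema-markup/"),
    ("user", "/guides/schema-markup/"),
    ("training", "/guides/schema-markup/"),
    ("user", "/pricing/"),
    ("search", "/blog/ai-bots/"),
    ("user", "/guides/schema-markup/"),
]


def top_user_bot_pages(hits, n=10):
    """Pages most often fetched by user-triggered AI bots (a rough impressions proxy)."""
    counts = Counter(path for category, path in hits if category == "user")
    return counts.most_common(n)


def known_but_unrequested(hits):
    """Pages seen by training/search bots that no user bot has fetched."""
    seen = {p for c, p in hits if c in ("training", "search")}
    requested = {p for c, p in hits if c == "user"}
    return sorted(seen - requested)


print(top_user_bot_pages(hits))     # [('/guides/schema-markup/', 3), ('/pricing/', 1)]
print(known_but_unrequested(hits))  # ['/blog/ai-bots/']
```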

Log analysis also tracks rapid changes in AI behavior. In early 2025, AI bots started querying Google on behalf of users, not just Bing, which they had relied on until then.

This shift drove massive growth in long-tail keyword impressions. According to JetOctopus internal data, impressions grew sharply for long-tail queries of seven, eight, nine, ten and more than ten words. AI tools take user prompts, break them down into smaller ones, reformulate them as search queries, pull results from Google or Bing and then compile the retrieved pages into an answer.
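To reproduce this kind of long-tail analysis on your own data, bucket queries by word count and sum impressions per bucket. The sketch assumes rows of `(query, impressions)`, for example from a search-performance export; the rows are invented for illustration. Running it on two date ranges and comparing the buckets shows whether long-tail impressions are growing.

```python
from collections import Counter


def word_count_bucket(query):
    """Bucket a search query by word count; queries over 10 words share one bucket."""
    n = len(query.split())
    return "11+" if n > 10 else str(n)


def long_tail_impressions(rows, min_words=7):
    """Sum impressions per word-count bucket for queries of at least `min_words` words."""
    totals = Counter()
    for query, impressions in rows:
        if len(query.split()) >= min_words:
            totals[word_count_bucket(query)] += impressions
    return dict(totals)


# Hypothetical (query, impressions) rows.
rows = [
    ("ai bots seo", 900),                                                      # 3 words, ignored
    ("how do ai bots decide which pages to cite", 40),                         # 9 words
    ("which ai bots crawl my site the most often", 10),                        # 9 words
    ("what is the best way to optimize product pages for chatgpt answers", 12), # 12 words
    ("does google ai mode use schema markup", 25),                             # 7 words
]

print(long_tail_impressions(rows))
```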

SEOs now see more diverse search queries clustered around topics, and monthly search volumes continue to inflate.

The shift to answer engines

The biggest difference between search engines and AI tools is that AI tools are transforming the web and the way people use the internet. Instead of searching for information, users now ask for answers, skipping the traditional step of browsing multiple results.

With 75% of AI bot activity driven by user searches, the volume of AI-powered queries will grow dramatically. But this growth only benefits sites optimized for AI visibility. If AI bots can’t access, understand or trust your content, you won’t appear in their answers.

The opportunity exists for sites that adapt. AI systems need authoritative sources to cite. They reward comprehensive, accurate, well-structured content. As AI adoption accelerates, being discoverable by AI agents becomes as important as ranking in traditional search.

What to do now

Stan outlined four priorities for 2026:

Continue traditional SEO. Keep doing old-school SEO; it will help you succeed in AI-driven research. Fresh, well-structured, reachable, authoritative content still works. AI optimization builds on these fundamentals rather than replacing them.

Become the answer. To gain visibility now, you need to stop being just a relevant source of information and become the answer itself: provide comprehensive, definitive answers to user questions.

Track new metrics. In 2026, SEOs may want to look beyond clicks to new KPIs such as AI-driven discoverability and citations of their business, Stan said.

Use log analysis to track:

• Pages visited by user-generated AI bots

• AI bot crawl depth compared to Googlebot

• Pages with high AI bot bounce (single hit, no follow-up)

• Time-of-day patterns in user AI bot activity
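These checks can be sketched over parsed log records. In this hypothetical example each record is `(agent group, timestamp, path)`. “Bounce” is interpreted as a page fetched exactly once in the period, and crawl depth is approximated by the number of URL path segments rather than true click depth; both interpretations are assumptions.

```python
from collections import Counter
from datetime import datetime
from statistics import mean

# Hypothetical parsed log records: (agent group, timestamp, path).
records = [
    ("ai-user", "12/Jan/2026:09:15:02 +0000", "/guides/schema-markup/"),
    ("ai-user", "12/Jan/2026:09:40:45 +0000", "/pricing/"),
    ("ai-user", "12/Jan/2026:21:05:10 +0000", "/guides/schema-markup/"),
    ("googlebot", "12/Jan/2026:03:00:00 +0000", "/blog/2024/05/old-post/"),
    ("googlebot", "12/Jan/2026:03:00:05 +0000", "/pricing/"),
]

# Combined-log timestamp; %b expects English month names (C locale).
TIME_FMT = "%d/%b/%Y:%H:%M:%S %z"


def hour_histogram(records, agent):
    """Hits per hour of day, in the UTC offset recorded in the log."""
    return Counter(
        datetime.strptime(t, TIME_FMT).hour for a, t, _ in records if a == agent
    )


def single_hit_pages(records, agent):
    """Pages the agent fetched exactly once: candidates for 'AI bot bounce'."""
    counts = Counter(p for a, _, p in records if a == agent)
    return sorted(p for p, c in counts.items() if c == 1)


def avg_path_depth(records, agent):
    """Mean number of URL path segments, a rough proxy for crawl depth."""
    depths = [len([s for s in p.split("/") if s]) for a, _, p in records if a == agent]
    return mean(depths) if depths else 0.0


print(hour_histogram(records, "ai-user"))
print(single_hit_pages(records, "ai-user"))
print(avg_path_depth(records, "ai-user"), avg_path_depth(records, "googlebot"))
```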

Use proper tools. “The most critical probably is that you have to use the right tools to measure and analyze AI bots visibility and activity on your website,” he said. Without log analysis, you’re optimizing blind. Server logs are the primary source of data for any AI bot activity, Stan emphasized.

“Master those things in 2026 and your website becomes an AI-trusted source of answers, visible, cited in conversations and chosen by AI systems billions of users rely on,” Stan concluded.