How to find URLs blocked by robots.txt file in sitemaps and why it is important

Sitemaps are one of the most useful tools for your website’s SEO: they tell search engines which pages you consider important and want indexed. But a sitemap can work against you when it contains broken URLs, non-indexable URLs, or pages blocked by robots.txt.

What does it mean for search engines if there are URLs blocked by robots.txt file in the XML sitemap?

On the one hand, the sitemap gives search engines a list of URLs and says, “Dear Google, please scan and index these pages.” On the other hand, the robots.txt file prevents Google from crawling those same pages. As a result, Google cannot fetch the content and index it, even if the pages are otherwise indexable.

Therefore, we recommend detecting pages blocked by robots.txt in your sitemaps.
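To illustrate the conflict outside of any crawler, here is a minimal Python sketch that checks the URLs listed in a sitemap against a set of robots.txt rules. The sample rules, the `example.com` URLs, and the function names are assumptions made up for this illustration. It relies on the standard-library `urllib.robotparser`, which uses simple prefix matching and does not support Google-style wildcards (`*`, `$`), so treat the result as an approximation of how Googlebot would read more complex rules.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> list[str]:
    """Return every <loc> value found in the sitemap XML."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall(".//sm:loc", SITEMAP_NS) if loc.text]


def blocked_urls(robots_txt: str, sitemap_xml: str, user_agent: str = "Googlebot") -> list[str]:
    """Return sitemap URLs that the robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in sitemap_urls(sitemap_xml) if not parser.can_fetch(user_agent, url)]


# Hypothetical sample data, not taken from a real website.
ROBOTS_TXT = """User-agent: *
Disallow: /cart/
"""

SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/products/shoes</loc></url>
  <url><loc>https://example.com/cart/checkout</loc></url>
</urlset>"""

if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, SITEMAP_XML):
        print("In sitemap but blocked by robots.txt:", url)
```

With this sample data, only the `/cart/checkout` URL is reported, because it is the only sitemap entry that falls under the `Disallow: /cart/` rule.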

How to detect pages blocked by the robots.txt file in sitemaps?

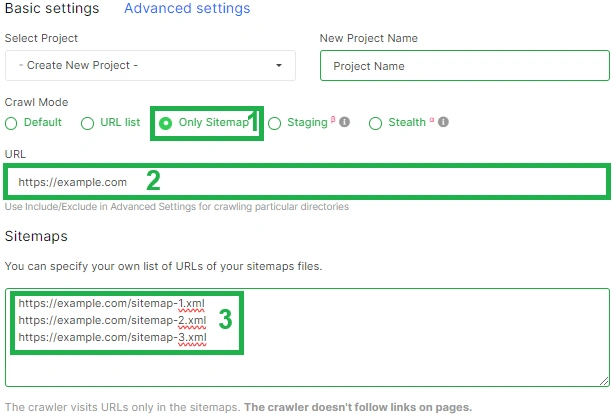

To do this, start a new crawl. You have two options: crawl only the sitemaps in “Sitemaps Only” mode, or crawl the entire website and add the sitemaps in the “Sitemap” field in the advanced settings. If you decide to crawl only sitemaps, select the “Sitemaps Only” mode, enter the link to your website’s home page in the “URL” field, and enter the links to the sitemaps you want to test in the “Sitemaps” field.

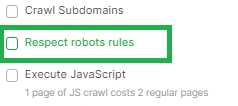

After that, configure the remaining crawl settings, deactivate the “Respect robot rules” checkbox, and start the crawl.

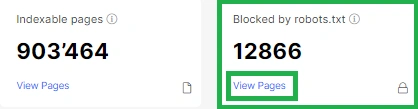

After the crawl is complete, which usually happens very quickly, go to the results and select the “Indexation” report.

Please note that the next steps apply if you ran the crawl in “Sitemaps Only” mode.

In the “Indexation” report, select the “Blocked by robots.txt” dataset. Here you will find a list of all URLs that were found in the sitemap but cannot be crawled by Google, because a rule in the robots.txt file prohibits the search engine from accessing them.

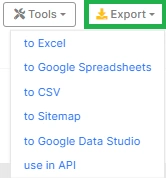

If you want to analyze these pages in more detail, click the “Export” button to export all the data in a format convenient for you.

If you ran a crawl of the entire website with sitemaps included, go to the “URL Sitemap” datatable and add the “Page Crawl” dataset. Then click the “+Add filter” button and set “Is robots.txt indexable” to “No”.

This way, you will get data only for URLs found in the sitemaps.

What to do with URLs blocked by robots.txt file in sitemaps?

Analyze what kind of pages these are. Maybe you need to change your robots.txt file and allow them to be crawled if they are important. Or maybe you need to remove these pages from your sitemaps because they shouldn’t be crawled and indexed by search engines.
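One way to get a quick overview of what kind of pages are affected is to group the blocked URLs by their first path segment. The sketch below assumes you already have the blocked URLs as a plain list of strings (for example, copied from an export); the `count_by_section` helper and the sample URLs are hypothetical and not part of any JetOctopus feature.

```python
from collections import Counter
from urllib.parse import urlsplit


def count_by_section(urls):
    """Count URLs per top-level site section (the first path segment)."""
    counts = Counter()
    for url in urls:
        segments = [part for part in urlsplit(url).path.split("/") if part]
        section = "/" + segments[0] if segments else "/"
        counts[section] += 1
    return counts


# Hypothetical list of URLs reported as blocked by robots.txt.
blocked = [
    "https://example.com/cart/checkout",
    "https://example.com/cart/view",
    "https://example.com/search?q=shoes",
]

if __name__ == "__main__":
    for section, total in count_by_section(blocked).most_common():
        print(f"{section}: {total} blocked URL(s)")
```

A section with many blocked URLs, such as a cart or internal search, usually points to pages that should simply be removed from the sitemap, while a blocked section containing important content suggests the robots.txt rule itself needs a second look.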

Once you’ve decided what to do with these pages, you can update your sitemaps. This can be done by contacting the developers or by generating a new sitemap using JetOctopus.
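If you maintain the sitemap file yourself and decide to remove the blocked pages, the cleanup can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `drop_blocked` function, the sample rules, and the `example.com` URLs are invented for the example, and the standard-library `urllib.robotparser` does not handle wildcard rules the way Google does.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
# Keep the default sitemap namespace when the XML is written back out.
ET.register_namespace("", NS)


def drop_blocked(sitemap_xml: str, robots_txt: str, user_agent: str = "Googlebot") -> str:
    """Return the sitemap XML without <url> entries disallowed by robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    root = ET.fromstring(sitemap_xml)
    # Iterate over a copy of the list because entries are removed along the way.
    for url_el in list(root.findall(f"{{{NS}}}url")):
        loc = url_el.find(f"{{{NS}}}loc")
        if loc is not None and loc.text and not parser.can_fetch(user_agent, loc.text.strip()):
            root.remove(url_el)
    return ET.tostring(root, encoding="unicode")


# Hypothetical sample data, not taken from a real website.
ROBOTS_RULES = "User-agent: *\nDisallow: /cart/\n"
OLD_SITEMAP = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/products/shoes</loc></url>"
    "<url><loc>https://example.com/cart/checkout</loc></url>"
    "</urlset>"
)

if __name__ == "__main__":
    print(drop_blocked(OLD_SITEMAP, ROBOTS_RULES))
```

After cleaning the file, it is worth running the sitemap crawl again to confirm that no blocked URLs remain.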