Measuring Real Visibility in AI: Beyond Traditional Analytics

This article is based on the second part of the JetOctopus AI webinar series. You can read part one here.

AI visibility is less about rankings and increasingly about probability – the likelihood that an AI system will select, reference or paraphrase your content when responding to a user query.

For SEO specialists in 2026, this shift requires new metrics, new data sources and a rethinking of how visibility and performance are measured in AI-driven environments.

In the second part of the JetOctopus AI webinar series, Stanislav Dashevskyi, Head of SEO and Customer Success at JetOctopus, explored how to measure and interpret visibility in AI systems, using real log data and Search Console insights. The focus was practical: how to understand AI behavior, what signals matter and how SEO teams can adapt.

Recap: the AI landscape in 2026

SEO is not disappearing in 2026. Instead, it is evolving into a broader discipline where AI introduces an additional layer of responsibility rather than replacing traditional optimization.

The fundamentals remain unchanged: clean HTML, site speed, clear architecture, internal linking and well-optimized content are still essential. These factors matter just as much for AI systems as they do for traditional search engines.

What has changed is the addition of AI-specific signals. AI systems tend to prioritize factual correctness, real-world examples, citations, and content freshness. Unlike classic search algorithms that rely heavily on links and PageRank-style signals, AI tools use mathematical models to compare documents and evaluate relevance in context.

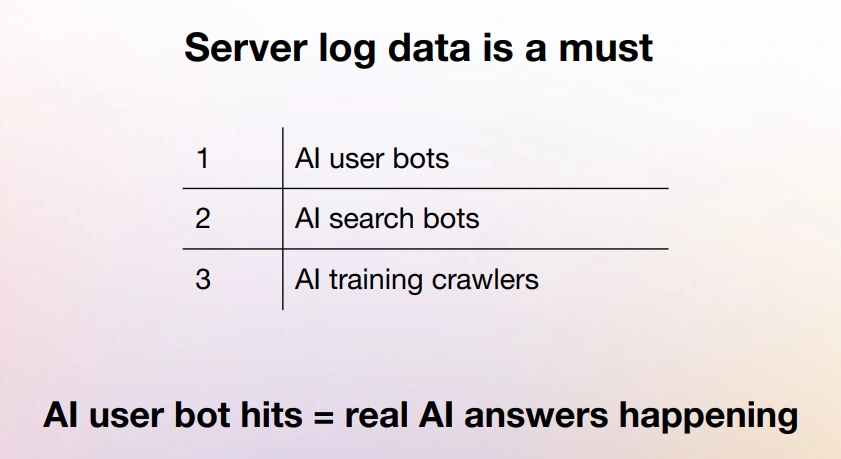

Server logs remain the most reliable source for understanding AI bot behavior. Without log analysis, AI activity stays largely invisible, and what cannot be measured cannot be optimized.

Major AI platforms and how they behave

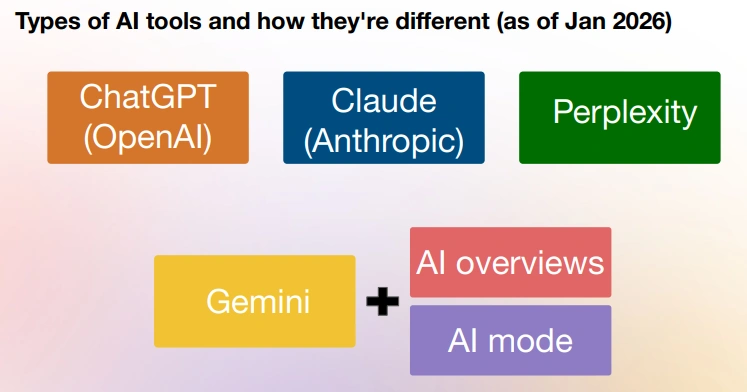

Today, three AI tools dominate most AI-driven search behavior: ChatGPT, Claude and Perplexity. Based on observed log data across large websites, ChatGPT currently generates the highest volume of AI bot activity.

These tools share several characteristics:

- They do not maintain traditional search indexes

- They perform searches on behalf of users

- They generally do not render JavaScript themselves

- They place strong emphasis on factual consistency and brand trust

There are also differences. Claude tends to include fewer outbound citations, while ChatGPT and Perplexity reference external sources more frequently.

A major development occurred when Google released Gemini, which operates with access to Google’s existing search index. Gemini does not introduce a new crawler; instead, it relies on a permission layer called Google-Extended, a robots.txt token that controls whether Googlebot-collected data may be used for training and answering queries.

This permission layer does not appear as a separate user agent in server logs, making it difficult to observe directly. However, Gemini can access JavaScript-rendered content that Google has already processed – a capability that gives it a structural advantage over standalone AI tools.
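Because Google-Extended is a robots.txt token rather than a crawler with its own user-agent string, its effect is easier to check in robots.txt than in logs. The sketch below uses Python's standard `urllib.robotparser` to show how a rule set can opt out of Google-Extended while leaving ordinary crawling open. The robots.txt content and the `example.com` URL are hypothetical and are not taken from the webinar.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: opt out of Google-Extended, allow everything else.
ROBOTS_TXT = """\
User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""


def allowed(robots_txt, agent, url):
    """Return True if `agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


url = "https://example.com/pricing"  # placeholder URL
google_extended_allowed = allowed(ROBOTS_TXT, "Google-Extended", url)
googlebot_allowed = allowed(ROBOTS_TXT, "Googlebot", url)
print(google_extended_allowed, googlebot_allowed)
```

With these rules the Google-Extended check is refused while Googlebot is still allowed, so the opt-out does not affect regular search crawling.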

Rethinking visibility metrics

Traditional SEO metrics such as rankings, clicks and links do not fully translate to AI visibility.

In an AI context, visibility means:

- How often AI bots crawl and revisit your pages

- How frequently your content is cited, paraphrased or referenced

- How prominently your brand appears in AI-generated answers

Because AI systems can produce different responses to the same query, visibility becomes probabilistic. It reflects the chance that your content will be selected when an AI answers a user question.
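One way to make this probabilistic view concrete is to repeat the same prompt several times and record whether each answer mentions your brand or page. The sketch below assumes you already have such a list of outcomes; how the answers are collected is out of scope here. It reports the observed share with a Wilson score interval, a standard way to show how uncertain a small sample is. The function name and the sample data are illustrative, not something described in the webinar.

```python
import math


def selection_rate(cited, z=1.96):
    """Return (share, low, high): observed share and a Wilson score interval.

    `cited` is a list of booleans, one per sampled AI answer.
    `z` = 1.96 corresponds to roughly 95% confidence.
    """
    n = len(cited)
    if n == 0:
        raise ValueError("need at least one sampled answer")
    p = sum(cited) / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    margin = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return p, max(0.0, center - margin), min(1.0, center + margin)


# Hypothetical sample: the brand appeared in 7 of 20 answers to one prompt.
answers = [True] * 7 + [False] * 13
share, low, high = selection_rate(answers)
print(f"share={share:.2f}, interval=({low:.2f}, {high:.2f})")
```

The interval is wide for a sample this small, which is a reminder that a handful of manual checks says little about how often content is actually selected.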

Log-based analysis suggests that AI platforms typically rely on a relatively narrow portion of the web – slightly broader than the traditional top 10 search results, but still limited in scope.

The evolving conversion funnel

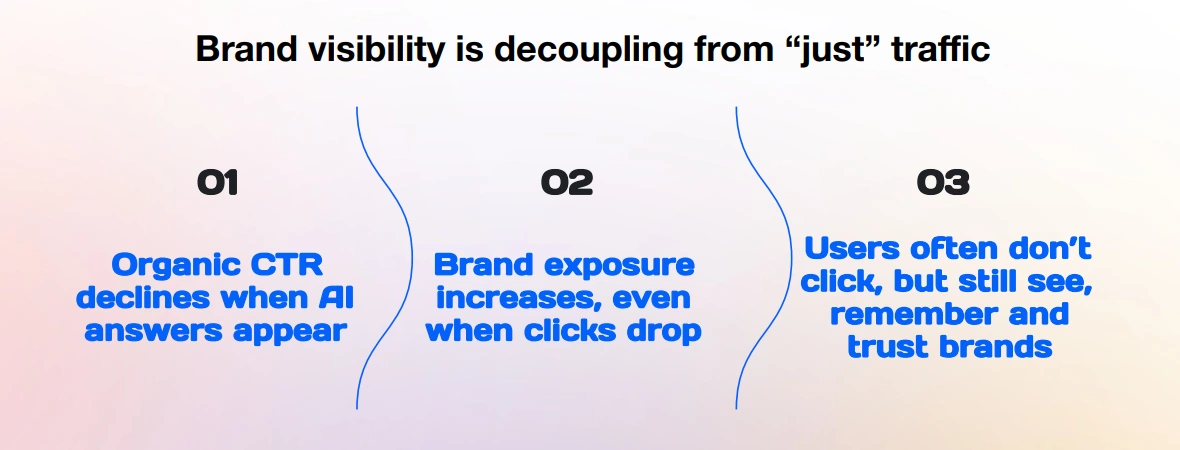

AI changes how users discover and interact with brands. When AI answers appear, organic click-through rates often decline, but brand exposure does not necessarily decrease.

Instead, users are exposed to brand names, concepts and citations directly in AI interfaces. This visibility can later translate into direct traffic, branded searches and higher-intent visits.

As Stanislav explained during the webinar, this effect can be compared to paid display ads – except the exposure is organic and data-driven rather than bought.

When websites increase AI visibility, several patterns are commonly observed:

- Organic sessions may flatten or decline

- Direct traffic remains stable or increases

- Branded searches grow

- Time on site increases

- Bounce rates decrease

- Conversion rates often improve

These changes occur because users who click through after encountering AI answers tend to be more informed and motivated. Meanwhile, low-intent users often remain within AI interfaces, reducing unqualified traffic.

Observed click-out rates from AI interfaces remain very low – often around 1%, which further increases the importance of brand exposure.

Why server logs matter

Server logs provide the clearest window into AI behavior.

Log analysis allows SEO teams to understand:

- Whether AI bots visit pages during user-initiated research

- Whether content is revisited for updates or verification

- Which pages are favored or ignored by AI systems

AI user-bot visits (requests an AI tool makes while answering a specific user's prompt) can be interpreted as a proxy for impressions within AI interfaces. When analyzed at the URL level, this data reveals which content AI systems rely on and where optimization opportunities exist.

Some pages may perform well in traditional search but lack the structure or citations AI systems prefer. Others may not rank highly yet still appear well-suited for AI-generated answers. Logs make these gaps visible.
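As a rough illustration of URL-level log analysis, the sketch below counts AI bot hits per path in combined-format access log lines and separates user-triggered bots from background crawlers. The user-agent tokens are assumptions based on names the vendors have published and should be checked against current vendor documentation. The log lines are made up, with simplified user-agent strings. This is not how JetOctopus processes logs.

```python
import re
from collections import Counter

# Assumed user-agent tokens; check each vendor's current documentation.
AI_USER_BOTS = ("ChatGPT-User", "Perplexity-User", "Claude-User")
AI_CRAWLERS = ("GPTBot", "OAI-SearchBot", "ClaudeBot", "PerplexityBot")

# Matches request, status, size, referrer and user agent of a combined-format line.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def classify(agent):
    """Return (kind, token) for a known AI bot, or None for other traffic."""
    lowered = agent.lower()
    for kind, tokens in (("user", AI_USER_BOTS), ("crawler", AI_CRAWLERS)):
        for token in tokens:
            if token.lower() in lowered:
                return kind, token
    return None


def count_ai_hits(lines):
    """Count hits per (path, kind, token), ignoring non-AI traffic."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match is None:
            continue
        bot = classify(match["agent"])
        if bot is not None:
            hits[(match["path"], *bot)] += 1
    return hits


# Hypothetical log lines with simplified user-agent strings.
SAMPLE_LOG = [
    '203.0.113.5 - - [12/Jan/2026:10:00:01 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; ChatGPT-User/1.0)"',
    '203.0.113.6 - - [12/Jan/2026:10:02:17 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; ChatGPT-User/1.0)"',
    '198.51.100.9 - - [12/Jan/2026:10:05:44 +0000] "GET /blog/post HTTP/1.1" 200 9321 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '192.0.2.44 - - [12/Jan/2026:10:06:02 +0000] "GET /pricing HTTP/1.1" 200 5120 "https://example.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

ai_hits = count_ai_hits(SAMPLE_LOG)
for key, count in ai_hits.most_common():
    print(key, count)
```

User-agent strings can be spoofed, so in practice these counts are usually combined with IP or reverse-DNS verification before they are trusted.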

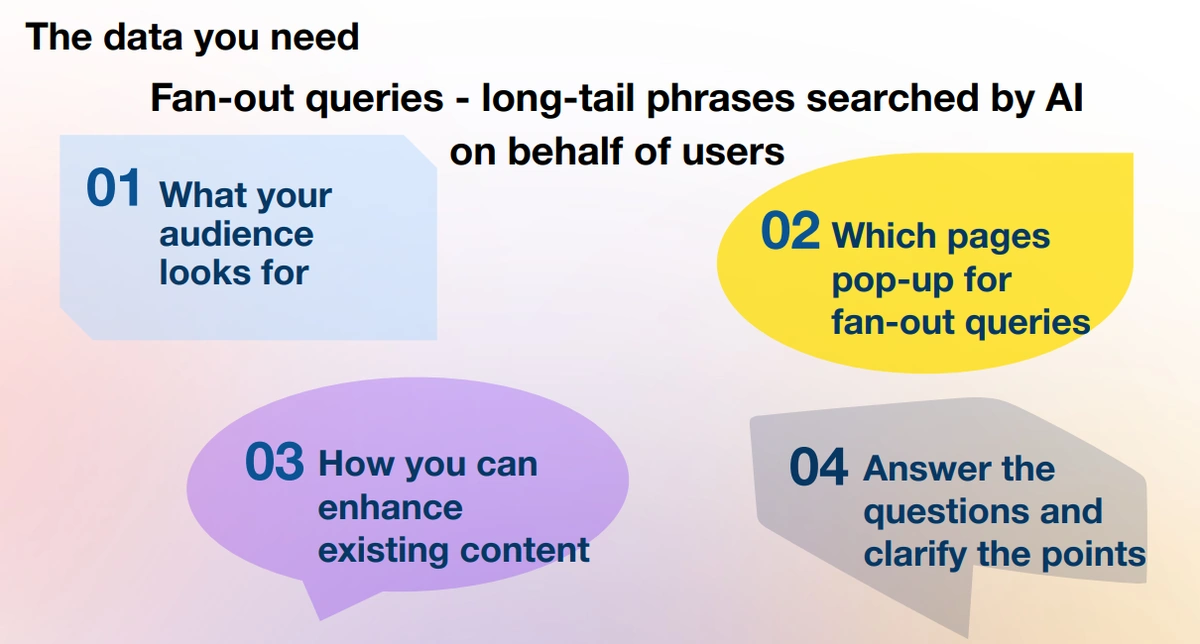

Fan-out queries: understanding real user intent

Fan-out queries are searches performed by AI tools on behalf of real users.

Based on observed data, roughly half of Googlebot-level activity now involves AI-related crawling. Within that activity, a significant portion corresponds to user-initiated searches conducted through AI interfaces.

Fan-out queries are typically long, detailed and highly specific. They are visible in Google Search Console and provide valuable insight into how users think, what problems they face, and how they compare solutions.

Analyzing these queries allows SEO teams to:

- Address real user questions directly in content

- Improve FAQ sections and documentation

- Enhance product messaging and customer support

- Increase both AI and traditional search visibility

The growth of seven-to-eleven-word queries has accelerated since 2023, with a notable increase in 2025. This trend provides SEO specialists with a level of intent visibility that has been largely unavailable for years.

Fan-out queries offer a glimpse into the full decision-making path of users – from initial curiosity to specific product concerns.
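A simple first pass at surfacing likely fan-out queries is to filter a Search Console export by query length. The sketch below assumes rows with `query`, `page` and `impressions` fields and uses the seven-to-eleven-word range mentioned above. The rows are invented for illustration. Length alone does not prove a query came from an AI tool, so treat the result as a list of candidates to review.

```python
from collections import Counter


def word_count(query):
    """Number of whitespace-separated words in a query."""
    return len(query.split())


def fan_out_candidates(rows, min_words=7, max_words=11):
    """Keep rows whose query length falls in the given word range."""
    return [
        row for row in rows
        if min_words <= word_count(row["query"]) <= max_words
    ]


# Hypothetical Search Console rows (field names are an assumption).
ROWS = [
    {"query": "log analyzer", "page": "/", "impressions": 120},
    {"query": "how to check which ai bots crawl my website logs",
     "page": "/blog/ai-bots", "impressions": 4},
    {"query": "best seo crawler for large ecommerce sites with javascript",
     "page": "/crawler", "impressions": 2},
    {"query": "seo crawler pricing", "page": "/pricing", "impressions": 35},
]

candidates = fan_out_candidates(ROWS)

# Sum impressions of candidate queries per landing page.
impressions_by_page = Counter()
for row in candidates:
    impressions_by_page[row["page"]] += row["impressions"]

for page, total in impressions_by_page.most_common():
    print(page, total)
```

Grouping candidates by landing page shows which URLs already attract long, specific questions and where FAQ or documentation content may be worth expanding.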

How JetOctopus supports AI visibility analysis

JetOctopus provides tools designed to analyze AI bot behavior using real data.

The platform identifies:

- Which AI bots crawl your site

- Which pages they visit or revisit

- Status codes, errors and anomalies

- Differences in behavior across bots

Data is available at the URL level, enabling detailed analysis of bot activity, load times and crawl patterns.

JetOctopus also integrates Google Search Console data via API and BigQuery, making fan-out queries easier to identify and analyze. These queries are currently visible at the URL level and will soon have a dedicated interface within the platform.

By combining log data, referral traffic and search queries, JetOctopus provides a consolidated view of how AI-driven discovery influences user behavior.

New metrics that matter

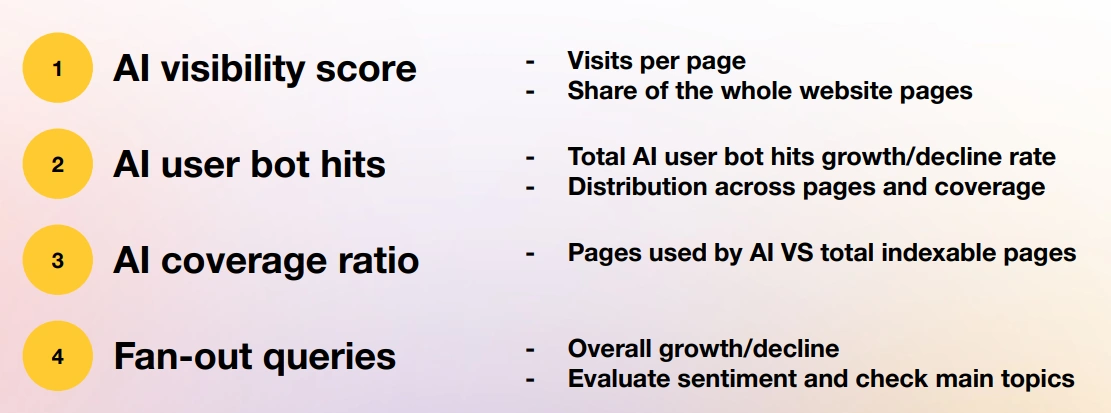

Several emerging metrics are becoming relevant for AI visibility:

- Pages visited by AI bots – measured relative to indexable or total pages

- AI user-bot hits – a proxy for brand exposure in AI interfaces

- AI coverage ratio – the share of pages accessible and usable by AI systems

- Fan-out query volume – tracked over time for growth, sentiment and intent shifts

These metrics help teams continuously refine content and strategy throughout 2026 and beyond.
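The article does not give formulas for these metrics, so the sketch below is one possible reading rather than JetOctopus's definitions. It takes the share of pages visited by AI bots as visited indexable pages divided by all indexable pages, and the AI coverage ratio as the share of indexable pages flagged as accessible to AI bots. The page records and field names are hypothetical.

```python
def ai_visibility_metrics(pages):
    """Compute illustrative AI visibility metrics from per-page records.

    Each record is assumed to have: indexable (bool), ai_accessible (bool),
    ai_crawler_hits (int) and ai_user_hits (int).
    """
    indexable = [p for p in pages.values() if p["indexable"]]
    if not indexable:
        raise ValueError("no indexable pages")
    visited = [
        p for p in indexable
        if p["ai_crawler_hits"] + p["ai_user_hits"] > 0
    ]
    accessible = [p for p in indexable if p["ai_accessible"]]
    return {
        "visited_share": len(visited) / len(indexable),
        "ai_user_hits": sum(p["ai_user_hits"] for p in pages.values()),
        "coverage_ratio": len(accessible) / len(indexable),
    }


# Hypothetical per-page data, e.g. joined from a crawl and server logs.
PAGES = {
    "/": {"indexable": True, "ai_accessible": True,
          "ai_crawler_hits": 12, "ai_user_hits": 5},
    "/pricing": {"indexable": True, "ai_accessible": True,
                 "ai_crawler_hits": 3, "ai_user_hits": 2},
    "/app/dashboard": {"indexable": True, "ai_accessible": False,
                       "ai_crawler_hits": 0, "ai_user_hits": 0},
    "/blog/old-post": {"indexable": True, "ai_accessible": True,
                       "ai_crawler_hits": 0, "ai_user_hits": 0},
    "/cart": {"indexable": False, "ai_accessible": True,
              "ai_crawler_hits": 1, "ai_user_hits": 0},
}

metrics = ai_visibility_metrics(PAGES)
print(metrics)
```

Tracking the same three numbers on a fixed schedule makes it easier to see whether content or technical changes shift AI bot behavior over time.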

From rankings to selection

“We’re no longer optimizing for rankings – we’re optimizing to be chosen,” Stanislav concluded.

In 2026, the most successful websites will not always be those ranking first, but those AI systems trust enough to reference when answering user questions.

That shift requires new data sources, new metrics and a deeper understanding of AI behavior – while still maintaining strong traditional SEO foundations.