How to analyze the URLs most and least visited by GoogleBot

Google and other search engines regularly crawl the pages of most websites. However, search engine resources are limited, so bots may crawl some pages very often and others extremely rarely.

Frequently crawled pages are usually more visible in SERPs, while the least crawled pages may even show outdated snippets. Therefore, it is important to monitor the pages that GoogleBot crawls most and least often. Monitoring the former helps you identify pages with the highest potential, while analyzing the latter shows pages that have little value for search engines. If you want the least visited pages to appear in search results, you need to improve them.

Using JetOctopus, you can quickly detect the URLs most and least visited by GoogleBot.

There are two ways to analyze the pages most and least visited by search robots. The first is to find pages that receive noticeably more or fewer search engine visits than average. The second is to use quantiles in filters.

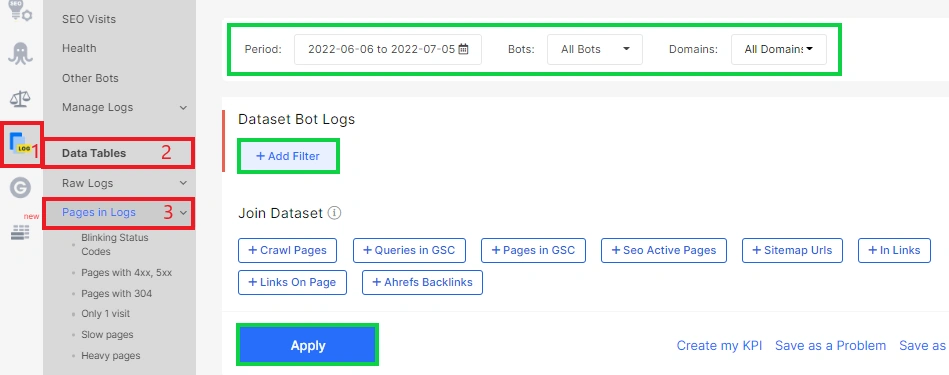

Let’s start with the first method. Go to the “Logs” menu and open the “Pages in Logs” data table. Set the desired period, then select the search engine type and the domain. You can also configure additional filters, for example, to check the most visited URLs among product or category pages.
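Conceptually, a table like this aggregates bot requests per URL for one search engine over the chosen period. The minimal Python sketch below illustrates that idea on raw data. It assumes a hypothetical, simplified log record of (date, URL, user agent) rather than a real access-log format, and it identifies the bot only by a user-agent substring; it is not how JetOctopus itself parses or verifies logs.

```python
from collections import Counter
from datetime import date

# Hypothetical simplified log record: (visit date, URL path, user-agent string).
LogRecord = tuple[date, str, str]


def count_bot_visits(records: list[LogRecord], start: date, end: date,
                     bot_token: str = "Googlebot") -> Counter:
    """Count visits per URL for records whose user agent contains bot_token
    and whose date falls within [start, end], inclusive."""
    counts = Counter()
    for visit_date, url, user_agent in records:
        # A substring match is only an approximation: genuine Googlebot
        # verification also needs a reverse DNS check, omitted here.
        if start <= visit_date <= end and bot_token in user_agent:
            counts[url] += 1
    return counts


records = [
    (date(2024, 5, 1), "/category/shoes", "Mozilla/5.0 (compatible; Googlebot/2.1)"),
    (date(2024, 5, 2), "/category/shoes", "Mozilla/5.0 (compatible; Googlebot/2.1)"),
    (date(2024, 5, 2), "/product/123", "Mozilla/5.0 (compatible; Googlebot/2.1)"),
    (date(2024, 5, 3), "/product/123", "Mozilla/5.0 (compatible; bingbot/2.0)"),
    (date(2024, 6, 1), "/product/456", "Mozilla/5.0 (compatible; Googlebot/2.1)"),
]

counts = count_bot_visits(records, date(2024, 5, 1), date(2024, 5, 31))
print(dict(counts))  # {'/category/shoes': 2, '/product/123': 1}
```

Changing `start`, `end`, or `bot_token` plays the same role as changing the period or search engine type in the table settings.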

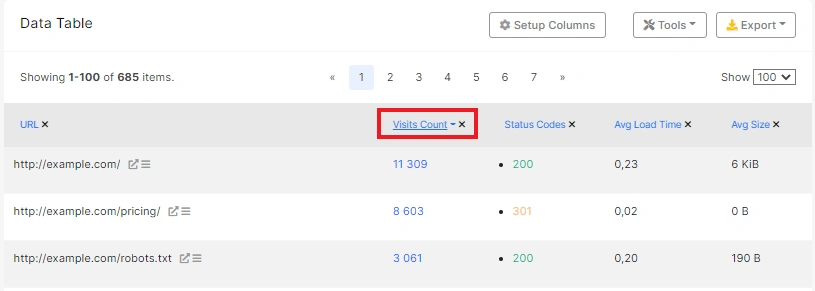

Next, click the “Visits Count” column header. The pages will be sorted by number of visits in descending order.

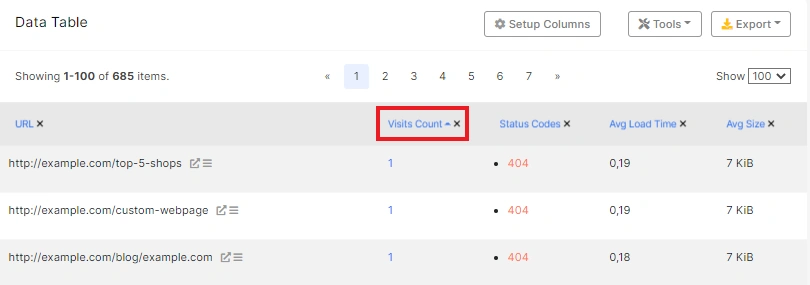

If you click again on “Visits Count”, the pages will be sorted in ascending order.
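If you export the table, the two sort orders are easy to reproduce outside the tool. A minimal sketch, assuming the export has been loaded into a hypothetical dict that maps each URL to its visit count (the URLs and numbers are made up):

```python
# Hypothetical per-URL visit counts, e.g. loaded from an exported table.
visits = {
    "/": 640,
    "/category/shoes": 250,
    "/product/123": 100,
    "/product/456": 9,
    "/blog/old-post": 1,
}

# Descending order, like the first click on the column header.
most_visited = sorted(visits.items(), key=lambda item: item[1], reverse=True)

# Ascending order, like the second click.
least_visited = sorted(visits.items(), key=lambda item: item[1])

print(most_visited[:2])   # [('/', 640), ('/category/shoes', 250)]
print(least_visited[:2])  # [('/blog/old-post', 1), ('/product/456', 9)]
```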

This way, you get the pages with the most and the fewest search robot visits. Now that you know how many visits the most and the least visited pages receive, you can filter pages by the number of visits.

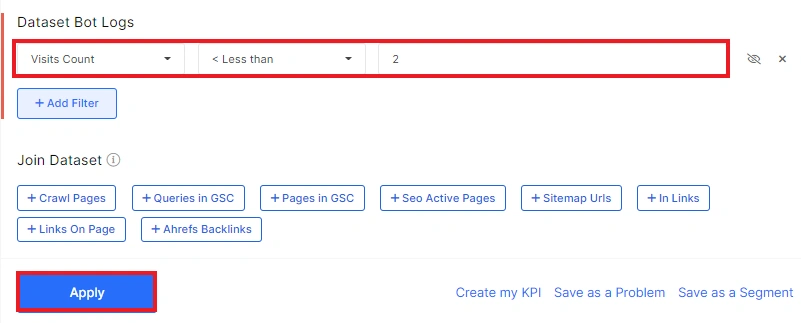

Click the “+Add filter” button, select “Visits Count”, and enter a value, for example, fewer than 2 visits or more than 600 visits. Then click “Apply”.
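The same threshold filters can be sketched on exported data. This assumes a hypothetical dict of URL to visit count; the thresholds 2 and 600 are the example values from the step above and should be adapted to your site.

```python
# Hypothetical per-URL visit counts, e.g. loaded from an exported table.
visits = {
    "/": 640,
    "/category/shoes": 250,
    "/product/123": 100,
    "/product/456": 9,
    "/blog/old-post": 1,
}

# Visits Count less than 2: pages the bot barely touches.
rarely_crawled = {url: n for url, n in visits.items() if n < 2}

# Visits Count more than 600: pages that absorb the most bot requests.
heavily_crawled = {url: n for url, n in visits.items() if n > 600}

print(rarely_crawled)   # {'/blog/old-post': 1}
print(heavily_crawled)  # {'/': 640}
```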

This is a convenient way to analyze the top 5, 10, or 100 pages that consume most of your website’s crawl budget.
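To judge how much of the crawl budget the top pages actually consume, you can compute their share of all bot visits. A minimal sketch, treating visit share as a rough proxy for crawl budget and assuming a hypothetical dict of URL to visit count:

```python
def top_n_share(visits: dict[str, int], n: int) -> float:
    """Fraction of all bot visits that go to the n most visited URLs."""
    total = sum(visits.values())
    if total == 0:
        return 0.0
    top = sorted(visits.values(), reverse=True)[:n]
    return sum(top) / total


# Hypothetical per-URL visit counts (1,000 visits in total).
visits = {
    "/": 640,
    "/category/shoes": 250,
    "/product/123": 100,
    "/product/456": 9,
    "/blog/old-post": 1,
}

print(f"{top_n_share(visits, 2):.0%}")  # 89%
```

Here two URLs receive 890 of 1,000 visits, so almost all of the bot's attention goes to them.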

The second method shows all pages whose number of search engine visits falls within a given quantile of the visit counts across all pages. To use it, go to the “Pages in Logs” data table and configure the necessary filters, just as in the first method.

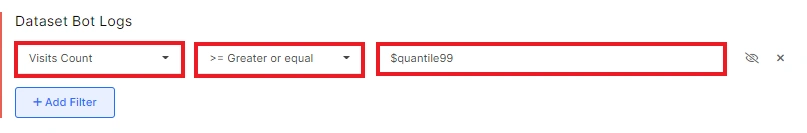

Next, click the “+Add filter” button and select the “Visits Count” filter. To see the least visited pages, use $quantile10 as the value (less or equal). This shows the pages whose visit count is at or below the 10th percentile, that is, roughly the 10% of pages with the fewest visits.

To find the most visited pages, use $quantile90 or $quantile99 (greater or equal). This filters the pages that search engines crawl most often: roughly the top 10% or top 1% of pages by number of visits.
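The quantile filters can be approximated on exported data with Python's standard `statistics.quantiles`. This is a sketch under assumptions: the visit counts and `/page-N` URLs are hypothetical, and the interpolation rule JetOctopus uses for `$quantile10`, `$quantile90`, and `$quantile99` is not stated here, so this example uses linear interpolation (`method="inclusive"`).

```python
import statistics

# Hypothetical visit counts for 20 URLs over the selected period.
counts = [1, 1, 2, 2, 3, 4, 5, 5, 6, 8, 10, 12, 15, 20, 25, 40, 60, 120, 300, 640]
visits = {f"/page-{i}": n for i, n in enumerate(counts)}

# 99 cut points: percentiles[k - 1] is the k-th percentile.
# method="inclusive" interpolates linearly between the closest ranks.
# statistics.quantiles needs at least two data points.
percentiles = statistics.quantiles(list(visits.values()), n=100, method="inclusive")
q10, q90, q99 = percentiles[9], percentiles[89], percentiles[98]

least_visited = [url for url, n in visits.items() if n <= q10]    # like $quantile10, less or equal
most_visited_90 = [url for url, n in visits.items() if n >= q90]  # like $quantile90, greater or equal
most_visited_99 = [url for url, n in visits.items() if n >= q99]  # like $quantile99, greater or equal

print(q10, q90, q99)    # 1.9 138.0 575.4
print(least_visited)    # ['/page-0', '/page-1']
print(most_visited_90)  # ['/page-18', '/page-19']
print(most_visited_99)  # ['/page-19']
```

Because the comparisons are inclusive, ties matter: if many pages share the same low count, a "less or equal" filter can return noticeably more than 10% of pages.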

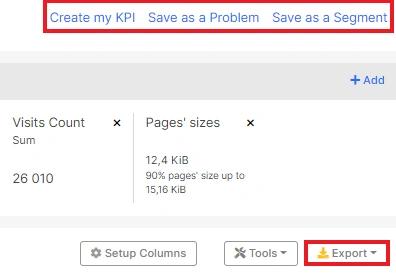

You can export all data in a convenient format. Feel free to create a segment, save a sample of URLs as a problem, or create a KPI.

Always make sure that the list of most visited pages includes the URLs that can bring the most traffic. It should not contain URLs that return non-200 status codes.
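This check can be scripted against an export that includes status codes. A minimal sketch, assuming hypothetical rows of (URL, bot visits, HTTP status returned to the bot):

```python
# Hypothetical rows: (URL, bot visits, HTTP status code returned to the bot).
rows = [
    ("/", 640, 200),
    ("/old-category", 410, 301),
    ("/category/shoes", 250, 200),
    ("/product/123", 100, 200),
    ("/search?q=shoes", 95, 404),
    ("/product/456", 9, 200),
]


def non_200_among_top(rows, n):
    """Return the rows among the n most visited URLs whose status is not 200."""
    top = sorted(rows, key=lambda row: row[1], reverse=True)[:n]
    return [row for row in top if row[2] != 200]


print(non_200_among_top(rows, 5))
# [('/old-category', 410, 301), ('/search?q=shoes', 95, 404)]
```

Any row returned here is a page that consumes bot visits without serving content with a 200 response.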

The least visited pages should not include pages that contain useful content, are regularly updated, and should rank higher in SERPs.
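One way to check this is to compare a list of pages you care about with the low-visit set. A minimal sketch, assuming a hypothetical set of priority URLs and a hypothetical visit cutoff of your choosing. URLs that bots never requested do not appear in log data at all, so they are counted as 0 visits here.

```python
# Hypothetical per-URL visit counts from the logs.
visits = {
    "/": 640,
    "/category/shoes": 250,
    "/product/123": 100,
    "/product/456": 9,
    "/blog/old-post": 1,
}

# Hypothetical pages with useful, regularly updated content.
priority_urls = {"/category/shoes", "/product/456", "/blog/new-guide"}

# Hypothetical cutoff for "least visited".
cutoff = 10

# URLs missing from the logs had no bot visits in the period, so default to 0.
neglected = sorted(url for url in priority_urls if visits.get(url, 0) <= cutoff)

print(neglected)  # ['/blog/new-guide', '/product/456']
```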