Optimizing search bot navigation: key considerations for the ideal crawl path

How navigation is organized on your website affects how well its pages rank. In this article, we explain what to pay attention to in order to make your website bot-friendly. Following these recommendations will help search bots crawl your website along the best possible path.

What is a bot-friendly site?

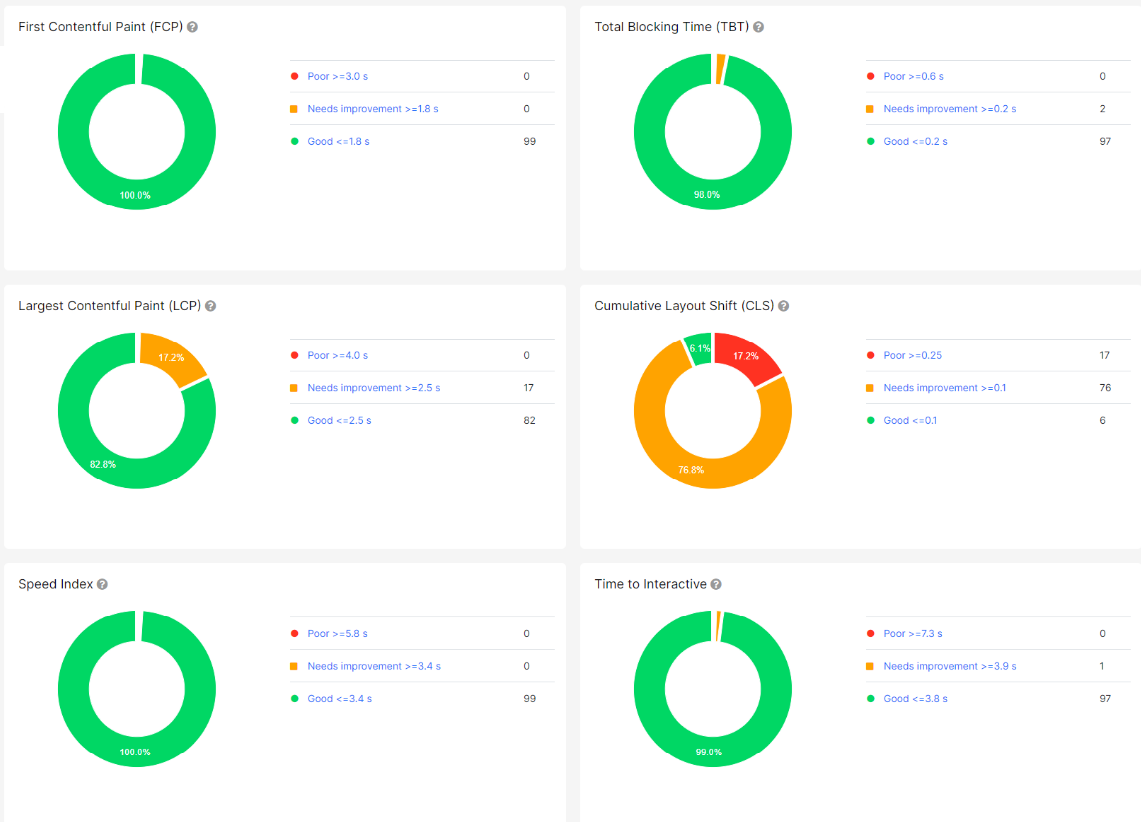

When we talk about a bot-friendly site, we no longer imagine a simple HTML page without unnecessary details. Nowadays, a bot-friendly page should also be user-friendly, because user experience has a significant impact on rankings, particularly in terms of performance and page speed. Google takes Core Web Vitals results for real users into account.

However, there is a difference: a page for bots should also have well-structured, readable HTML code, which users neither see nor care about. Bots read the content of the page from the HTML code, as it is their primary source for navigation.

The ideal path for successful crawling by Googlebot

So what should be the ideal way for search bots to crawl your website successfully?

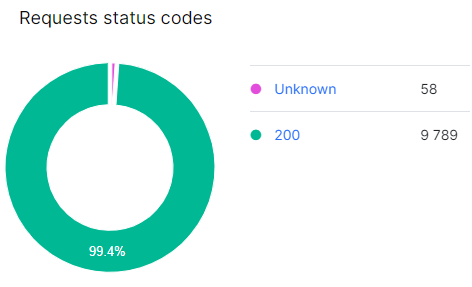

Firstly, links must be crawlable for bots. This means that every URL must be in the href attribute of an <a> element; otherwise, Google will not be able to extract and crawl it. These links should be placed across the pages of your website so that the bot can find them easily. However, if the pages the links point to are blocked by the robots.txt file, the bot will not be able to access them. Therefore, the second rule is to make sure pages are available for crawling: check that they return a 200 status code for bots and are not blocked by the robots.txt file.
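Both rules can be illustrated with a small script. The following is a minimal sketch using only the Python standard library; the sample HTML, the robots.txt rules, and the example.com URLs are made up for illustration. Note that Python's `urllib.robotparser` does not match robots.txt rules exactly the way Googlebot does (for example, it has no wildcard support), so treat the result as a rough approximation.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


class LinkExtractor(HTMLParser):
    """Collects href values from <a> elements, the links a crawler can follow."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return  # URLs in onclick handlers, spans, etc. are ignored
        href = dict(attrs).get("href")
        if href:
            self.links.append(urljoin(self.base_url, href))


def crawlable_links(html, base_url, robots_txt, user_agent="Googlebot"):
    """Return (allowed, blocked) lists of the links found in <a href> elements."""
    extractor = LinkExtractor(base_url)
    extractor.feed(html)

    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())

    allowed = [u for u in extractor.links if rules.can_fetch(user_agent, u)]
    blocked = [u for u in extractor.links if not rules.can_fetch(user_agent, u)]
    return allowed, blocked


# Hypothetical page: the "Hats" URL is not inside an <a href>, so it is never extracted.
sample_html = """
<a href="/category/shoes">Shoes</a>
<span onclick="location.href='/category/hats'">Hats</span>
<a href="/cart">Cart</a>
"""
sample_robots = "User-agent: *\nDisallow: /cart\n"

allowed, blocked = crawlable_links(sample_html, "https://example.com/", sample_robots)
print("Crawlable:", allowed)
print("Blocked by robots.txt:", blocked)
```

In this sample, the hats category never shows up in either list because its URL exists only inside a JavaScript handler, which is exactly the situation the first rule warns about.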

Homepage

The search bot’s path starts from the homepage. Google states that pages linked from the homepage will be crawled more frequently. Therefore, it makes sense to feature the most important pages on the homepage, as they can bring more traffic from the search engine results pages (SERPs). This may not directly affect the ranking of these pages, but it helps important pages get crawled faster when they are updated.

Always prioritize placing important pages on the homepage. These could include categories or other pages relevant to high-frequency keywords.

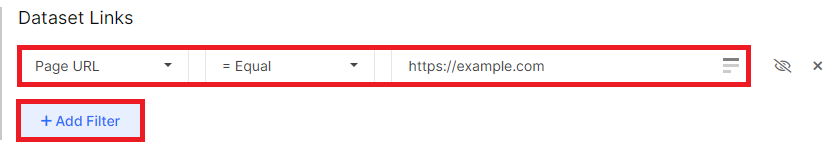

You can identify the links present on the homepage using JetOctopus. Simply go to the “Links” dataset, click the “+Add filter” button, and add the “Page URL” – “Equals” – “full link of the homepage” filter. However, pay attention to whether you are using the homepage link with or without a trailing slash.

As a result, you will see a list of outlinks from the homepage. Evaluate these pages and determine if they could be effective enough in the SERPs.

Internal linking

Internal linking plays a significant role in ensuring high-quality crawling of your website by bots. Because of its importance, we have dedicated several blog articles to this topic, and we highly recommend incorporating different types of internal linking. It’s crucial to remember that high-quality internal linking is also beneficial for users. For example, if you have an e-commerce website, you can use internal links that lead to related offers. Similarly, for an accommodation booking site, including links to nearby accommodations can be helpful.

When analyzing internal linking, explore how various types of pages are interlinked. For example, pay attention to the links between categories, offers and so on. This will assist in identifying areas where you can strengthen your internal linking strategy. It’s also worth examining the average number of internal links on all pages and how it impacts search performance. The “Deep Impact” report is an excellent starting point for conducting an initial analysis.

The “Deep Impact” report employs artificial intelligence to analyze your data and identify the technical SEO factors that have the most significant impact on your website’s SEO traffic. If links appear on this list, it signifies their importance as contributing factors.

Pagination

When considering the bot’s crawling path, it’s crucial to remember pagination. Pagination involves dividing large content into smaller sections using multiple pages. As a result, a substantial portion of your information may be spread across the second, third, fourth, or even hundredth page. Therefore, it’s essential to ensure search engines can easily access these pagination pages.

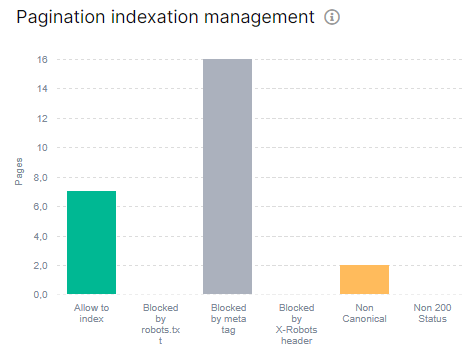

Firstly, ensure that all links to pagination pages are included in the HTML code as <a> elements with an href attribute. Secondly, make sure that pagination pages are indexable and that each one has a self-referencing canonical. It’s common for pagination to be made non-indexable, but doing so prevents Google from actively crawling and analyzing the content on these pages. You can test your pagination using JetOctopus in a dedicated report.

To do this, access the crawl results, navigate to the “Pagination” report, configure your own rule, and examine the number of pagination pages on your site. Ensure they are indexable and not blocked by the robots.txt file.
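If you want to spot-check a single pagination page by hand, the two conditions above (indexable and self-canonical) can be read straight from the HTML. This is a minimal sketch with the Python standard library, not a description of how the JetOctopus report works; the URLs and HTML snippets are hypothetical, and the check compares the canonical URL as an exact string.

```python
from html.parser import HTMLParser


class HeadSignals(HTMLParser):
    """Reads the canonical URL and the meta robots directives from a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.robots = ""

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.robots = (attrs.get("content") or "").lower()


def check_pagination_page(url, html):
    """Report whether a pagination page is self-canonical and indexable."""
    signals = HeadSignals()
    signals.feed(html)
    return {
        "self_canonical": signals.canonical == url,
        "indexable": "noindex" not in signals.robots,
    }


page_2 = "https://example.com/shoes?page=2"

# Page 2 points to itself and allows indexing.
good_html = (
    '<head><link rel="canonical" href="https://example.com/shoes?page=2">'
    '<meta name="robots" content="index, follow"></head>'
)
# Page 2 is canonicalized to page 1 and marked noindex.
bad_html = (
    '<head><link rel="canonical" href="https://example.com/shoes">'
    '<meta name="robots" content="noindex, follow"></head>'
)

print(check_pagination_page(page_2, good_html))
print(check_pagination_page(page_2, bad_html))
```

A page with no canonical tag at all is reported here as not self-canonical; whether that counts as a problem on your site is a judgment call.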

Navigation

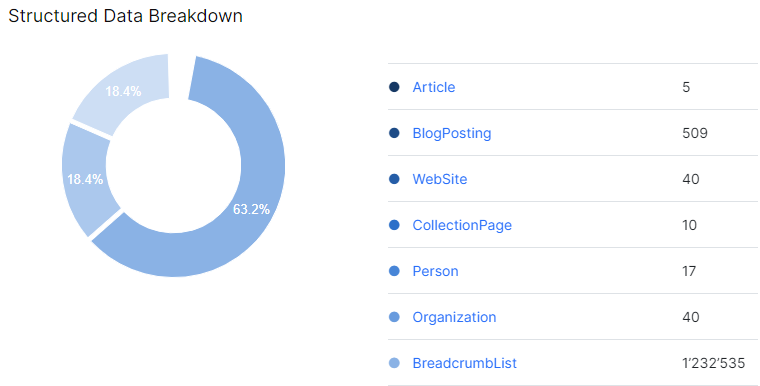

Next, let’s discuss the importance of breadcrumbs, headings, and structured data, particularly BreadcrumbList, for both bots and users. While all these elements are crucial, let’s focus on their significance for bots.

Bots rely on HTML code to understand the information present on your site. Therefore, it’s vital to convey specific details to bots using breadcrumbs, headings, and structured data.

Firstly, breadcrumbs help establish the structure and hierarchy of your website, guiding the bot. Secondly, headings assist the bot in understanding the key information on a page, which might not be apparent from the textual content alone. Lastly, structured data helps bots identify the most important information on a page and display it in a snippet.

By the way, JetOctopus recently introduced a new report for analyzing structured data. This report allows you to identify the types of pages where structured data is present.

We highly recommend incorporating breadcrumbs on each page, using unique and clear headings, particularly the H1 heading, and ensuring that structured data matches the respective page type. Additionally, make sure that structured data contains only URLs that return a 200 status code. These practices will help bots understand the structure of your website and optimize the crawl budget.
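As an illustration of breadcrumb structured data, here is a minimal sketch that builds schema.org BreadcrumbList markup as JSON-LD from a breadcrumb trail. The `breadcrumb_jsonld` helper and the example.com trail are assumptions made for this example; in practice the output would be placed in a `<script type="application/ld+json">` element on the page.

```python
import json


def breadcrumb_jsonld(trail):
    """Build BreadcrumbList markup from (name, url) pairs ordered from the homepage down."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }


# Hypothetical trail for a product category page.
trail = [
    ("Home", "https://example.com/"),
    ("Shoes", "https://example.com/shoes"),
    ("Sneakers", "https://example.com/shoes/sneakers"),
]

markup = breadcrumb_jsonld(trail)
print(json.dumps(markup, indent=2))
```

Every URL in the trail should be one that returns a 200 status code, in line with the recommendation above.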

Redirects and redirect chains

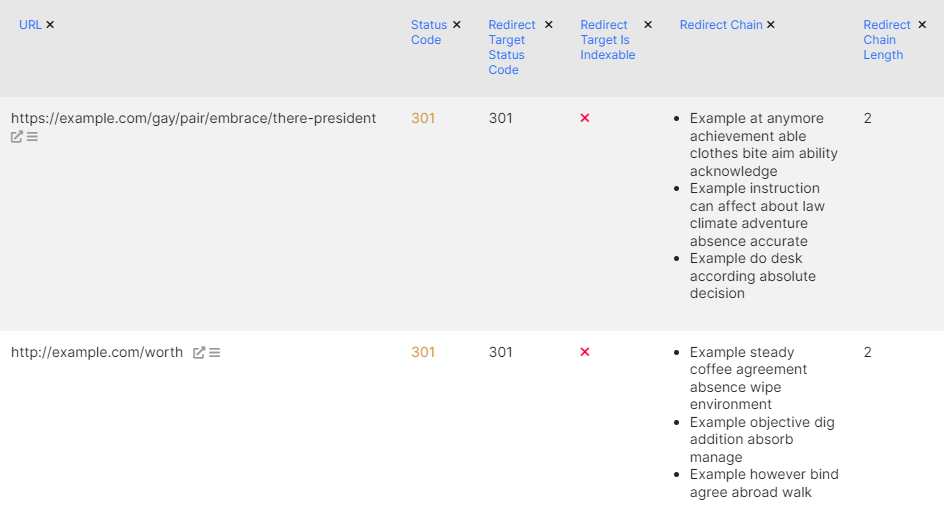

Another critical factor that can hinder the smooth crawling of your website by search bots is the presence of redirects and redirect chains. If your site has many redirects or long redirect chains, it is essential to address them promptly. A long redirect chain can cause search bots to give up before reaching the redirect target page. Additionally, processing redirects consumes more search engine resources. To ensure optimal crawling, replace links in the HTML code that point to redirecting pages with direct links to the final destination, which should return a 200 status code.

To identify and address redirect chains, you can use the “Redirect Chains” dataset provided by JetOctopus. This report shows the number of pages with redirect chains and how many redirects each chain contains.
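The idea of replacing redirected links with their final destination can be sketched in a few lines. The example below works on a hypothetical, hard-coded table of responses (URL mapped to status code and Location header) rather than live HTTP requests, so the function names and URLs are illustrative only.

```python
REDIRECT_CODES = {301, 302, 303, 307, 308}


def resolve_redirects(url, responses, max_hops=10):
    """Follow redirects and return (chain, final_status).

    `responses` maps a URL to (status_code, location_or_None).
    final_status is None for a redirect loop or when max_hops is exceeded.
    """
    chain = [url]
    seen = {url}
    while len(chain) <= max_hops:
        status, location = responses.get(chain[-1], (404, None))
        if status not in REDIRECT_CODES or location is None:
            return chain, status
        if location in seen:
            return chain + [location], None  # redirect loop
        chain.append(location)
        seen.add(location)
    return chain, None  # chain is longer than max_hops


def links_to_update(internal_links, responses):
    """Map each redirecting link to the final 200 URL it should be replaced with."""
    updates = {}
    for link in internal_links:
        chain, status = resolve_redirects(link, responses)
        if len(chain) > 1 and status == 200:
            updates[link] = chain[-1]
    return updates


# Hypothetical crawl data: /old redirects twice before reaching /new.
responses = {
    "https://example.com/old": (301, "https://example.com/older"),
    "https://example.com/older": (302, "https://example.com/new"),
    "https://example.com/new": (200, None),
}

chain, status = resolve_redirects("https://example.com/old", responses)
print(f"{len(chain) - 1} redirects, final status {status}")
print(links_to_update(["https://example.com/old", "https://example.com/new"], responses))
```

The number of redirects in a chain is simply the chain length minus one, which is the figure you would compare against the report.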

XML sitemaps

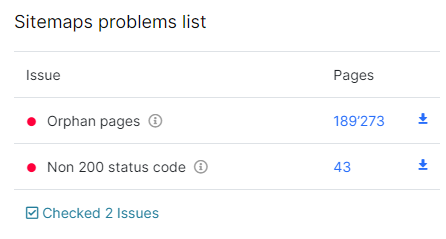

There are various sources from which search bots can discover links to your website, and sitemaps are one of them. It is crucial to regularly check your sitemaps to ensure they contain only indexable pages and that these pages are not blocked by the robots.txt file. Additionally, verify that the pages in the sitemaps return a 200 status code and have appropriate “index” meta robots tags. Neglecting these factors can lead to issues, as search bots may encounter broken URLs within the sitemaps, wasting valuable crawl budget.

To optimize crawling and ensure a healthy website, monitor the status of your sitemaps. Pay attention to the following details.

- Check that your sitemaps include indexable pages, allowing search bots to properly crawl and index the content.

- Confirm that the pages in your sitemaps are not blocked by the robots.txt file, granting search bots access to them.

- Ensure that the pages listed in your sitemaps return a 200 status code, indicating their availability and proper functioning.

To assist with this process, JetOctopus provides a dedicated report for analyzing sitemaps. Utilize this report to identify any broken or non-indexable URLs within your sitemaps.
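The three checks from the list above can also be sketched as a small script. This is a simplified illustration with the Python standard library: the sitemap, the robots.txt rules, and the `crawl_data` table (status code and meta robots value per URL) are hypothetical sample data, and Python's robots.txt matching is only an approximation of Googlebot's.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Extract the <loc> values from an XML sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def audit_sitemap(sitemap_xml, robots_txt, crawl_data, user_agent="Googlebot"):
    """Return {url: [issues]} for sitemap URLs that fail any of the three checks."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())

    problems = {}
    for url in sitemap_urls(sitemap_xml):
        status, meta_robots = crawl_data.get(url, (None, ""))
        issues = []
        if not rules.can_fetch(user_agent, url):
            issues.append("blocked by robots.txt")
        if status != 200:
            issues.append(f"status {status}")
        if "noindex" in meta_robots.lower():
            issues.append("noindex")
        if issues:
            problems[url] = issues
    return problems


sample_sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/shoes</loc></url>
  <url><loc>https://example.com/cart</loc></url>
  <url><loc>https://example.com/old-sale</loc></url>
  <url><loc>https://example.com/filter</loc></url>
</urlset>"""
sample_robots = "User-agent: *\nDisallow: /cart\n"
crawl_data = {
    "https://example.com/shoes": (200, "index, follow"),
    "https://example.com/cart": (200, "index, follow"),
    "https://example.com/old-sale": (404, ""),
    "https://example.com/filter": (200, "noindex, follow"),
}

report = audit_sitemap(sample_sitemap, sample_robots, crawl_data)
for url, issues in report.items():
    print(url, "->", ", ".join(issues))
```

Only URLs with at least one issue appear in the report, so an empty result means the sitemap passed all three checks for the data provided.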

Clean website hierarchy

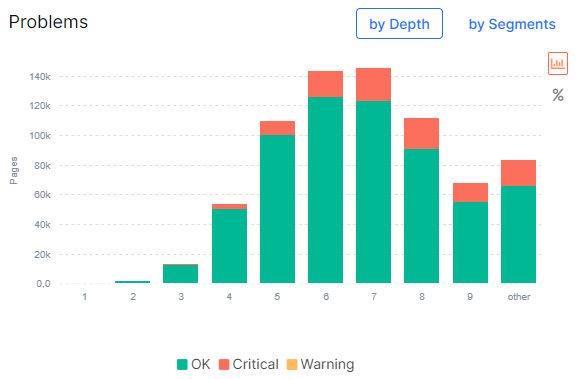

Maintaining a clean and logical website hierarchy is crucial for facilitating effective crawling by bots. A well-organized hierarchy enhances the crawling process and improves user experience. Conversely, a confusing hierarchy can impede crawling and frustrate users, as they may struggle to navigate and find desired pages buried deep within the site.

To establish a clean website hierarchy and optimize crawling, consider the following points.

- Distance from the homepage: be mindful of the distance between pages and the homepage. Pages located farther away from the homepage may be less discoverable by search engines and users. Aim to strike a balance between depth and accessibility.

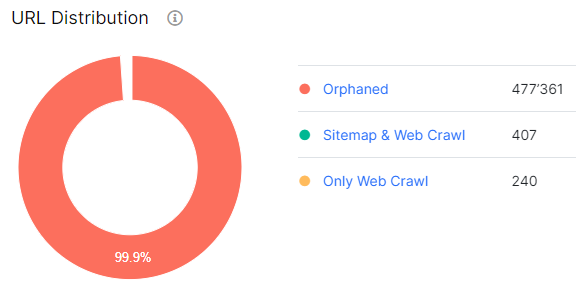

- Orphaned pages: monitor and address any orphaned pages on your site. Orphaned pages lack internal links pointing to them, making it challenging for search engines and users to find them. Ensure that all pages have proper internal linking to maximize their visibility and usefulness.

- Comprehensive internal linking: use internal linking effectively by linking to every indexable URL on your website. Avoid having URLs with only one internal link pointing to them; add more internal links to each such page.

JetOctopus offers tools to check for orphaned pages and analyze internal links. Take advantage of these features to identify any orphaned pages and optimize your internal linking structure.
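To make the three points above concrete, here is a minimal sketch that computes them from a link graph: distance from the homepage (with a breadth-first search), orphaned pages, and pages with only one internal link. The link graph and the list of known URLs (for example, taken from sitemaps or logs) are hypothetical sample data, and this is not a description of how JetOctopus calculates these values.

```python
from collections import Counter, deque


def click_depths(links, homepage):
    """Breadth-first search: minimum number of clicks from the homepage to each page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def inlink_counts(links):
    """Count how many distinct pages link to each URL (self-links are ignored)."""
    counts = Counter()
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


def orphaned_pages(known_urls, links, homepage):
    """Known URLs that no crawled page links to (the homepage is excluded)."""
    counts = inlink_counts(links)
    return sorted(u for u in known_urls if u != homepage and counts[u] == 0)


# Hypothetical link graph: page -> pages it links to.
links = {
    "/": ["/shoes", "/hats"],
    "/shoes": ["/shoes/sneakers", "/"],
    "/hats": ["/"],
    "/shoes/sneakers": ["/shoes"],
}
# URLs known from another source, such as sitemaps or logs.
known_urls = ["/", "/shoes", "/hats", "/shoes/sneakers", "/landing/old-promo"]

depths = click_depths(links, "/")
orphans = orphaned_pages(known_urls, links, "/")
weakly_linked = sorted(u for u, n in inlink_counts(links).items() if n == 1)

print("Click depth:", depths)
print("Orphaned:", orphans)
print("Only one internal link:", weakly_linked)
```

Orphaned pages never appear in the depth results because the search cannot reach them from the homepage, which is why a second source of URLs is needed to find them.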

URL structure

Next, let’s discuss the importance of URL structure. While users may not pay much attention to it, a clear URL structure serves multiple purposes. It helps users who do look at the link understand where they are on the site, and it helps search engines identify and categorize the content. When creating URLs, ensure they are descriptive and relevant to the page’s topic. This applies not only to web page URLs but also to supporting resource URLs, such as images and videos. All URLs on your website should be easily understandable.

Duplicate content and technical pages

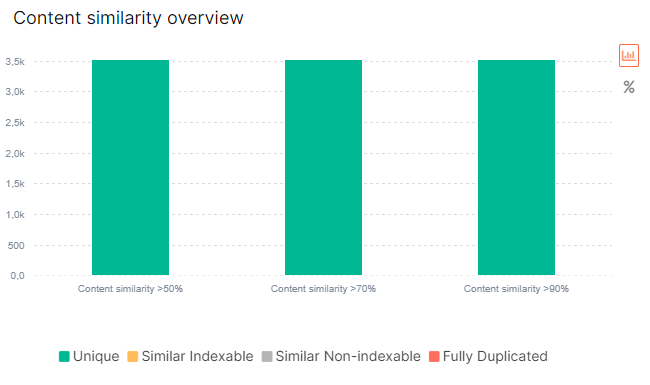

Duplicate content and technical pages can negatively impact the crawling process. Content management systems (CMS) sometimes generate technical pages that are duplicates, and there are many other reasons why duplicate content can appear on a website. While users may not notice the duplicate pages, search bots will still spend their crawl budget on them. It’s crucial to regularly check for duplicate pages on your site and take appropriate action, such as deleting them or, if they cannot be fixed, blocking them from being indexed.

JetOctopus provides a useful tool for identifying duplicate content. By running a full crawl of your website and accessing the “Duplications” dashboard, you can analyze duplicate pages that have identical content.

Evaluate these pages and decide whether to block, remove, or make them unindexable. Addressing duplicate content is vital for maintaining a healthy website.
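To show what “identical content” means in practice, here is a minimal sketch that groups pages by a hash of their normalized text. It only detects exact duplicates after whitespace and case normalization; it is an illustrative assumption, not the method behind the “Duplications” dashboard, and the sample pages are made up.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash the page text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def duplicate_groups(pages):
    """Return lists of URLs whose normalized content is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical extracted page texts: the sorted listing duplicates the category page.
pages = {
    "/shoes": "Red  sneakers, size 42",
    "/shoes?sort=price": "red sneakers, size 42\n",
    "/hats": "Blue hat",
}

duplicates = duplicate_groups(pages)
print(duplicates)
```

Near-duplicates, such as pages that differ by a single sentence, would need a similarity measure instead of an exact hash.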

JavaScript

Most websites today incorporate JavaScript, with some using server-side rendering (SSR) or dynamic rendering. Even when using JavaScript, you should ensure that your website meets all the requirements mentioned above. SSR is particularly advantageous, as it provides a fully rendered version of the page, enabling search bots to access all the necessary data without rendering it themselves, which takes more time.

If you use JavaScript, it’s crucial to ensure that all resources load correctly. Broken resources can negatively impact website performance. Check for broken resources, such as JavaScript files, images, and font files that return a 404 status code. JetOctopus offers a dedicated report for identifying broken resources if you have performed a JS crawl.

Website speed

The final aspect to consider for optimizing your website for bots is the Core Web Vitals metrics and page performance. Fast-loading pages that provide quick information retrieval to search bots positively impact your crawl budget. This means that search engines can promptly detect new pages and update indexed information for pages that have been updated. Therefore, prioritize optimizing load times and page speed.

JetOctopus provides the Core Web Vitals tool for checking the most important pages based on these metrics. The tool offers a distribution of pages according to Core Web Vitals metrics, allowing you to assess their performance.

If you notice a sudden drop in bot crawling or if search bots stop visiting your website altogether, there are two main causes to investigate. The first is that pages are blocked for bots: by the robots.txt file, by your website’s security system, or because the search bot receives a non-200 status code (e.g., 401 or 403). The second is that Googlebot is overloading your website, leading to decreased crawl efficiency. If this situation persists for more than two days, some pages may be de-indexed, especially those returning 500 or other 5xx status codes during search bot visits.