How to receive a quick notification if an important page is blocked by the robots.txt file

Crawling and discovery of a page by search bots is the very first stage of receiving organic traffic. If you want the page to appear in Google or other search engines’ results, it must be available for crawling. If an important page is blocked from crawling, it generally won’t appear in the SERP. One of the most common ways to prevent a page from being crawled is through a robots.txt file.

Changes to the robots.txt file can often make pages inaccessible for scanning, and as a result you may lose significant traffic. Therefore, it is important to monitor these changes. In this article, we will explain how to quickly receive a notification if a page becomes blocked by the robots.txt file.

How to check if a page is blocked in the robots.txt file at this moment

Before setting up notifications, check whether the page is currently blocked from scanning in the robots.txt file. If you don’t have a crawl scheduled, start a new one with the “Respect robots rules” checkbox deactivated. Once the crawl is complete, open the results and select the “Indexation” report.

In this report, you’ll find a grouped data table labeled “Blocked by robots.txt.” Click on “View Pages” to open the detailed data table and review all pages that search engines cannot scan because of the robots.txt file. Make sure that this list contains only unnecessary technical pages and pages you don’t want to be shown in the SERP.
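For a quick spot check of a few URLs outside the crawler, the same question can be answered with Python’s standard `urllib.robotparser` module. The snippet below is a minimal sketch: the robots.txt content, the URLs, and the `blocked_urls` helper are hypothetical examples, not part of the crawler. Note that this parser follows the original robots.txt convention and does not support wildcard patterns, so its verdict may differ from Google’s for rules that use `*` or `$`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content and URLs, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/category/shoes",
    "https://example.com/search?q=shoes",
]


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    # parse() accepts the file content as a list of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, IMPORTANT_URLS):
        print("Blocked by robots.txt:", url)
```

To check a live site instead, the parser’s `set_url()` and `read()` methods can download the robots.txt file before calling `can_fetch()`.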

Crawl notifications for important pages blocked by the robots.txt file

Now, let’s move to the next step – how to quickly receive notifications if a useful page becomes unavailable for scanning by Google due to a change in the robots.txt file.

You can take two approaches: set up alerts for the website crawl in general, set up alerts for a list of important pages, or use both types of alerts. Either way, you can receive a notification when a previously blocked page is no longer blocked or when a page that was allowed for scanning becomes prohibited.

The advantage of crawl alerts is that you can check any number of pages, but the frequency of checks depends on how often you run the crawl. It may not always be possible to run a crawl daily.

Let’s consider the first option: how to get a notification when a page becomes blocked by the robots.txt file.

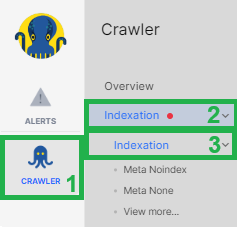

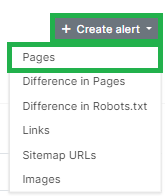

Go to alerts and select “Create a crawl schedule” if you haven’t already configured crawls for alerts. Then, click “Create an alert” and choose “Pages.”

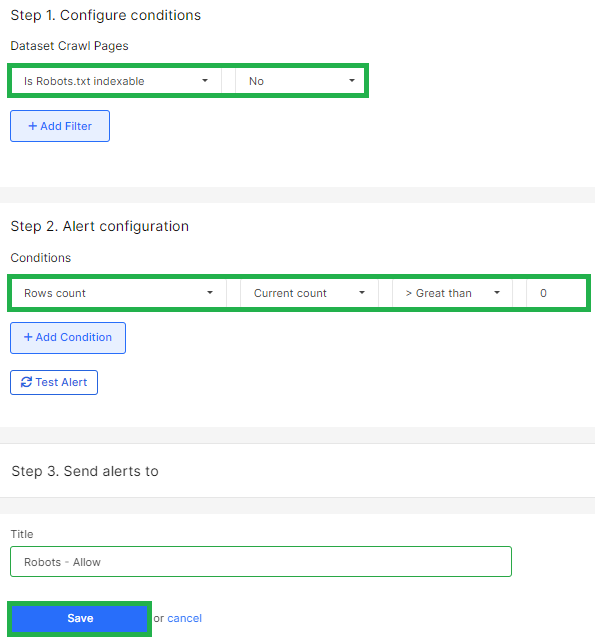

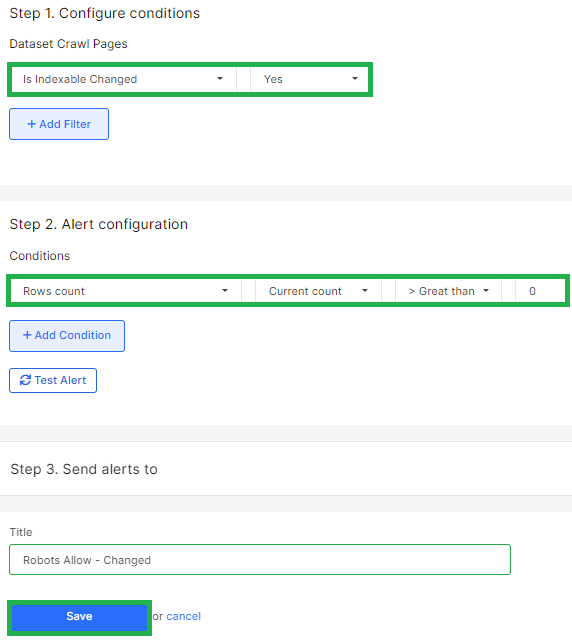

In the “Step 1: Configure conditions” block, add the filter “Is Robots.txt indexable” – “No” (this way, you will receive notifications about pages that are prohibited from being scanned). In the “Step 2: Alert configuration” block, select the filter “Rows count” – “Current count” – “> Greater than” – “0” to receive a notification if at least one page is blocked by the robots file.
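The two steps combine into a simple rule: first filter the crawled pages by the condition, then compare the number of matching rows with the threshold. The sketch below only illustrates that logic. The `Page` class, its `robots_txt_indexable` field, and the `should_alert` function are hypothetical names chosen for this example and do not describe how the alerting tool is implemented.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    # Hypothetical field mirroring the "Is Robots.txt indexable" filter.
    robots_txt_indexable: bool


def should_alert(pages: list[Page], threshold: int = 0) -> tuple[bool, list[str]]:
    """Step 1: keep pages blocked by robots.txt. Step 2: fire if rows count > threshold."""
    matching = [page.url for page in pages if not page.robots_txt_indexable]
    return len(matching) > threshold, matching


if __name__ == "__main__":
    crawl = [
        Page("https://example.com/", True),
        Page("https://example.com/pricing", False),
    ]
    fire, urls = should_alert(crawl)
    if fire:
        print("Alert: pages blocked by robots.txt:", urls)
```

With a threshold of 0, a single blocked page is enough to trigger the notification, which matches the “Greater than” – “0” setting described above.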

Next, give the alert a name and specify where the alert should be sent.

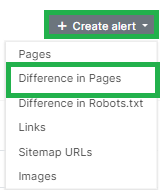

Now, let’s configure the notification that will alert you if the status of a page has changed – either becoming blocked from scanning or, conversely, becoming unblocked. To do this, go to the “Crawl Alerts” block and adjust the crawl schedule if needed. Click the “Create alert” button and select “Difference in Pages.”

In the “Step 1: Configure conditions” block, select “Is Indexable Changed” – “Yes.” In the “Step 2: Alert configuration” block, select the filter “Rows count” – “Current count” – “> Greater than” – “0.” Set up the remaining details, such as the alert name and where it should be sent.

You will receive a notification if the indexability status of the page changes. This includes cases where the page is blocked using the Meta Robots tag, X-Robots-Tag, or the Robots.txt file. If anything changes in these parameters compared to the previous crawl, you will receive a notification.
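Conceptually, this kind of alert compares the indexability of each page in the current crawl with its indexability in the previous one. The following sketch shows one way such a comparison could work, assuming each crawl is available as a dictionary keyed by URL. The field names (`robots_txt_allowed`, `meta_robots`, `x_robots_tag`) and both functions are hypothetical, and the directive parsing is deliberately simplified (for example, it ignores user-agent prefixes in X-Robots-Tag).

```python
def is_indexable(page: dict) -> bool:
    """A page counts as indexable only if none of the three signals blocks it."""
    allowed = page.get("robots_txt_allowed", True)
    # Collect directives from the Meta Robots tag and the X-Robots-Tag header.
    tokens = {
        token.strip().lower()
        for value in (page.get("meta_robots", ""), page.get("x_robots_tag", ""))
        for token in value.split(",")
    }
    # "none" is shorthand for "noindex, nofollow".
    return bool(allowed) and not (tokens & {"noindex", "none"})


def indexability_changes(previous: dict[str, dict], current: dict[str, dict]) -> dict[str, tuple[bool, bool]]:
    """Map each URL found in both crawls to (was_indexable, is_indexable) when they differ."""
    changes = {}
    for url in previous.keys() & current.keys():
        before, after = is_indexable(previous[url]), is_indexable(current[url])
        if before != after:
            changes[url] = (before, after)
    return changes


if __name__ == "__main__":
    previous = {"https://example.com/pricing": {"robots_txt_allowed": True}}
    current = {"https://example.com/pricing": {"robots_txt_allowed": False}}
    for url, (before, after) in indexability_changes(previous, current).items():
        print(f"{url}: indexable {before} -> {after}")
```

A non-empty result corresponds to the “Rows count” greater than 0 condition and covers both directions: pages that became blocked and pages that became unblocked.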

How to receive notifications about page blocking for Google

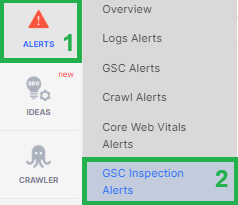

Another notification we recommend setting up is in the Google Search Console (GSC) Inspection Alerts. Google doesn’t always rescan pages immediately after the robots.txt file changes, and it may not always be feasible to run daily rescans. As a result, you may have already changed the rules in robots.txt so that the page is available to Google, but Google does not yet know about it and still considers the page blocked.

GSC Inspection Alerts help you check the page status as Google sees it, including the robots.txt state. You can set up an alert to receive a notification when the page status for Google changes (i.e., when the Google coverage status changes). This is especially useful for monitoring your most important pages.

We recommend setting up alerts in the Google Search Console Inspection Alerts section to receive notifications when the status of a page changes for Google.

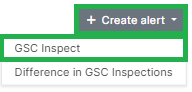

Create a review schedule if you don’t have one already. Then, click the “Create Alert” button and choose “GSC Inspect.”

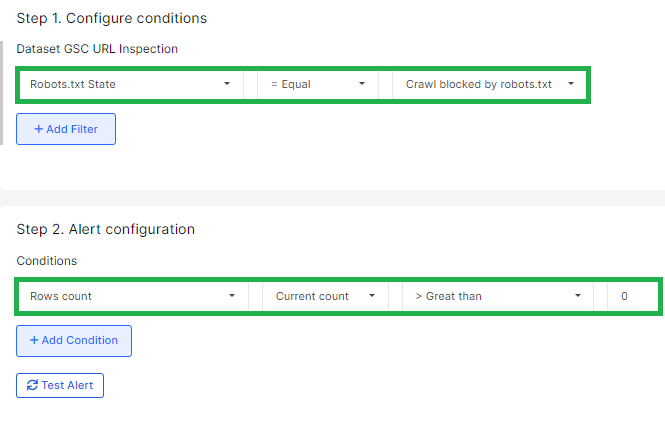

Select “Robots.txt State” and choose “Crawl blocked by robots.txt” under “Step 1: Configure Conditions.” In “Step 2: Alert Configuration,” select “Rows Count,” then “Greater than,” and set the value to 0. In “Step 3: Send Alerts To,” provide your email, phone number, or Slack channel to receive notifications.

By following these steps, you’ll receive a notification if any URLs in the Google Search Console inspection schedule are blocked by the robots.txt file.

With these alerts, you’ll stay informed and receive timely notifications if important pages on your website become unavailable to Google due to blocking in the Robots.txt file.