How to use the list mode: 5 tips to crawl your website effectively

You can choose between several scanning modes: crawling the entire website, crawling URLs from sitemaps, or crawling pages from a list. URL list mode is very useful when you need to check metadata, status codes, load time and other data for specific pages. It's a handy tool, but there are a few things to know to get the most out of it.

How to run crawl list mode

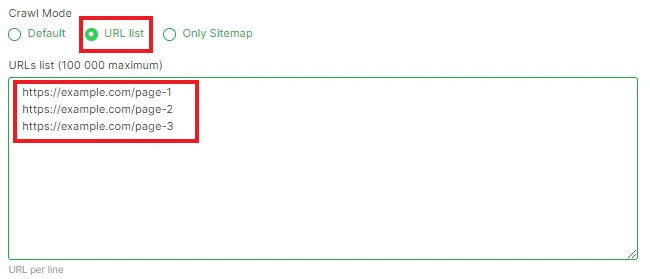

To start a crawl from a URL list, select the desired project and click the “New crawl” button. Next, in “Basic settings”, select the “URL list” mode. A field will then appear where you can enter all the necessary addresses.

Note that you can enter no more than 100,000 URLs this way. Next, configure the remaining settings and start the crawl.

It all looks simple, but there are several points to pay attention to.

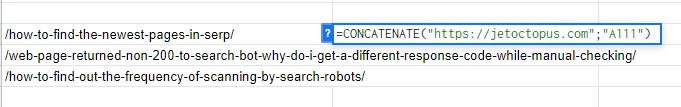

1. Each URL must be absolute, with HTTP/HTTPS protocol and domain. Otherwise, JetOctopus will not be able to open and scan the URLs. If you have relative URLs, you can use Google Sheets and the concatenate formula. For example, you have a list of URLs that you received from developers:

/how-to-find-the-newest-pages-in-serp/

/web-page-returned-non-200-to-search-bot-why-do-i-get-a-different-response-code-while-manual-checking/

/how-to-find-out-the-frequency-of-scanning-by-search-robots/

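Using the formula =CONCATENATE("https://jetoctopus.com";"/how-to-find-the-newest-pages-in-serp/") for each path, you can get a list of absolute URLs. Depending on your spreadsheet locale, the argument separator may be a comma instead of a semicolon.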
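If you prefer a script to a spreadsheet, the same conversion can be sketched in Python. This is only an illustration using the standard library: the `to_absolute` helper and the `BASE` constant are names made up for this example, not part of JetOctopus.

```python
from urllib.parse import urljoin

# Assumption: the domain from the example above; replace it with your own.
BASE = "https://jetoctopus.com"

relative_paths = [
    "/how-to-find-the-newest-pages-in-serp/",
    "/web-page-returned-non-200-to-search-bot-why-do-i-get-a-different-response-code-while-manual-checking/",
    "/how-to-find-out-the-frequency-of-scanning-by-search-robots/",
]


def to_absolute(paths, base=BASE):
    """Join each relative path with the base URL.

    Blank lines are skipped; URLs that are already absolute
    pass through unchanged.
    """
    return [urljoin(base, p.strip()) for p in paths if p.strip()]


absolute_urls = to_absolute(relative_paths)

# One URL per line, ready to paste into the URL list field.
print("\n".join(absolute_urls))
```

Because `urljoin` leaves absolute URLs untouched, a mixed list of relative and absolute addresses can go through the same function.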

2. Verify that the URLs in the list use the correct HTTP/HTTPS protocol. In URL list mode, JetOctopus does not crawl redirect target URLs. If you use a list of HTTP URLs while the pages actually live on HTTPS and the redirect is working, you will receive only the status code, load time, redirect location and similar data. You will not get any metadata or content information, because JetOctopus will not crawl pages that differ from those listed.
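If you already know that the whole site is served over HTTPS, the protocol in the list can be rewritten before the crawl. Below is a minimal sketch with the Python standard library; `with_scheme` is a hypothetical helper name, and the sketch assumes that every URL in the list really is available over the target protocol.

```python
from urllib.parse import urlsplit, urlunsplit


def with_scheme(url, scheme="https"):
    """Return the same URL with its protocol replaced by `scheme`.

    Domain, path, query string and fragment are kept as they are.
    """
    parts = urlsplit(url.strip())
    return urlunsplit((scheme, parts.netloc, parts.path, parts.query, parts.fragment))


# Assumption: sample URLs for illustration only.
urls = [
    "http://jetoctopus.com/how-to-find-the-newest-pages-in-serp/",
    "https://jetoctopus.com/how-to-find-out-the-frequency-of-scanning-by-search-robots/",
]

https_urls = [with_scheme(u) for u in urls]
print("\n".join(https_urls))
```

If the goal of the crawl is to test the HTTP to HTTPS redirects themselves, keep the original HTTP list instead.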

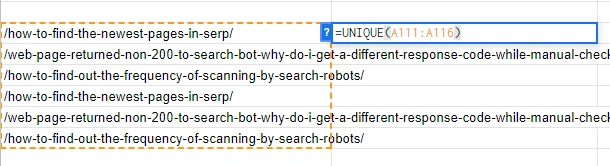

3. When you enter URLs, JetOctopus does not check them for uniqueness. If it is important for you to fit as many pages as possible into one crawl, check the list for duplicates yourself using formulas. One option is the UNIQUE formula in Google Sheets. In a new column, enter the formula =UNIQUE(A1:A4), where A1:A4 is the range from which unique values should be output.

But there is good news: if the list contained duplicates and you have already started the crawl, the limits will be applied only to unique URLs. In the results you will see the number of unique pages and all the necessary information about them.
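The same deduplication can be done outside a spreadsheet. Here is a short Python sketch that removes repeated URLs while keeping the original order; `unique_urls` is a name chosen for this example, and it compares exact strings only, so two URLs that differ by a trailing slash or letter case are treated as different.

```python
def unique_urls(urls):
    """Drop blank entries and repeated URLs, preserving first-seen order."""
    stripped = (u.strip() for u in urls)
    # dict keys are unique and keep insertion order, so this deduplicates in place.
    return list(dict.fromkeys(u for u in stripped if u))


# Assumption: sample list for illustration only.
urls = [
    "https://jetoctopus.com/how-to-find-the-newest-pages-in-serp/",
    "https://jetoctopus.com/how-to-find-out-the-frequency-of-scanning-by-search-robots/",
    "https://jetoctopus.com/how-to-find-the-newest-pages-in-serp/",
]

deduplicated = unique_urls(urls)
print(f"{len(urls)} URLs in, {len(deduplicated)} unique URLs out")
```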

4. Watch for additional characters. Each URL must be entered on a new line, without any commas or additional characters between lines. JetOctopus will treat each extra character as part of the URL.
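A list copied from a spreadsheet or a CSV export often carries quotes, trailing commas or empty lines. The sketch below shows one possible way to clean such text in Python before pasting it into the field. `clean_lines` is a hypothetical helper, and it assumes that none of your real URLs start or end with a quote, comma or semicolon.

```python
def clean_lines(raw_text):
    """Return one clean URL per non-empty line of the pasted text."""
    cleaned = []
    for line in raw_text.splitlines():
        # Remove surrounding whitespace, then stray quotes, commas and semicolons.
        url = line.strip().strip("\"',;").strip()
        if url:
            cleaned.append(url)
    return cleaned


# Assumption: sample of messy input for illustration only.
raw = '"https://jetoctopus.com/page-a/",\n\n  https://jetoctopus.com/page-b/ ;\n'

urls = clean_lines(raw)
print("\n".join(urls))
```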

5. Use URL list mode to check redirects. This is very handy if you need to check if redirects are configured correctly.

How to crawl a list of URLs from CSV, Excel and other files

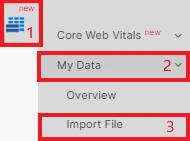

Sometimes you need to crawl more than 100,000 URLs in list mode. This can also be done with JetOctopus. Open any completed crawl and go to the “Tools” section. Then select “My data” – “Import file”.

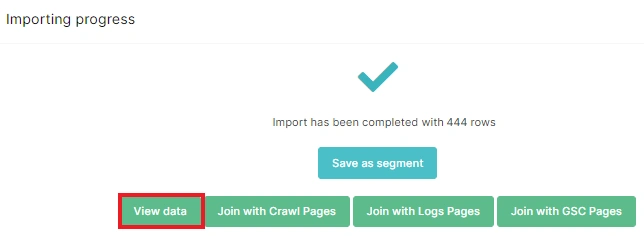

Import the file and load the data. Once the data has loaded, click “View Data”.

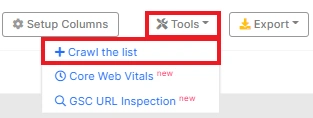

You will see a list of URLs from the file. In the right corner above the list of URLs, select the menu “Tools” – “+ Crawl the list”.

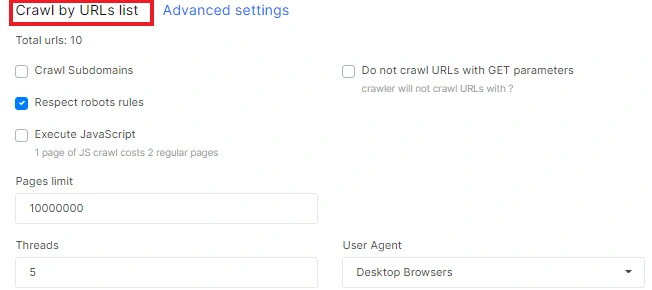

After that, the crawl settings menu will open so you can configure the crawl of the imported addresses.

Enjoy using JetOctopus crawler!