Maximize SEO Efficiency: How to Use JetOctopus to Monitor and Manage Robots.txt

The robots.txt file is a set of instructions (directives) for search engines and other web crawlers that visit your website. With it, you can prevent bots from scanning (visiting) your entire website or individual pages and subfolders. If the file is misconfigured, whether by accident or because of a technical error, the entire website could be blocked. Similar problems may occur if your robots.txt file is unavailable. In that case, search engines cannot access your content or update it in their index. Conversely, an incorrect robots.txt file, or one that returns a status code other than 200, might allow search engines to crawl the entire website, including irrelevant or low-quality pages, which can hurt your site’s visibility.

To avoid these issues, it’s crucial to regularly check your robots.txt file for errors and inconsistencies. In this article, we will discuss the importance of using the JetOctopus robots.txt checker and how to incorporate this process into your website maintenance routine without extra effort.

What is a robots.txt file?

A robots.txt file is a set of instructions that allow or prohibit various scanners (bots), including search engines, marketing tools, artificial intelligence scanners, and other bots, from scanning your website.

By using a robots.txt file, you can prevent scanning of individual pages, subfolders, or sections of your site, or conversely, allow scanning of specific pages or sections.

- The robots.txt file must be placed in the root directory of your website; for example, it should be accessible at https://example.com/robots.txt.

- Note that this file works only for the specific host it is served from and does not apply to subdomains, so each domain and subdomain needs its own robots.txt file. For example, https://example.com/robots.txt applies only to example.com pages, not to pages on the work.example.com subdomain.

- Because the robots.txt file is read not only by search engines but also by many other crawlers, it follows a common standard, the Robots Exclusion Protocol (standardized as RFC 9309). As a result, you can use only the specific directives that crawlers recognize.
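The location rules in the list above can be expressed as a small helper that maps any page URL to the robots.txt file that governs it. This is a minimal sketch: the function name robots_url is hypothetical, and it assumes the scoping Google documents, where a robots.txt file applies to one protocol, host and port, so https://example.com/robots.txt does not cover http://example.com or work.example.com.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (hypothetical helper).

    robots.txt is scoped to one protocol + host + port, so the page's path,
    query and fragment are dropped and /robots.txt is placed at the root.
    """
    parts = urlsplit(page_url)
    # Drop any "user:password@" prefix; keep the host and an explicit port.
    host_and_port = parts.netloc.rsplit("@", 1)[-1]
    return urlunsplit((parts.scheme, host_and_port, "/robots.txt", "", ""))
```

For example, robots_url("https://work.example.com/page") gives https://work.example.com/robots.txt, a different file from the one on example.com.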

The “Allow” directive permits scanning of a certain page or section of the site, and the “Disallow” directive prohibits it. Each group of rules must start with at least one “User-agent” line. If the rules are common to all scanners, specify “User-agent: *”; otherwise, you can specify separate groups of rules for different user agents, for example to give one search engine different rules from the others. Keep in mind that a crawler follows only the most specific group that matches its user agent, so in the example below Googlebot uses its own group and ignores the “User-agent: *” rules.

    User-agent: *
    Allow: /

    User-agent: Googlebot
    Allow: /folder1/
    Disallow: /folder2/
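To try the example rules outside a browser, you can feed them to Python’s standard-library parser, urllib.robotparser. This is a minimal sketch: the sample URLs and the Bingbot user agent are illustrative assumptions. Note that this parser follows the original protocol, applies the first matching rule within a group, treats a blank line as the end of a group, and does not, to our knowledge, implement the * and $ wildcards, so it will not always agree with Google.

```python
from urllib.robotparser import RobotFileParser

# The example rules, one directive per line; a blank line separates the groups.
EXAMPLE_RULES = """\
User-agent: *
Allow: /

User-agent: Googlebot
Allow: /folder1/
Disallow: /folder2/
"""

parser = RobotFileParser()
parser.parse(EXAMPLE_RULES.splitlines())

for agent, path in [
    ("Googlebot", "/folder1/page.html"),  # matched by Googlebot's Allow rule
    ("Googlebot", "/folder2/page.html"),  # matched by Googlebot's Disallow rule
    ("Bingbot", "/folder2/page.html"),    # no Bingbot group, so "*" applies
]:
    print(agent, path, parser.can_fetch(agent, "https://example.com" + path))
```

Googlebot is blocked from /folder2/, while a crawler without its own group falls back to the “User-agent: *” rules and may crawl everything.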

It’s crucial to remember that search engines request the robots.txt file before crawling your website; the file tells the crawler what it may access and what it should avoid. However, robots.txt controls crawling, not indexing. A page blocked by robots.txt can still appear in search results (for example, if other pages link to it), but without a descriptive snippet, because the search engine cannot read the page content to generate one.

Furthermore, robots.txt does not support regular expressions. The only special characters are the wildcard * (any sequence of characters) and $ (the end of the URL).
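The matching logic can be sketched in a few lines of Python. This is an illustration, not Google’s parser: it assumes URL paths are already normalized, supports only * and a trailing $, and resolves conflicts the way Google documents it (the longest matching rule wins; on a tie, the less restrictive Allow rule wins). The function names and the RULES list are hypothetical.

```python
import re


def rule_matches(rule_path: str, url_path: str) -> bool:
    """Match a robots.txt path pattern, supporting only * and a trailing $."""
    anchored = rule_path.endswith("$")
    core = rule_path[:-1] if anchored else rule_path
    # Escape everything literally, then let each * stand for "any characters".
    regex = ".*".join(re.escape(part) for part in core.split("*"))
    if anchored:
        return re.fullmatch(regex, url_path) is not None
    # Without $, a rule is a prefix match from the start of the path.
    return re.match(regex, url_path) is not None


def is_allowed(rules: list[tuple[str, str]], url_path: str) -> bool:
    """Longest matching rule wins; on a tie, Allow beats Disallow."""
    best = None  # (rule length, is_allow) of the winning rule so far
    for directive, rule_path in rules:
        if not rule_path:  # an empty value matches nothing
            continue
        if rule_matches(rule_path, url_path):
            candidate = (len(rule_path), directive.lower() == "allow")
            # Tuple comparison: longer rule first, then True (Allow) > False.
            if best is None or candidate > best:
                best = candidate
    return True if best is None else best[1]


RULES = [
    ("Disallow", "/*.pdf$"),        # any URL ending in .pdf
    ("Allow", "/docs/"),
    ("Disallow", "/docs/private/"),
    ("Disallow", "/*?sessionid="),  # any URL with a sessionid parameter
]
```

With these rules, /files/report.pdf is blocked but /files/report.pdf?download=1 is not, because $ requires the URL to end right after .pdf.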

Why it’s crucial to check your robots.txt file

The importance of regularly checking your robots.txt file cannot be overstated.

The first reason is that some technical errors can lead to either complete website blockage or unrestricted access for search engines. In the case of complete blockage, Google will be unable to discover new pages or update existing content. Websites that are completely blocked from crawling experience a significant decrease in performance and visibility in Google search results.

The opposite situation occurs when all pages of your website become available for crawling by Google or other search engines. Google may then spend crawl budget on pages with GET parameters, user-generated pages, and duplicates, or even crawl pages with user data or shopping carts, which is undesirable for the security of your users. The same can happen with test or staging domains that should be closed to search engines and external users.

Therefore, it’s essential to ensure that your robots.txt file accurately reflects your desired crawling behavior. Regular checks help ensure that your rules are correct and aligned with your website’s goals.

The second important reason to monitor the robots.txt file is the way search engines react to its status code. Whether the file exists, what it contains, and which status code it returns all influence how search engines crawl your site.

Understanding robots.txt status codes and bots behavior

The HTTP status code returned by your robots.txt file significantly impacts how search engines interact with your website.

- 200 Status Code – search engines will have access to the robots.txt file and will be able to follow all the rules specified in this file.

- 3xx Status Code – Google follows redirects. If the redirect chain is too long (Google follows at least five hops), Google stops following it and treats the robots.txt file as if it returned a 404. Remember to configure a redirect for the robots.txt file when migrating domains. Also avoid blocking crawling of pages that you have moved to new addresses: Google needs access to the old URLs to see the redirects and update the data in the SERP.

- 4xx Status Code, in particular 404 – if your robots.txt file returns a 4xx status code (except 429), Google and some other search engines assume that there are no crawling restrictions and may crawl every page on the site. This can waste crawl budget and get non-useful pages indexed. The same happens when the site has no robots.txt file at all: the search engine requests the file, receives a 404 status code, and assumes there are no crawling restrictions.

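- 5xx Status Code – Google interprets this as a prohibition to crawl the entire website; a 429 (Too Many Requests) status code is treated the same way. There are some other exceptions, for example for server errors that last a long time, which you can read about in the official Google documentation – How Google interprets the robots.txt file.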
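The status-code rules above can be condensed into a small decision function. It is a simplified sketch of Google’s documented behavior, not an official implementation: it assumes exactly five followed redirect hops (Google only promises “at least five”) and ignores the longer-term fallbacks for prolonged server errors. The function name and its return labels are hypothetical.

```python
MAX_REDIRECT_HOPS = 5  # assumption: Google documents following "at least five" hops


def interpret_robots_fetch(status_chain: list[int]) -> str:
    """Summarize how Google is documented to treat a robots.txt fetch.

    status_chain lists the status code of every response in order,
    e.g. [301, 200] for one redirect followed by a successful fetch.
    """
    *hops, final = status_chain
    redirects = sum(1 for code in hops if 300 <= code < 400)
    # A chain that is too long, or that ends on a redirect (the crawler gave
    # up following it), is treated like a 404: no crawling restrictions.
    if redirects > MAX_REDIRECT_HOPS or 300 <= final < 400:
        return "allow all"
    if 200 <= final < 300:
        return "follow rules"
    # 429 is grouped with server errors: the whole site is treated as blocked.
    if final == 429 or 500 <= final < 600:
        return "disallow all"
    if 400 <= final < 500:
        return "allow all"
    return "unknown"
```

For instance, [301, 200] yields "follow rules", while a bare [503] yields "disallow all".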

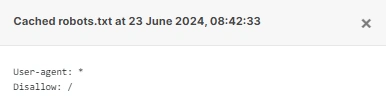

It is also important to remember that some search engines, particularly Google, cache the robots.txt file for some time (Google generally caches it for up to 24 hours) and do not re-request it every time they access the pages of your website. This means that if you fix an erroneous robots.txt file, Google might still apply the rules of the previous version for a while.
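To see why a corrected file does not take effect immediately, here is a toy model of a crawler-side cache. It is a hypothetical sketch, not how Google is implemented: the RobotsCache class simply reuses the last fetched robots.txt until it is 24 hours old, with the fetch function standing in for an HTTP request.

```python
from datetime import datetime, timedelta
from typing import Callable, Optional

CACHE_TTL = timedelta(hours=24)  # assumption: Google's usual upper bound


class RobotsCache:
    """Reuse a fetched robots.txt until it is older than CACHE_TTL."""

    def __init__(self, fetch: Callable[[], str]) -> None:
        self._fetch = fetch  # returns the robots.txt body currently on the site
        self._body: Optional[str] = None
        self._fetched_at: Optional[datetime] = None

    def rules(self, now: datetime) -> str:
        expired = self._fetched_at is None or now - self._fetched_at >= CACHE_TTL
        if expired:
            # Only refetch once the cached copy has expired.
            self._body = self._fetch()
            self._fetched_at = now
        return self._body
```

If the site fixes an accidental “Disallow: /” one hour after the crawler cached it, the crawler keeps seeing the old rule until the cached copy expires.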

Therefore, you always need to ensure that your robots.txt file returns a 200 status code. If you don’t have a robots.txt file on your site, you must add it and include basic rules for scanning your website pages.

By understanding these factors and implementing best practices, you can effectively control how search engines interact with your website through the robots.txt file.

Checking your robots.txt file with JetOctopus

To streamline manual robots.txt checks and ensure the file is consistently available, JetOctopus offers dedicated tools. Our robots.txt checker is a new feature that lets you verify the file’s rules, check its availability and status code (200, 404, or others), and monitor changes in robots.txt files across different crawls.

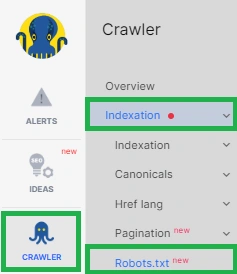

To analyze your robots.txt file, navigate to the crawl results, select the “Indexation” report, and then choose “Robots.txt.”

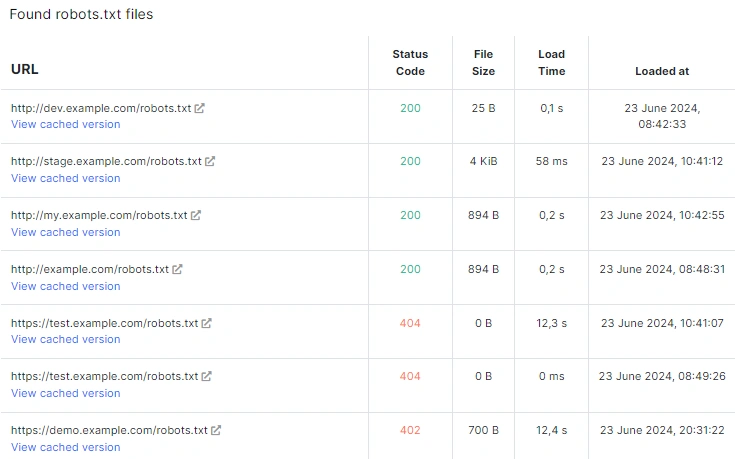

You’ll see a list of all robots.txt files discovered during the crawl.

It’s crucial to verify that each domain or subdomain has only one robots.txt file located in the root directory. If you enabled subdomain scanning during the crawl, the list will include robots.txt files for all subdomains. Even if a robots.txt file is missing and returns a 404 status code, it will still appear in the list.

Clicking the “View cached version” button displays the robots.txt file’s content as it was during the crawl.

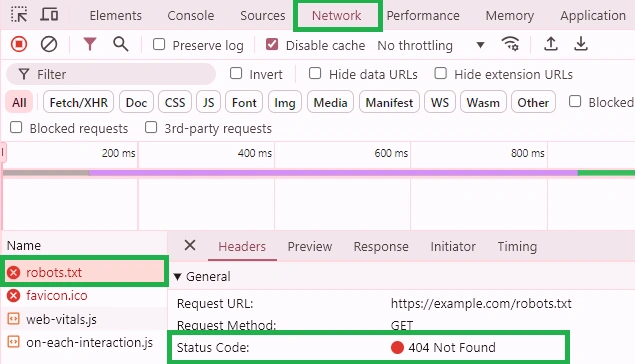

- The “Status Code” column indicates the HTTP status code returned by the robots.txt file. Ideally, this should always be a 200 status code. Any other status code requires further investigation. Use the Chrome DevTools Network tab to check the current status code of the robots.txt file.


- The “File Size” column displays the file size. Since robots.txt is a plain text file without images, media, or JavaScript, it should be very small (Google ignores any content beyond 500 KiB). A file size of zero bytes indicates an empty file without any scanning rules, which needs to be fixed.

- The “Loaded at” column displays the date and time when JetOctopus accessed the robots.txt file.
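If you want to reproduce the “Status Code”, “File Size” and “Loaded at” values outside JetOctopus, for example from a script, here is a minimal Python sketch using only the standard library. The function names, the User-Agent string and the warning texts are hypothetical. The script deliberately does not follow redirects, so a 3xx response shows up as such instead of being hidden.

```python
import http.client
from datetime import datetime, timezone
from urllib.parse import urlsplit

GOOGLE_SIZE_LIMIT = 500 * 1024  # Google ignores robots.txt content past 500 KiB


def fetch_robots(site: str, timeout: float = 10.0) -> dict:
    """Fetch /robots.txt once, without following redirects."""
    parts = urlsplit(site)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        conn.request("GET", "/robots.txt",
                     headers={"User-Agent": "robots-check-sketch"})
        resp = conn.getresponse()
        body = resp.read()
        return {
            "status": resp.status,
            "location": resp.getheader("Location"),  # set on 3xx responses
            "size_bytes": len(body),
            "loaded_at": datetime.now(timezone.utc).isoformat(),
        }
    finally:
        conn.close()


def assess(status: int, size_bytes: int) -> list[str]:
    """Flag the problems discussed above for one robots.txt fetch."""
    warnings = []
    if status != 200:
        warnings.append(f"status {status}: investigate")
    # Size only matters for a real robots.txt, not for an error page body.
    elif size_bytes == 0:
        warnings.append("empty file: no crawling rules")
    elif size_bytes > GOOGLE_SIZE_LIMIT:
        warnings.append("over 500 KiB: Google ignores the rest")
    return warnings
```

A typical call is report = fetch_robots("https://example.com") followed by assess(report["status"], report["size_bytes"]); an empty list means none of these problems was found.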

Based on this information, you can assess the overall health of your robots.txt file and identify critical errors. However, JetOctopus has additional tools that can help you monitor the file’s status.

Alerts for robots.txt monitoring

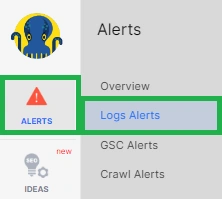

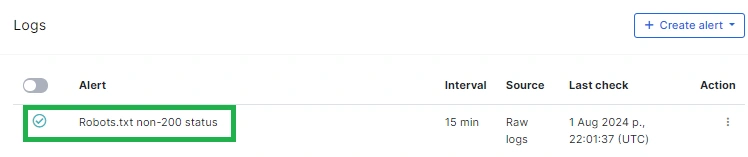

In the “Alerts” section, we have created important default alerts for you that you can activate at any time to receive notifications if something goes wrong with your robots.txt file. To do this, go to the “Alerts” section and select “Logs Alerts.”

Activate the “Robots.txt non-200 status” alert to be notified when Googlebot receives a status code other than 200. You can set how often the check runs and get notified as soon as Googlebot encounters such a problem. If Googlebot receives a 404 status code for the robots.txt file, it will consider the whole site open for scanning; if it receives a 500 status code, Google will consider your entire website closed to scanning.
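Conceptually, a log-based check of this kind scans server access logs for Googlebot requests to /robots.txt that did not get a 200 response. The Python sketch below shows that idea; it is not JetOctopus’s implementation. It assumes the Combined Log Format and identifies Googlebot by its User-Agent string only. Because that string can be spoofed, a production check should also verify the requester (Google documents a reverse DNS lookup for this). The function name is hypothetical.

```python
import re

# Combined Log Format (assumption: adjust to your server's log format).
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_robots_errors(lines):
    """Yield (timestamp, status) for Googlebot robots.txt hits that were not 200."""
    for line in lines:
        m = LOG_LINE.match(line)
        if m is None:
            continue  # skip lines in an unexpected format
        if m["path"].split("?", 1)[0] != "/robots.txt":
            continue
        # User-agent strings can be spoofed; verify real Googlebot separately.
        if "Googlebot" not in m["agent"]:
            continue
        if m["status"] != "200":
            yield m["time"], int(m["status"])
```

Each yielded pair tells you when Googlebot saw a non-200 response and which code it got, for example a 503 that would make Google treat the whole site as blocked.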

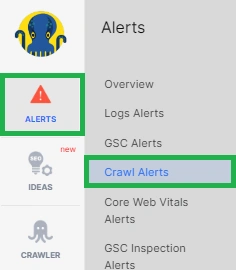

Next, go to the “Crawl Alerts” section, where you can set up a regular daily or weekly check of robots.txt. Here you can configure several default alerts or add your own custom alerts.

More information about setting up the alerts: Guide to creating alerts: tips that will help not miss any error.

- Robots.txt changed content – receive a notification every time the robots.txt file changes. This way, you will notice in time if new rules have been added, if the site has become completely blocked from scanning, or if it has become fully open to scanning. Set up the check as often as you need.

- Robots.txt status code not 200 – you will receive a notification if the robots.txt file does not return a 200 status code. The difference between this alert and the one you configure in the logs is that the log alert triggers when Googlebot encounters a non-200 status code, while the crawl alert triggers when the JetOctopus crawler itself finds a non-200 robots.txt file.

- Robots.txt changed status code – always know when the status code of the robots.txt file changes. For example, if you configured a redirect of the robots.txt file from one domain to another and the redirect breaks after migration, you will receive a notification. You will also be notified if the status code of the robots.txt file changes from 200 to any other code.
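The three crawl alerts boil down to comparing two snapshots of the file. Here is a minimal Python sketch, assuming you store each crawl’s status code and body; the RobotsSnapshot class and crawl_alerts function are hypothetical, and JetOctopus’s actual logic may differ. Comparing SHA-256 digests means that, in practice, only a short hash would need to be kept between crawls.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class RobotsSnapshot:
    """robots.txt as seen in one crawl (hypothetical structure)."""
    status: int
    body: bytes

    def digest(self) -> str:
        return hashlib.sha256(self.body).hexdigest()


def crawl_alerts(previous: RobotsSnapshot, current: RobotsSnapshot) -> list[str]:
    """Return the names of the alerts that would fire between two crawls."""
    alerts = []
    if previous.digest() != current.digest():
        alerts.append("Robots.txt changed content")
    if current.status != 200:
        alerts.append("Robots.txt status code not 200")
    if previous.status != current.status:
        alerts.append("Robots.txt changed status code")
    return alerts
```

If the file disappears between crawls (200 with rules, then 404 with an empty body), all three alerts fire at once.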

The JetOctopus robots.txt checker is an essential tool designed to help SEOs and website owners manage and monitor their robots.txt files effectively. Forget manual checks: JetOctopus automates the process by verifying file rules, checking accessibility (200, 404, etc.), and monitoring robots.txt changes.