Take Control of Googlebot: the best takeaways from Serge Bezborodov’s talk at BrightonSEO

We want to share the key insights from Serge Bezborodov’s brilliant talk at BrightonSEO. Serge explained how to control Googlebot and why every SEO needs to do it. The presentation was based on a long-term analysis of search robot activity.

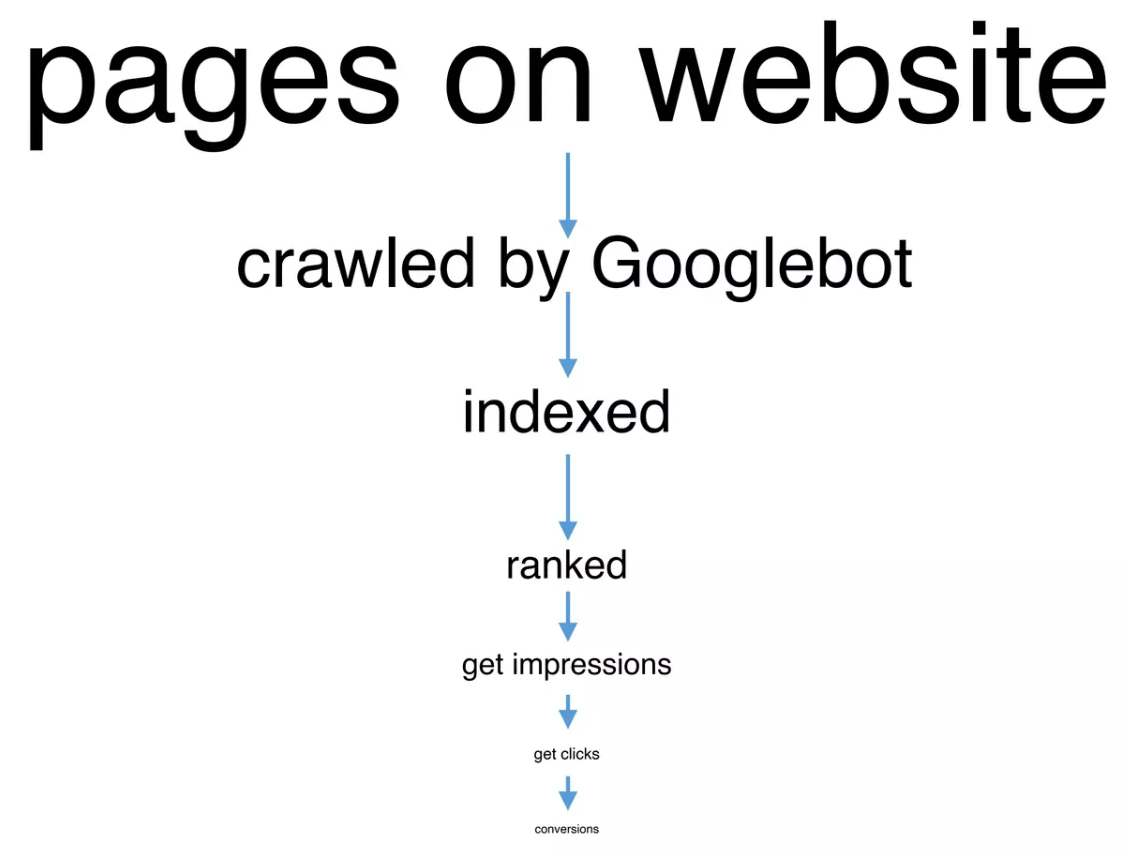

1. On average, Googlebot does not crawl roughly 40-60% of the pages on most sites. What does this tell us? First of all, it means Google is not using the full potential of the website. Search engines simply do not visit these pages, for various reasons: technical errors, weak internal linking, etc. As a result, these pages are not indexed, and they bring the website no search traffic.

2. The bigger the website, the lower the percentage of crawled pages. Unfortunately, this is a very common situation. Googlebot spends its visits on a large number of pages that have no SERP potential, so the really valuable pages are left unvisited. And if a page is not visited by Googlebot, it won’t be indexed or ranked.

3. Googlebot can crawl almost all pages of your website, but you have to work for it. You can influence how search bots crawl your website.

4. No gray or black hat SEO techniques are needed to influence Googlebot's behaviour. It is enough to pay attention to the three most important points (see below), all of which stay within Google's guidelines and white hat SEO.

5. What are these three most important points? For crawling, and then indexing and ranking, you need to take care of three pillars: content, links and technical health. Think of them as a triangle within which you optimize the site so that Googlebot crawls and indexes more of your pages.

No side matters more or less than the others: all three sides of the triangle are very important.

6. However, the balance between the three sides may be different for each website. To find out which side is your strongest, you need to test. But once again: no side can be ignored.

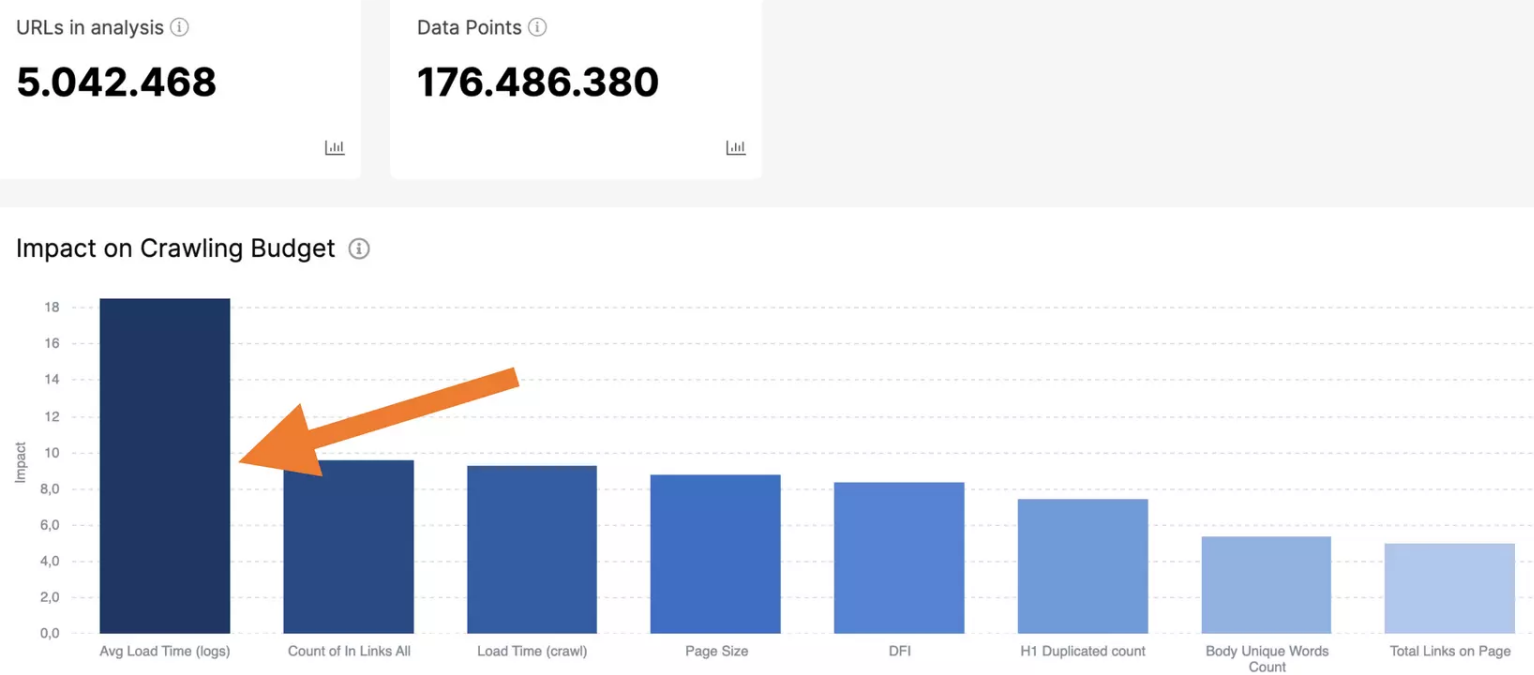

7. Log analysis is very important for finding your growth point. In the “Deep Impact” chart here, you can see which factor has the most influence on how search robots crawl the site. Using in-depth analysis, JetOctopus identifies the factor that will help increase the percentage of crawled pages on your website.

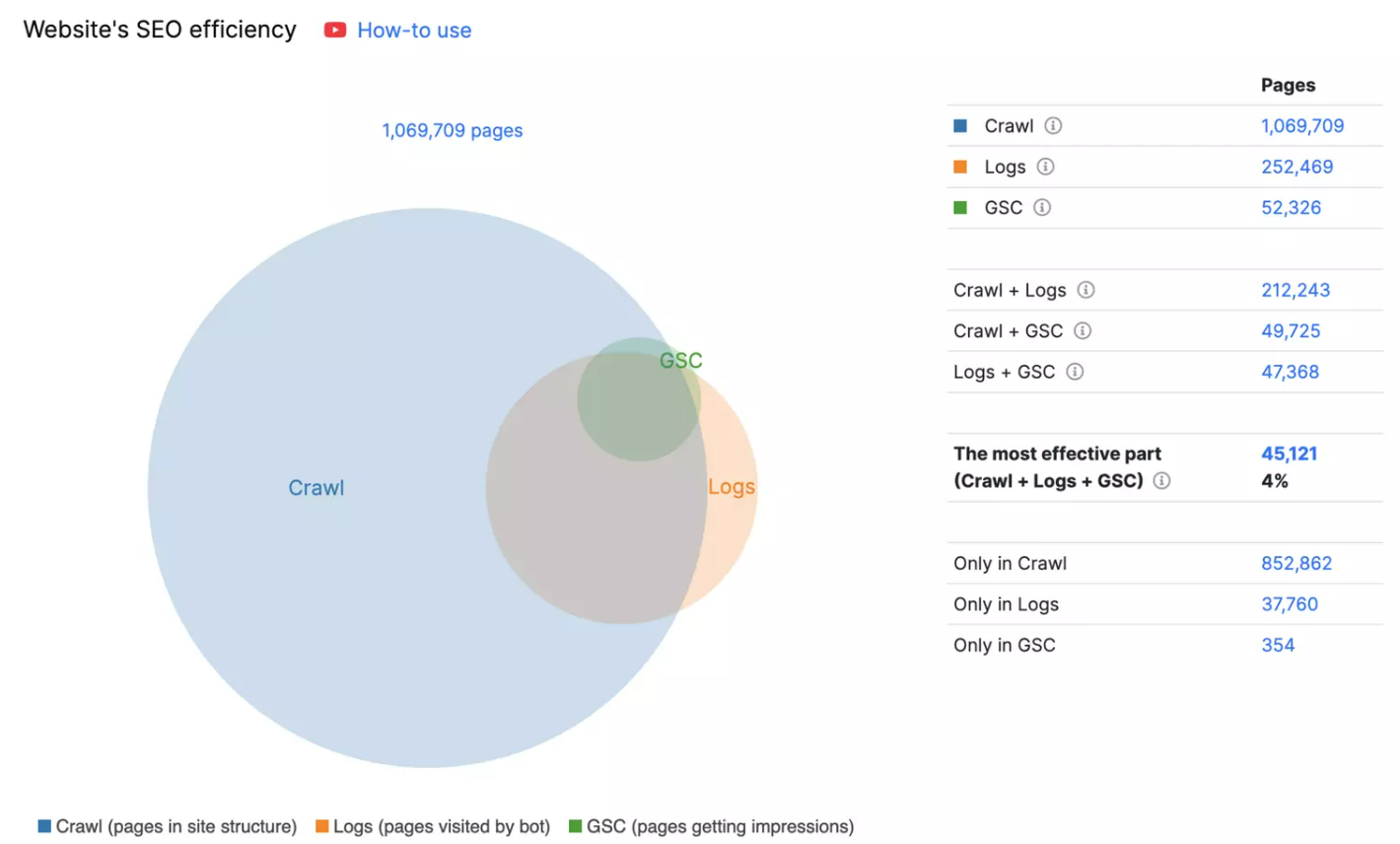

8. But logs alone are not enough. You need to analyze the efficiency of the website. Which pages do search engines crawl? Which pages are active in the SERP and bring traffic? Which pages are crawlable and included in your website's internal linking structure?

The more technically optimized pages with good content are available for crawling, the more of them will be indexed and ranked, and the more traffic you will get.
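To make the questions above concrete, here is a minimal Python sketch of how the percentage of crawled pages could be estimated from raw server logs. It is not how JetOctopus works internally; it is an illustration under stated assumptions: the logs are in the common "combined" access log format, Googlebot is detected by a plain user-agent substring match (which can be spoofed, so a production check would also verify the requesting IP, for example through a reverse DNS lookup), and the function names `googlebot_paths` and `crawl_report` are hypothetical.

```python
import re

# Assumption: "combined" access log format, where the request line is quoted
# and the user agent is the last quoted field on the line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_paths(log_lines):
    """Return the set of paths requested by a user agent containing 'Googlebot'.

    This is a substring match only; it does not prove the visitor is Google.
    """
    paths = set()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            paths.add(match.group("path"))
    return paths


def crawl_report(site_paths, crawled_paths, traffic_paths):
    """Compare pages in the site structure with bot visits and traffic.

    site_paths:    pages found in the internal linking structure (e.g. a crawl)
    crawled_paths: pages seen in the logs as visited by the bot
    traffic_paths: pages that received organic traffic
    """
    site = set(site_paths)
    visited = set(crawled_paths)
    crawled = site & visited
    return {
        # Share of structure pages the bot actually visited.
        "crawl_ratio": len(crawled) / len(site) if site else 0.0,
        # Pages in the structure the bot never requested.
        "not_crawled": sorted(site - crawled),
        # Bot visits to URLs that are not in the structure at all.
        "crawled_outside_structure": sorted(visited - site),
        # Pages the bot visits that bring no organic traffic.
        "crawled_without_traffic": sorted(crawled - set(traffic_paths)),
    }
```

Feeding `googlebot_paths` the lines of an access log and passing the result to `crawl_report`, together with a list of pages from a site crawl and a list of pages with organic traffic, gives a rough view of which pages are crawled, which are ignored, and where bot visits are spent on URLs outside the site structure.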

Thanks to all participants for a great discussion at the BrightonSEO conference! It was awesome to talk about logs and share the JetOctopus experience!

We recommend reading some of our articles about logs:

Logs. Dig up the Pages mostly visited by bot and ignored

Case Study: How DOM.RIA Doubled Their Googlebot Visits Using JetOctopus

How to analyze heavy pages in logs and why it is important

Step-by-step instructions: analysis of 404 URLs in search engine logs