Why your crawl can’t be finished.

Sometimes a crawl fails because the JetOctopus bot is blocked by the web server.

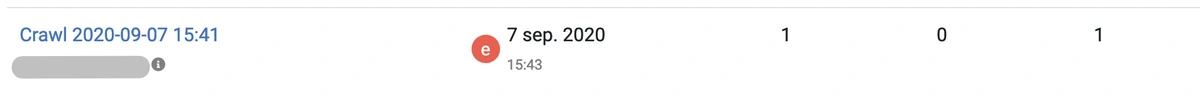

If this problem occurs, you will see the following signs in your crawl project:

- a lot of URLs returning a 401 or 403 status code;

- the crawl slows down progressively, and appears to stop running completely;

- the crawler shows a ‘crawl error’ status;

- the crawl shows that only 1 page was crawled.
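To check the first symptom quickly, you can measure what share of responses came back as 401 or 403. The snippet below is only a rough sketch: the `blocked_share` helper and the sample list are hypothetical, and it assumes you have already pulled the status codes out of your crawl data as plain integers.

```python
from collections import Counter


def blocked_share(status_codes):
    """Return the fraction of responses that were 401 or 403."""
    if not status_codes:
        return 0.0
    counts = Counter(status_codes)
    return (counts[401] + counts[403]) / len(status_codes)


# Hypothetical sample of status codes, not real crawl data.
sample = [200, 403, 403, 401, 200, 403, 403, 403, 403, 403]
print(f"{blocked_share(sample):.0%} of responses were 401/403")
```

A high share does not prove a block on its own, but together with the other signs above it is a strong hint.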

The reason for your crawl being blocked is most likely one of the following:

– JetOctopus uses different cloud providers which may be blocked by default as they are also often used by scrapers.

– There is an automated system on the server which detects and blocks suspicious activities.

– A manual block has been implemented by a server administrator, based on manual inspection of server activity, possibly triggered by a high load caused by the crawl, or a large number of crawl errors.

– The crawl uses a Googlebot user agent, but the server's reverse DNS lookup on the crawler's IP address does not resolve to Google, so the crawler appears to be a scraper spoofing Googlebot.
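To illustrate the last point, here is a minimal sketch of the kind of reverse-then-forward DNS check a server might run on a request that claims to be Googlebot. It is not taken from any particular server product. The function name, the injectable lookup parameters, and the accepted host suffixes (`googlebot.com` and `google.com`) are assumptions made for this example, and the default lookups handle IPv4 only.

```python
import socket

# Assumed host suffixes for genuine Googlebot addresses (illustrative).
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def is_genuine_googlebot(ip, reverse_lookup=None, forward_lookup=None):
    """Reverse-then-forward DNS check for an IP that claims to be Googlebot.

    The lookup functions can be replaced, which keeps the check testable
    without network access.
    """
    if reverse_lookup is None:
        reverse_lookup = lambda addr: socket.gethostbyaddr(addr)[0]
    if forward_lookup is None:
        forward_lookup = lambda host: socket.gethostbyname_ex(host)[2]
    try:
        # Step 1: reverse DNS, IP address -> host name.
        host = reverse_lookup(ip).lower().rstrip(".")
        if not host.endswith(GOOGLE_SUFFIXES):
            return False
        # Step 2: forward DNS, host name -> IPs, must include the original IP.
        return ip in forward_lookup(host)
    except OSError:
        # Failed lookups (socket.herror, socket.gaierror) count as "not genuine".
        return False


# Usage with stubbed lookups (hypothetical host name, no network needed):
# a crawler IP whose reverse DNS is not a Google host fails the check.
print(is_genuine_googlebot(
    "54.36.123.8",
    reverse_lookup=lambda addr: "crawler.example.net",
    forward_lookup=lambda host: ["54.36.123.8"],
))
```

Any crawler that sends a Googlebot user agent from a non-Google IP address fails a check like this, which is why a server running it rejects the crawl.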

We recommend trying the following solutions to blocked crawls:

– provided that you know the site, you can ask the server administrators to whitelist the IP addresses that JetOctopus uses to crawl:

- 54.36.123.8
- 54.38.81.9
- 147.135.5.64
- 139.99.131.40
- 198.244.200.110

– some websites will block requests which come from a Googlebot user-agent but do not originate from a Google IP address. In this scenario, selecting a different user agent often makes the crawl succeed;

– some websites attempt to use JavaScript to block crawlers that do not execute the page. This type of block can normally be circumvented by enabling our JavaScript renderer.
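For server administrators handling a whitelist request, the check can be as simple as comparing the client address against the JetOctopus IP addresses listed above. The sketch below is illustrative only: `is_jetoctopus_ip` is a hypothetical helper, and in practice the rule usually lives in the firewall or web server configuration rather than in application code.

```python
import ipaddress

# IP addresses JetOctopus uses to crawl, as listed in this article.
JETOCTOPUS_IPS = frozenset(
    ipaddress.ip_address(ip)
    for ip in (
        "54.36.123.8",
        "54.38.81.9",
        "147.135.5.64",
        "139.99.131.40",
        "198.244.200.110",
    )
)


def is_jetoctopus_ip(remote_addr):
    """Return True if the client address is one of the JetOctopus crawl IPs."""
    try:
        return ipaddress.ip_address(remote_addr) in JETOCTOPUS_IPS
    except ValueError:
        # Malformed addresses are never on the allowlist.
        return False


print(is_jetoctopus_ip("54.36.123.8"))   # an address from the list above
print(is_jetoctopus_ip("203.0.113.7"))   # a documentation-range address, not listed
```

Parsing the address with `ipaddress` instead of comparing raw strings avoids false mismatches caused by stray whitespace or unusual formatting.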

If you have any problems with the crawl, feel free to send a message to support@jetoctopus.com.