10 must-have checks for a small website

There is a prevailing belief that technical SEO is of lesser significance for small websites compared to content optimization. However, we firmly believe this is not the case. In fact, we have worked with numerous small websites that experienced considerable ranking improvements after implementing technical SEO optimizations. To help small website owners automate their technical SEO efforts, we have compiled a concise checklist of essential tasks. While a comprehensive technical audit is ideal, starting with a simpler approach can yield positive results.

Check if bots are crawling your website

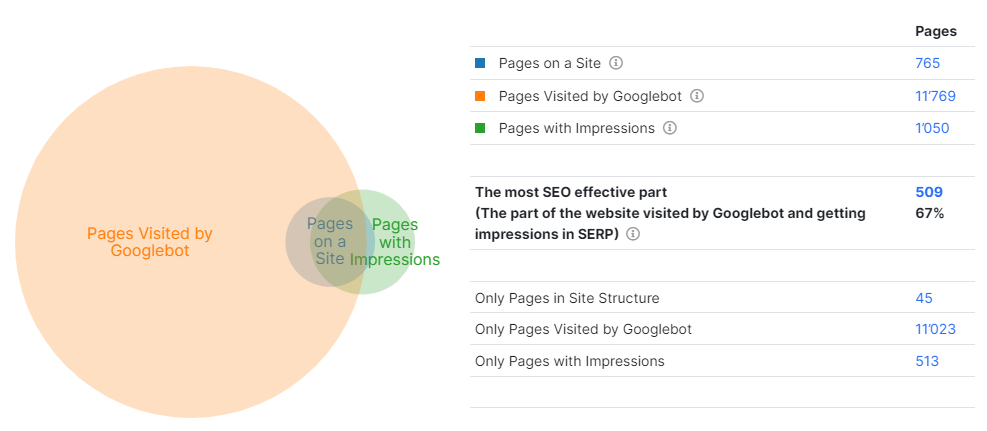

No matter how exceptional your content and internal optimization may be, their effectiveness relies on search bots regularly crawling your website, so it is crucial to detect any crawling issues early. To accomplish this, you will need a complete crawl of your website to identify all pages. Configuring log integration in JetOctopus is also recommended. Combining all of this data (logs, crawl, Google Search Console) allows you to identify pages that are not being crawled by bots at all (making them unlikely to be indexed), as well as technical pages that are not conversion sources but are visited by bots. You can also identify pages that receive impressions in the SERPs but were not discovered during the crawl. Usually, these are outdated pages or pages with redirects that do not contribute to conversions.

To obtain this information, navigate to the “SEO” section and select the “SEO Efficiency” chart, where you will find clickable charts displaying relevant data.

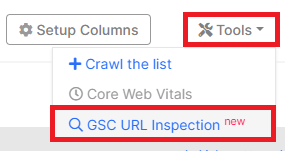

We also recommend analyzing the crawl results in the “Pages” dataset and applying filters to isolate the pages that are important to you and should be ranked by Google. Next, click on the “Tools” button and select “GSC URL Inspection” to gain insights into the status of Google’s processing of your pages.

Some pages may be discovered by Google but have not yet been indexed, while others may not be known to Google at all. Understanding how Googlebot can crawl and process your website is vital information for optimizing your online presence.
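
The comparison described in this section boils down to set operations over three URL lists. Below is a minimal Python sketch of that idea, not a description of how JetOctopus builds its charts. The function name `compare_sources` and the three inputs (URLs found by the crawl, URLs requested by search bots according to the logs, and URLs with impressions in Google Search Console) are assumptions made for illustration.

```python
def compare_sources(crawled, bot_visited, gsc_pages):
    """Compare three URL collections and return the mismatches between them."""
    crawled = set(crawled)
    bot_visited = set(bot_visited)
    gsc_pages = set(gsc_pages)
    return {
        # Found by the crawl but never requested by a search bot.
        "not_visited_by_bots": sorted(crawled - bot_visited),
        # Requested by bots but absent from the crawled site structure.
        "visited_but_not_in_crawl": sorted(bot_visited - crawled),
        # Receiving impressions but not discovered during the crawl.
        "impressions_but_not_in_crawl": sorted(gsc_pages - crawled),
    }


# Hypothetical sample data.
report = compare_sources(
    crawled=["/", "/pricing", "/blog/post-1"],
    bot_visited=["/", "/pricing", "/cart?step=2"],
    gsc_pages=["/", "/old-landing"],
)
print(report)
```

Each of the three resulting lists corresponds to one of the page groups mentioned above and can be reviewed separately.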

Analyzing indexing rules and availability for crawling

This check is similar to the previous one, which ensures that bots visit and crawl the pages you want to rank in the SERPs. However, the focus here is on detecting pages that are prohibited from being crawled and indexed. Non-indexable pages, even if crawled by Google, will not appear in the SERPs. It is crucial to ensure that all pages with valuable content are accessible for crawling and are not excluded by a noindex directive in the meta robots tag or the X-Robots-Tag header.

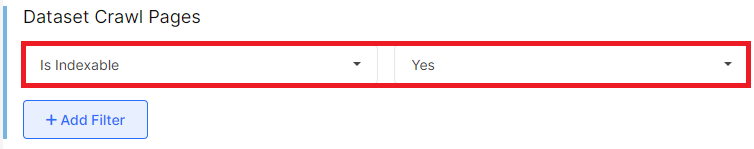

To check if pages can rank in Google:

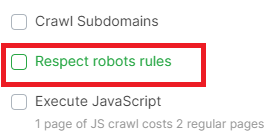

- conduct a website scan by deselecting the “Respect robot rules” checkbox during the crawl configuration;

- after the crawl is finished, go to the crawl results and select the “Pages” dataset;

- apply the filter “Is indexable” – “Yes”.

You will see a list of all pages that have no restrictions for indexing: these pages are not blocked by the robots.txt file, do not have a noindex directive in the meta robots tag or the X-Robots-Tag header, and return a 200 status code. Verify that this list includes all the pages that are important to you.
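
The three conditions listed above can also be spot-checked for a single page. The following is a rough sketch using only the Python standard library; it is not the logic behind the “Is indexable” filter. The function `is_indexable` and its parameters are hypothetical, and the directive parsing is deliberately simplified (for example, it ignores canonical tags and bot-specific meta tags).

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class MetaRobotsParser(HTMLParser):
    """Collect the content attribute of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.values = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.values.append(attrs.get("content") or "")


def blocks_indexing(value):
    """Return True if a robots directive string contains noindex or none."""
    tokens = [t.strip() for t in value.lower().replace(":", ",").split(",")]
    return "noindex" in tokens or "none" in tokens


def is_indexable(url, status_code, headers, html, robots_txt, user_agent="Googlebot"):
    """Simplified check: 200 status, allowed by robots.txt, no noindex."""
    if status_code != 200:
        return False
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    if not rules.can_fetch(user_agent, url):
        return False
    # Header names are case-insensitive, so normalize them before the lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    if blocks_indexing(normalized.get("x-robots-tag", "")):
        return False
    parser = MetaRobotsParser()
    parser.feed(html)
    return not any(blocks_indexing(value) for value in parser.values)
```

The function expects the response data to be fetched separately, which keeps the check itself free of network calls and easy to run against saved crawl data.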

Analyzing the number of words per page

This check focuses on the content aspect. Pages with quality, comprehensive on-page content that aligns with Google’s recommendations tend to perform better in SERPs. For example, a page with a detailed product description will usually rank higher than a page without one. The amount of content also impacts the number of keywords a site can rank for: a complete description allows the page to rank for long-tail keywords.

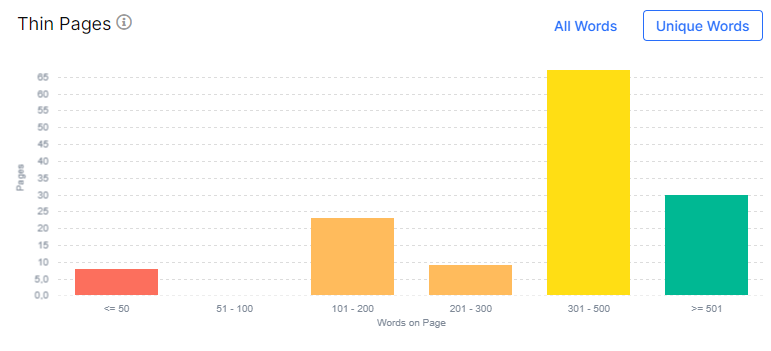

To analyze your content situation:

- navigate to the “Content” report in the crawl results;

- proceed to the “Thin Pages” chart, which displays the distribution of pages based on the number of words per page.

It is optimal for pages to have more than 300 words. Click on the desired column in the chart to access the data table with a detailed list of pages. Analyze which pages require additional content.
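
For a quick check outside the report, the word count can be approximated from raw HTML. This is a minimal sketch under stated assumptions: the helper names `count_words` and `thin_pages` are hypothetical, only `script` and `style` content is excluded, and the result will not necessarily match the numbers in the “Thin Pages” chart, which may count words differently.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the content of script and style tags."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.chunks = []
        self.skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.chunks.append(data)


def count_words(html):
    """Count whitespace-separated words in the text of an HTML document."""
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    return len(" ".join(extractor.chunks).split())


def thin_pages(pages, min_words=300):
    """Return URLs whose pages do not have more than min_words words."""
    return [url for url, html in pages.items() if count_words(html) <= min_words]
```

Here `pages` is assumed to be a dictionary that maps each URL to its HTML source.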

Identifying internal duplicate content

Google considers not only external content duplication but also internal content duplication a problem. External content duplication occurs when your website’s content is similar or identical to content on another website. Internal duplicate content refers to having multiple pages with similar or identical content. If such pages exist, Google may not rank the intended page in the SERPs. Therefore, it is crucial to detect and address these cases.

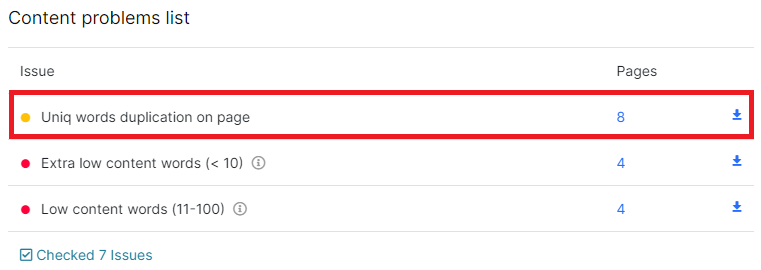

To identify issues with non-unique content on your site:

- access the “Content” report and locate the “Uniq words duplication on page” item in the “Content problems list”;

- click on the number of adjacent pages to view the data table with a list of pages that have similar content.

For example, suppose you have pages titled “SEO Crawler and Log Analyzer” and “Log Analyzer and SEO Crawler”. Their content is similar and uses the same words, so pages like these will appear in the list. Although these pages may be visually different, they are essentially internal content duplicates.

Analyze these pages and ensure they have unique content where necessary. For some unnecessary pages with duplicate content, you can make them non-indexable or implement canonicalization, depending on their purpose and relevance.
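
To illustrate why pages built from the same words count as similar regardless of word order, here is a small sketch that compares the sets of unique words on each page using Jaccard similarity. This is one common technique chosen for illustration; it is an assumption, not the algorithm behind the report, and the function names and the 0.9 threshold are hypothetical.

```python
import re
from itertools import combinations


def unique_words(text):
    """Return the set of lowercase words in a text."""
    return set(re.findall(r"\w+", text.lower()))


def jaccard(a, b):
    """Share of words common to both sets, from 0.0 to 1.0."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similar_pairs(pages, threshold=0.9):
    """Return (url_1, url_2, score) for page pairs at or above the threshold."""
    words = {url: unique_words(text) for url, text in pages.items()}
    pairs = []
    for first, second in combinations(words, 2):
        score = jaccard(words[first], words[second])
        if score >= threshold:
            pairs.append((first, second, score))
    return pairs


# Hypothetical pages; the first two use the same words in a different order.
pages = {
    "/a": "SEO Crawler and Log Analyzer",
    "/b": "Log Analyzer and SEO Crawler",
    "/c": "Pricing plans for agencies",
}
print(similar_pairs(pages))
```

Because word order is discarded, the first two pages get the maximum score even though their text is not identical.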

Analyzing cannibalization

When duplicate or similar content is found on your website, it is crucial to check for cannibalization. Cannibalization occurs when different URLs of your website compete for the same queries or keywords. In general, cannibalized pages tend to underperform in the SERPs.

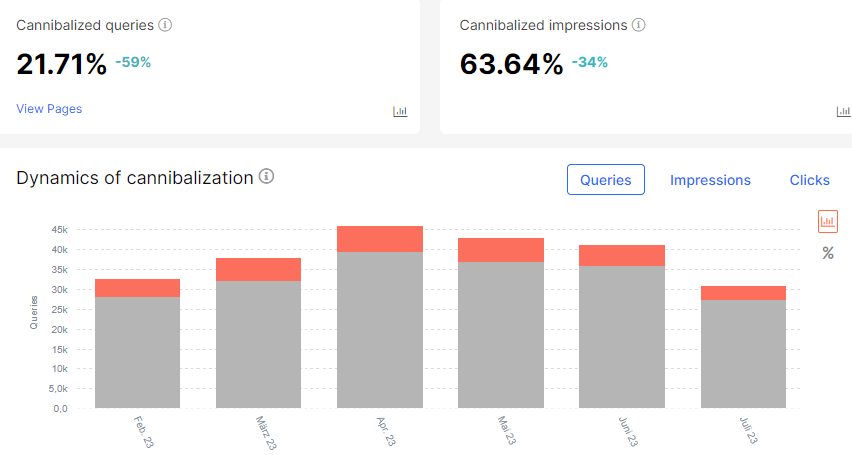

To analyze cases of cannibalization on your website:

- navigate to the Google Search Console section and select the “Cannibalization” dashboard;

- on this dashboard, you will find the dynamics of cannibalization and an overview of the website’s overall state;

- additionally, you can access a detailed list of cannibalized pages in the corresponding data table and analyze all cannibalization cases.

However, not every instance of cannibalization is critical. Google can decide for itself which keywords to rank pages for, based on user preferences and search history. Therefore, if you find cannibalized pages in the SERPs, make sure they have unique content and that your content is not the cause of the cannibalization. For small websites, it is especially important to have unique and non-cannibalized content on their pages.
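
The definition of cannibalization used here (different URLs competing for the same query) can be sketched as a grouping over Search Console performance rows. The snippet below is an illustration only: the row fields `query`, `page`, and `impressions`, the function name, and the `min_impressions` cutoff are assumptions, and the dashboard may apply different rules.

```python
from collections import defaultdict


def find_cannibalization(rows, min_impressions=1):
    """Return queries for which more than one URL received impressions."""
    pages_by_query = defaultdict(set)
    for row in rows:
        if row["impressions"] >= min_impressions:
            pages_by_query[row["query"]].add(row["page"])
    return {
        query: sorted(pages)
        for query, pages in pages_by_query.items()
        if len(pages) > 1
    }


# Hypothetical rows, one per (query, page) combination.
rows = [
    {"query": "log analyzer", "page": "/log-analyzer", "impressions": 120},
    {"query": "log analyzer", "page": "/blog/what-is-log-analysis", "impressions": 45},
    {"query": "seo crawler", "page": "/crawler", "impressions": 300},
]
print(find_cannibalization(rows))
```

Raising `min_impressions` is one way to ignore URLs that appear for a query only occasionally, which fits the point that not every case is critical.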

Analyzing anchors

Anchors play a vital role for both users and search engine bots. User-friendly anchors provide clarity on the destination of a link, while for bots, anchors serve as an explanation of the link’s subject.

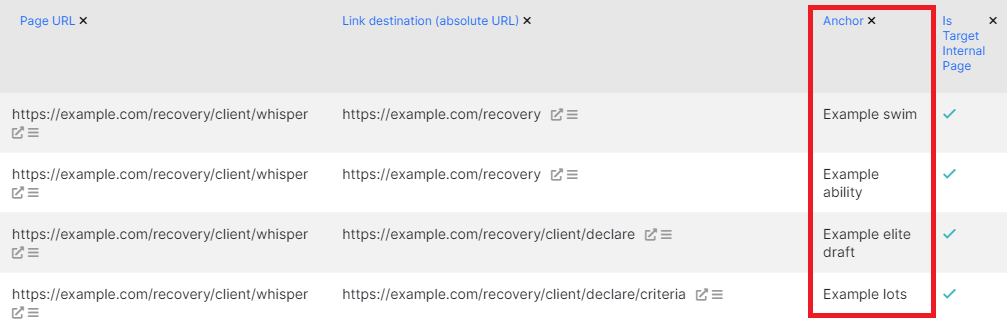

To analyze anchors:

- access the crawl results and select the “Links” dataset;

- in the “Links” dataset, you will find a list of outlinks and their anchors.

Filter out links without anchors, as well as those with short or unclear anchors, and replace them with high-quality, understandable anchors. Avoid using generic phrases like “here,” “there,” or “click here.”

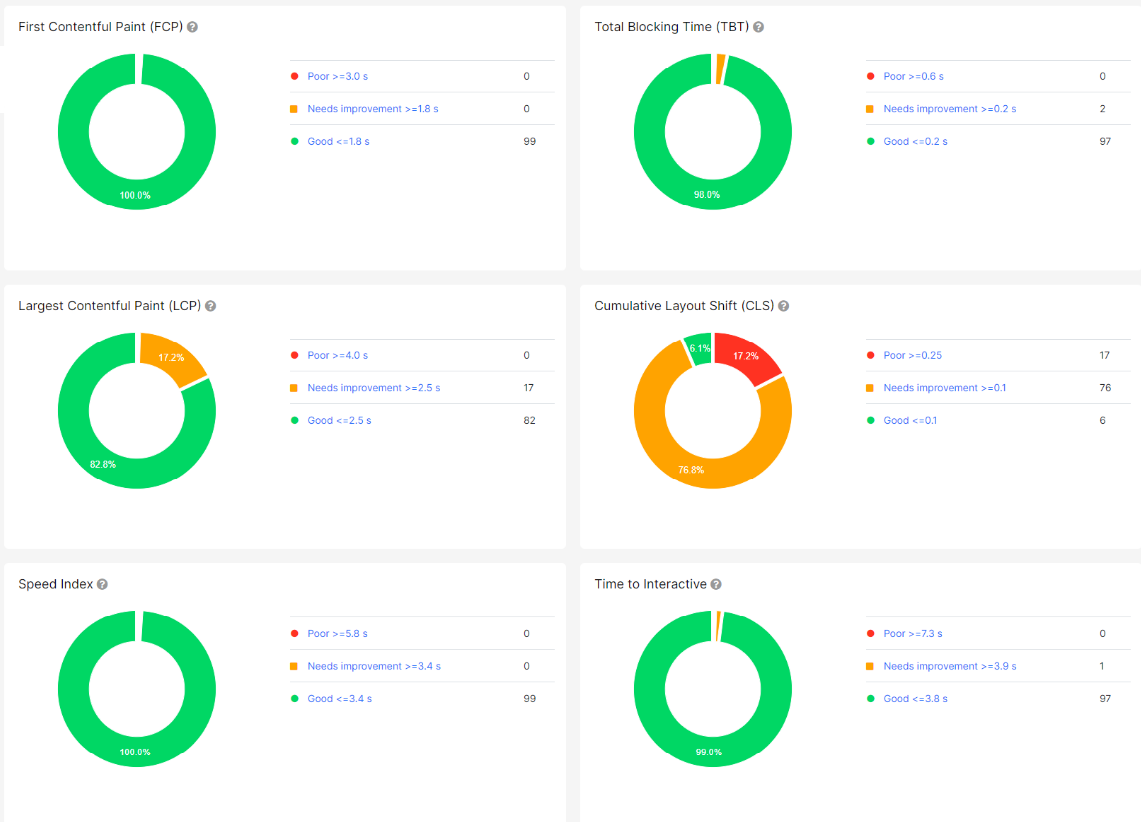
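
The filtering described in this section can be expressed as a few simple rules. The sketch below assumes the links are available as dictionaries with hypothetical `page`, `href`, and `anchor` keys; the list of generic phrases comes from the examples above, and the minimum length of three characters is an arbitrary assumption.

```python
GENERIC_ANCHORS = {"here", "there", "click here"}


def weak_anchors(links, min_length=3):
    """Return (page, href, reason) for links with empty, generic, or short anchors."""
    flagged = []
    for link in links:
        # Collapse repeated whitespace and trim the anchor text.
        text = " ".join((link.get("anchor") or "").split())
        if not text:
            reason = "empty"
        elif text.lower() in GENERIC_ANCHORS:
            reason = "generic"
        elif len(text) < min_length:
            reason = "short"
        else:
            continue
        flagged.append((link["page"], link["href"], reason))
    return flagged


# Hypothetical link data.
links = [
    {"page": "/", "href": "/pricing", "anchor": "Pricing plans"},
    {"page": "/", "href": "/docs", "anchor": "click here"},
    {"page": "/blog", "href": "/crawler", "anchor": ""},
    {"page": "/blog", "href": "/faq", "anchor": "Go"},
]
print(weak_anchors(links))
```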

Evaluating Core Web Vitals metrics

Website speed and performance significantly impact both user experience and Google rankings. Google considers the speed of your website when determining its position in the search results. Therefore, it is recommended to check all aspects related to Core Web Vitals.

To assess the lab performance of desired pages (with a primary focus on indexable pages):

- select the desired pages from the crawl results or those that are ranked in the SERPs;

- click on the “Tools” button and choose “Core Web Vitals”;

- configure the necessary fields for validation and initiate the analysis to obtain insights into the performance of your pages.

The results will provide informative tables and charts to help identify performance issues and prioritize pages for improvement.
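
When prioritizing pages, it can help to rate each value against the thresholds Google publishes for Core Web Vitals. The sketch below covers only LCP (good up to 2.5 seconds, poor above 4 seconds) and CLS (good up to 0.1, poor above 0.25). The metric keys and the shape of the `results` dictionary are assumptions for illustration and do not reflect the tool's export format.

```python
# (good, poor) boundaries: values up to "good" are good, values above "poor" are poor.
THRESHOLDS = {
    "lcp_seconds": (2.5, 4.0),
    "cls": (0.1, 0.25),
}


def rate(metric, value):
    """Rate a single metric value as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


def pages_to_fix(results):
    """Return pages with at least one metric that is not rated good."""
    flagged = {}
    for url, metrics in results.items():
        issues = {
            name: rate(name, value)
            for name, value in metrics.items()
            if name in THRESHOLDS and rate(name, value) != "good"
        }
        if issues:
            flagged[url] = issues
    return flagged


# Hypothetical lab measurements.
results = {
    "/": {"lcp_seconds": 1.9, "cls": 0.05},
    "/catalog": {"lcp_seconds": 3.1, "cls": 0.3},
}
print(pages_to_fix(results))
```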

Identifying broken links

Broken links have a negative impact on the crawl budget and can lead to poor user experiences. Users are left disappointed when they encounter 404 pages instead of accessing the desired information. It is crucial to carefully check and remove broken links within the HTML code of your website. Here, broken links are links that point to pages returning a redirect, 404, or 410 status code, or to pages whose content is inaccessible to users.

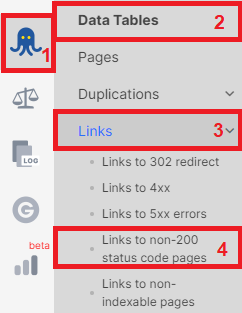

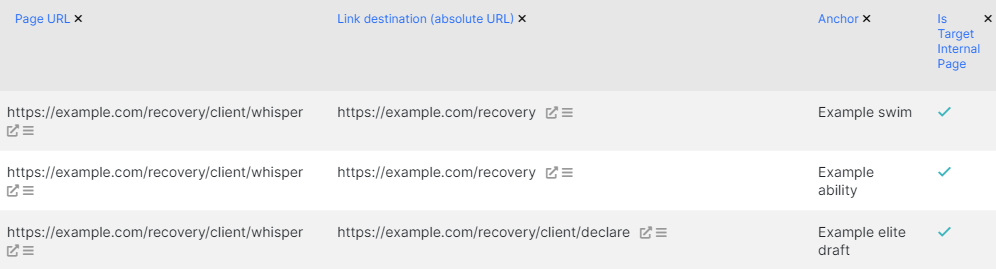

To find broken links:

- go to the crawl results and select the familiar “Links” dataset;

- in the drop-down menu, choose the “Links to non-200 status code pages” data table.

Here, you will find a list of all links pointing to pages that returned a response code other than 200. Replace these links to ensure a smooth user experience.

The “Page URL” field provides the link to the page where the broken link was found.

“Link destination (absolute URL)” represents the URL that returns a non-200 status code.
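
If you work with the link list outside the interface, grouping broken links by the page that contains them gives a convenient fix list. This is a minimal sketch; the keys `page_url`, `destination`, and `status` are hypothetical names loosely mirroring the two fields described above, not the actual column names of an export.

```python
from collections import defaultdict


def broken_links_by_page(links):
    """Group links to non-200 destinations by the page that contains them."""
    grouped = defaultdict(list)
    for link in links:
        if link["status"] != 200:
            grouped[link["page_url"]].append((link["destination"], link["status"]))
    return dict(grouped)


# Hypothetical link data.
links = [
    {"page_url": "/", "destination": "/pricing", "status": 200},
    {"page_url": "/", "destination": "/old-pricing", "status": 301},
    {"page_url": "/blog", "destination": "/deleted-post", "status": 404},
    {"page_url": "/blog", "destination": "/retired", "status": 410},
]
print(broken_links_by_page(links))
```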

Analyzing headings

Headings, such as H1, H2, and others, serve to emphasize the main content of a page and divide it into sections based on importance. The H1 heading should contain the most critical information, followed by H2 for less important parts, and so on. Properly structured headings improve information perception for users and assist bots in understanding the subject and structure of the page.

To ensure heading effectiveness, check all headings to avoid duplicates. Also, verify that each heading contains relevant and understandable information.

The crawl results provide the necessary data on the “Duplication” dashboard.

Analyze non-unique, similar, and duplicate headings. The charts present clickable sections that lead to data tables with lists of pages encountering heading issues.
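
Exact duplicates are the easiest heading issue to reproduce on your own data. The sketch below groups pages by their H1 text after normalizing case and whitespace; it does not cover similar headings, and the function name and input format (a dictionary of URL to H1 text) are assumptions for illustration.

```python
from collections import defaultdict


def duplicate_headings(h1_by_url):
    """Return normalized headings that are used on more than one page."""
    pages_by_heading = defaultdict(list)
    for url, heading in h1_by_url.items():
        # Lowercase and collapse whitespace so trivial differences are ignored.
        key = " ".join(heading.lower().split())
        pages_by_heading[key].append(url)
    return {
        heading: urls
        for heading, urls in pages_by_heading.items()
        if len(urls) > 1
    }


# Hypothetical H1 values taken from a crawl.
h1_by_url = {
    "/a": "SEO Crawler",
    "/b": "seo  crawler ",
    "/c": "Log Analyzer",
}
print(duplicate_headings(h1_by_url))
```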

Examining XML sitemaps

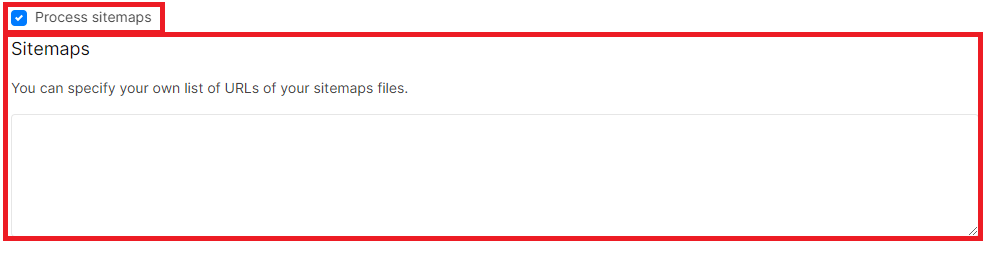

If you utilize plugins to generate sitemaps, it is crucial to carefully review the XML sitemaps. Our experience has shown that such sitemaps often include outdated, blocked, or even deleted links. To address this, activate the “Process sitemaps” checkbox and enter the sitemap links in the corresponding field under “Advanced settings” when configuring the crawl.

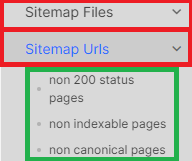

After the crawl is complete, navigate to the “Sitemap Files” and “Sitemap URLs” data tables to identify broken links and non-indexable links within your sitemaps.

If your sitemaps contain numerous such URLs, it is advisable to reconsider using them; in some cases, it is better to remove the sitemaps altogether. Otherwise, bots will expend their crawl budget on crawling broken pages, significantly reducing the crawl frequency of your website. Bots prioritize crawling websites with relevant pages that return a 200 status response code, aiming to provide users with high-quality results.
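
A sitemap can also be cross-checked against known status codes with a short script. The sketch below parses a standard `urlset` sitemap with Python's built-in XML parser and lists URLs that did not return 200. The `crawl_status` dictionary (URL to status code) is an assumption; a status of `None` means the URL was not found in that dictionary. Sitemap index files are not handled.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return all <loc> values from a urlset sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text]


def problem_urls(xml_text, crawl_status):
    """Return (url, status) for sitemap URLs that did not return 200."""
    return [
        (url, crawl_status.get(url))
        for url in sitemap_urls(xml_text)
        if crawl_status.get(url) != 200
    ]


# Hypothetical sitemap and crawl results.
sitemap = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/</loc></url>"
    "<url><loc>https://example.com/old</loc></url>"
    "<url><loc>https://example.com/new</loc></url>"
    "</urlset>"
)
crawl_status = {"https://example.com/": 200, "https://example.com/old": 404}
print(problem_urls(sitemap, crawl_status))
```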

Conclusion

These are not the only checks required: a small website needs the same level of technical audit as a large one. However, starting with the ten checks above can provide a solid foundation for improving your website’s technical SEO performance.