How to calculate the crawl budget of your website with JetOctopus

Using search engine log analysis, you can determine how often search engines crawl your website, how many pages they visit during a given period, and how long it takes them to crawl the entire website to discover new pages and update content on existing ones. Together, these measurements are usually referred to as your website's crawl budget. In this article, we will show you how to calculate the crawl budget of your website using the JetOctopus log analyzer.

What is a crawl budget?

The term “crawl budget” does not have a clear definition. It generally describes how much crawling a search engine is able and willing to do on a given website. Optimizing the crawl budget matters most for large websites, because search engines need far more resources to crawl them.

According to Google documentation, the crawl budget is the total amount of time and resources that search engines spend on your website. We cannot measure these directly, so we use other metrics: the number of crawled pages per period, the number of megabytes downloaded, the number of requests, the frequency of JavaScript execution, and so on. All of these processes can be tracked in the logs.
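As an illustration of how such metrics can be derived from raw logs, here is a minimal Python sketch that counts Googlebot requests, distinct URLs, and downloaded megabytes. It assumes your web server writes the widely used “combined” access log format, and it identifies Googlebot by its user-agent string only; because user agents can be spoofed, a real analysis should also verify bots (for example, with a reverse DNS lookup). The function name `googlebot_stats` is hypothetical.

```python
import re
from collections import Counter

# Assumed "combined" log format:
# ip ident user [time] "METHOD path protocol" status bytes "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<bytes>\d+|-) '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_stats(lines):
    """Return request count, distinct URLs and megabytes for Googlebot hits."""
    requests = 0
    total_bytes = 0
    pages = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None or "Googlebot" not in match["agent"]:
            continue  # skip malformed lines and non-Googlebot traffic
        requests += 1
        pages[match["path"]] += 1
        if match["bytes"] != "-":  # "-" means no response body was logged
            total_bytes += int(match["bytes"])
    return {
        "requests": requests,
        "unique_pages": len(pages),
        "megabytes": total_bytes / 1_000_000,
    }
```

Lines that do not match the pattern are silently skipped, which keeps the sketch short but can hide log format problems.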

Google, in turn, adjusts the crawl budget based on several factors.

Capacity of your website – if your website returns 5xx status codes when search engines visit it, meaning the web server cannot cope with the load, search engines will crawl your website less.

The popularity of your website among users – if users search for your website and choose it more often in the search results, Google perceives this as a positive signal and increases your website's crawl budget.

Technical characteristics – search engines also take the health of the website into account. If there are many 404 URLs, the crawl budget is likely to decrease, because 404 pages are of no use to either search engines or people.

Why calculate a crawl budget

The Internet is extremely large and contains an enormous number of websites, so crawling all of it would take a tremendous amount of time and resources. That is why search engines, including Google, prioritize crawling the pages and websites that are most valuable to their users.

By analyzing the crawl budget of your website, you can discover which pages are most valuable to search engines and estimate how quickly search engines will update your content in the SERP. Based on this data, you can optimize the crawl budget.
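For a rough idea of how long a full crawl takes, you can divide the number of indexable pages by the number of distinct pages a bot crawls per day. The Python sketch below does this with hypothetical numbers; the function name is also hypothetical. Treat the result as a lower bound, because real crawls also revisit pages and spend visits on non-indexable URLs.

```python
import math


def days_to_crawl_site(indexable_pages: int, unique_pages_per_day: float) -> int:
    """Rough lower bound on the days a bot needs to visit every indexable page once.

    Assumes every crawled page is a distinct indexable URL, which real crawls never achieve.
    """
    if unique_pages_per_day <= 0:
        raise ValueError("unique_pages_per_day must be positive")
    return math.ceil(indexable_pages / unique_pages_per_day)


# Hypothetical site: 500,000 indexable pages, 20,000 distinct pages crawled per day
print(days_to_crawl_site(500_000, 20_000))  # 25
```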

If Google crawls non-unique pages, it will struggle to determine the value and main content of your website. For example, suppose your web server can handle 100,000 requests per hour without returning 5xx status codes, and Google uses half of that capacity to crawl duplicate pages or URLs with GET parameters. Your web server (and Google) will then not have enough resources left to crawl new or updated indexable pages. As a result, only a few of your new pages may appear in the SERP, and you lose potential traffic.
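The arithmetic behind this example is simple, but writing it out makes the trade-off explicit. A minimal sketch, using the 100,000 requests per hour from the example and treating “half of your web server resources” as 50% of that capacity; the function name is hypothetical:

```python
def remaining_capacity(max_requests_per_hour: int, wasted_share: float) -> int:
    """Requests per hour left for everything else after wasted crawling."""
    return round(max_requests_per_hour * (1 - wasted_share))


# Half of 100,000 requests/hour spent on duplicates and parameterized URLs
print(remaining_capacity(100_000, 0.5))  # 50000
```

In other words, every duplicate URL that Googlebot requests consumes capacity that could have gone to a new or updated indexable page.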

There is one more important point. If Google crawls relevant and unique pages but still does not reach all indexable URLs on your website, you may need to upgrade your web servers.

Analyzing the crawl budget therefore lets you influence which pages the search robot crawls. You can block crawling of certain pages with the robots.txt file, improve the site’s loading speed, consolidate duplicate URLs, increase server resources, and so on.
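To check how a set of robots.txt rules applies to specific URLs before deploying it, Python’s standard `urllib.robotparser` module is enough for simple cases. The rules and URLs below are hypothetical. The module matches rules as plain path prefixes, so it does not reproduce every Googlebot behavior (such as wildcard patterns); treat it as a quick sanity check, not as a Googlebot simulator.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks internal search results and the cart
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in (
    "https://example.com/products/shoes",
    "https://example.com/search?q=shoes",
    "https://example.com/cart",
):
    # can_fetch() applies the rules that match the given user agent
    print(url, parser.can_fetch("Googlebot", url))
```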

How to calculate your crawl budget

Before we get down to the math, we want to emphasize that the crawl budget is not a fixed number. It cannot be calculated precisely, because many factors affect it. For example, during a sale on your website the crawl budget may decrease, because heavy user load reduces the capacity of the web server. Other factors, including some on Google’s side, also play a role.

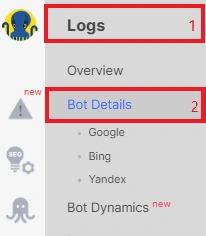

The best source for calculating the crawl budget is search engine logs. Therefore, we recommend integrating logs into JetOctopus to get all the information you need about the robots’ behavior. If you have already integrated logs, go to the “Logs” – “Bot Details” section.

Next, choose the desired period, search engine and domain.

Please note that the “Googlebot Search” bot includes all Google search robots, including Googlebot Desktop and Googlebot Smartphone. We also recommend starting the crawl budget calculation with data for all domains and subfolders, because the crawl budget is shared across the entire domain. For example, Googlebot may start crawling pages on the https://example.com domain more often while crawling the https://de.example.com subdomain less, yet the total number of visits and crawled pages stays the same.

Once you’ve calculated your total crawl budget, you can drill down to different domains, segments, and page types.
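If you also want to reproduce this split outside the dashboard, you can group bot hits by hostname. A minimal sketch, assuming you already have the list of URLs Googlebot requested (for example, extracted from your own access logs); the function name is hypothetical:

```python
from collections import Counter
from urllib.parse import urlsplit


def visits_by_host(visited_urls):
    """Count bot visits per host and in total from a list of crawled URLs."""
    per_host = Counter(urlsplit(url).hostname for url in visited_urls)
    return per_host, sum(per_host.values())
```

Comparing `per_host` across periods shows whether visits are shifting between hosts while the total stays flat.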

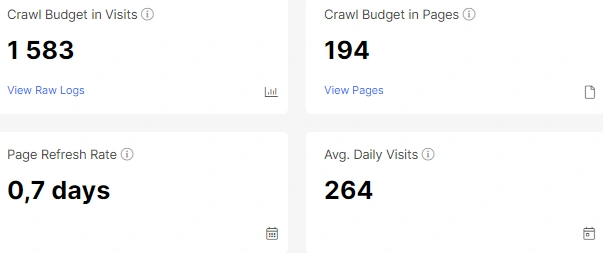

Once you have applied these settings, the “Bot Details” dashboard shows the following data:

- Crawl Budget in Visits – how many times search engines visited your website;

- Crawl Budget in Pages – how many pages crawlers visited during the selected period (this is lower than the number of visits, because crawlers often revisit the most valuable pages);

- Page Refresh Rate – how often the search robot rescans pages; this is the average time between the robot’s last two visits to a page;

- Avg. Daily Visits – the average number of robot visits per day.
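To make these definitions concrete, here is a minimal sketch of how comparable numbers could be derived from raw bot hits. It is not JetOctopus’s implementation; it assumes you have hypothetical (url, timestamp) pairs for one bot over a known number of days, and it follows the definitions above, for example computing the refresh rate as the gap between a page’s last two visits, averaged across pages.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def crawl_budget_metrics(hits: list[tuple[str, datetime]], period_days: int) -> dict:
    """Approximate the four dashboard metrics from (url, timestamp) bot hits."""
    visits_per_url = defaultdict(list)
    for url, ts in hits:
        visits_per_url[url].append(ts)

    # Gap between each page's last two visits, for pages visited at least twice
    gaps_hours = []
    for timestamps in visits_per_url.values():
        if len(timestamps) >= 2:
            previous, last = sorted(timestamps)[-2:]
            gaps_hours.append((last - previous).total_seconds() / 3600)

    return {
        "visits": len(hits),  # Crawl Budget in Visits
        "pages": len(visits_per_url),  # Crawl Budget in Pages (distinct URLs)
        "avg_refresh_hours": mean(gaps_hours) if gaps_hours else None,  # Page Refresh Rate
        "avg_daily_visits": len(hits) / period_days,  # Avg. Daily Visits
    }
```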

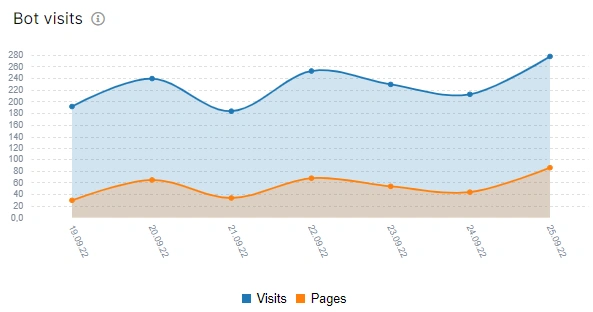

Pay attention to the “Bot visits” chart: the number of visits and the number of visited pages will usually differ, and both vary from day to day. However, a sharp change in this chart should alarm you.
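One simple way to define a “sharp change” is to compare each day with the average of the preceding days. The sketch below flags days whose visits rise or fall by more than 50% against the previous seven days; both the window and the threshold are hypothetical defaults that you would tune to your own traffic.

```python
from statistics import mean


def flag_sharp_changes(daily_visits, window=7, threshold=0.5):
    """Return indexes of days that deviate from the trailing average by more than `threshold`."""
    flagged = []
    for day in range(window, len(daily_visits)):
        baseline = mean(daily_visits[day - window:day])
        # Skip zero baselines to avoid dividing by zero
        if baseline and abs(daily_visits[day] - baseline) / baseline > threshold:
            flagged.append(day)
    return flagged
```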

After the overall analysis, use segmentation to count how many visits are spent on non-indexable and/or duplicate pages.
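As a rough sketch of what such a segment measures, the function below computes the share of bot visits that went to “wasted” URLs. The segmentation rules are hypothetical: a URL counts as wasted if it has GET parameters or appears in a set of URLs you already know are non-indexable (for example, from a site crawl).

```python
from urllib.parse import urlsplit


def wasted_visit_share(visited_urls, non_indexable):
    """Share of bot visits spent on parameterized or known non-indexable URLs."""
    if not visited_urls:
        return 0.0
    wasted = sum(
        1 for url in visited_urls
        if urlsplit(url).query or url in non_indexable
    )
    return wasted / len(visited_urls)
```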