Understanding the “Bot Dynamics by Directory” report: a guide to usage and interpretation

The “Bot Dynamics by Directory” report is an invaluable tool for analyzing the distribution of your crawl budget and gaining insights into your website’s bot activity. In this comprehensive guide, we will explore how to access and interpret this report to optimize your website’s crawling process.

How to find information about bot activity by URL directory

Analyzing which pages are crawled by search bots provides valuable information for optimizing your website’s performance. By understanding the types of pages that receive the most bot visits, you can assess their effectiveness and identify areas for technical optimization to improve their rankings.

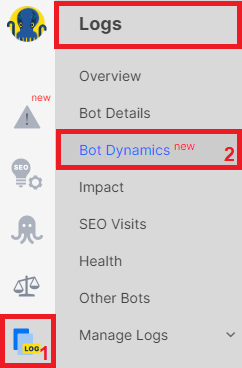

To access general information and dashboards related to bot activity by URL directory, navigate to the “Logs” section in JetOctopus and proceed to the “Bot Dynamics” dashboard.

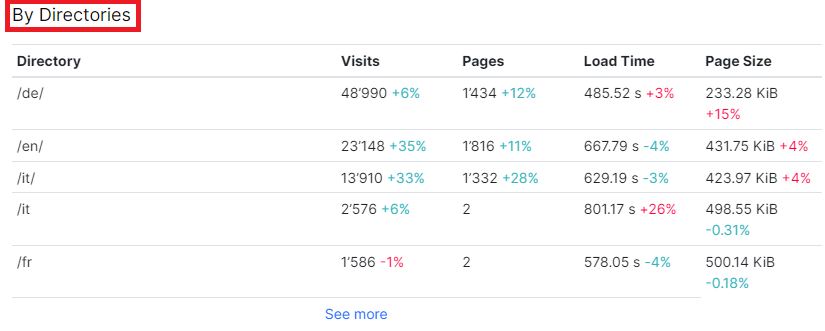

Scroll down to the “By Directories” table, which provides a list of directories visited by search bots with the number of visits, pages, and average load time. It’s important to note that directories are calculated based on the first URL slug.
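To illustrate what "calculated based on the first URL slug" means, here is a minimal Python sketch. It is not JetOctopus's actual implementation: the function name `first_directory`, the sample URLs, and the handling of empty paths are assumptions made for the example.

```python
from urllib.parse import urlsplit


def first_directory(url: str) -> str:
    """Return the first path segment as '/segment/', or '/' when the path is empty."""
    # Assumption: empty segments (from leading or doubled slashes) are ignored.
    segments = [part for part in urlsplit(url).path.split("/") if part]
    return f"/{segments[0]}/" if segments else "/"


# Hypothetical URLs, for illustration only.
examples = [
    "https://example.com/offers/senior-editor",
    "https://example.com/category/shoes/red?page=2",
    "https://example.com/",
]
directories = [first_directory(url) for url in examples]
# directories == ["/offers/", "/category/", "/"]
```

Under this grouping, every URL that starts with “/category/” counts toward the same directory, no matter how deep the rest of the path goes.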

Clicking on the “See more” button will reveal a comprehensive list of directories. Detailed data on bot activity within each directory can be found in the “Bot Dynamics by Directory” data table.

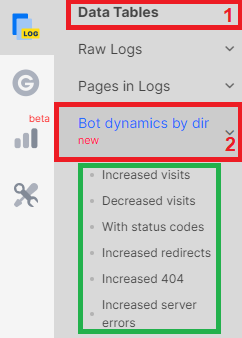

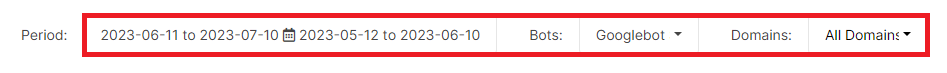

Click on this table and select the desired report from the drop-down menu. You can easily analyze directories that frequently return a 404 status code, directories with increased or decreased bot visits, or any other relevant metrics. Additionally, you can specify the time period, bot type, and domain to refine your analysis.

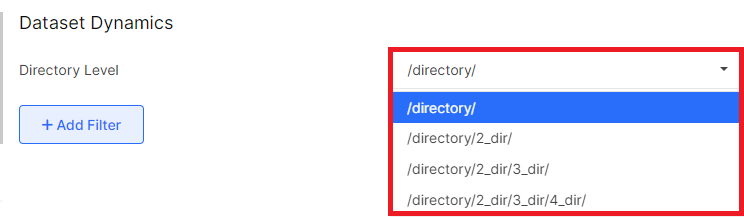

You can also choose the nesting level for analysis. For example, you may analyze directories with up to four nesting levels of URLs. This information can help you understand which types of pages attract the most attention from search engines.
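The idea of a nesting level can be sketched in Python as follows. This is only an illustration under stated assumptions: `directory_at_depth` and the sample `bot_hits` list are hypothetical, and the report itself may cut paths differently (for example, this sketch treats the last path segment as a directory when the depth reaches it).

```python
from collections import Counter
from urllib.parse import urlsplit


def directory_at_depth(url: str, depth: int) -> str:
    """Keep at most `depth` leading path segments and return them as '/a/b/'."""
    if depth < 0:
        raise ValueError("depth must be non-negative")
    segments = [part for part in urlsplit(url).path.split("/") if part]
    kept = segments[:depth]
    return "/" + "/".join(kept) + "/" if kept else "/"


# Hypothetical URLs requested by a search bot, one entry per visit.
bot_hits = [
    "https://example.com/category/shoes/red/item-1",
    "https://example.com/category/shoes/blue/item-2",
    "https://example.com/category/bags/item-3",
    "https://example.com/offers/item-4",
]

# Visits grouped at nesting level 1 and at nesting level 2.
visits_level_1 = Counter(directory_at_depth(url, 1) for url in bot_hits)
visits_level_2 = Counter(directory_at_depth(url, 2) for url in bot_hits)
```

At level 1 all three “/category/” visits fall into one group, while at level 2 they split into “/category/shoes/” and “/category/bags/”, which shows where inside a large directory bots spend their visits.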

For instance, your website may have URLs containing the “/offers/”, “/job-offers/”, or “/category/” slugs. By analyzing the “Bot Dynamics by Directory” table, you can identify which of these page types are crawled by search bots most often. This knowledge can be used for internal linking: place internal links on the pages that bots crawl the most, pointing to the types of pages that are crawled the least. This should increase how often the least-crawled pages are scanned.
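As a rough sketch of this linking idea, the following Python snippet ranks directories by bot visits and picks link sources and link targets. The visit counts are invented for illustration, and `linking_candidates` is a hypothetical helper, not part of JetOctopus.

```python
from typing import Dict, List, Tuple


def linking_candidates(visits: Dict[str, int], n: int = 1) -> Tuple[List[str], List[str]]:
    """Return (most crawled, least crawled) directories, n of each."""
    ranked = sorted(visits.items(), key=lambda item: item[1], reverse=True)
    most_crawled = [directory for directory, _ in ranked[:n]]
    least_crawled = [directory for directory, _ in ranked[-n:]]
    return most_crawled, least_crawled


# Made-up visit counts, as they might be read from the report.
visits_by_directory = {"/offers/": 1200, "/job-offers/": 90, "/category/": 450}

link_from, link_to = linking_candidates(visits_by_directory)
# link_from == ["/offers/"], link_to == ["/job-offers/"]
```

Here, pages under “/offers/” would be candidates for carrying links to pages under “/job-offers/”.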

How to use “Bot Dynamics by Directory” data

The “Bot Dynamics by Directory” report often reveals directories that are not included in your website’s internal linking structure but are still scanned by bots. It’s crucial to monitor these directories, as they can consume a significant portion of your crawl budget.

Additionally, a sudden drop in visit frequency for a specific directory is a warning sign. Decreased bot visits to certain pages may negatively impact their ranking. If you notice an important directory listed in the “Decreased visits” table, conduct a technical analysis to ensure that the URLs in that directory are still accessible for bot scanning and return a 200 status code.
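If you export visit counts for two periods, a drop like this can also be checked with a few lines of Python. This is a sketch under assumptions: the 50% threshold, the function name `decreased_directories`, and the sample numbers are all illustrative, and the “Decreased visits” table may use different criteria.

```python
from typing import Dict


def decreased_directories(
    previous: Dict[str, int], current: Dict[str, int], threshold: float = 0.5
) -> Dict[str, float]:
    """Return directories whose visits fell by at least `threshold` (as a share of the earlier period)."""
    flagged = {}
    for directory, before in previous.items():
        if before == 0:
            continue  # no baseline to compare against
        after = current.get(directory, 0)  # a missing directory counts as zero visits
        drop = (before - after) / before
        if drop >= threshold:
            flagged[directory] = drop
    return flagged


# Made-up bot visit counts for two consecutive periods.
last_week = {"/offers/": 1000, "/category/": 400}
this_week = {"/offers/": 300, "/category/": 380}

dropped = decreased_directories(last_week, this_week)
# Only "/offers/" is flagged: its visits fell by 70%, while "/category/" fell by 5%.
```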

To gain deeper insights, you can combine the visit and bot dynamics data by directory with information from the Google Search Console. This parallel analysis allows you to assess how visits to specific directories correlate with their performance in search engine result pages (SERPs).

Incorporating data from the crawl results can help identify and address technical issues that may hinder bot crawling of URLs within specific directories. Pay special attention to the “Increased redirects,” “Increased 404,” and “Increased server errors” built-in reports. The “Increased server errors” report is particularly important: if certain pages return a 500 status code for two days or more, Google will stop scanning them and soon deindex them. Regularly monitor this data to ensure the number of 500 status codes for relevant directories remains minimal.
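The two-day rule mentioned above can be turned into a simple check on exported log data. The sketch below assumes a hypothetical input format of `(url, day, status)` tuples and flags URLs that returned a 5xx status on at least two consecutive days. It is an illustration, not the logic behind the “Increased server errors” report.

```python
from collections import defaultdict
from datetime import date, timedelta
from typing import Iterable, List, Tuple


def urls_with_persistent_5xx(
    hits: Iterable[Tuple[str, date, int]], min_days: int = 2
) -> List[str]:
    """Return URLs with at least one 5xx response on `min_days` consecutive days."""
    error_days = defaultdict(set)
    for url, day, status in hits:
        if 500 <= status <= 599:
            error_days[url].add(day)

    flagged = []
    for url, days in error_days.items():
        streak, previous = 0, None
        for day in sorted(days):
            consecutive = previous is not None and day - previous == timedelta(days=1)
            streak = streak + 1 if consecutive else 1
            previous = day
            if streak >= min_days:
                flagged.append(url)
                break
    return flagged


# Made-up bot hits: (URL, day of the visit, HTTP status code).
sample_hits = [
    ("/offers/1", date(2024, 5, 1), 500),
    ("/offers/1", date(2024, 5, 2), 503),
    ("/category/a", date(2024, 5, 1), 500),
    ("/category/a", date(2024, 5, 3), 500),
    ("/job-offers/x", date(2024, 5, 1), 200),
]
persistent = urls_with_persistent_5xx(sample_hits)
# persistent == ["/offers/1"]
```

In this sample, “/category/a” is not flagged because its two error days are not adjacent.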

By utilizing the “Bot Dynamics by Directory” report in conjunction with other data sources, you can optimize your website’s crawling process, enhance rankings, and maintain a healthy crawl budget.