How to deal with a sudden drop in Googlebot crawl rate

In this article, we explain what to do if, while analyzing your logs, you notice a sudden drop in crawl frequency and in the number of visits from search engine bots. We cover the most likely causes and how to fix them so that your website stays visible in search.

Check log integration with JetOctopus

The initial step involves confirming that all logs are still being sent to JetOctopus. Occasionally, updates on the product side can alter where logs are recorded and stored, leading to incomplete integration. If you’re confident that all logs are integrated and data aligns with Google Search Console statistics, proceed to explore other causes.

Investigate bot’s IP address blocking

Consider IP address blocking as a reason for an abrupt crawl rate decrease. Protection systems like Cloudflare generally verify and allow search engine bot IP addresses, but a manual block of an IP range or user agent by DevOps engineers or administrators can inadvertently affect Googlebot. Make sure that no search engine IP addresses or Google user agents are blocked.
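If you find an IP address in a firewall block list and want to know whether it really belongs to Googlebot, a reverse DNS lookup followed by a forward lookup is the usual check. Below is a minimal sketch of that idea. The function name `is_verified_googlebot` and the injectable `reverse_lookup` and `forward_lookup` parameters are our own illustration, and the hostname suffixes are an assumption based on what Google publishes for its crawlers, so re-check them against the current documentation. The default forward lookup handles IPv4 only.

```python
import socket

# Hostname suffixes we assume Google uses for its crawlers (verify against Google's docs).
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip, reverse_lookup=None, forward_lookup=None):
    """Return True if `ip` reverse-resolves to a Google hostname that resolves back to `ip`."""
    # Default lookups use the system resolver; they can be replaced for offline testing.
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        hostname = reverse_lookup(ip)
        if not hostname.endswith(GOOGLE_SUFFIXES):
            return False
        # The forward lookup must return the original IP, otherwise the PTR record may be spoofed.
        return ip in forward_lookup(hostname)
    except OSError:
        # Covers socket.herror and socket.gaierror (no PTR record, DNS failure).
        return False
```

Running `is_verified_googlebot(ip)` over the addresses in your block list shows which blocked entries are genuine Googlebot addresses and should be allowed again.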

Check robots.txt file changes

The next thing we recommend checking is whether the robots.txt file has changed. If developers or many other people can edit the robots.txt file, someone may have accidentally blocked search engines from accessing your website. Verify that the robots.txt file has not been changed unexpectedly and that it still allows search engines to crawl all the pages you want crawled.

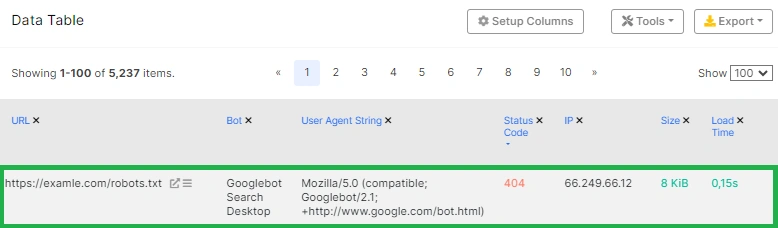
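A quick way to test a robots.txt file against a list of important URLs is Python's standard `urllib.robotparser` module. The sketch below is only an approximation: the helper name `blocked_urls` and the sample rules are ours, and Python's parser does not interpret every rule exactly the way Google does (wildcards in particular), so treat the result as a first check rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for `user_agent`."""
    parser = RobotFileParser()
    # parse() takes the file content as a list of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt that accidentally blocks the whole site.
sample = """User-agent: *
Disallow: /
"""
print(blocked_urls(sample, ["https://example.com/", "https://example.com/products/"]))
```

Comparing the output for the current file with the output for a previous version of the file shows which URLs a recent edit has closed to Googlebot.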

Check robots.txt accessibility

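Ensure the robots.txt file is accessible to bots. How Google reacts depends on the status code the file returns. According to Google's documentation, if robots.txt returns a 5xx error (or 429), Google treats the whole site as temporarily closed to crawling, while a 4xx error other than 429 is treated as if no robots.txt file exists, so your crawl rules are ignored. You can check which status code the robots.txt file returns to bots in the “Raw Logs” data table.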

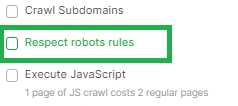
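To interpret the status codes you find in the logs for robots.txt, it helps to map each code to its likely effect. The mapping below reflects our reading of Google's published robots.txt handling and is a simplified sketch, not an official rule set. The function name `robots_status_effect` and the wording of the returned descriptions are ours.

```python
def robots_status_effect(status):
    """Describe how Google is documented to react to a robots.txt HTTP status (simplified)."""
    if 200 <= status < 300:
        return "rules in the file apply"
    if 300 <= status < 400:
        return "redirect is followed for a few hops, then handled like a missing file"
    # 429 is grouped with server errors, so it must be checked before the generic 4xx branch.
    if status == 429 or 500 <= status < 600:
        return "site is treated as temporarily disallowed"
    if 400 <= status < 500:
        return "handled as if no robots.txt exists, so no crawl restrictions apply"
    return "unknown status"


for code in (200, 404, 429, 503):
    print(code, "->", robots_status_effect(code))
```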

Evaluate indexing rules

A significant crawl rate drop might stem from a blanket noindex applied to all pages via the meta robots tag or the X-Robots-Tag HTTP header. If a large-scale change has made all pages non-indexable, Google may reduce its crawl frequency. Verify this manually on a sample of pages, or run a crawl with the “Respect robot rules” checkbox deactivated.

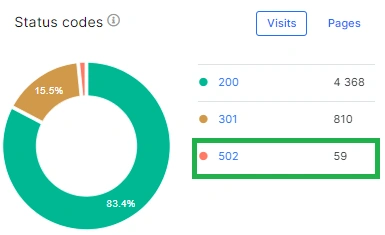
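For a manual spot check, you can test a page's HTML and response headers for a noindex directive. The following is a minimal sketch using only the Python standard library. The names `is_noindex` and `_RobotsMetaParser` are ours, and the check covers just the common cases (`noindex` and `none` in a `robots` or `googlebot` meta tag or in an `X-Robots-Tag` header), so it is not a full implementation of how Google evaluates these directives.

```python
from html.parser import HTMLParser


class _RobotsMetaParser(HTMLParser):
    """Collect the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        if (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            self.directives.append(attrs.get("content") or "")


def _has_noindex(value):
    # Directives are comma-separated; a header may prefix them with "googlebot: ".
    tokens = [token.strip() for token in value.lower().split(",")]
    return any(token.endswith("noindex") or token == "none" for token in tokens)


def is_noindex(html, headers=None):
    """Return True if the meta tags or the X-Robots-Tag header forbid indexing."""
    parser = _RobotsMetaParser()
    parser.feed(html)
    values = list(parser.directives)
    for key, value in (headers or {}).items():
        if key.lower() == "x-robots-tag":
            values.append(value)
    return any(_has_noindex(value) for value in values)
```

If `is_noindex` returns True for every page in a sample taken from different sections of the site, a template-level or server-level change is the likely cause.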

5xx status codes

The next problem that can lead to a sharp drop in Googlebot crawling is a large number of 5xx status codes. If a website has been overloaded and returns many 5xx status codes for several days, Google may suddenly reduce how often it crawls the site. Google's documentation notes that 5xx responses make Googlebot slow down, and that URLs which keep returning 5xx errors for an extended period can eventually be dropped from the index; Google advises against serving such errors for more than about 2 days. Accordingly, if you had a lot of 5xx status codes during the previous 2 days, Google may cut crawling back sharply or, in the worst case, stop crawling your website.

Similarly, if you have a lot of 5xx status codes even for a short period of time, Google may simply reduce the crawl rate so as not to overload your website. As soon as the situation stabilizes, the scanning frequency will increase.

You can check the dynamics of 5xx status codes in the “Health” report on the “Dynamics of problems (bots)” chart.
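If you also want to check raw log files directly, the share of 5xx responses served to Googlebot per day can be computed with a short script. This sketch assumes logs in the common "combined" access log format, with the user agent as the last quoted field. The pattern `LOG_PATTERN` and the function `daily_5xx_share` are our own illustration and will need adjusting for other log formats.

```python
import re
from collections import Counter

# Captures the day, the status code, and the last quoted field (the user agent).
LOG_PATTERN = re.compile(
    r'\[(?P<day>[^:]+):[^\]]+\] "[^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def daily_5xx_share(lines, agent_token="Googlebot"):
    """Return {day: share of 5xx responses} for requests whose user agent contains `agent_token`."""
    hits, errors = Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.search(line.strip())
        if not match or agent_token not in match["agent"]:
            continue  # skip unparsable lines and other user agents
        hits[match["day"]] += 1
        if match["status"].startswith("5"):
            errors[match["day"]] += 1
    return {day: errors[day] / hits[day] for day in hits}
```

A share that jumps shortly before the crawl rate falls is a strong hint that server errors triggered the slowdown. Keep in mind that the user agent string can be faked, so this counts everything that claims to be Googlebot.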

401 and 403 status codes

Another reason for a sharp drop in Googlebot crawling is 401 or 403 status codes. These appear when authentication or authorization has been enabled for all visitors without an exception for bots, or when it has been enabled for bots only. If, for some technical reason, you mistakenly close the website to Googlebot in this way, Googlebot will not be able to crawl your pages and will eventually stop crawling the site altogether.

You can check the status codes received by Googlebot in the logs and see if there are any 401 or 403 status codes. Everything can be done in the “Bot Details” report in the “Status codes” chart.
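When 401 or 403 responses do show up, it is useful to know whether they affect the whole site or only one section. The sketch below groups them by the first path segment. It assumes you have already extracted `(path, status)` pairs for Googlebot requests from your logs, and the function name `auth_errors_by_section` is ours.

```python
from collections import Counter


def auth_errors_by_section(hits):
    """Return {section: (blocked, total)} for sections where Googlebot received 401 or 403.

    `hits` is an iterable of (path, status) pairs for Googlebot requests.
    """
    totals, blocked = Counter(), Counter()
    for path, status in hits:
        # "/account/orders?page=2" -> "/account"; the home page "/" stays "/".
        section = "/" + path.lstrip("/").split("/", 1)[0].split("?", 1)[0]
        totals[section] += 1
        if status in (401, 403):
            blocked[section] += 1
    return {section: (blocked[section], totals[section]) for section in totals if blocked[section]}
```

If every section appears in the result with most of its requests blocked, the cause is probably a site-wide rule. A single affected section points to a local configuration change.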

Overall, “Bot Details” is a very useful dashboard to explore if you’ve experienced a sudden drop in Googlebot crawling of your website.

Conclusions

There can be many reasons why Google or another search engine suddenly stops crawling your website. In our experience, a sudden decrease in crawling quickly leads to lower visibility in SERPs and, as a result, to less organic traffic. Therefore, we recommend setting up alerts so that you learn about a drop in the crawl rate in time.
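The logic behind such an alert can be very simple: compare the latest day's Googlebot visits with the average of the preceding days. The sketch below illustrates the idea only and is not how any particular alerting product works. The function name `crawl_drop_alert` and the default `window` and `threshold` values are our assumptions and should be tuned to your site's normal fluctuations.

```python
from statistics import mean


def crawl_drop_alert(daily_hits, window=7, threshold=0.5):
    """Return True if the latest day's visits are below `threshold` times
    the mean of the previous `window` days.

    `daily_hits` is a list of daily Googlebot visit counts, oldest first.
    """
    if len(daily_hits) < window + 1:
        return False  # not enough history to build a baseline
    baseline = mean(daily_hits[-window - 1:-1])
    return baseline > 0 and daily_hits[-1] < threshold * baseline


# Hypothetical counts: a stable week followed by a sharp drop.
print(crawl_drop_alert([1000, 1100, 950, 1050, 1000, 980, 1020, 300]))
```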