How to recrawl pages that failed to load because of a timeout

When configuring a crawl, you can set a custom timeout. However, this setting is only necessary if you want a very gentle, slow crawl. Otherwise, the standard 10-second timeout applies. Most browsers also use a 10-second timeout. This means that the crawler (and clients’ browsers) will wait no more than 10 seconds when accessing a page. If the page does not respond within 10 seconds, JetOctopus stops waiting and moves on to scan other pages. As a result, the crawl results will not contain the title, description, or other data for URLs that timed out. That’s why we recommend recrawling the URLs that timed out.

Where to find pages with timeout in crawl results

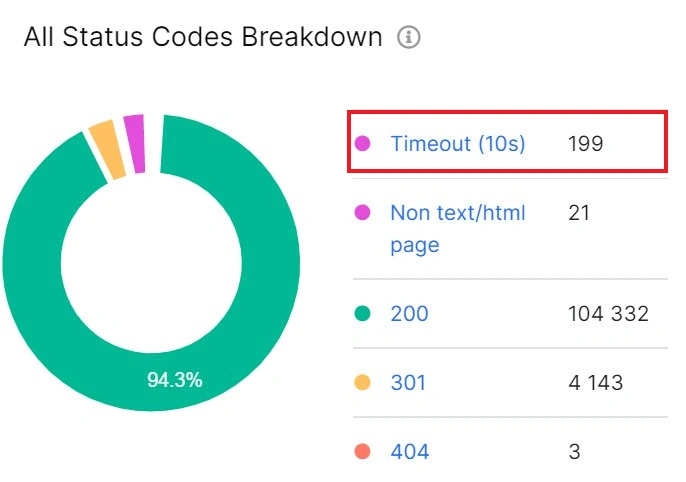

You can find all pages with a timeout in the crawl results under “Technical” – “Status codes”.

The “All Status Codes Breakdown” pie chart shows the ratio of all status codes, including timed-out URLs. Clicking on “Timeout (10s)” takes you to a data table with a complete list of pages that returned nothing in response to the crawler’s request.

How to recrawl URLs with a timeout

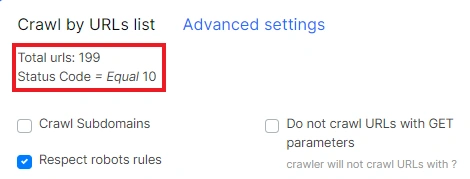

Go to the data table – “Pages”. You can also jump to the filtered data directly from the chart (see above). Next, click on the “+Add Filter” button and select “Status Code” – “= Equal” – “10”.

Why 10? Because a standard browser waits 10 seconds for a response from a page. If there is no response within 10 seconds, the browser terminates the connection. If you configured a custom timeout in the crawl settings, this data table will show pages with the timeout you configured.
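If you work with the crawl results outside the interface, the same filter can be sketched in a few lines of Python. This is only an illustration, not a JetOctopus feature: the article does not describe an export format, so the CSV layout and the column names `url` and `status_code` are assumptions, and the sample rows are made up.

```python
import csv
import io

# Hypothetical column names; adjust them to match your own data.
URL_COLUMN = "url"
STATUS_COLUMN = "status_code"


def timed_out_urls(csv_text, timeout_value="10"):
    """Return the URLs whose status column equals the timeout value."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        row[URL_COLUMN]
        for row in reader
        if row[STATUS_COLUMN].strip() == str(timeout_value)
    ]


# Made-up sample rows, for illustration only.
SAMPLE = """url,status_code
https://example.com/,200
https://example.com/slow-page,10
https://example.com/missing,404
https://example.com/another-slow-page,10
"""

print(timed_out_urls(SAMPLE))
```

If the crawl ran with a custom timeout, pass that value as `timeout_value` instead of the default `"10"`.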

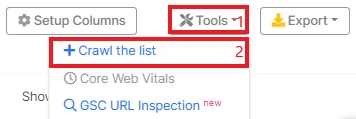

Next, use additional filters if needed. Then, in the upper right corner above the data table, click the “Tools” button and select “+Crawl the list”.

You will then be taken to the crawl settings page with a saved list of the timed-out URLs.

Configure and run the crawl. If you suspect that your website is responding slowly but you still want crawl results, you can set a longer timeout: enter the desired number of seconds in the “Timeout” field in “Advanced Settings”.

Note that if the list of pages is very large and you set a long timeout, the crawl may take longer than usual.
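To get a feel for how much longer, here is a rough worst-case estimate, assuming every URL in the list times out again. The function name and the `parallel_requests` parameter are illustrative assumptions. They do not describe how JetOctopus schedules requests, and the batch model is a simplification.

```python
import math


def worst_case_wait_seconds(url_count, timeout_seconds, parallel_requests=1):
    """Upper bound on time spent waiting if every URL times out.

    Simplified model (an assumption): URLs are fetched in batches of
    `parallel_requests`, and each batch waits for the full timeout.
    """
    if parallel_requests < 1:
        raise ValueError("parallel_requests must be at least 1")
    batches = math.ceil(url_count / parallel_requests)
    return batches * timeout_seconds


# 5,000 URLs, a 30-second timeout, 10 requests at a time:
# 500 batches * 30 s = 15,000 s, a little over 4 hours.
seconds = worst_case_wait_seconds(5000, 30, parallel_requests=10)
print(f"{seconds} s, about {seconds / 3600:.1f} h")
```

With the standard 10-second timeout, the same list would take at most a third of that time, which is why a long timeout matters most on large lists.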

You may also be interested in: How to set up a crawl.

Why URLs with a timeout need to be recrawled

Pages with a timeout are inaccessible to both users and search bots. This can happen to any website when there are many requests at the same time or when the server has technical problems. Usually, these problems are temporary.

A recrawl lets you rescan the pages that timed out and get all the data needed for analysis: indexing rules, metadata, content, and technical information.

However, if the recrawled URLs time out again, this may indicate a problem with those specific pages. In that case, users and search bots cannot get the content of these pages, and you need to fix the problem as soon as possible.
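To spot-check a handful of URLs by hand, the same idea (retry with a longer timeout, then separate temporary from persistent timeouts) can be sketched with Python's standard library. This is a minimal illustration and not how JetOctopus works internally. The names `fetch_status` and `recheck` and the 30-second value are assumptions chosen for the example.

```python
import socket
import urllib.error
import urllib.request

TIMEOUT = "timeout"


def fetch_status(url, timeout):
    """Return the HTTP status code, or TIMEOUT if no response arrives in time."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # The server answered, just with an error status (404, 500, ...).
        return err.code
    except (socket.timeout, TimeoutError):
        return TIMEOUT
    except urllib.error.URLError as err:
        # Connection-phase timeouts arrive wrapped in URLError.
        if isinstance(err.reason, (socket.timeout, TimeoutError)):
            return TIMEOUT
        raise


def recheck(urls, timeout=30.0, fetch=fetch_status):
    """Split URLs into those that now respond and those that still time out."""
    recovered, still_timing_out = {}, []
    for url in urls:
        status = fetch(url, timeout)
        if status == TIMEOUT:
            still_timing_out.append(url)
        else:
            recovered[url] = status
    return recovered, still_timing_out


# Offline demonstration with a stub instead of real requests (made-up URLs).
stub_results = {"https://example.com/a": 200, "https://example.com/b": TIMEOUT}
recovered, persistent = recheck(stub_results, fetch=lambda url, t: stub_results[url])
print(recovered)
print(persistent)
```

URLs that end up in the second list even with the longer timeout are the ones worth investigating first.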