How to use Include and Exclude settings when configuring a crawl

Use the Include and Exclude feature to keep JetOctopus from scanning pages you don't need. We recommend this option when you want to scan only one or a few types of pages, or only part of a large website.

Read about other ways to scan a large website here: How to crawl large websites.

What you need to know when configuring Include and Exclude rules

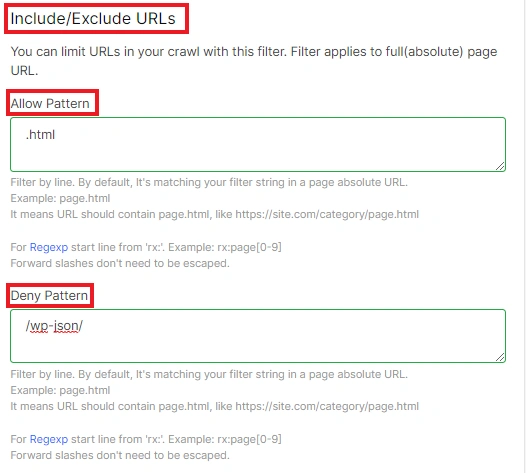

Please note that if you use an “Allow Pattern” rule, only the pages that match the rule will be scanned. If you use a “Deny Pattern” rule, all URLs will be scanned except those that match the rule.
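To illustrate the two modes, here is a minimal Python sketch. It assumes that a plain rule matches when the URL contains the pattern (a case-sensitive substring check). This is a model of the behavior described above, not JetOctopus's own code, and the function names are hypothetical.

```python
# Assumed model: a plain rule matches when the URL contains the pattern.

def crawl_with_allow(urls: list[str], pattern: str) -> list[str]:
    """Allow Pattern: keep only the URLs that contain the pattern."""
    return [u for u in urls if pattern in u]


def crawl_with_deny(urls: list[str], pattern: str) -> list[str]:
    """Deny Pattern: keep every URL except those that contain the pattern."""
    return [u for u in urls if pattern not in u]


urls = [
    "https://example.com/blog/post-1",
    "https://example.com/shop/item-1",
    "https://example.com/blog/post-2",
]

print(crawl_with_allow(urls, "/blog/"))  # only the two /blog/ URLs
print(crawl_with_deny(urls, "/blog/"))   # only the /shop/ URL
```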

There is one important point to keep in mind when using the “Allow Pattern” option: if the crawler does not find any URL matching the rule on the start page (the URL you entered in the “URL” field in “Basic Settings”), the crawl will stop.

Example: your start URL is https://example.com, and you want to scan all URLs that contain .html. If the code of the https://example.com page contains no <a href=> element linking to a URL with .html, the crawler has nowhere to go and cannot continue scanning.
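You can reproduce this situation locally with a Python sketch that uses only the standard library. It collects the href of every <a> element on the start page, resolves each link to an absolute URL, and keeps the links that contain the Allow Pattern. An empty result means there is nothing to crawl next. The HTML snippet, the function names, and the substring matching are illustrative assumptions, not JetOctopus internals.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> element it sees."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def next_urls(start_url: str, html: str, allow_pattern: str) -> list[str]:
    """Return the start page's links that contain the Allow Pattern (assumed substring match)."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    # Resolve relative hrefs against the start URL before matching
    absolute = [urljoin(start_url, href) for href in parser.hrefs]
    return [url for url in absolute if allow_pattern in url]


# Hypothetical start page: none of its links contain ".html"
start_page = '<a href="/about">About</a> <a href="/contacts">Contacts</a>'
queue = next_urls("https://example.com", start_page, ".html")
if not queue:
    print("No link on the start page matches the Allow Pattern, so the crawl stops.")
```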

Additionally, “Allow Pattern” and “Deny Pattern” are case-sensitive. Therefore, if you enter “CATEGORY” in “Deny Pattern”, JetOctopus will still scan pages that contain “category” (lowercase) in the URL.
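A quick way to see the effect is to model the Deny Pattern as a case-sensitive substring check in Python. The is_denied helper below is hypothetical and only mirrors the behavior described above.

```python
def is_denied(url: str, deny_pattern: str) -> bool:
    """Assumed model of a Deny Pattern: a case-sensitive substring check."""
    return deny_pattern in url


url = "https://example.com/category/apples"
print(is_denied(url, "CATEGORY"))  # False: the page is still scanned
print(is_denied(url, "category"))  # True: the page is excluded
```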

Note also that the rules are applied to full (absolute) URLs. If an <a href=> element contains a relative URL without a domain or protocol, JetOctopus adds the current domain to it before applying the rules.
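Python's standard urllib.parse.urljoin performs this kind of resolution, combining the URL of the current page with a relative href. The sketch below assumes that JetOctopus resolves links in a comparable way and that rules are substring checks, so a pattern that includes the domain also matches links written as relative URLs. The page URL and hrefs are made up.

```python
from urllib.parse import urljoin

# Hypothetical page being crawled
page_url = "https://example.com/category/apples"

# Relative hrefs as they might appear in <a href=...> elements on that page
hrefs = ["/category/pears", "plums", "//example.com/about"]

# Resolve each href against the page URL, as a browser would
absolute = [urljoin(page_url, href) for href in hrefs]
print(absolute)
# ['https://example.com/category/pears', 'https://example.com/category/plums', 'https://example.com/about']

# A pattern that includes the domain now matches links written as relative URLs
pattern = "https://example.com/category/"
matching = [url for url in absolute if pattern in url]
print(matching)
```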

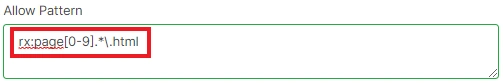

You can use multiple rules, including regular expressions. To use a regular expression, start the line with ‘rx:’. Forward slashes do not need to be escaped in regular expressions. Enter each rule on a separate line, without additional characters between lines. JetOctopus combines multiple rules with “either-or” logic, not “both-and”: a URL matches if it matches at least one of the rules.
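The sketch below models a multi-line rule field in Python. Every non-empty line is one rule, lines starting with rx: are treated as regular expressions searched anywhere in the URL, other lines are case-sensitive substring checks, and a URL matches if any rule matches. These matching details are assumptions for illustration. Note that Python's re module does not need forward slashes escaped either.

```python
import re


def matches_any(url: str, rules_text: str) -> bool:
    """Return True if the URL matches at least one rule ("either-or" logic).

    Assumed model: each non-empty line is one rule; lines starting with 'rx:'
    are regular expressions searched anywhere in the URL, and all other lines
    are case-sensitive substring checks.
    """
    for rule in rules_text.splitlines():
        if not rule:
            continue  # skip blank lines
        if rule.startswith("rx:"):
            # Forward slashes in the pattern need no escaping
            if re.search(rule[3:], url):
                return True
        elif rule in url:
            return True
    return False


# Two rules, one per line: a plain pattern and a regex
rules = """/blog/
rx:/product/[0-9]+$"""

print(matches_any("https://example.com/blog/news", rules))          # True
print(matches_any("https://example.com/product/42", rules))         # True
print(matches_any("https://example.com/product/red-shoes", rules))  # False
```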

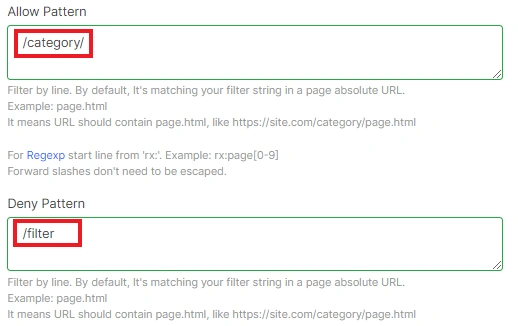

“Deny Pattern” takes precedence over “Allow Pattern”. Therefore, if you allow scanning of URLs containing /category/ but deny scanning of URLs containing /category/how-to, pages such as https://example.com/category/how-to-help-animals will not appear in the results.
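The precedence rule can be expressed as a small decision function. In this Python sketch, which assumes substring matching and uses a hypothetical should_crawl helper, the Deny Pattern is checked first, so a denied URL is skipped even when it also matches an Allow Pattern. With no Allow Pattern, every URL that is not denied is crawled.

```python
def should_crawl(url: str, allow: list[str], deny: list[str]) -> bool:
    """Decide whether a URL is scanned; Deny Pattern is checked first, so it wins."""
    if any(pattern in url for pattern in deny):
        return False
    # With no Allow Pattern, every URL that is not denied is scanned
    if not allow:
        return True
    return any(pattern in url for pattern in allow)


allow = ["/category/"]
deny = ["/category/how-to"]

print(should_crawl("https://example.com/category/apples", allow, deny))               # True
print(should_crawl("https://example.com/category/how-to-help-animals", allow, deny))  # False
```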

Examples of using Include/Exclude URLs

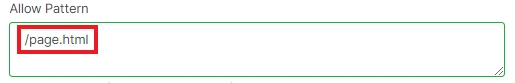

1. To scan a specific group of URLs, use the part of the URL they have in common. For example, enter /page.html in “Allow Pattern” to crawl all pages whose URL contains it, such as https://example.com/category/page.html.

2. Use /category/ in “Allow Pattern” and /filter in “Deny Pattern” to scan all category pages except filter pages. For the crawl to start, make sure that the start URL contains a link to a page that matches the Allow Pattern. The results will include pages such as https://example.com/category/apples, but not pages such as https://example.com/category/apples/filter:red.

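Be careful with slashes when using “Allow Pattern” and “Deny Pattern”. For example, the pattern /category/ (with a trailing slash) does not match the URL https://example.com/category, which has no trailing slash.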

3. If you know that all article URLs on your website consist only of letters, while all product URLs contain numeric IDs, you can use regex. Enter rx:page[0-9].*\.html to scan all product pages with URLs like https://example.com/page1234.html.
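Before starting a crawl, it can help to check rules like the ones in these examples against a few URLs you know. The following Python sketch assumes that plain rules are case-sensitive substring checks, that rx: rules are regex searches over the full URL, and that Deny Pattern takes precedence. The helper names and sample URLs are hypothetical.

```python
import re


def rule_matches(rule: str, url: str) -> bool:
    """Assumed model: 'rx:' rules are regex searches, other rules are substring checks."""
    if rule.startswith("rx:"):
        return re.search(rule[3:], url) is not None
    return rule in url


def should_crawl(url: str, allow: list[str], deny: list[str]) -> bool:
    """Deny Pattern wins; with no Allow Pattern, every URL that is not denied is scanned."""
    if any(rule_matches(rule, url) for rule in deny):
        return False
    return not allow or any(rule_matches(rule, url) for rule in allow)


# Example 1: the common part of the URL
print(should_crawl("https://example.com/category/page.html", ["/page.html"], []))  # True

# Example 2: category pages without filter pages
allow, deny = ["/category/"], ["/filter"]
print(should_crawl("https://example.com/category/apples", allow, deny))             # True
print(should_crawl("https://example.com/category/apples/filter:red", allow, deny))  # False

# Slashes matter: "/category/" does not match a URL with no trailing slash
print(should_crawl("https://example.com/category", allow, deny))  # False

# Example 3: product pages with numeric IDs
products = [r"rx:page[0-9].*\.html"]
print(should_crawl("https://example.com/page1234.html", products, []))    # True
print(should_crawl("https://example.com/page-about.html", products, []))  # False
```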

Regex lets you target large parts of a website precisely. For example, you can easily exclude URLs with GET parameters, filters, sorting, etc. from the crawl, even if they are open for indexing. To test regex rules, you can use https://regex101.com; just remember that forward slashes do not need to be escaped in JetOctopus rules.
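As an example of excluding sorting and filtering URLs, the Python sketch below tests a hypothetical Deny Pattern, rx:[?&](sort|filter)=, against a few sample URLs. It assumes that an rx: rule is applied as a regular-expression search over the full URL. The parameter names sort and filter are placeholders for whatever your site actually uses.

```python
import re

# Hypothetical Deny Pattern: skip URLs whose query string sorts or filters results
DENY_RULE = r"rx:[?&](sort|filter)="


def is_denied(url: str, rule: str) -> bool:
    """Assumed model: an 'rx:' rule is a regex search over the full URL."""
    return re.search(rule.removeprefix("rx:"), url) is not None


for url in [
    "https://example.com/category/apples",
    "https://example.com/category/apples?sort=price",
    "https://example.com/category/apples?page=2&filter=red",
    "https://example.com/category/apples?page=2",
]:
    print(url, "->", "excluded" if is_denied(url, DENY_RULE) else "scanned")
```

With this rule, paginated URLs such as ?page=2 are still scanned, while sorted and filtered variants are excluded.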