How to crawl large websites

In this article, we explain how to crawl large websites with millions of pages. With the right settings, you can crawl your website efficiently and get reliable results. A full crawl is the best option, because it lets you apply the results to your entire website.

We strongly recommend crawling the entire website. To learn why, read the article “Why Is Partial Crawling Bad for Big Websites? How Does It Impact SEO?”.

With a partial crawl, you cannot fully evaluate your internal linking. As a result, you may not notice that search engines are failing to crawl the pages with the greatest potential for organic traffic.

How to crawl a large website to get accurate data and save your crawl limits

There are different approaches to crawling a large website, such as simply limiting the number of pages. However, if you can’t crawl the entire website, we recommend a more careful approach.

1. Run a small test crawl to discover the website’s basic structure.

Websites that have been around for years often contain URLs with UTM tags, tracking IDs, or URLs generated automatically by the CMS. The test crawl helps you detect these URLs. You can then skip them in the full crawl, but only if you know for sure that they are blocked by the robots.txt file and closed from indexing.

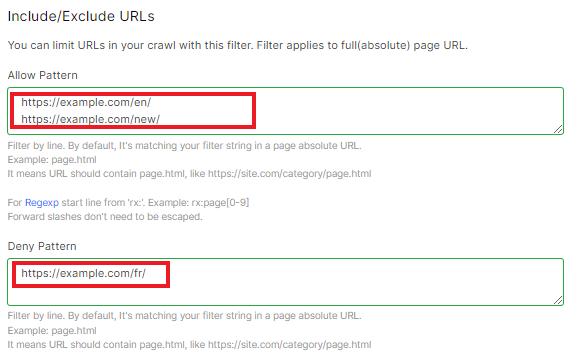

2. Use the Include/Exclude URLs option to exclude URLs with UTM tags, CMS-generated URLs, and the other addresses described above. Again, make sure that search engines cannot crawl these URLs.

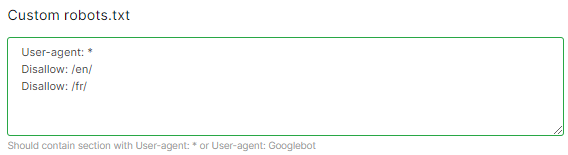
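Before starting the full crawl, you can check which URLs your exclude rules would remove by testing them against URLs from the test crawl. The minimal Python sketch below assumes the rules are regular expressions; the patterns and URLs are hypothetical, and the exact pattern syntax accepted by the Include/Exclude URLs option may differ.

```python
import re

# Hypothetical exclude patterns; replace them with the patterns
# you found in your own test crawl.
EXCLUDE_PATTERNS = [
    re.compile(r"[?&]utm_[a-z]+="),      # UTM tracking tags
    re.compile(r"[?&](sessionid|sid)="),  # tracking/session IDs
    re.compile(r"/print/"),               # CMS-generated print versions
]


def is_excluded(url: str) -> bool:
    """Return True if the URL matches any exclude pattern."""
    return any(pattern.search(url) for pattern in EXCLUDE_PATTERNS)


# Hypothetical URLs exported from a small test crawl.
test_crawl_urls = [
    "https://example.com/catalog/shoes",
    "https://example.com/catalog/shoes?utm_source=newsletter",
    "https://example.com/blog/post-1?sid=42",
    "https://example.com/print/blog/post-1",
]

to_crawl = [url for url in test_crawl_urls if not is_excluded(url)]
print(to_crawl)  # ['https://example.com/catalog/shoes']
```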

3. If you are not sure you can configure the Allow/Deny patterns correctly, use a custom robots.txt file instead. Copy your current robots.txt file, add the required rules, and enter the result as a custom robots.txt in Advanced settings.

Be sure to run a test crawl of 2,000-3,000 URLs to check that your rules work as intended.

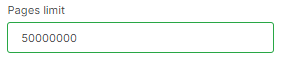
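You can also sanity-check a custom robots.txt locally before the test crawl. The sketch below uses Python's standard `urllib.robotparser` module; the rules and URLs are hypothetical. Note that this parser uses simple prefix matching and does not support the `*` and `$` wildcards found in many robots.txt files, so results for wildcard rules may differ from how JetOctopus or search engines interpret them.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical custom robots.txt: your current file plus the extra rules.
custom_robots_txt = """\
User-agent: *
Disallow: /cart
Disallow: /search
Disallow: /print/
"""

parser = RobotFileParser()
parser.parse(custom_robots_txt.splitlines())
parser.modified()  # record the rules as loaded so can_fetch() evaluates them

# Hypothetical URLs taken from a test crawl.
sample_urls = [
    "https://example.com/catalog/shoes",
    "https://example.com/search?q=shoes",
    "https://example.com/print/catalog/shoes",
]

results = {url: parser.can_fetch("*", url) for url in sample_urls}
for url, allowed in results.items():
    print("ALLOW" if allowed else "BLOCK", url)
```

This prints ALLOW for the catalog URL and BLOCK for the search and print URLs.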

4. Use specific crawl settings, such as the page limit. We recommend keeping a reserve of up to 10% of your limit so that you can later recrawl pages that returned a 5xx response code or were unavailable during the crawl.

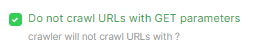
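As a worked example of the 10% reserve: if your plan allows a hypothetical 1,000,000 pages, setting the page limit to 900,000 leaves 100,000 pages for recrawling. The helper below is a hypothetical illustration of that arithmetic, using integer math to avoid floating-point rounding.

```python
def split_page_limit(total_limit: int, reserve_percent: int = 10):
    """Split a page limit into a main-crawl budget and a recrawl reserve."""
    reserve = total_limit * reserve_percent // 100
    return total_limit - reserve, reserve


main_crawl, recrawl_reserve = split_page_limit(1_000_000)
print(main_crawl, recrawl_reserve)  # 900000 100000
```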

You can also enable the “Do not crawl URLs with GET parameters” option when configuring the crawl. With this option, our crawler skips any URL that contains “?”. However, use it only if you know for sure that URLs with GET parameters are blocked from crawling and indexing for search engines.
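Before enabling this option, it can help to see how many URLs from a test crawl contain “?” and which parameters they use, so you can verify that each parameter is blocked for search engines. A minimal Python sketch with hypothetical URLs:

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit

# Hypothetical URLs exported from a small test crawl.
test_crawl_urls = [
    "https://example.com/catalog/shoes",
    "https://example.com/catalog/shoes?color=red",
    "https://example.com/catalog/shoes?color=red&sort=price",
    "https://example.com/blog/post-1",
]

# URLs the option would skip, because they contain "?".
with_params = [url for url in test_crawl_urls if "?" in url]

# Count parameter names so you can check that each one is blocked.
param_names = Counter(
    name
    for url in with_params
    for name, _ in parse_qsl(urlsplit(url).query, keep_blank_values=True)
)

print(f"{len(with_params)} of {len(test_crawl_urls)} URLs would be skipped")
print(param_names.most_common())  # [('color', 2), ('sort', 1)]
```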

The same caution applies to the setting for crawling links with rel="nofollow". This checkbox is enabled by default in every crawl; do not disable it unless you are sure your rel="nofollow" attributes are set correctly. Very often, links with rel="nofollow" in the code point to indexable pages. If the checkbox is disabled, JetOctopus will not follow these links and may miss useful pages. Search engines may not follow rel="nofollow" links either, so they may also fail to discover internal links to useful pages. Identifying such problems is one of the tasks of a crawl.
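To review your rel="nofollow" structure, you can list the internal links marked nofollow on a page and check whether they point to pages that should be indexable. A minimal sketch using Python's standard `html.parser`; the class name, HTML, and URLs are hypothetical, and a real audit would run it over many crawled pages.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class NofollowLinkFinder(HTMLParser):
    """Collect internal <a href> links that carry rel="nofollow"."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.host = urlsplit(page_url).hostname
        self.nofollow_internal = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        # rel can hold several space-separated values, e.g. "nofollow sponsored".
        rel_values = (attributes.get("rel") or "").lower().split()
        if href and "nofollow" in rel_values:
            absolute = urljoin(self.page_url, href)
            if urlsplit(absolute).hostname == self.host:
                self.nofollow_internal.append(absolute)


html = """
<a href="/catalog/shoes" rel="nofollow">Shoes</a>
<a href="/catalog/bags">Bags</a>
<a href="https://partner.example.org/" rel="nofollow sponsored">Partner</a>
"""

finder = NofollowLinkFinder("https://example.com/")
finder.feed(html)
finder.close()
print(finder.nofollow_internal)  # ['https://example.com/catalog/shoes']
```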

Life hacks for crawling large websites

1. To crawl only a subfolder, use the Include/Exclude URLs function. If you just enter the subfolder in the start URL field, the crawl will still cover the entire website.

To learn why, read the article “How does JetOctopus crawler work?”. In short, the JetOctopus crawler analyzes the HTML code of the start page and collects all links in <a href=""> tags. The subfolder page will almost certainly contain a link to the home page, so the crawler follows it and then crawls every other URL it finds in the home page code.
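The effect can be illustrated with a toy breadth-first crawl over a hypothetical link graph: starting from a subfolder page, the crawler reaches the home page and from there the whole site, unless an include rule keeps it inside the subfolder. This is a simplified model for illustration, not JetOctopus's actual crawler; `LINKS`, `crawl`, and `include_prefix` are hypothetical names.

```python
from collections import deque

# Hypothetical link graph: page -> links found in its <a href> tags.
LINKS = {
    "/blog/": ["/", "/blog/post-1"],
    "/blog/post-1": ["/blog/"],
    "/": ["/blog/", "/catalog/", "/about"],
    "/catalog/": ["/", "/catalog/shoes"],
    "/catalog/shoes": ["/catalog/"],
    "/about": ["/"],
}


def crawl(start, include_prefix=None):
    """Breadth-first crawl that follows links, optionally limited to a prefix."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for link in LINKS.get(page, []):
            if include_prefix and not link.startswith(include_prefix):
                continue  # the include rule drops links outside the subfolder
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return seen


print(sorted(crawl("/blog/")))
# ['/', '/about', '/blog/', '/blog/post-1', '/catalog/', '/catalog/shoes']
print(sorted(crawl("/blog/", include_prefix="/blog/")))
# ['/blog/', '/blog/post-1']
```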

2. To avoid crawling subdomains, deactivate the “Crawl Subdomains” checkbox. Then only the domain or subdomain you specified in the start URL field will be crawled, even if the crawler finds internal links to other subdomains.
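The scoping rule can be sketched as a host comparison. This is an assumption about how the checkbox behaves, based only on the description above; the function, its parameters, and the URLs are hypothetical, and the root domain is passed in explicitly because deriving it reliably requires the Public Suffix List.

```python
from urllib.parse import urlsplit


def in_scope(url, start_url, crawl_subdomains, root_domain):
    """Decide whether a discovered link belongs to the crawl (hypothetical rule)."""
    host = (urlsplit(url).hostname or "").lower()
    if not crawl_subdomains:
        # Only the exact host from the start URL is crawled.
        return host == (urlsplit(start_url).hostname or "").lower()
    # Any subdomain of the (explicitly supplied) root domain is crawled.
    return host == root_domain or host.endswith("." + root_domain)


start_url = "https://www.example.com/"
found_links = [
    "https://www.example.com/catalog",
    "https://blog.example.com/post",
    "https://example.org/",
]

print([u for u in found_links if in_scope(u, start_url, False, "example.com")])
# ['https://www.example.com/catalog']
print([u for u in found_links if in_scope(u, start_url, True, "example.com")])
# ['https://www.example.com/catalog', 'https://blog.example.com/post']
```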

Keep in mind that a full crawl takes more time than a partial one. However, all processes run in the cloud on the JetOctopus side, so the crawl does not use the resources of your computer or server.

Remember that crawling puts load on the site, so choose a number of threads that will not overload your website.
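The number of threads caps how many requests reach your server at the same time. The Python sketch below illustrates that principle with a thread pool and a simulated request; it sends no real traffic and is not how JetOctopus is implemented. `MAX_THREADS`, `fetch`, and the URLs are hypothetical.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_THREADS = 4  # hypothetical value; pick one your server handles comfortably

active = 0  # requests currently in flight
peak = 0    # highest number of simultaneous requests observed
lock = threading.Lock()


def fetch(url):
    """Stand-in for an HTTP request: sleeps instead of contacting the site."""
    global active, peak
    with lock:
        active += 1
        peak = max(peak, active)
    time.sleep(0.01)
    with lock:
        active -= 1
    return url


urls = [f"https://example.com/page-{i}" for i in range(20)]
with ThreadPoolExecutor(max_workers=MAX_THREADS) as pool:
    results = list(pool.map(fetch, urls))

print(f"fetched {len(results)} pages, peak concurrency {peak}")
```

However many URLs are queued, the pool never runs more than `MAX_THREADS` requests at once, which is what keeps the load on the server bounded.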