Image analysis issues: why the crawler didn’t find all images

Regularly checking the images on your website is important: with the right context and correct settings, they can bring organic traffic. Images can also affect your website’s SERP ranking if they are accessible and have appropriate attributes, such as alt text that describes the image’s content. However, when you crawl a website with JetOctopus, you may sometimes not see all images in the results. In this article, we discuss the possible reasons and how to fix them.

Images are loaded by JavaScript

If your website relies on JavaScript, some images may be added to the page only after JavaScript runs. There are two ways to check whether this is the case.

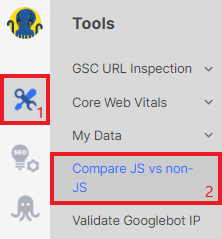

1. Use the JetOctopus tool that compares the JavaScript and non-JavaScript versions of a page. Go to the “Tools” section and select “Compare JS vs non-JS”.

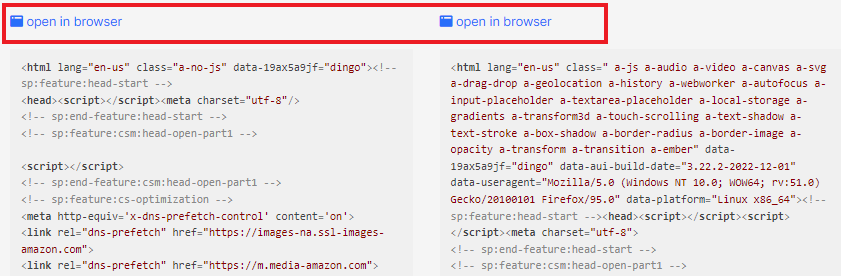

Enter the URL you want to check, then click “Open in browser” to see the page as it looks without JavaScript. If the images are missing from this version, configure a JavaScript crawl so that JetOctopus can find all images.

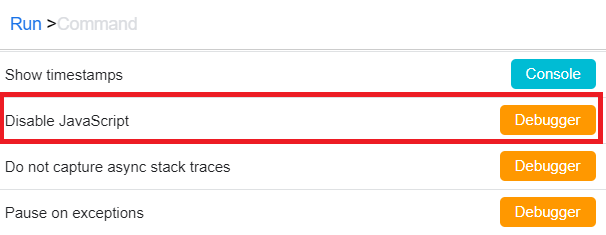

2. Check using Chrome DevTools. Open Chrome DevTools (F12, or Fn + F12 on some laptops; Command + Option + C on Mac). Then open the Command Menu (Ctrl + Shift + P, or Command + Shift + P on Mac), type “Disable JavaScript” and select it.

In the address bar of the same tab, enter the URL you want to check and press Enter. The page loads without executing JavaScript, so the HTML you see is the static code that your web server sends to the browser. Keep DevTools open: JavaScript stays disabled in the tab only while DevTools is open.

In the “Elements” tab of Chrome DevTools, check the images on the page. Are they still present without JavaScript, or are they missing? Does the page look different? If images are missing or the page looks different, run a JavaScript crawl.
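The same check can be scripted. The sketch below is a minimal, hypothetical Python example, not part of JetOctopus: it downloads the raw HTML that the server sends (no JavaScript is executed), collects the `src` of every `<img>` tag, and reports which images from a rendered-page list you supply are absent. The names `ImgCollector`, `fetch_static_html` and `images_missing_without_js` are illustrative, and the sketch assumes you already have the image URLs of the rendered page, for example copied from DevTools with JavaScript enabled. Images that appear only in a `data-src` attribute are collected separately, because that pattern often means a JavaScript lazy loader fills in the real `src` later.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class ImgCollector(HTMLParser):
    """Collects <img> URLs from static HTML, i.e. what a non-JS crawler sees."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.src: set[str] = set()       # absolute URLs found in src attributes
        self.data_src: set[str] = set()  # URLs in data-src (often swapped in by JS lazy loaders)

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing <img ... /> via the default handle_startendtag.
        if tag != "img":
            return
        a = dict(attrs)
        if a.get("src"):
            self.src.add(urljoin(self.base_url, a["src"]))
        if a.get("data-src"):
            self.data_src.add(urljoin(self.base_url, a["data-src"]))


def fetch_static_html(url: str) -> str:
    """Download the raw HTML the server returns, without executing any JavaScript."""
    req = Request(url, headers={"User-Agent": "image-check-sketch/0.1"})  # hypothetical UA string
    with urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def images_missing_without_js(static_html: str, rendered_images: set[str], base_url: str) -> set[str]:
    """Return rendered-page images that have no matching src in the static HTML."""
    parser = ImgCollector(base_url)
    parser.feed(static_html)
    return rendered_images - parser.src
```

For example, with the static HTML `<img src="/a.png"><img data-src="/b.png" src="placeholder.gif">` and a rendered list containing `/a.png` and `/b.png` on the same site, only `/b.png` is reported as missing without JavaScript. In practice you would pass the output of `fetch_static_html(url)` as `static_html`.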

Images are blocked by robots.txt

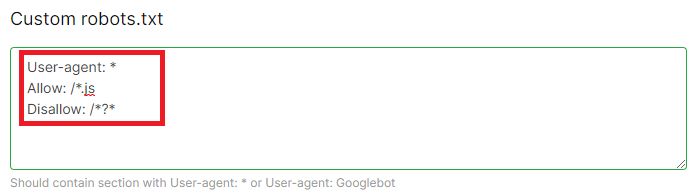

If images are blocked by your robots.txt file, JetOctopus cannot access them. Check your robots.txt file and find the rule that blocks image crawling. Then, before starting a new crawl, paste a copy of your robots.txt without that rule into the “Custom robots.txt” field in “Advanced Settings”.

For example, your robots.txt contains the following rules:

User-agent: *

Allow: /*.js

Disallow: /*.png

Disallow: /*?*

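To crawl all images, paste the same rules without the Disallow: /*.png line into the “Custom robots.txt” field. Note that Disallow: /*?* blocks every URL that contains a query string, so if your image URLs have parameters (for example, /logo.jpg?w=200), this rule blocks them as well.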

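However, if your robots.txt blocks JetOctopus from accessing images, it most likely blocks Googlebot too: rules under User-agent: * apply to every crawler that does not have its own, more specific group. So consider allowing search engines to crawl your images.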
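To check whether a specific image URL is blocked before starting a crawl, you can test it against your rules. The sketch below is a minimal Python example under stated assumptions: it reads only Allow and Disallow lines and assumes a single User-agent: * group, supports the `*` wildcard and the `$` end anchor, and resolves conflicts the way Google documents it (the longest matching rule wins, and Allow wins a tie). It implements the matching directly instead of relying on a library, and all function names are hypothetical. `without_rule` produces the text you could paste into the “Custom robots.txt” field.

```python
import re


def rule_to_regex(path: str) -> re.Pattern:
    """Translate a robots.txt path pattern ('*' wildcard, '$' end anchor) into a regex."""
    anchored = path.endswith("$")
    if anchored:
        path = path[:-1]
    body = ".*".join(re.escape(part) for part in path.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def parse_rules(robots_txt: str) -> list[tuple[str, str]]:
    """Collect (directive, pattern) pairs; sketch assumes one 'User-agent: *' group."""
    rules = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        key, value = (s.strip() for s in line.split(":", 1))
        if key.lower() in ("allow", "disallow"):
            rules.append((key.lower(), value))
    return rules


def is_allowed(rules: list[tuple[str, str]], url_path: str) -> bool:
    """Longest matching pattern wins; on a tie, Allow beats Disallow."""
    best_len, allowed = -1, True  # no matching rule means the path is allowed
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty Disallow blocks nothing
        if rule_to_regex(pattern).match(url_path):  # match() anchors at the start of the path
            is_allow = directive == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


def without_rule(robots_txt: str, rule: str) -> str:
    """Return robots.txt with one exact rule line removed, e.g. for a custom robots.txt."""
    return "\n".join(line for line in robots_txt.splitlines() if line.strip() != rule)
```

With the example rules above, /images/logo.png is reported as blocked by Disallow: /*.png, and /images/logo.jpg?w=200 is blocked by Disallow: /*?*. After `without_rule(robots, "Disallow: /*.png")`, the PNG path is allowed, while parameterized URLs are still blocked.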

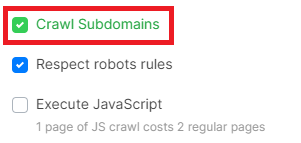

Images are hosted on a subdomain, and subdomain crawling is disabled

Images are often hosted on a separate subdomain or server to reduce the load on the main web server and speed up page delivery. By default, JetOctopus does not crawl subdomains. To let JetOctopus crawl them, select the “Crawl Subdomains” checkbox.

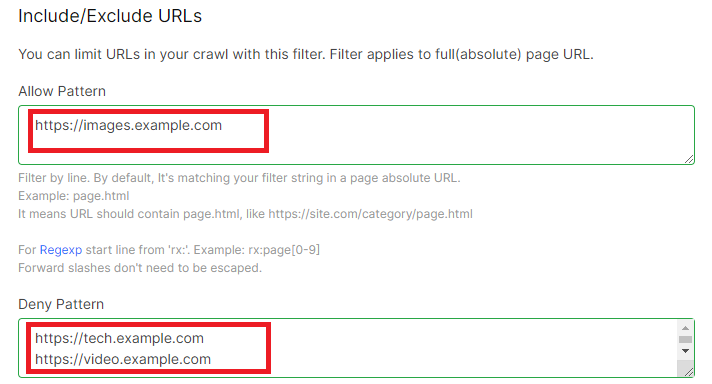

To crawl only the main domain and the subdomains that host images, add all other subdomains to “Deny Pattern” in “Include/Exclude URLs”.
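Before enabling “Crawl Subdomains”, it can help to see which hosts actually serve your images. The minimal Python sketch below (a hypothetical helper, not a JetOctopus feature) takes a list of image URLs, for example exported from a crawl or copied from a page, and sorts their hosts into the main domain, its subdomains, and unrelated domains. It assumes that `www.` belongs to the main domain. Any of your subdomains that do not appear in the "subdomain" group do not serve these images and are candidates for “Deny Pattern”.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_image_hosts(image_urls: list[str], main_domain: str) -> dict[str, set[str]]:
    """Sort image hosts into 'main', 'subdomain' and 'other' groups (www. counts as main)."""
    groups: dict[str, set[str]] = defaultdict(set)
    main = main_domain.lower().removeprefix("www.")
    for url in image_urls:
        host = (urlsplit(url).hostname or "").lower()
        if host in (main, "www." + main):
            groups["main"].add(host)
        elif host.endswith("." + main):  # the leading dot avoids matching e.g. notexample.com
            groups["subdomain"].add(host)
        else:
            groups["other"].add(host)
    return dict(groups)
```

For a site on example.com, an image at https://img.example.com/b.png lands in the "subdomain" group, while an image on a third-party domain lands in "other".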

More information on image analysis:

- How to check all images on your website
- How to do a deep audit of all internal and external image links
- How to find lazy load images and how to analyze it
- How to set up image crawling
- Why are there missing images during crawling?