Page Speed: How It Impacts Your SEO and How to Accelerate It

Google has included site speed (and, as a result, page speed) as a signal in its search ranking algorithms. There is no doubt that speeding up websites is crucial.

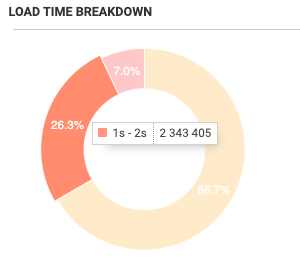

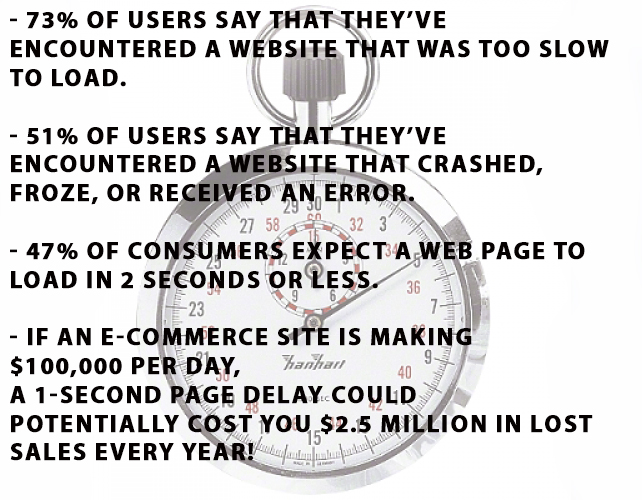

Surveys show that people really care about the speed of a page. Let’s look at the statistics from Neil Patel’s blog:

But faster webpages don’t just improve UX. Improving site speed also reduces operating costs. For instance, in one case study, a 5-second speedup (from ~7 seconds to ~2 seconds) resulted in a 25% increase in page views, a 7–12% increase in revenue, and a 50% reduction in hardware. This last point shows the win-win of performance improvements: revenue goes up while operating costs go down.

The JetOctopus team encourages you to start looking at your webpages’ speed, not only to improve your ranking in Google but also to improve user experience and increase profitability.

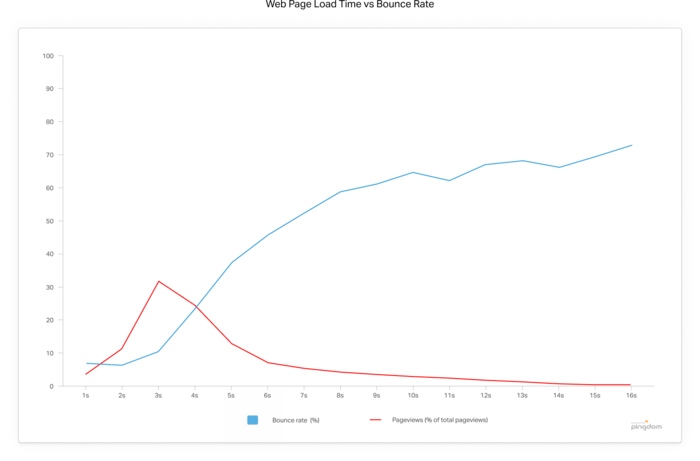

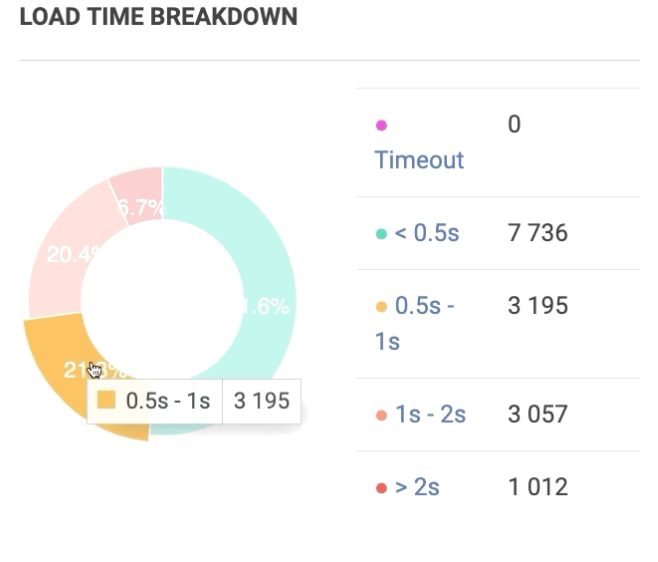

How Fast Should a Webpage Load

To understand what load time is “ideal”, let’s look at the real numbers. Studies show that the average load time for a webpage is 3.21 seconds. Another experiment shows that the average bounce rate for pages that load within 2 seconds is 9%. Once page load time passes 3 seconds, the bounce rate soars, reaching 38% by the time it hits 5 seconds!

(Source: Pingdom blog)

Based on our experience in technical SEO, the JetOctopus crawler team believes that the ideal load time is 0.5–1 second. If your pages load faster than the industry-standard load times for 2019, you’re likely ahead of most of your competitors.

Here are 6 actionable steps to make your website load faster.

6 ways to speed up your site

1. Minimize HTTP requests for the different parts of the page, like scripts, images, and CSS.

Reducing the number of components that a page requires proportionally reduces the number of HTTP requests it has to make. This doesn’t mean omitting content; it just means structuring it more efficiently.

- Remove useless elements from your webpages.

- Where possible, use CSS instead of images.

- Combine multiple style sheets.

- Reduce the number of scripts.
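Before trimming requests, it helps to know how many a page actually makes. The sketch below lists the external scripts, stylesheets, and images a page references, using only Python’s standard-library `html.parser`. The class and function names are hypothetical, and the sketch ignores resources pulled in from CSS (fonts, background images) or injected by JavaScript, so treat its count as a lower bound.

```python
from __future__ import annotations

from html.parser import HTMLParser


class ResourceCounter(HTMLParser):
    """Collects the external scripts, stylesheets, and images a page references."""

    def __init__(self) -> None:
        super().__init__()
        self.resources: list[tuple[str, str]] = []

    def handle_starttag(self, tag: str, attrs: list[tuple[str, str | None]]) -> None:
        attributes = dict(attrs)
        # rel can hold several space-separated tokens, e.g. "stylesheet preload".
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "script" and attributes.get("src"):
            self.resources.append(("script", attributes["src"]))
        elif tag == "link" and "stylesheet" in rel and attributes.get("href"):
            self.resources.append(("css", attributes["href"]))
        elif tag == "img" and attributes.get("src"):
            self.resources.append(("image", attributes["src"]))


def count_requests(html: str) -> dict[str, int]:
    """Count external resources by kind; inline <script>/<style> blocks add no request."""
    parser = ResourceCounter()
    parser.feed(html)
    parser.close()
    counts: dict[str, int] = {}
    for kind, _url in parser.resources:
        counts[kind] = counts.get(kind, 0) + 1
    return counts


page = """
<html><head>
  <link rel="stylesheet" href="base.css">
  <link rel="stylesheet" href="theme.css">
  <script src="app.js"></script>
</head><body>
  <img src="logo.png"><img src="hero.jpg">
</body></html>
"""
print(count_requests(page))  # {'css': 2, 'script': 1, 'image': 2}
```

In this example, the two stylesheets are an obvious candidate for merging into one file.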

2. Reduce image size by compressing images, and combine common files to reduce requests

Every kilobyte matters: even if you think optimizing an image will save only 10% of its size, go for it! You’ll be grateful later. Check the table in the Google Developers guides to learn the characteristics of each image format.

Image compression apps remove hidden data that isn’t needed from the image file, such as additional color profiles and metadata (for example, the geolocation where a photo was taken). These tools provide a quick and easy way to reduce file size without losing image quality.
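To make the idea of “hidden data” concrete: a PNG file is a sequence of chunks, and some ancillary chunks carry only metadata (text comments, EXIF data that may include GPS coordinates, timestamps). The sketch below assumes PNG input and uses only Python’s standard library; it drops those chunks and copies everything else byte for byte, so the pixels are unchanged. Real compression apps also re-encode pixel data, which this does not attempt. The chunk list and function names are our own choices for illustration.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# Ancillary chunks that hold metadata rather than pixels: text comments, EXIF
# (which may include GPS coordinates), and the last-modified timestamp.
# iCCP (the color profile) is deliberately kept: dropping it can shift colors.
METADATA_CHUNKS = {b"tEXt", b"zTXt", b"iTXt", b"eXIf", b"tIME"}


def strip_png_metadata(data: bytes) -> bytes:
    """Return the PNG with metadata chunks removed; pixel data (IDAT) is copied untouched."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    out = bytearray(PNG_SIGNATURE)
    pos = len(PNG_SIGNATURE)
    while pos < len(data):
        # Chunk layout: 4-byte big-endian length, 4-byte type, payload, 4-byte CRC.
        (length,) = struct.unpack(">I", data[pos:pos + 4])
        chunk_type = data[pos + 4:pos + 8]
        end = pos + 12 + length
        if chunk_type not in METADATA_CHUNKS:
            out += data[pos:end]  # kept chunks are copied verbatim, CRC included
        pos = end
    return bytes(out)


def make_chunk(chunk_type: bytes, payload: bytes) -> bytes:
    """Build one PNG chunk; used below only to assemble a tiny demo image."""
    crc = zlib.crc32(chunk_type + payload) & 0xFFFFFFFF
    return struct.pack(">I", len(payload)) + chunk_type + payload + struct.pack(">I", crc)


# A 1x1 grayscale PNG that carries a text comment.
ihdr = make_chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0))
comment = make_chunk(b"tEXt", b"Comment\x00Taken in Florence")
idat = make_chunk(b"IDAT", zlib.compress(b"\x00\x00"))  # filter byte + one pixel
iend = make_chunk(b"IEND", b"")
original = PNG_SIGNATURE + ihdr + comment + idat + iend
cleaned = strip_png_metadata(original)
print(len(original) - len(cleaned), "bytes of metadata removed")
```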

3. Have both CSS and JavaScript load simultaneously

Rather than forcing the browser to retrieve multiple CSS or JavaScript files, try combining your CSS files into one larger file (and do the same for JS). This can be challenging if your stylesheets and scripts vary from page to page, but merging them will help your load times in the long run.
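Merging files can be as simple as concatenating them in a fixed order as part of your build step. The sketch below assumes Python 3 and hypothetical file names (`base.css`, `theme.css`, `site.css`); production build tools usually also minify the result, which is out of scope here.

```python
from __future__ import annotations

import tempfile
from pathlib import Path


def bundle_files(sources: list[Path], output: Path) -> Path:
    """Concatenate CSS (or JS) files into one bundle, preserving the given order.

    Order matters: later CSS rules override earlier ones, and a JS file may
    depend on code defined in files loaded before it.
    """
    # A newline is enough between CSS files; for JS, a semicolon keeps a file
    # that ends without one from running into the next file.
    separator = ";\n" if output.suffix == ".js" else "\n"
    parts = []
    for source in sources:
        # /* ... */ comments are valid in both CSS and JS and mark each file's origin.
        parts.append(f"/* --- {source.name} --- */\n{source.read_text(encoding='utf-8')}")
    output.write_text(separator.join(parts), encoding="utf-8")
    return output


with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    (folder / "base.css").write_text("body { margin: 0; }", encoding="utf-8")
    (folder / "theme.css").write_text("body { color: #222; }", encoding="utf-8")
    bundle = bundle_files([folder / "base.css", folder / "theme.css"], folder / "site.css")
    bundled_css = bundle.read_text(encoding="utf-8")
print(bundled_css)
```

The page then needs a single `<link>` to `site.css` instead of one per source file.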

Google’s webmasters warn not to serve priority content in the head section, as this can delay rendering, meaning visitors will have to wait longer before receiving any information.

(Source: English Google Webmaster Central office-hours hangout in person)

An optimized page load (or, more precisely, render) happens step by step, letting users see content gradually until the page has fully loaded. To save time for the other actionable steps, we won’t go into detail here; instead, we link you to the full “Running Your Code at the Right Time” guide.

The basic tips for delaying JavaScript loading are:

- Place script references below the DOM content, directly above the closing </body> tag.

- Avoid needlessly complicated code that listens for the DOMContentLoaded or load events (unless you are creating a library that other people will use).

- Mark your script references with the HTML script async attribute. For finer control over when your code runs, refer to a third-party library such as require.js.
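To find which scripts still block rendering, you can scan the head section for external scripts that have neither async nor defer (defer is the related standard HTML attribute: the script downloads without blocking and runs in document order after parsing). The sketch below uses Python’s `html.parser` and assumes markup with an explicit head element; the class and function names are hypothetical.

```python
from __future__ import annotations

from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Collects external scripts in <head> that have neither async nor defer."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.blocking: list[str] = []

    def handle_starttag(self, tag: str, attrs: list[tuple[str, str | None]]) -> None:
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head:
            attributes = dict(attrs)
            src = attributes.get("src")
            # Boolean attributes such as async appear as keys with a value of None.
            if src and "async" not in attributes and "defer" not in attributes:
                self.blocking.append(src)

    def handle_endtag(self, tag: str) -> None:
        if tag == "head":
            self.in_head = False


def find_blocking_scripts(html: str) -> list[str]:
    finder = BlockingScriptFinder()
    finder.feed(html)
    finder.close()
    return finder.blocking


page = """
<html><head>
  <script src="legacy-widget.js"></script>
  <script async src="analytics.js"></script>
  <script defer src="menu.js"></script>
</head><body>
  <p>Content</p>
  <script src="comments.js"></script>
</body></html>
"""
print(find_blocking_scripts(page))  # candidates to move to the end of <body> or mark async
```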

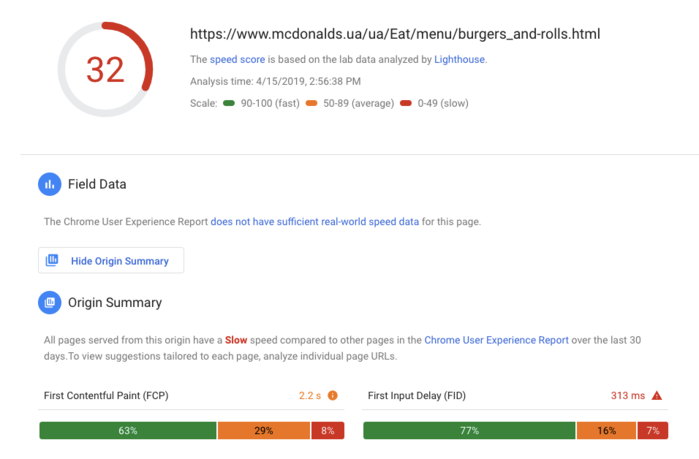

- Improve server response time (SRT)

SRT is the amount of time it takes for the web browser to receive a response from your server. According to Google’s PageSpeed Insights, your server response time should be under 200 ms. Look at the PageSpeed Insights results for McDonald’s webpages:

Google suggests actionable steps to speed up the webpage:

PageSpeed Insights is a useful tool for small websites, where you can check each page manually. But if you are optimizing a medium or large website, don’t evaluate your site’s speed based on insights from just a few pages.
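Once you move beyond a handful of URLs, it helps to measure response times for a larger sample of pages and look at the distribution. The sketch below is an illustration under several assumptions, not a replacement for PageSpeed Insights: it uses plain HTTP via Python’s `http.client` (swap in `HTTPSConnection` and port 443 for HTTPS), starts the timer only after the TCP connection is open, and compares the results with the 200 ms target. The function names and the sample figures are hypothetical.

```python
from __future__ import annotations

import http.client
import statistics
import time

THRESHOLD_MS = 200  # PageSpeed Insights target for server response time


def response_time_ms(host: str, path: str = "/", port: int = 80) -> float:
    """Milliseconds from sending a GET request until the response headers arrive.

    The TCP connection is opened before the timer starts, so the figure reflects
    server processing time rather than connection setup.
    """
    conn = http.client.HTTPConnection(host, port, timeout=10)
    try:
        conn.connect()
        start = time.perf_counter()
        conn.request("GET", path)
        response = conn.getresponse()  # returns once the status line and headers are read
        elapsed_ms = (time.perf_counter() - start) * 1000
        response.read()  # drain the body before closing
        return elapsed_ms
    finally:
        conn.close()


def summarize(samples_ms: dict[str, float], threshold_ms: float = THRESHOLD_MS) -> dict[str, object]:
    """Summarize response times for many pages instead of judging the site by one URL."""
    if not samples_ms:
        raise ValueError("no samples")
    slow = sorted(path for path, ms in samples_ms.items() if ms > threshold_ms)
    return {
        "pages": len(samples_ms),
        "median_ms": statistics.median(samples_ms.values()),
        "max_ms": max(samples_ms.values()),
        "slow_pages": slow,
        "slow_share": len(slow) / len(samples_ms),
    }


# Hypothetical measurements for illustration; in practice, collect them with
# response_time_ms() for every URL in a large crawl sample.
result = summarize({"/": 140.0, "/catalog": 420.0, "/blog": 95.0, "/contact": 210.0})
print(result)
```

With these sample numbers the median is 175 ms, yet half of the pages miss the 200 ms target, which a spot check of one or two URLs could easily hide.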

Carefully evaluating your hosting needs, adjusting your web servers, reducing bloat, and optimizing your databases are ways that you can work toward better SRTs.

4. Leverage browser caching

Leveraging browser caching means specifying how long web browsers should keep images, CSS, and JS files stored locally. The user’s browser then downloads less data while navigating through your pages, which improves your website’s loading speed.

A cache mechanism operates between the server and the visitor’s browser, either on the server side or on the visitor’s computer. It can store copies of files (HTML/PHP/CSS/JS files, images, etc.) or strings of code for faster access, instead of constantly requesting them from the server. On Apache servers, you can leverage browser caching by adding a small snippet to the .htaccess file.
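On Apache, that .htaccess snippet typically sets `Expires` or `Cache-Control` headers per file type through modules such as mod_expires or mod_headers. The same idea is sketched below with Python’s built-in `http.server`, assuming a hypothetical policy: static assets are cached for one year (safe only if file names change whenever their content does), and HTML must be revalidated on every visit. The names and the extension list are our own choices.

```python
from __future__ import annotations

from http.server import SimpleHTTPRequestHandler
from pathlib import PurePosixPath
from urllib.parse import urlsplit

ONE_YEAR = 365 * 24 * 60 * 60  # seconds

# Assumed policy: long-lived caching for static assets, revalidation for everything else.
LONG_LIVED = f"public, max-age={ONE_YEAR}"
CACHE_POLICY = {
    ".css": LONG_LIVED,
    ".js": LONG_LIVED,
    ".png": LONG_LIVED,
    ".jpg": LONG_LIVED,
    ".jpeg": LONG_LIVED,
    ".webp": LONG_LIVED,
    ".svg": LONG_LIVED,
}
DEFAULT_POLICY = "no-cache"  # the browser may store it but must check with the server first


def cache_control_for(url_path: str) -> str:
    """Pick a Cache-Control value from the file extension, ignoring any query string."""
    suffix = PurePosixPath(urlsplit(url_path).path).suffix.lower()
    return CACHE_POLICY.get(suffix, DEFAULT_POLICY)


class CachingHandler(SimpleHTTPRequestHandler):
    """Static file server that adds a Cache-Control header to every response."""

    def end_headers(self) -> None:
        self.send_header("Cache-Control", cache_control_for(self.path))
        super().end_headers()


for path in ("/css/site.css?v=3", "/img/logo.PNG", "/index.html"):
    print(path, "->", cache_control_for(path))
```

To try it locally, you could serve the current directory with `ThreadingHTTPServer(("", 8000), CachingHandler).serve_forever()` (also from `http.server`) and inspect the response headers in your browser’s developer tools.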

5. Use a CDN

A content delivery network (CDN) delivers web pages based on where the user is located. Without a CDN, if your server is in Italy, a user in Florence will receive your content faster than someone in Russia: the farther the visitor is from the server, the longer the load time. A CDN caches your content on edge servers and delivers it to each visitor from a nearby one, which lets you quickly share your content with a wider audience. Here is a case study of a website in the hotel niche that achieved an 80% decrease in average load time after adding a CDN.

Caching content at the edges of the network allows faster delivery of data to your users while reducing serving costs. By the way, Google provides Cloud CDN (Cloud Content Delivery Network) to cache HTTP(S) load-balanced content close to your users.
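The “closest server wins” idea can be illustrated with plain geometry: compute the great-circle distance from the visitor to each edge location and pick the smallest. This is a simplified sketch with hypothetical edge locations; real CDNs route users via DNS or anycast and also weigh network latency and server load, not just distance.

```python
from __future__ import annotations

import math

EARTH_RADIUS_KM = 6371.0

# Hypothetical edge locations as (latitude, longitude); a real CDN publishes its own.
EDGE_SERVERS = {
    "milan": (45.46, 9.19),
    "frankfurt": (50.11, 8.68),
    "moscow": (55.76, 37.62),
}


def distance_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points via the haversine formula."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_edge(user: tuple[float, float]) -> str:
    """Name of the edge server geographically closest to the user."""
    return min(EDGE_SERVERS, key=lambda name: distance_km(user, EDGE_SERVERS[name]))


florence = (43.77, 11.26)
st_petersburg = (59.94, 30.31)
print(nearest_edge(florence), nearest_edge(st_petersburg))
```

A visitor in Florence is served from the Milan edge and one in St. Petersburg from Moscow, so neither has to fetch content from a single distant origin server.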

6. Keep plugins to a minimum

Plugins are usually the biggest culprit behind a slow site. If there are any plugins that you’re no longer using or that aren’t essential, delete them. So, how many plugins is too many? It’s more about quality than quantity.

Dan Norris, four-time best-selling author and developer of the WordPress service WP Curve, supports this statement:

Yes, plugins extend and increase the functionality. Unfortunately, some tools use databases extensively, increasing the number of queries and slowing the performance of your website. If a plugin is well-considered, this shouldn’t be an issue; however, it’s something to consider before installing a large number of them.

Wrapping up

Based on our experience analyzing 6 billion log lines and more than 400 million crawled pages, we believe that neither expert content nor modern website design will get you to the top positions in the SERPs if your pages are slow.

Search engines penalize websites that load slowly, but more importantly, so do your visitors: they stop visiting, stop engaging, and simply don’t buy. Furthermore, slow page speed means that Google can crawl fewer pages within its crawl budget, which could negatively affect your indexability. Faster-loading pages lead to a better overall website experience, and that’s why it’s time to improve your site speed and, with it, your search performance.

Original source: Milosz Krasinski
