From logs to insights: analyzing the role of 429 status codes

The occurrence of a 429 status code in server logs is not very common, but it can indicate significant issues on your website. Analyzing any non-200 response code, including 429, is crucial to avoid wasting your crawl budget. In this article, we will focus specifically on the 429 status code.

429 status code: what it is and why you should pay attention when it appears in the logs

We have previously discussed the 429 Too Many Requests status code in crawl results, where it is a rather frequent situation. During a crawl, this status code occurs when the crawler sends more requests than the web server allows: if the server has set a limit on the number of requests within a certain timeframe, exceeding that limit triggers a 429 status code. This code indicates a client error. If, on the other hand, the client overwhelms your web server with requests and nothing limits them, the result is typically a 503 Service Unavailable status code. In the former case, the web server enforces restrictions, while in the latter, the server cannot handle the client’s load.
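To make the "server enforces restrictions" case concrete, here is a minimal sketch of a request limit in Python. It assumes a fixed-window limit per client; the class name `FixedWindowLimiter` and its parameters are hypothetical and do not correspond to the settings of any particular web server. The `Retry-After` header is the optional hint that a 429 response may carry.

```python
import math
import time


class FixedWindowLimiter:
    """Hypothetical limiter: at most `limit` requests per client per window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock      # injectable so the behaviour can be tested
        self.counters = {}      # client id -> (window index, request count)

    def check(self, client_id):
        """Return (status code, extra headers) for one incoming request."""
        now = self.clock()
        current = int(now // self.window_seconds)
        index, count = self.counters.get(client_id, (current, 0))
        if index != current:
            index, count = current, 0   # a new window has started
        if count >= self.limit:
            # Limit reached: reject and hint when the next window begins.
            wait = (current + 1) * self.window_seconds - now
            return 429, {"Retry-After": str(max(1, math.ceil(wait)))}
        self.counters[client_id] = (index, count + 1)
        return 200, {}
```

With `limit=2`, the first two calls to `check()` for the same client within one window return 200 and the third returns 429. A 503, by contrast, would come from a server that has no such guard and simply runs out of capacity.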

Identifying and resolving the occurrence of the 429 status code in search engine logs is crucial. The higher the number of 429 pages, the less frequently Google will crawl your website since the content on these pages will be inaccessible to Googlebot.

But there is one extremely important point to note. A 429 status code can serve as protection against DDoS attacks. However, Google does not interpret the 429 status code as protection: Googlebot treats it as a signal of server overload and considers it a server error. Consequently, the 429 status code, like 5xx errors, reduces the frequency of Google’s website crawling.

How to find 429 pages in logs

To identify the presence of 429 pages in your search engine logs, follow these steps.

1. Navigate to the “Bot Details” section.

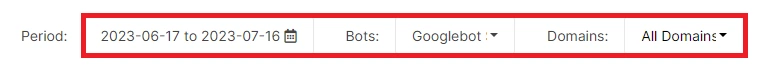

2. Set the time period for analysis and select the desired bot and domain.

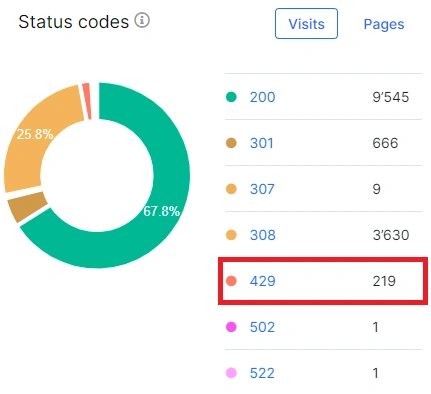

3. Access the “Status codes” chart, which displays a list and distribution of all status codes for the selected period. The 429 status codes, if present, will be shown in the chart description.

4. Click on “429” to access the detailed data table for further analysis. Evaluate the frequency of 429 status codes, identify the pages returning this code, and determine whether it occurs regularly or only during peak periods. Check whether the number of requests was temporarily restricted during promotions or discounts, or because of technical work on the site. All of this information is very important for assessing the scale of the problem.

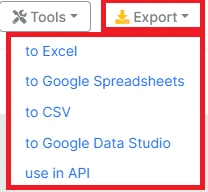

5. Export all relevant data in a format that suits your needs by clicking on the “Export” button.
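If you also have access to the raw access logs, the same questions (which pages return 429, and when) can be cross-checked with a short script. The sketch below assumes the common "combined" log format used by default in Apache and Nginx; the function name `summarize_429` is hypothetical, and the pattern will need adjusting if your server logs in a different layout.

```python
import re
from collections import Counter

# Assumed "combined" log format; adjust the pattern for other layouts.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>[^\s"]+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def summarize_429(lines, bot_token="Googlebot"):
    """Count 429 responses served to the bot, per URL path and per hour."""
    by_path, by_hour = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            continue  # skip lines that do not fit the assumed format
        if match["status"] != "429" or bot_token not in match["agent"]:
            continue
        by_path[match["path"]] += 1
        # "10/May/2023:10:00:01 +0000" -> "10/May/2023:10" (date plus hour)
        by_hour[match["time"][:14]] += 1
    return by_path, by_hour
```

Calling `summarize_429(open("access.log"))` (the file name is a placeholder) returns two counters: one showing which pages return 429 most often, the other showing whether the errors cluster in particular hours. Because the filter relies on the user agent string, which can be spoofed, treat the counts as an upper bound for real Googlebot traffic.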

What to do if you find a 429 status code in the logs

If you discover pages with a 429 response code, it is important to adjust the web server settings to avoid restricting Googlebot’s crawl rate. Your website should always be accessible to Googlebot without any server-imposed limitations. At the same time, keep the security of your website in mind: always verify Googlebot, so that your site cannot be DDoSed by traffic that merely uses the Googlebot user agent.
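One way to verify Googlebot is the reverse-then-forward DNS check that Google describes: resolve the requesting IP to a hostname, confirm the hostname belongs to a Google domain, then resolve that hostname back and confirm it returns the same IP. The Python sketch below follows that idea. The function name `is_verified_googlebot` is hypothetical, and the list of domain suffixes is an assumption based on Google's documentation, so check the current version before relying on it.

```python
import socket

# Assumed list of domains Google uses for its crawlers; verify against
# Google's current documentation.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def _reverse_lookup(ip):
    """Reverse DNS: IP address -> primary hostname."""
    return socket.gethostbyaddr(ip)[0]


def _forward_lookup(host):
    """Forward DNS: hostname -> set of IP addresses (IPv4 and IPv6)."""
    return {info[4][0] for info in socket.getaddrinfo(host, None)}


def is_verified_googlebot(ip, reverse_lookup=_reverse_lookup,
                          forward_lookup=_forward_lookup):
    """Return True only if reverse and forward DNS both point to Google."""
    try:
        host = reverse_lookup(ip).lower().rstrip(".")
        if not host.endswith(GOOGLE_SUFFIXES):
            return False  # the hostname is not under a Google domain
        return ip in forward_lookup(host)
    except OSError:
        return False  # a failed lookup counts as "not verified"
```

Only requests that pass this check would then be exempted from the request limit. DNS lookups are slow, so in practice the result per IP would normally be cached rather than computed on every request.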

Also, we highly recommend configuring alerts to receive immediate notifications whenever Googlebot encounters a 429 status code.

Being aware of the 429 status code and its impact on your website’s crawlability is essential. By effectively analyzing and addressing this code, you can ensure uninterrupted crawling by search engines and optimize your website’s performance.