How does the JetOctopus crawler work?

Understanding how the JetOctopus crawler works will help you interpret your crawl results more accurately.

Our crawler works on the same basic principle as search engine crawlers. However, JetOctopus also lets you configure many additional settings.

We want to emphasize that if your crawl results contain errors or are missing some of your website's pages, search engines are likely running into the same problems when they try to crawl your site.

More information: Why are there missing pages in the crawl results?

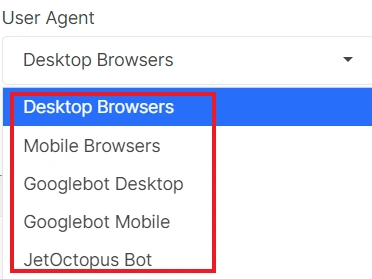

When setting up a crawl, you can select one of several user agents. All of them simulate how search engines crawl. Note that the mobile and desktop user agents use different browsers, just like the mobile and desktop versions of Googlebot.

If you select Googlebot, we emulate its behavior, including sending Googlebot's User-Agent header with each request.
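The header part of this emulation can be illustrated with a short Python sketch. It only builds (and does not send) a GET request that identifies itself with Googlebot's publicly documented desktop User-Agent string; the helper name `build_request` is hypothetical, and this is not how JetOctopus is implemented internally.

```python
from urllib.request import Request

# Googlebot's documented desktop User-Agent string (Googlebot also uses others,
# e.g. a smartphone variant).
GOOGLEBOT_DESKTOP_UA = (
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)


def build_request(url: str, user_agent: str = GOOGLEBOT_DESKTOP_UA) -> Request:
    """Build, but do not send, a GET request that identifies itself as user_agent."""
    return Request(url, headers={"User-Agent": user_agent}, method="GET")


req = build_request("https://example.com/")
# urllib normalizes header names with str.capitalize(), hence "User-agent".
print(req.get_method(), req.get_header("User-agent"))
```

A web server that serves different content by User-Agent would see this request as coming from Googlebot, which is why the selected user agent can change what ends up in your crawl results.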

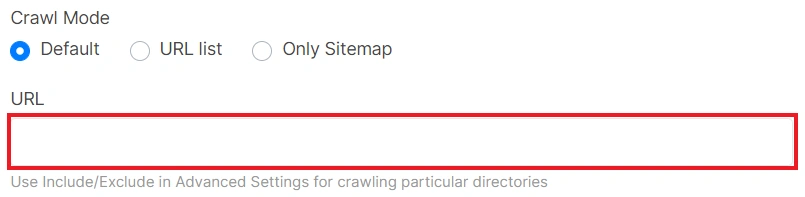

JetOctopus starts crawling from the start page. This is the URL you entered in the “URL” field in the “Basic Settings”.

If you use “URL list” mode, our crawler processes only the URLs from this list and does not follow any other URLs it finds in the page code.
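As a rough illustration, list mode amounts to a loop over a fixed set of URLs with no link discovery. In this Python sketch, `crawl_url_list` and the `fetch` callable are hypothetical stand-ins (a dictionary lookup can play the role of the network), not part of JetOctopus.

```python
from typing import Callable, Dict, Iterable, Optional


def crawl_url_list(urls: Iterable[str],
                   fetch: Callable[[str], Optional[str]]) -> Dict[str, Optional[str]]:
    """Fetch every listed URL once; links found inside the pages are never queued."""
    results: Dict[str, Optional[str]] = {}
    for url in urls:
        if url in results:
            continue  # the list may contain duplicates
        # The page body is kept for analysis, but it is not parsed for new URLs.
        results[url] = fetch(url)
    return results


pages = {
    "https://example.com/a": '<a href="https://example.com/b">B</a>',
    "https://example.com/b": "<p>B</p>",
}
result = crawl_url_list(["https://example.com/a", "https://example.com/a"], pages.get)
```

Even though page `/a` links to `/b`, only `/a` is processed, because nothing outside the supplied list is ever added.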

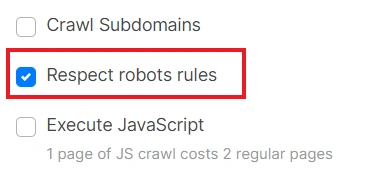

The next step for our crawler is to check the crawl configuration, in particular settings such as “Respect robots rules” and “Follow links with rel="nofollow"”.

If some pages are blocked by the robots.txt file or do not match the crawl settings, our crawler will not crawl them.

Search engines behave the same way: they do not crawl pages blocked by the robots.txt file, and they do not follow URLs marked with the corresponding attribute, such as rel="nofollow".
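Here is a minimal Python sketch of how a link extractor can honor rel="nofollow" using only the standard library's `html.parser`. The names `LinkCollector`, `followable_links`, and the `follow_nofollow` flag (loosely mirroring the “Follow links with rel="nofollow"” setting) are hypothetical and for illustration only.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class LinkCollector(HTMLParser):
    """Collect (href, rel tokens) for every <a href=...> in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[Tuple[str, List[str]]] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if href:
            # rel can hold several space-separated tokens, e.g. "noopener nofollow".
            rel = (attr_map.get("rel") or "").lower().split()
            self.links.append((href, rel))


def followable_links(html: str, follow_nofollow: bool = False) -> List[str]:
    """Return hrefs to follow; drop rel="nofollow" links unless follow_nofollow is True."""
    collector = LinkCollector()
    collector.feed(html)
    return [href for href, rel in collector.links
            if follow_nofollow or "nofollow" not in rel]


PAGE = ('<a href="/a">A</a>'
        '<a href="/b" rel="nofollow">B</a>'
        '<a href="/c" rel="noopener NoFollow">C</a>')
```

With the default settings only `/a` is followed; passing `follow_nofollow=True` returns all three links.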

In addition, before crawling starts, our crawler fetches the robots.txt file and stores it. Later, during the crawl, JetOctopus follows the disallow directives from robots.txt if the “Respect robots rules” checkbox is activated.
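The "read once, check every URL against it" pattern can be sketched with Python's standard `urllib.robotparser`. The sample robots.txt content, the helper names, and the `respect_robots` flag (standing in for the “Respect robots rules” checkbox) are assumptions for illustration; JetOctopus's own matching logic may differ in edge cases.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, parsed once before the crawl starts.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text once and keep the parsed rules for the whole crawl."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def is_allowed(rules: RobotFileParser, url: str,
               user_agent: str = "Googlebot", respect_robots: bool = True) -> bool:
    """Check a URL against the stored rules; skip the check if robots rules are ignored."""
    if not respect_robots:
        return True
    return rules.can_fetch(user_agent, url)


rules = load_rules(ROBOTS_TXT)
```

Here `https://example.com/admin/users` is rejected, while `https://example.com/blog/` is allowed; with `respect_robots=False` both would be crawled.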

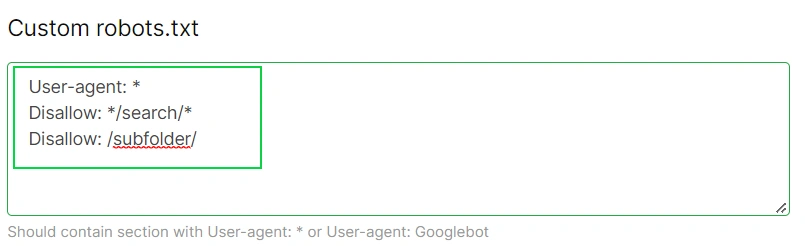

If you have added a robots.txt file in “Advanced Settings”, JetOctopus will use it.

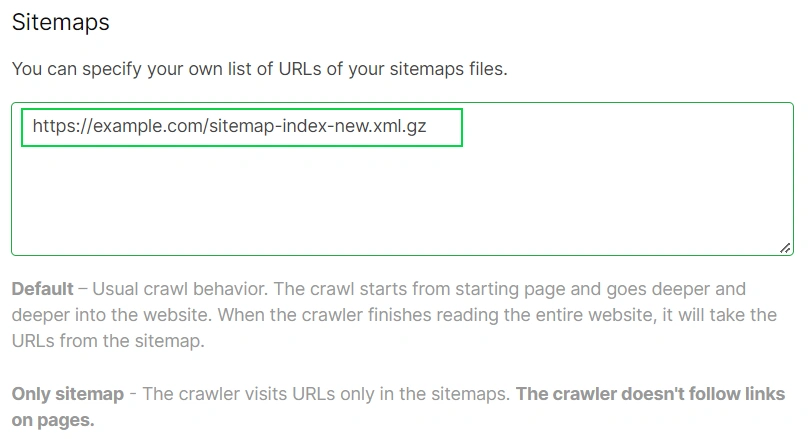

Our crawler can also use sitemaps if you configure it to do so: either activate the “Process sitemap” checkbox or add links to your sitemaps in the dedicated field.
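To show what "processing a sitemap" involves at its simplest, the Python sketch below extracts page URLs from a standard XML sitemap (`<urlset>` in the sitemaps.org namespace) using `xml.etree.ElementTree`. The function name is hypothetical, and the sketch deliberately ignores sitemap index files, gzip compression, and error handling.

```python
import xml.etree.ElementTree as ET
from typing import List

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> List[str]:
    """Return the <loc> value of every <url> entry in a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip()
            for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
            if loc.text]


SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc> https://example.com/about </loc><lastmod>2024-01-01</lastmod></url>
</urlset>"""
```

Calling `sitemap_urls(SAMPLE)` yields the two page URLs, which a crawler can then add to its queue.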

Now we want to highlight the most important principle of the JetOctopus crawler.

- By default, we process the HTML code that your site's web server returns in response to a GET request.

- Our crawler then analyzes the HTML code of the start page and looks for all links in <a href=> elements.

Next, the crawler follows the links found in those <a href=> elements and looks for <a href=> links in the code of each of those pages, and so on, until it has found all the links on your website. URLs from the sitemap are processed in the same way, after the crawler has scanned all the pages found through HTML links.
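The process described above is essentially a breadth-first traversal. The following Python sketch is a simplified model under stated assumptions, not JetOctopus's code: `fetch` is a hypothetical callable returning a page's HTML (or `None`), only `<a href>` links on the start URL's host are followed, URL fragments are dropped, and sitemap URLs are queued only once every page reachable through HTML links has been processed.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Iterable, List, Optional, Tuple
from urllib.parse import urldefrag, urljoin, urlparse


class HrefParser(HTMLParser):
    """Collects the href value of every <a> tag and nothing else."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def crawl(start_url: str,
          fetch: Callable[[str], Optional[str]],
          sitemap_urls: Iterable[str] = ()) -> List[str]:
    """Breadth-first crawl of one host; returns URLs in the order they were requested."""
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    crawled: List[str] = []
    pending_sitemap = list(sitemap_urls)

    while queue or pending_sitemap:
        if not queue:
            # All pages found through HTML links are done: queue unseen sitemap URLs.
            for url in pending_sitemap:
                if url not in seen:
                    seen.add(url)
                    queue.append(url)
            pending_sitemap = []
            continue
        url = queue.popleft()
        html = fetch(url)
        crawled.append(url)
        if html is None:
            continue  # e.g. a fetch error or a non-HTML response
        parser = HrefParser()
        parser.feed(html)
        for href in parser.hrefs:
            # Resolve relative links and drop #fragments before de-duplicating.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return crawled


SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="https://other.org/">ext</a>',
    "https://example.com/a": '<a href="/b#top">B</a> <a href="/">home</a>',
    "https://example.com/b": "<p>no links</p>",
    "https://example.com/orphan": '<a href="/orphan-child">child</a>',
    "https://example.com/orphan-child": "",
}
order = crawl("https://example.com/", SITE.get,
              ["https://example.com/orphan", "https://example.com/a"])
```

In this toy site, `/orphan` is not linked from any page, so it is reached only through the sitemap, and only after `/`, `/a`, and `/b` have been crawled.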

If the HTML code returned by your web server does not contain any URLs in <a href=> elements, JetOctopus will not be able to crawl your website.

Search engines work on the same principle. They cannot follow a URL that is not inside an <a href=> element. Nor can they find links that appear in the page code only after a user action. For example, if <a href=> elements are added to the page code only after the user clicks a menu button, search engines will not be able to discover and crawl these links.

Search engines that process JavaScript can follow links that appear after the JavaScript is executed. However, those links must still be inside <a href=> elements.
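The following Python sketch models a crawler that reads only raw HTML and extracts `<a href>` values. The page markup is hypothetical: one real link, one "link" implemented with an `onclick` handler, and one `<a>` that a script inserts only after a menu click. Only the first is discoverable, because the parser never runs JavaScript and treats the script body as plain text.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class AnchorHrefs(HTMLParser):
    """Records href values from <a> tags only, as a raw-HTML crawler would."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


PAGE = """
<a href="/visible">Crawlable link</a>
<span onclick="location.href='/onclick-only'">Not a link</span>
<button id="menu">Menu</button>
<script>
  document.getElementById("menu").addEventListener("click", () => {
    document.body.insertAdjacentHTML("beforeend", '<a href="/after-click">Hidden</a>');
  });
</script>
"""

parser = AnchorHrefs()
parser.feed(PAGE)
print(parser.hrefs)  # ['/visible']
```

Neither `/onclick-only` nor `/after-click` is found: the first is not in an `<a href>` element, and the second exists only after a click that a crawler never performs.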

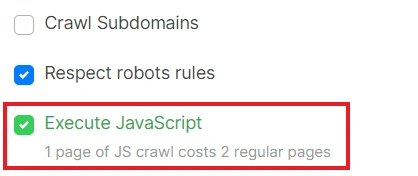

The JetOctopus crawler can also execute JavaScript and crawl URLs that appear after the JavaScript code runs. To do this, activate the “Execute JavaScript” checkbox.

With the “Execute JavaScript” checkbox activated, our crawler looks for <a href=> elements in the page code after JavaScript execution and crawls the URLs inside these elements.

After JetOctopus has found all the URLs on your website and in your sitemaps, it processes the page code in the same way search engines do and analyzes all important SEO elements, including meta tags, web server responses, load time, content, images, internal links, and more.
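To give a sense of what "analyzing SEO elements" means at the code level, here is a small Python sketch that pulls a few on-page elements from one HTML document: the title, the meta description, images with no `alt` attribute, and link targets. The class and field names are hypothetical, and a real audit such as JetOctopus's covers far more (response codes, load time, content, and so on).

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class SeoSummary(HTMLParser):
    """Collects a few on-page SEO elements from a single HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta_description: Optional[str] = None
        self.images_without_alt = 0
        self.links: List[str] = []
        self._in_title = False

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        a = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (a.get("name") or "").lower() == "description":
            self.meta_description = a.get("content")
        elif tag == "img" and "alt" not in a:
            self.images_without_alt += 1  # no alt attribute at all
        elif tag == "a" and a.get("href"):
            self.links.append(a["href"])

    def handle_endtag(self, tag: str) -> None:
        if tag == "title":
            self._in_title = False

    def handle_data(self, data: str) -> None:
        if self._in_title:
            self.title += data


DOC = ('<html><head><title>Blue Widgets</title>'
       '<meta name="description" content="All about widgets"></head>'
       '<body><img src="a.png" alt="A"><img src="b.png">'
       '<a href="/x">x</a><a href="https://example.com/y">y</a></body></html>')
summary = SeoSummary()
summary.feed(DOC)
```

For this sample page, the summary reports the title "Blue Widgets", the meta description, one image missing `alt`, and two links.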

We also recommend reading the following articles:

How to check JS website with JetOctopus

Why do JavaScript and HTML versions differ in crawl results?