How to find URLs blocked by robots.txt

We want our website's code to be clean and accessible. We also want search engines to crawl only the pages that belong in search results, because we worry about our crawl budget.

But sometimes URLs in the code are blocked by the robots.txt file, which means that search engines cannot access those pages. If these URLs also carry the meta robots tag “index, follow”, you want to see them in Google search results, but Google cannot crawl and index them because of the robots.txt file.

There is another important reason why you need to regularly check URLs blocked by robots.txt. If many pages drop out of Google’s index for no apparent reason, make sure they are not blocked by robots.txt.
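For a quick spot check outside the crawler, a short script can test a handful of URLs against a set of robots.txt rules. The sketch below uses Python's standard `urllib.robotparser`; the rules and URLs are hypothetical examples, and this parser does plain prefix matching, so it may disagree with Googlebot on rules that rely on wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules, parsed from a string so no network access is needed.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given rules disallow user_agent from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


# Hypothetical URLs to check.
urls = [
    "https://example.com/",
    "https://example.com/cart/checkout",
    "https://example.com/search?q=shoes",
]

blocked = [u for u in urls if is_blocked(ROBOTS_TXT, u)]
for u in blocked:
    print("blocked:", u)
```

To check a live site instead, `RobotFileParser` can also load the file itself with `set_url()` followed by `read()`.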

How to configure crawl correctly

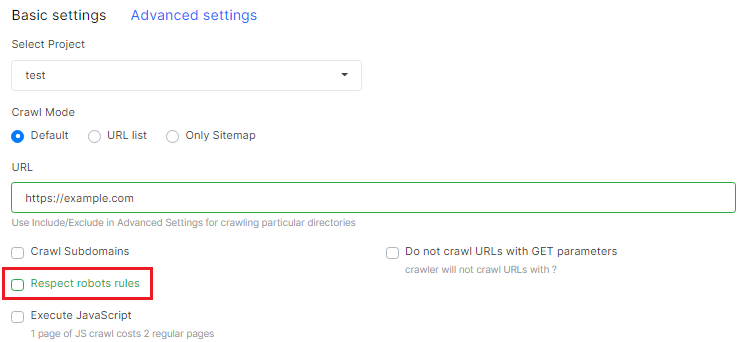

By default, JetOctopus does not crawl URLs blocked by robots.txt, so you need to change the crawl configuration. Go to “New Crawl” and deactivate the “Respect robots rules” checkbox. Then start a new crawl.

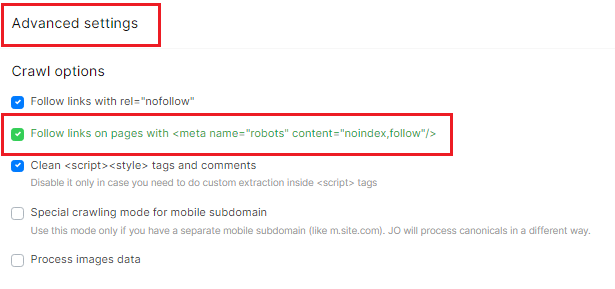

Note: if you are sure that many pages blocked by robots.txt are also closed from indexing, you need one more setting. Go to “Advanced settings” and activate the checkbox “Follow links on pages with <meta name = “robots” content = “noindex, follow” />”.

How to find URLs blocked by robots.txt

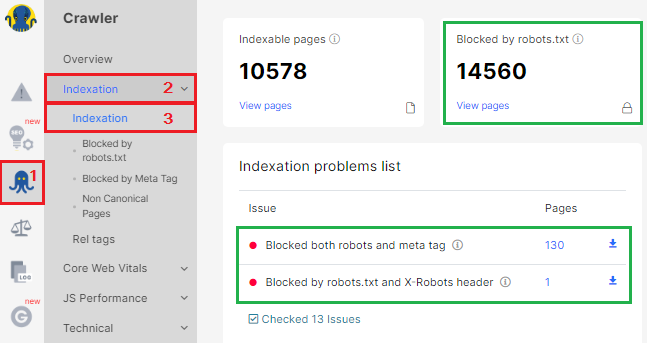

When the crawl is finished, you can analyze URLs blocked by robots.txt. Go to the “Crawler” menu and select the “Indexation” section. It contains handy charts for analysis. For example, you can see how many pages on your website are blocked by robots.txt.

The “Indexation problems list” shows which problems prevent pages from being crawled and indexed. If your website has URLs blocked by robots.txt, they will appear in this list.

Pay attention to the following points:

- Blocked both robots and meta tag – check whether this list contains pages you want to see in search results; if it does, fix the robots.txt rules;

- Blocked by robots.txt and X-Robots header – pages disallowed for Googlebot and closed from indexing; this list should contain only URLs that are not needed in search results.

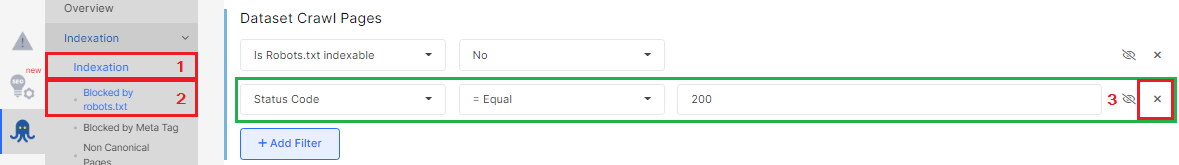

To analyze all pages blocked by robots.txt in more detail, go to the “Indexation” – “Blocked by robots.txt” report. By default, only pages with 200 response codes are shown here. If you want to see all the links found by the spider, regardless of the status code, remove the status code filter. Only URLs blocked by robots.txt will remain in the data table.

We recommend analyzing in detail the URLs that are blocked by robots.txt but have the meta robots tag “index, follow”. To do this, add the filter “Is meta tag indexable” – “Yes”. What does it mean if URLs are blocked by robots.txt but have “index, follow”? Search engines have no access to these pages and will not index them, despite the “index, follow”.

If this list contains the URLs you want to see in the search results, you need to fix the robots.txt file and allow these URLs to be scanned by search engines.
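The conflict described above (blocked by robots.txt, yet marked as indexable) can also be expressed as a small check. This is a minimal sketch using only the Python standard library, not the way JetOctopus computes its report. The helper names, rules, URL, and HTML are hypothetical, and it assumes the page HTML was fetched by a crawl that ignores robots rules, as configured earlier.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class MetaRobotsParser(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            for item in attr_map.get("content", "").split(","):
                self.directives.add(item.strip().lower())


def is_meta_indexable(html):
    """True unless a meta robots tag contains "noindex" or "none"."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return not ({"noindex", "none"} & parser.directives)


def blocked_but_indexable(robots_txt, url, html, user_agent="Googlebot"):
    """True if robots.txt blocks the URL while its meta tag allows indexing."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    blocked = not robots.can_fetch(user_agent, url)
    return blocked and is_meta_indexable(html)


# Hypothetical inputs for illustration.
rules = "User-agent: *\nDisallow: /catalog/\n"
page = '<html><head><meta name="robots" content="index, follow" /></head></html>'

conflict = blocked_but_indexable(rules, "https://example.com/catalog/shoes", page)
print(conflict)
```

A page for which this returns `True` is a candidate for a robots.txt fix, provided you actually want it in search results.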

To make the results easier to analyze, use additional filter settings, or use Regex to select the required set of URLs.
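Before pasting a pattern into a filter, it can help to try it on a few sample URLs. The snippet below is a sketch with a hypothetical pattern and hypothetical URLs, written with Python's `re` module; the regex dialect used by the filter may differ in details, so treat it as a way to sanity-check the idea rather than an exact preview.

```python
import re

# Hypothetical pattern: product pages that carry a sorting or UTM parameter.
pattern = re.compile(r"^https?://[^/]+/products/.*[?&](sort|utm_[a-z]+)=")

# Hypothetical sample URLs.
urls = [
    "https://example.com/products/shoes?sort=price",
    "https://example.com/products/shoes",
    "https://example.com/blog/post?utm_source=mail",
    "https://example.com/products/bags?color=red&utm_campaign=spring",
]

matching = [u for u in urls if pattern.search(u)]
for u in matching:
    print(u)
```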

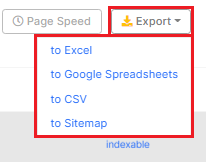

Once you have set up the filters you need, you can export the data to a Google Spreadsheet, Excel, or CSV file.
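An exported CSV file can then be summarized with a short script, for example to see which site sections contain the most blocked URLs. This is a minimal sketch: the `URL` column name and the sample rows are assumptions, so adjust them to match the header of your actual export.

```python
import csv
import io
from collections import Counter
from urllib.parse import urlsplit

# Stand-in for an exported CSV file; the column names are assumptions.
EXPORT = """\
URL,Status code
https://example.com/cart/checkout,200
https://example.com/cart/view,200
https://example.com/search?q=shoes,200
https://example.com/account/orders,200
"""


def count_by_section(csv_file, url_column="URL"):
    """Count URLs per first path segment, e.g. "/cart" or "/search"."""
    counts = Counter()
    for row in csv.DictReader(csv_file):
        path = urlsplit(row[url_column]).path
        segments = [s for s in path.split("/") if s]
        section = "/" + segments[0] if segments else "/"
        counts[section] += 1
    return counts


counts = count_by_section(io.StringIO(EXPORT))
for section, total in counts.most_common():
    print(section, total)
```

For a real export, replace `io.StringIO(EXPORT)` with an opened file, for example `open("export.csv", newline="", encoding="utf-8")`, where the file name is a placeholder.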