How to check Ajax URLs in logs

Ajax URLs may not display correctly in search results because they serve an asynchronously loaded part of a page that depends on user actions. Although an Ajax URL has its own address, its content may be identical to that of the main page. Therefore, make sure that search bots do not crawl Ajax URLs and waste crawl budget on them. With JetOctopus, you can quickly detect Ajax URLs in search engine logs.

Step 1. Go to the “Logs” section and open the “Health” dashboard. Select the search engine, period, and domain you want to analyze.

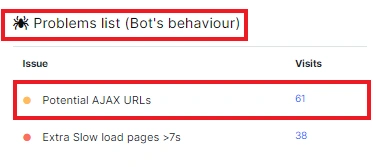

In the “Problems list (Bot’s behavior)” chart, check whether search crawlers have crawled Ajax URLs. Click the number next to the problem to open the data table for detailed analysis.

The “Problems list (Visitors’s behaviour)” chart shows similar information about Ajax URLs visited by users.

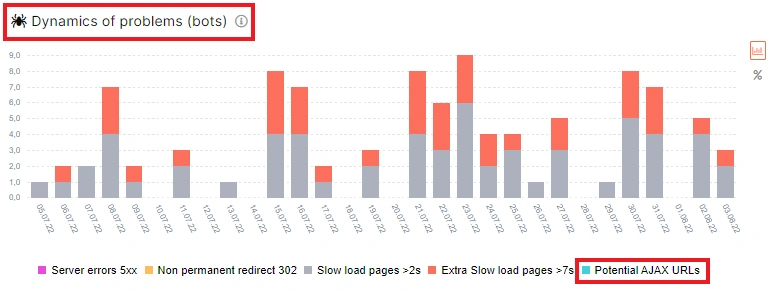

Pay attention to the “Dynamics of problems (bots)” chart to see when search engines were most actively crawling potential Ajax URLs.

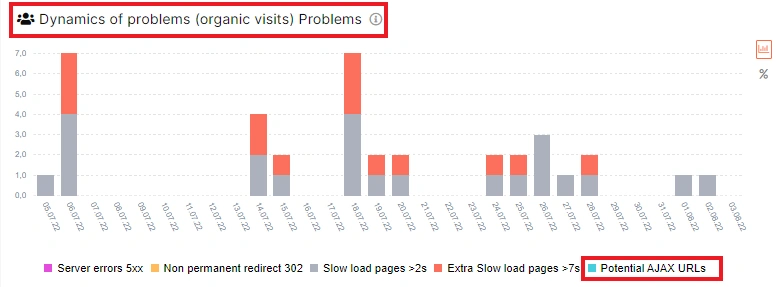
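To reason about such a trend outside the dashboard, here is a minimal sketch that counts Ajax URL hits per day from (day, URL) pairs, for example pairs parsed from your own server logs, and flags days that rise well above the recent average. This is an illustration only, not how JetOctopus builds this chart or its alerts; the function names and the `factor` and `window` defaults are hypothetical choices.

```python
from collections import Counter
from datetime import date


def daily_counts(hits):
    """Count Ajax URL hits per day from (day, url) pairs."""
    return Counter(day for day, _url in hits)


def days_with_spike(counts, factor=2.0, window=7):
    """Return days whose count exceeds `factor` times the average of the
    previous `window` days that have data. The first day is skipped
    because it has no baseline to compare against."""
    days = sorted(counts)
    flagged = []
    for i, day in enumerate(days):
        previous = days[max(0, i - window):i]
        if not previous:
            continue
        baseline = sum(counts[d] for d in previous) / len(previous)
        if counts[day] > factor * baseline:
            flagged.append(day)
    return flagged


# Hypothetical sample: a sudden jump in Ajax URL hits on March 3.
hits = (
    [(date(2024, 3, 1), "/ajax/a")] * 10
    + [(date(2024, 3, 2), "/ajax/b")] * 12
    + [(date(2024, 3, 3), "/ajax/c")] * 40
)
print(days_with_spike(daily_counts(hits)))  # [datetime.date(2024, 3, 3)]
```

Comparing against a rolling average rather than a fixed threshold keeps the check meaningful for both small and large sites.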

Also check the “Dynamics of problems (organic visits)” chart, which shows how users' visits to Ajax URLs changed over the selected period. Organic visits to Ajax URLs mean that these URLs are already indexed and users reach them from the SERP. However, an Ajax URL may not contain all the information users need.

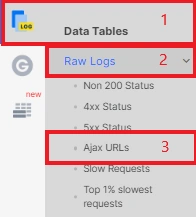

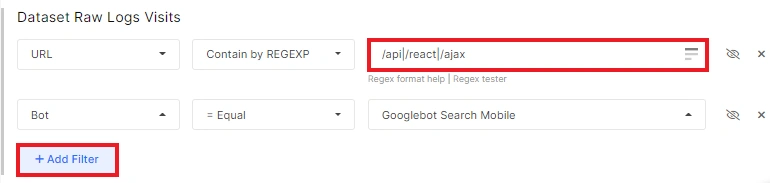

Step 2. Go to the “Raw Logs” data table to perform an in-depth analysis of the Ajax URLs visited by search robots.

In addition to /api|/react|/ajax, you can add other URL paths to the filter if you know how Ajax URLs are generated on your website. You can also add any other filters you need.
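If you want to cross-check this filter against your own server logs, a minimal sketch of the same idea follows. It assumes the Apache/Nginx "combined" log format and identifies the bot by the `Googlebot` user-agent substring; both are assumptions, and the function and sample names are hypothetical. A user-agent match alone does not prove that a request came from the real search engine.

```python
import re

# Path pattern from the "Raw Logs" filter; extend it if your site
# builds Ajax URLs under other paths.
AJAX_PATH = re.compile(r"/api|/react|/ajax")

# Assumption: logs use the Apache/Nginx "combined" format.
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def ajax_hits(lines, bot_token="Googlebot"):
    """Yield (path, status) for bot requests whose path looks like an Ajax URL.

    Matching the bot by a user-agent substring is a simplification: it does
    not verify that the request really came from the search engine.
    """
    for line in lines:
        m = COMBINED.match(line)
        if not m:
            continue  # skip lines that are not in the expected format
        if bot_token in m["agent"] and AJAX_PATH.search(m["path"]):
            yield m["path"], int(m["status"])


BOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE = [
    f'66.249.66.1 - - [10/Mar/2024:10:00:00 +0000] "GET /ajax/cart?id=5 HTTP/1.1" 200 512 "-" "{BOT_UA}"',
    f'66.249.66.1 - - [10/Mar/2024:10:00:05 +0000] "GET /catalog/shoes HTTP/1.1" 200 2048 "-" "{BOT_UA}"',
    '203.0.113.7 - - [10/Mar/2024:10:01:00 +0000] "GET /ajax/cart?id=5 HTTP/1.1" 200 512 "https://www.google.com/" "Mozilla/5.0 (Windows NT 10.0) Chrome/122.0"',
]

print(list(ajax_hits(SAMPLE)))  # [('/ajax/cart?id=5', 200)]
```

The third sample line is a user visit to the same Ajax URL, so it is skipped here; swapping the user-agent check for a referrer check would give a rough view of the organic visits discussed in Step 1.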

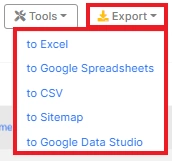

Step 3. Bulk export Ajax URLs. Click the “Export” button and select the desired format. You can also export Ajax URLs from logs to DataStudio.

What to do if you find Ajax URLs in the logs:

- monitor the dynamics of Ajax URLs; you can set up an alert that notifies you when the number of Ajax URLs in the logs increases;

- block Ajax URLs with the robots.txt file;

- find the entry points through which search robots reach Ajax URLs; for example, check whether links to them appear in texts, descriptions, etc., and replace those URLs;

- check which Ajax URLs are indexed and, if necessary, set their canonical to the main page.
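To look for such entry points in your own pages, you could scan their HTML for links whose path matches the same Ajax pattern. Below is a minimal sketch with Python's standard `html.parser`; the class and function names and the sample markup are hypothetical, and it only inspects `<a href>` links, not URLs that JavaScript builds at runtime.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urlparse

# Same path pattern as the "Raw Logs" filter; adjust it to your site.
AJAX_PATH = re.compile(r"/api|/react|/ajax")


class AjaxLinkFinder(HTMLParser):
    """Collect href values of <a> tags whose path looks like an Ajax URL."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Check only the path, so a host such as api.example.com is not flagged.
        if href and AJAX_PATH.search(urlparse(href).path):
            self.links.append(href)


def ajax_links(html):
    """Return Ajax-like link targets found in an HTML string."""
    finder = AjaxLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.links


page = ('<p>Pick a <a href="/ajax/filter?color=red">red</a> one or browse the '
        '<a href="https://example.com/catalog/red">catalog</a>.</p>')
print(ajax_links(page))  # ['/ajax/filter?color=red']
```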

Remember not to block Ajax URLs in robots.txt right away if they appear in the SERP. Analyze the potential loss of traffic and choose the option that best preserves it: a canonical tag, a redirect, or blocking the pages.
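If, after weighing the traffic risk, you decide to block Ajax URLs, you can check a draft robots.txt against the URLs from your export before publishing it. Below is a minimal sketch with Python's standard `urllib.robotparser`; the `Disallow` paths, the example.com URLs, and the `blocked_urls` name are hypothetical. Note that this parser does simple prefix matching and does not implement the `*` and `$` wildcards Google supports, so its results can differ from Googlebot's for wildcard rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules; use the paths under which your site builds Ajax URLs.
ROBOTS_TXT = """\
User-agent: *
Disallow: /api/
Disallow: /react/
Disallow: /ajax/
"""


def blocked_urls(urls, robots_txt=ROBOTS_TXT, agent="Googlebot"):
    """Return the URLs that the given robots.txt disallows for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(agent, u)]


urls = [
    "https://example.com/ajax/cart?id=5",
    "https://example.com/catalog/shoes",
]
print(blocked_urls(urls))  # ['https://example.com/ajax/cart?id=5']
```

Running the check on both the Ajax URLs and a sample of normal pages helps confirm that a rule does not accidentally block content you want indexed.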