Log file integration: everything you need to know

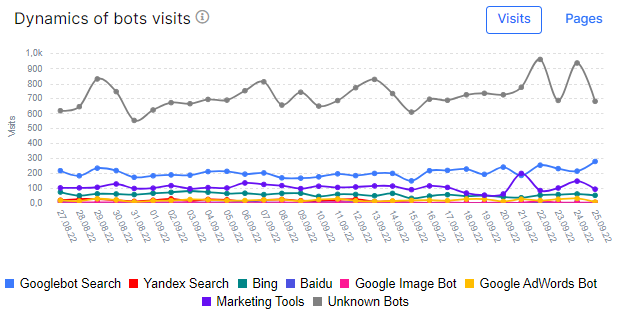

Analyzing search engine logs is a regular task for most SEO specialists. Log files contain complete, first-hand records of how search engines crawl your website, which makes log file analysis an essential part of website SEO. However, standard applications such as Kibana or Datadog are inconvenient for this kind of analysis, and if you export log lines to CSV or other files, working with that data in spreadsheets can be difficult. That is why it makes sense to use JetOctopus as a log analyzer. In this article, we explain how to integrate logs into JetOctopus, which errors can occur during integration, and how to fix them.

Note that you can use the free 7-day trial after a demo call to see how easy it is to integrate logs and how quickly you can get the first insights from search engine logs!

Log analysis helps you stay ahead of Google warnings and errors for your website, so we recommend getting the maximum benefit from this data. Read about what to focus on when analyzing logs in the article The Ultimate Guide to Log Analysis – a 21 Point Checklist.

Where to get search engine logs

If you haven’t used a log analyzer before, you may be wondering where to get the logs from.

1. If you have a large website and a development team, the logs are almost certainly already being collected. Various programs and services are usually used to visualize them, for example, Kibana, Datadog, Graylog, Grafana, Databox, Looker, and so on. Unfortunately, such services may not store logs for long because of their large volume.

To get the logs, contact the development team (DevOps is the best place to start) and ask for access to one of the services that collect them. Then select the desired period and export the data to a text file.

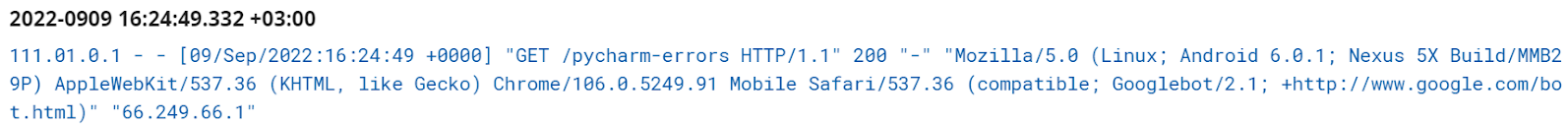

This is what a record of a Googlebot visit looks like in one of the services.

2. Another way is to get Access Log records from your hosting provider. The access log contains raw records of requests from all visitors to your website. On many hosting plans, only the last day’s data is kept as a plain file; older logs are archived, and the archives are deleted at the end of each month.

What does “raw logs” mean? You receive a large file with many lines and no separate, labeled sections for user agents, messages, IPs, etc. Handling such files manually is very inconvenient.

To access the raw logs, connect to your hosting account via FTP or SSH.

3. You can also contact DevOps to integrate logs directly from your web server.
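If you download access logs from your hosting account (point 2 above), the current day’s log is usually a plain file while older days arrive as archives. A minimal Python sketch that reads both the same way, assuming the archives are gzip-compressed with a `.gz` suffix and named like `access.log*` (common conventions, but check your host); the function names are hypothetical:

```python
import gzip
from pathlib import Path
from typing import Iterator


def read_log_lines(path: Path) -> Iterator[str]:
    """Yield lines from a plain or gzip-compressed access log.

    Assumption: archived logs end with ".gz"; adjust for your host.
    """
    opener = gzip.open if path.suffix == ".gz" else open
    # "rt" opens the file in text mode for both gzip.open and open.
    with opener(path, "rt", encoding="utf-8", errors="replace") as handle:
        for line in handle:
            yield line.rstrip("\n")


def read_all_logs(directory: Path, pattern: str = "access.log*") -> Iterator[str]:
    """Yield lines from every matching log file in a directory, in name order."""
    for path in sorted(directory.glob(pattern)):
        yield from read_log_lines(path)
```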

Logs contain standard data:

- user agent,

- status code,

- IP address,

- access date,

- URL/path/query,

- referrer,

- HTTP version,

- and HTTP request type (GET or POST).
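Many NGINX and Apache servers write these fields in the standard “combined” log format. A minimal Python sketch that splits one combined-format line into those fields; it assumes the default format (a custom NGINX `log_format` needs a different pattern), and `LogRecord` and `parse_line` are hypothetical names:

```python
import re
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

# Default "combined" format:
# $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<url>\S+) (?P<protocol>HTTP/[\d.]+)" '
    r'(?P<status>\d{3}) \S+ "(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


@dataclass
class LogRecord:
    ip: str
    time: datetime
    method: str
    url: str
    protocol: str
    status: int
    referrer: str
    user_agent: str


def parse_line(line: str) -> Optional[LogRecord]:
    """Parse one combined-format line; return None if it does not match."""
    match = COMBINED.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    return LogRecord(
        ip=fields["ip"],
        # e.g. "10/Oct/2023:13:55:36 +0000"
        time=datetime.strptime(fields["time"], "%d/%b/%Y:%H:%M:%S %z"),
        method=fields["method"],
        url=fields["url"],
        protocol=fields["protocol"],
        status=int(fields["status"]),
        referrer=fields["referrer"],
        user_agent=fields["user_agent"],
    )
```

For example, parsing `66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /catalog?page=2 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"` gives a record with `status == 200` and `url == "/catalog?page=2"`; a referrer of `-` means none was sent.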

Most of this data comes from the request that the client (a browser or a bot) sends to your web server, and the server records it together with its response, which is why these logs are also called “server logs”. They should not be confused with change logs on your website; for example, such logs can record when and which users edited the content of a page.

How to integrate logs into JetOctopus

There are three ways to integrate search engine logs into JetOctopus.

Integration directly from your NGINX web server or Cloudflare

With this method, you receive data in real time. This very useful feature lets you monitor bot behaviour immediately during site migrations or updates.

How it works: theory

Each time a bot makes a request, your server sends a small packet of data (200-300 bytes on average) to our servers in real time. We analyze this data immediately and store it, so it appears in the Raw logs report without delay.

How it works: practice

This technology is built on the UDP protocol. Its main advantage is that it doesn’t impact your server: UDP does not wait for any reply, so even if all our servers break down, your site’s performance won’t be affected at all.
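Sending a UDP datagram is fire-and-forget: there is no connection setup and no waiting for an answer, and a log line of a few hundred bytes fits easily in one datagram. A minimal Python sketch of the sending side, for illustration only; it is not the actual JetOctopus or NGINX implementation, `send_log_line` is a hypothetical helper, and the collector address is whatever the caller supplies:

```python
import socket
from typing import Tuple


def send_log_line(sock: socket.socket, line: str, collector: Tuple[str, int]) -> int:
    """Send one log line as a single UDP datagram and return the bytes sent.

    sendto() returns once the OS accepts the datagram; nothing waits for
    the collector to answer, so a slow or offline collector does not
    hold up request handling.
    """
    payload = line.encode("utf-8")
    return sock.sendto(payload, collector)


def make_sender() -> socket.socket:
    """Create an IPv4 UDP socket (SOCK_DGRAM means connectionless datagrams)."""
    return socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
```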

Security

The only way to intercept data sent via Live Stream log transmission is to gain direct access to the core routers between your server and ours. This is practically impossible, so you don’t have to worry.

How to do it

All you need to do is add two lines to your NGINX configuration.

For the Cloudflare integration, all you need is to add code to Cloudflare Workers.

Please remember that your server doesn’t send us any sensitive data such as passwords, user credentials, etc.

Automatic daily export of log files

Together with our technical team, you create a location and choose how to store the log files (FTP, for example), and JetOctopus fetches these files daily. With this method, data arrives with a delay of 1 hour to 1 day, and you don’t have to do anything manually.

How it works

Together, we choose the method and location of log storage and share all the necessary access credentials.

Then your system administrator sets up an automatic export of the previous day’s data, so that it is available at the beginning of the current day.
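The “previous day” window that such an export covers can be computed as follows. A minimal Python sketch, assuming the job runs shortly after midnight and that timestamps are timezone-aware and compared in UTC (your server may log in local time); `previous_day_window` and `lines_in_window` are hypothetical helpers, not part of JetOctopus:

```python
from datetime import datetime, time, timedelta, timezone
from typing import Iterable, Iterator, Tuple


def previous_day_window(now: datetime) -> Tuple[datetime, datetime]:
    """Return [start, end) covering the full UTC calendar day before `now`.

    `now` must be timezone-aware.
    """
    today = now.astimezone(timezone.utc).date()
    start = datetime.combine(today - timedelta(days=1), time.min, tzinfo=timezone.utc)
    return start, start + timedelta(days=1)


def lines_in_window(
    records: Iterable[Tuple[datetime, str]], start: datetime, end: datetime
) -> Iterator[str]:
    """Yield raw lines whose timezone-aware timestamp falls in [start, end)."""
    for timestamp, line in records:
        if start <= timestamp < end:
            yield line
```

The same `lines_in_window` filter works for a manual export of any other period: pass your own `start` and `end`.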

This is a one-time setup on the client’s side: after it, all subsequent log updates land in the storage automatically, and there is no need to export files manually every day.

Every morning, JetOctopus retrieves fresh log data from this storage, analyzes it, and imports it into our system, so the dashboards are updated automatically.

Security

This is the most secure way to integrate logs. Files are transferred via HTTPS, and data is accessible only from an allowed IP address and with a password.

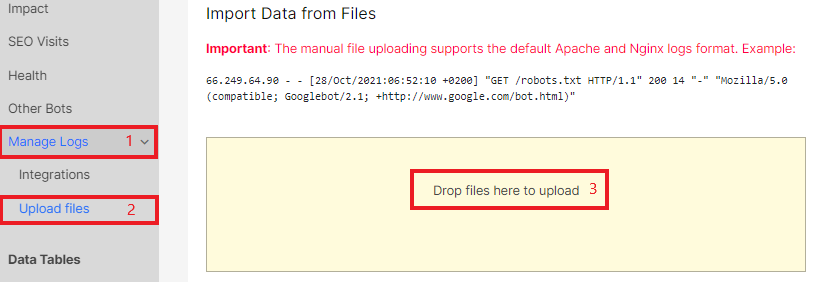

Manual upload of log files directly in the JetOctopus interface

Processing such files may take a little longer than integration directly from your web server, but you can choose the period and data for analysis yourself, within your limits.

How to do it

Your system administrator extracts data for a particular period and transfers it via FTP, S3, etc.

Security

As with automatic export, files are transferred via HTTPS, and data is accessible only from an allowed IP address and with a password.

What types of log files do we support?

JetOctopus supports all types of log files. If a file type error occurs during integration, our technical team will integrate your file manually within 24 hours, and the logs will appear in JetOctopus reports.

Do I need to separate search engine logs from user logs myself?

No, you don’t need to. We use only search engine logs and handle user logs with complete security: we do not use any personal data of users. However, you can remove user logs to reduce file size when uploading manually, which speeds up processing. But if you are not sure which log lines are 100% from users, we recommend not separating them. JetOctopus also checks whether a Googlebot visit is real or comes from a fake bot emulating Google by doing a reverse DNS lookup, and fake bot data is very important for analyzing your website.
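A fake-bot check like this is usually done in two steps, which is also how Google recommends verifying Googlebot: a reverse DNS lookup on the IP, then a forward lookup on the returned hostname to confirm it points back to the same IP. A minimal Python sketch using only the standard library; it is not the JetOctopus implementation, the accepted domain list is an assumption to check against Google’s current documentation, and the lookups are injectable so the logic can be tested without network access:

```python
import socket
from typing import Callable, List

# Accepted hostname suffixes (assumption: check Google's current documentation).
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def system_reverse_lookup(ip: str) -> str:
    """IP -> hostname via the system resolver (PTR record)."""
    return socket.gethostbyaddr(ip)[0]


def system_forward_lookup(host: str) -> List[str]:
    """Hostname -> all IPv4/IPv6 addresses via the system resolver."""
    return [info[4][0] for info in socket.getaddrinfo(host, None)]


def is_real_googlebot(
    ip: str,
    reverse_lookup: Callable[[str], str] = system_reverse_lookup,
    forward_lookup: Callable[[str], List[str]] = system_forward_lookup,
) -> bool:
    """Return True only if reverse and forward DNS both confirm a Google host."""
    try:
        host = reverse_lookup(ip).rstrip(".").lower()
    except OSError:  # no PTR record, resolver failure, etc.
        return False
    if not host.endswith(GOOGLE_SUFFIXES):
        return False
    try:
        # Forward confirmation: whoever controls an IP can set its PTR record,
        # so the hostname must also resolve back to the same IP.
        return ip in forward_lookup(host)
    except OSError:
        return False
```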

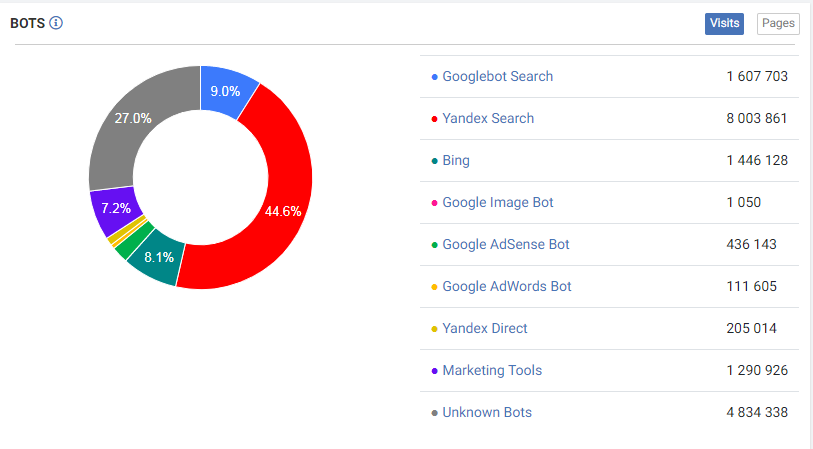

More information: How to analyze what types of search robots visit your website.

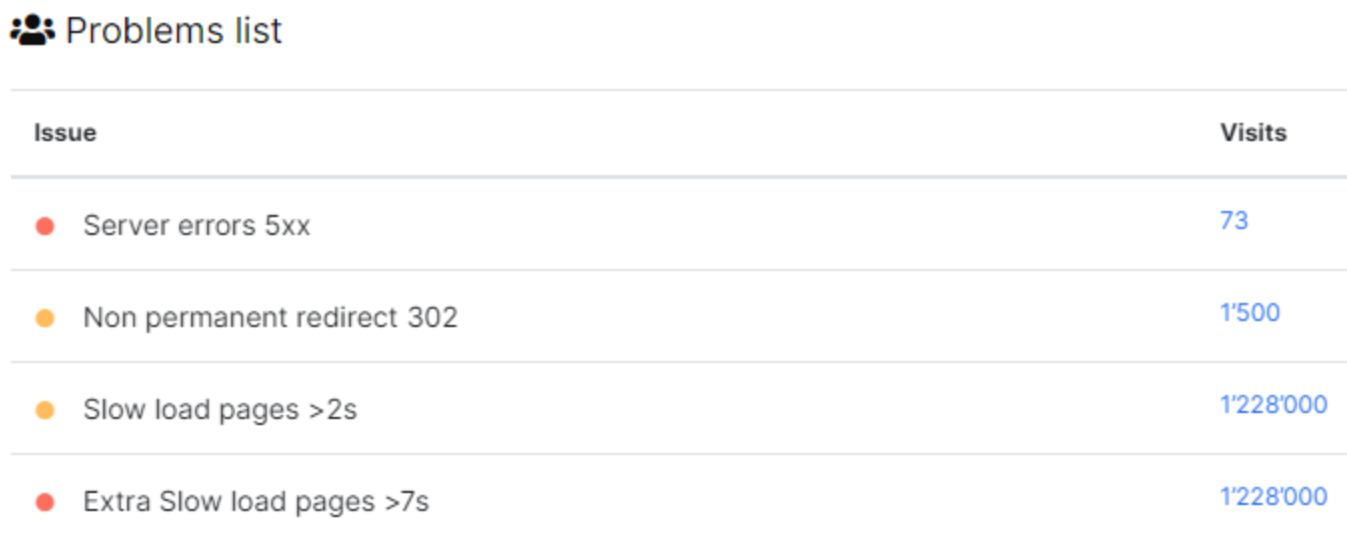

Possible problems when integrating logs and how to solve them

1. A file type error occurred during manual upload.

Our technical team will resolve the issue within 24 hours, so there is no need to worry!

2. Logs stopped updating in the log reports.

Usually, the cause is a change in your web server settings or access settings. Contact DevOps or the site administrator to verify the connection with JetOctopus. If you use Cloudflare or AWS, you can check the connection in the JetOctopus interface.

If everything is fine on your side, contact our online chat: we will help you!

3. Outdated logs are displayed in the data tables.

If you uploaded the log files manually, we recommend re-uploading them. Pay attention to the upload mode: Append logs data, Skip updates for uploaded days, or Update log data for existing days. To add all log lines from the file, select the first one. To add data only for dates that have no logs yet, choose “Skip updates for uploaded days”. To update data for days that already have logs, select the last one.

4. The number of logs in JetOctopus differs from the number of uploaded log lines.

It is likely that the uploaded data contains many records of fake bot visits. Also check which user agent type you filtered the logs by in JetOctopus.
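To make the three upload modes from point 3 concrete, here is a minimal Python model of how each could combine already stored and newly uploaded lines, day by day. The mode strings, the `merge_upload` function, and the day-to-lines dictionary are hypothetical illustrations of the behaviour described above, not how JetOctopus stores logs internally:

```python
from typing import Dict, List

# Hypothetical in-memory model: day ("YYYY-MM-DD") -> list of log lines.
DayLogs = Dict[str, List[str]]


def merge_upload(existing: DayLogs, uploaded: DayLogs, mode: str) -> DayLogs:
    """Combine stored and uploaded log lines per day without mutating inputs.

    "append" -> Append logs data: add every uploaded line to what is stored
    "skip"   -> Skip updates for uploaded days: only add days with no stored logs
    "update" -> Update log data for existing days: replace stored lines per day
    """
    result = {day: list(lines) for day, lines in existing.items()}
    for day, lines in uploaded.items():
        if mode == "append":
            result.setdefault(day, []).extend(lines)
        elif mode == "skip":
            if day not in result:
                result[day] = list(lines)
        elif mode == "update":
            result[day] = list(lines)
        else:
            raise ValueError(f"unknown mode: {mode!r}")
    return result
```

In this model, re-uploading the same file in append mode duplicates lines for days that already have data, which matches the advice to use the update mode when you need to refresh days that already have logs.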

What data do we take from logs?

A log file is usually a text file that records each request Googlebot makes to your site. Each entry includes the server IP, client IP, timestamp of the visit, requested URL, HTTP status code, user agent, method (GET/POST), etc.

What we need:

- IP address

To verify the source of a request properly, you need to check the IP address from which it was made. User-agent detection alone is not reliable, because fake bots can use Google’s user-agent “names” to crawl your site. To verify Googlebot, JetOctopus runs a reverse DNS lookup.

- User-Agent

Each search engine has a variety of bots with different User-Agent “names”. Here is the table with Google’s User-Agents. JetOctopus uses the User-Agent string to identify each crawler.

- The page’s URL

- The page’s Status Code (HTTP response)
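The basic idea of identifying a crawler from its User-Agent string can be sketched in Python. The token list below is a small illustrative sample of real crawler tokens, not a complete table and not JetOctopus’s own rules, and `identify_crawler` is a hypothetical helper. Because user agents can be faked, treat the result as a claim to verify by IP, not as proof:

```python
from typing import Optional

# Illustrative crawler tokens, most specific first, so that
# "Googlebot-Image" is not reported as plain "Googlebot".
CRAWLER_TOKENS = [
    ("Googlebot-Image", "Googlebot Image"),
    ("Googlebot-Video", "Googlebot Video"),
    ("AdsBot-Google", "AdsBot Google"),
    ("Googlebot", "Googlebot"),
    ("bingbot", "Bingbot"),
    ("YandexBot", "YandexBot"),
]


def identify_crawler(user_agent: str) -> Optional[str]:
    """Return a crawler name for a User-Agent string, or None for other clients.

    Matching is a case-insensitive substring search over CRAWLER_TOKENS.
    """
    ua = user_agent.lower()
    for token, name in CRAWLER_TOKENS:
        if token.lower() in ua:
            return name
    return None
```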

Apart from these main parts, there are also secondary but still meaningful log components that provide clues about bots’ behavior:

- Load time.

How long the bot waited until the server returned the full HTML code. This is not the user’s load time but the HTML generation time, which is crucial on large sites.

- Referrer.

If we have referrer data, we can separate bots’ visits from users’ organic visits. This gives you unsampled data about pages with organic traffic (you will find these reports in SEO Active Pages).
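A rough version of this referrer check can be sketched in Python. It assumes a simple rule (a request whose User-Agent does not contain “bot” and whose referrer host includes a search engine name counts as organic) and a deliberately short, hypothetical list of search engine names; it is not the JetOctopus logic, and real detection needs more care:

```python
from urllib.parse import urlparse

# Hypothetical, deliberately short list of search engine host labels.
SEARCH_ENGINE_NAMES = {"google", "bing", "yandex", "duckduckgo"}


def is_organic_visit(referrer: str, user_agent: str) -> bool:
    """Rough heuristic: a non-bot request referred by a search engine host."""
    if "bot" in user_agent.lower():
        return False
    # "-" or an empty referrer parses to no hostname.
    host = urlparse(referrer).hostname or ""
    # Compare whole host labels, so "www.google.co.uk" matches "google"
    # but "notgoogle.example" does not.
    return any(label in SEARCH_ENGINE_NAMES for label in host.split("."))
```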

How we ensure the security of your data

In our experience, users often have specific concerns and fears about data security. And we totally get it.

So before you start using any tool, we want to highlight that JetOctopus complies with the GDPR for personal data and doesn’t use any sensitive data such as POST requests with users’ passwords, invoice data, etc. We don’t collect, analyze, or store this data.

We do not process or store users’ log records, even if you provide us with this data.

An IP address is saved only if the User-Agent string contains the word “bot”.
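The IP rule stated above can be expressed as a small filter. A minimal Python sketch, assuming a case-insensitive substring match (the text does not say whether the match is case-sensitive); the `Hit` record and `apply_ip_rule` function are hypothetical:

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Hit:
    ip: Optional[str]
    user_agent: str
    url: str


def apply_ip_rule(hit: Hit) -> Hit:
    """Keep the IP only when the User-Agent contains "bot"; otherwise drop it."""
    if "bot" in hit.user_agent.lower():
        return hit
    # dataclasses.replace returns a copy with only the IP field cleared.
    return replace(hit, ip=None)
```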

If you need additional legal grounds for the data transfer, we can sign a mutual NDA (non-disclosure agreement). This is not mandatory; it is done at your request, and you can propose your own NDA document if needed.

Our Privacy Policy is published on our website, where you can read it in full. Please read it carefully: it explains what data we collect, what we use it for, and how you can exercise your rights.

Our technical team will be happy to help you solve all log issues.