How to analyze logs by subdomains

Search engines may crawl different subdomains at different frequencies. This can depend on the indexing settings, the robots.txt file (each subdomain needs its own robots.txt), correctly formed sitemaps, and so on. Besides the subdomains that are open for crawling and indexing, the logs may also contain technical, staging, and other subdomains that should be closed to crawlers. Analyzing logs by subdomain helps you identify such subdomains and find problems with how search engines crawl them.
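Because each subdomain is governed by its own robots.txt, the same bot can be allowed on one host and blocked on another. The sketch below illustrates this with Python's standard `urllib.robotparser`. The host names and robots.txt bodies are made-up examples, and `is_crawl_allowed` is a hypothetical helper, not part of any tool described here.

```python
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt body lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt bodies, one per subdomain (assumed for illustration).
ROBOTS_BY_HOST = {
    "www.example.com": "User-agent: *\nDisallow: /cart/\n",
    "staging.example.com": "User-agent: *\nDisallow: /\n",
}

for host, body in ROBOTS_BY_HOST.items():
    allowed = is_crawl_allowed(body, "Googlebot", f"https://{host}/catalog/")
    print(host, "crawl allowed" if allowed else "crawl blocked")
```

In practice you would fetch `/robots.txt` from each subdomain instead of using inline strings.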

Step 1. To see which subdomains are crawled by search engines, go to the “Logs” – “Overview” section. Select the desired period.

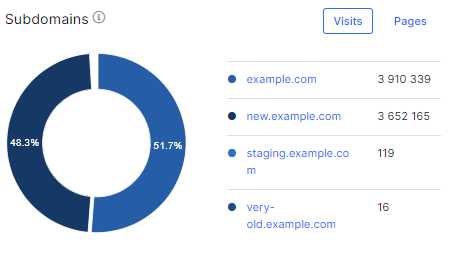

In the “Subdomains” chart, you will see the share of each subdomain crawled by the selected bot during the selected period. Click a chart segment to go to the data table.

We recommend analyzing subdomain crawling separately for each search engine: Googlebot may find and crawl one set of subdomains, while Bing, for example, may crawl old technical subdomains.

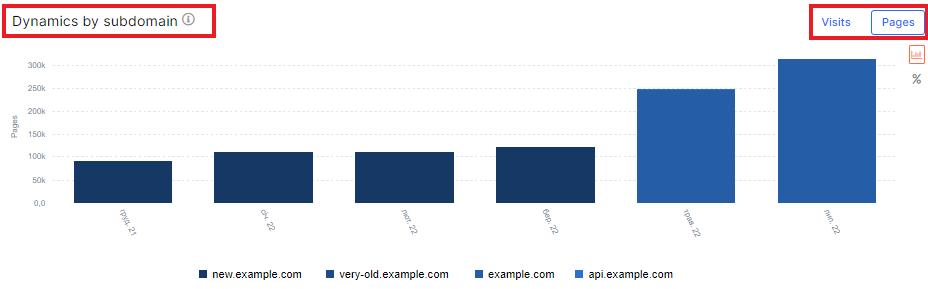
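If you want to cross-check the per-bot breakdown against raw server logs, a minimal sketch could look like the one below. It assumes each log line starts with the requested host and ends with the quoted user agent (a combined log format with the virtual host prepended); your server's format may differ. The sample lines, IP addresses, and the `hits_by_bot_and_host` helper are illustrative assumptions.

```python
import re
from collections import Counter

# Assumed layout: <host> <ip> - - [<time>] "<request>" <status> <bytes> "<referer>" "<user-agent>"
LINE_RE = re.compile(r'^(?P<host>\S+) .*"(?P<agent>[^"]*)"$')

# Substrings used to recognize each bot in the user-agent string.
BOTS = {"Googlebot": "googlebot", "Bingbot": "bingbot"}


def hits_by_bot_and_host(lines):
    """Count log hits per (bot, subdomain) pair; non-bot lines are skipped."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line.strip())
        if not match:
            continue
        agent = match.group("agent").lower()
        for bot, token in BOTS.items():
            if token in agent:
                counts[(bot, match.group("host"))] += 1
                break
    return counts


# Made-up sample lines for illustration only.
SAMPLE_LINES = [
    'www.example.com 66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    'staging.example.com 157.55.39.1 - - [10/Oct/2023:13:56:01 +0000] "GET /test HTTP/1.1" 200 128 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    'www.example.com 203.0.113.7 - - [10/Oct/2023:13:57:12 +0000] "GET /about HTTP/1.1" 200 256 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

for (bot, host), hits in sorted(hits_by_bot_and_host(SAMPLE_LINES).items()):
    print(bot, host, hits)
```

Matching on the user-agent string alone can be fooled by fake bots, so treat the counts as a rough check rather than verified bot traffic.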

Step 2. Analyze how the crawl frequency of all subdomains changed during the selected period. This data is displayed on the “Dynamics by subdomain” chart. Here you can easily find the moment when a technical subdomain was opened for crawling by mistake or when an internal subdomain appeared in internal links. If necessary, you can roll back the website to the desired version.

Pay attention to the feature that lets you compare the number of crawled pages with the number of visits by search robots. Search robots may visit the main page of a subdomain a thousand times, or they may crawl every page of a subdomain only once. If the pages were crawled only once and you managed to block the unnecessary subdomain, it will likely not get into the index.

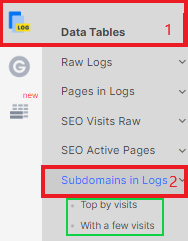
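The difference between crawled pages and visits can be made concrete with a small sketch: visits count every bot request, while pages count distinct URLs. The input format (pairs of host and path) and the `pages_and_visits` helper are assumptions for illustration.

```python
from collections import defaultdict


def pages_and_visits(hits):
    """Summarize bot hits per subdomain.

    hits is an iterable of (host, path) pairs, one per bot request.
    Returns {host: {"visits": total requests, "pages": distinct paths}}.
    """
    visits = defaultdict(int)
    pages = defaultdict(set)
    for host, path in hits:
        visits[host] += 1
        pages[host].add(path)
    return {
        host: {"visits": visits[host], "pages": len(pages[host])}
        for host in visits
    }


# Made-up data: the main page of one subdomain is hit repeatedly,
# while each page of the other subdomain is hit once.
sample_hits = [("www.example.com", "/")] * 3 + [
    ("blog.example.com", "/post-1"),
    ("blog.example.com", "/post-2"),
]

for host, stats in pages_and_visits(sample_hits).items():
    print(host, stats)
```

A subdomain with many visits but few pages suggests repeated hits on the same URLs; equal numbers suggest each page was crawled once.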

Step 3. For deeper analysis, go to the “Subdomains in logs” data table. Here you will see how many pages search engines crawled and how many visits they made for each subdomain.

You can compare this data with the crawl results. If bots visit the subdomain with fewer pages and ignore the larger subdomain, there are likely issues with sitemaps, internal linking, or crawling/indexing rules.
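One rough way to express this comparison is the share of a subdomain's known pages that bots actually requested. The sketch below assumes you have two per-subdomain counts: pages found by a site crawl and distinct pages seen in the logs. The numbers and the `log_coverage` helper are hypothetical.

```python
def log_coverage(crawled_pages, pages_in_logs):
    """Return, per subdomain, the share of crawled pages that appear in logs."""
    report = {}
    for host, total in crawled_pages.items():
        seen = pages_in_logs.get(host, 0)
        report[host] = round(seen / total, 2) if total else 0.0
    return report


# Made-up counts for illustration.
crawled_pages = {"shop.example.com": 1000, "blog.example.com": 200}
pages_in_logs = {"shop.example.com": 50, "blog.example.com": 180}

print(log_coverage(crawled_pages, pages_in_logs))
```

Here the larger subdomain has much lower coverage than the smaller one, which is the pattern that points to sitemap, linking, or rule issues.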

You can find data on the most popular and least popular subdomains in the datasets “Subdomains in Logs” – “Top by visits” and “With a few visits”.

Also check that all the required subdomains are in the logs. Perhaps one of the subdomains you want to see in the SERP is blocked by the robots.txt file.
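This check boils down to comparing two sets: the subdomains you expect to be crawled and the subdomains that actually appear in the logs. A minimal sketch, with made-up host names and a hypothetical `compare_subdomains` helper:

```python
def compare_subdomains(expected, found_in_logs):
    """Return (missing, unexpected) subdomains as sorted lists."""
    expected, found = set(expected), set(found_in_logs)
    missing = sorted(expected - found)      # wanted in search, never crawled
    unexpected = sorted(found - expected)   # crawled, but not on your list
    return missing, unexpected


# Made-up host lists for illustration.
expected_hosts = ["www.example.com", "blog.example.com", "shop.example.com"]
hosts_in_logs = ["www.example.com", "blog.example.com", "staging.example.com"]

missing, unexpected = compare_subdomains(expected_hosts, hosts_in_logs)
print("Missing from logs:", missing)
print("Unexpected in logs:", unexpected)
```

Missing hosts are candidates for a robots.txt or accessibility check; unexpected hosts are candidates for closing from crawling.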

If you checked robots.txt and the indexing rules, but the subdomain is not in the logs, read the article “Why is not all the traffic of search robots displayed in the logs?” to find the reason.

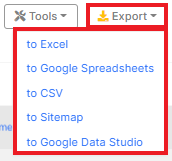

Step 4. Bulk export the data in a convenient format: CSV, Google Sheets, Excel, or DataStudio.

Step 5. Close unnecessary subdomains from crawling or indexing using the robots.txt file, the meta robots tag, or the X-Robots-Tag header. If the desired subdomains are not in the search engine logs, check that they are accessible to search engines.
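As one possible illustration of the X-Robots-Tag option, the sketch below wraps a Python WSGI application so that responses for selected hosts carry an `X-Robots-Tag: noindex, nofollow` header. The host names, `demo_app`, and the `block_indexing` wrapper are assumptions for this example; in many setups the header is set in the web server configuration instead.

```python
def block_indexing(app, blocked_hosts):
    """Wrap a WSGI app so responses for blocked_hosts get a noindex header."""
    blocked = {host.lower() for host in blocked_hosts}

    def wrapped(environ, start_response):
        # Strip an optional port from the Host header before comparing.
        host = environ.get("HTTP_HOST", "").split(":")[0].lower()

        def patched_start(status, headers, exc_info=None):
            if host in blocked:
                headers = list(headers) + [("X-Robots-Tag", "noindex, nofollow")]
            return start_response(status, headers, exc_info)

        return app(environ, patched_start)

    return wrapped


def demo_app(environ, start_response):
    """Hypothetical application that returns a plain-text page."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"ok"]


# Hypothetical staging host that should stay out of the index.
application = block_indexing(demo_app, ["staging.example.com"])
```

Note that a crawler can only see this header if it is allowed to fetch the page, so a noindex header and a robots.txt block on the same URLs work against each other.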

In addition, you can select the desired subdomain for analysis in each dashboard.