Web page returned a non-200 status code to a search bot. Why do I get a different response code when checking manually?

You may find in your logs that a page returned a 4xx or 5xx response code to a search engine, yet when you check the page manually, it returns a 200 response code. What could cause this?

1. The logs show the response code the page returned to the search engine at a specific date and time, so this is historical data. First, the status code of your page may have changed since that visit. For example, a redirect you configured earlier may have started working, or the problem may have been fixed manually or resolved automatically when the page was updated. Second, if the search robot received a 5xx code, the problem was on the server side. Most server problems are short-lived and are caused by server overload, technical maintenance, and similar events. A manual check will then most likely return a 200 status code because the server is no longer overloaded. Search robots themselves can also overload the server when they crawl intensively.

To confirm this hypothesis, we recommend looking at newer logs or manually checking the pages as a search robot. For example, you can use the “Mobile Friendly Test” or the “URL Inspection Tool” to check in real time which status code the page returns to Googlebot.
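As a rough complement to those tools, the check can be sketched as a small script that requests the same URL with a search-bot user agent and with a browser user agent. This is only an approximation: the function names and the `USER_AGENTS` table are illustrative assumptions, and a spoofed user agent does not reproduce rules based on IP address, location, or cookies.

```python
import urllib.error
import urllib.request

# Illustrative user-agent strings (assumption: adjust them to the bots you care about).
USER_AGENTS = {
    "googlebot": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "browser": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    ),
}


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Stop urllib from following redirects so 3xx codes stay visible."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


def fetch_status(url, user_agent, timeout=10):
    """Return the HTTP status code the server sends for this user agent."""
    opener = urllib.request.build_opener(_NoRedirect)
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with opener.open(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urllib raises for 3xx (not followed here), 4xx and 5xx responses.
        return error.code


def compare_statuses(url, fetch=fetch_status):
    """Map each user-agent label to the status code it received."""
    return {label: fetch(url, agent) for label, agent in USER_AGENTS.items()}
```

Calling `compare_statuses("https://example.com/page")` returns a dictionary with one status code per user-agent label. If the two codes differ, the server probably treats bots and browsers differently. If they match, the difference seen in the logs is more likely historical or tied to the IP address.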

The same can happen with 5xx pages found in crawl results: the 5xx code often cannot be reproduced during a manual check.

More information: Why there are 5xx pages in the crawl results and why they are not reproduced with the manual checking.

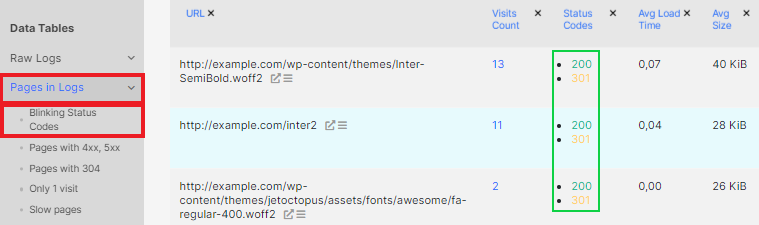

2. Check for blinking status codes in the crawler logs, that is, pages that return different status codes on different visits. Blinking status codes may indicate problems with either the server or the web pages. Under certain conditions, a recheck can return the same response the search robot received.

More information: How to find blinking status codes in search robots logs.

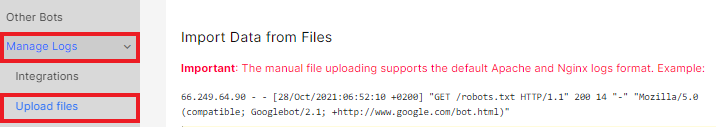
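If you work with parsed log records outside the interface, a minimal sketch of the same idea might look like this. It assumes that "blinking" means a URL whose status code changed at least once in the period, and that the records are `(url, status)` pairs already sorted by time. Both the record shape and the function name are hypothetical.

```python
from collections import defaultdict


def find_blinking_urls(records):
    """Return URLs whose status code changed, with the sequence of changes.

    records: iterable of (url, status) pairs in chronological order.
    """
    transitions = defaultdict(list)
    for url, status in records:
        codes = transitions[url]
        # Store a code only when it differs from the previous one for this URL.
        if not codes or codes[-1] != status:
            codes.append(status)
    # A URL with more than one stored code changed status at least once.
    return {url: codes for url, codes in transitions.items() if len(codes) > 1}
```

The result keeps the order of the changes, so a sequence such as `[200, 503, 200]` points to a temporary server problem, while `[200, 404]` suggests the page was removed.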

3. A page that returned a non-200 code to a search bot while you see 200 or another status code is a common situation when you import log files yourself through the log manager.

In most cases, default Apache and Nginx log files contain relative URLs, without the protocol (HTTP or HTTPS) and the domain. The URL in the log line will therefore be the same for the addresses http://example.com/page and https://example.com/page, although the first URL actually returns a 301 redirect. In the JetOctopus logs data table, you will see blinking status codes for the page https://example.com/page because the log file contained no information about the protocol and the domain (the relative URL was the same).

We recommend paying attention to the types of URLs in the log files. If the log files cover different domains or both HTTP and HTTPS, upload them as separate files and assign each to the correct project. You can also upload log files with absolute URLs.
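To illustrate why relative URLs cause this confusion, here is a small sketch that parses log lines in the common Apache/Nginx layout and rebuilds absolute URLs. The sample log lines are made up, and the scheme and host have to be supplied by you per log file, because the log line itself does not carry them.

```python
import re

# Matches the start of a common/combined log line; anything after the size is ignored.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\S+)'
)


def to_absolute(line, scheme, host):
    """Return (absolute_url, status) for a log line, or None if it does not parse.

    scheme and host are assumptions supplied by the caller, for example
    "https" and "example.com" for the log file of the HTTPS virtual host.
    """
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    return f"{scheme}://{host}{match['path']}", int(match["status"])


# Made-up sample lines: both contain the same relative URL "/page".
http_line = '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /page HTTP/1.1" 301 0'
https_line = '66.249.66.1 - - [10/Oct/2023:13:55:37 +0000] "GET /page HTTP/1.1" 200 5120'

print(to_absolute(http_line, "http", "example.com"))
print(to_absolute(https_line, "https", "example.com"))
```

Without the scheme and host, both lines describe `/page` with two different status codes. Once each line is tied to its own protocol, the 301 and the 200 belong to two distinct URLs and no longer look like a blinking status code.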

4. The response code may differ depending on the user agent, IP address, location, and cookies.

Check the response codes from different search engines and try to find a trend.

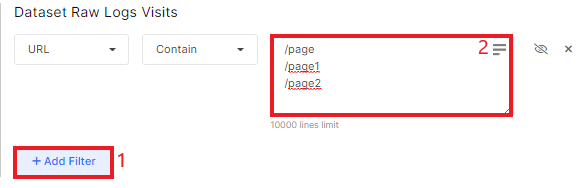

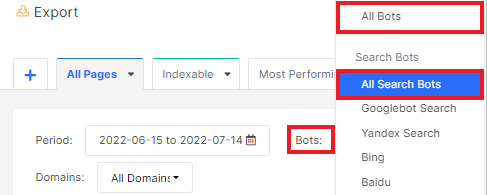

Go to “Data table” – “Raw logs”. In the URL filter, enter the address or list of addresses you need.

Then select “All bots”.

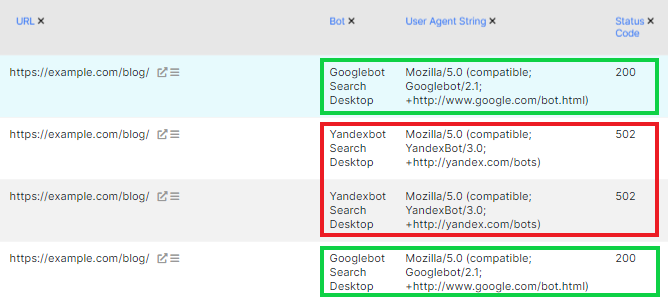

In the results, you will see what response codes this page returned to different user agents. In the example, you can see that the page returned different status codes to different bots.

In such a situation, we recommend contacting your DevOps team or website administrator. There may be additional settings at the server or firewall level.
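Before contacting the administrator, it can help to summarize which bots received which codes. The sketch below does this for raw records of a single URL. It assumes `(user_agent, status)` pairs as input, and the `BOT_MARKERS` table is an illustrative, incomplete list rather than an official classification.

```python
from collections import Counter, defaultdict

# Illustrative substrings used to recognize bots (assumption: extend as needed).
BOT_MARKERS = {
    "Googlebot": "googlebot",
    "bingbot": "bingbot",
    "YandexBot": "yandexbot",
}


def classify_bot(user_agent):
    """Return a bot name based on a substring match, or "other"."""
    lowered = user_agent.lower()
    for name, marker in BOT_MARKERS.items():
        if marker in lowered:
            return name
    return "other"


def status_by_bot(records):
    """Count status codes per bot.

    records: iterable of (user_agent, status) pairs for one URL.
    """
    counts = defaultdict(Counter)
    for user_agent, status in records:
        counts[classify_bot(user_agent)][status] += 1
    return {bot: dict(codes) for bot, codes in counts.items()}
```

A result such as `{"Googlebot": {403: 2}, "bingbot": {200: 1}}` is a concrete trend to share: one bot is consistently refused while another is served normally, which points to a rule at the server or firewall level.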

5. Search engines may be blocked at the server level or by firewalls, either by mistake or deliberately. Clarify this with your web administrator or DevOps team.