Product Update. Bot Dynamics by directories

JetOctopus introduces a new report for effective search engine log analysis: you can now analyze the behavior of search robots by directory. This lets you measure quickly and precisely how much crawl budget each directory receives, which is especially important if you want to understand what your crawl budget is being spent on.

We usually analyze robot behavior for the entire site, its subdomains, and page segments, because the crawl budget is shared by the whole website and all of its subdomains. Directory analysis, however, not only identifies the pages that consume the lion's share of the crawl budget but also shows how your site structure affects crawling by search engines. Does page crawl frequency change with the number of directories in the URL? Do crawlers reach pages nested many directories deep?
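To make the depth question concrete, here is a minimal Python sketch of one way to check it against raw log data. It is not how JetOctopus computes the report. It assumes you already have a list of URLs requested by a search bot (one entry per log line), and it treats every path segment except the last one (the page itself) as a directory, so /catalog/shoes/red-sneakers.html sits two directories deep. The function names are hypothetical.

```python
from collections import Counter, defaultdict
from urllib.parse import urlsplit


def directory_depth(url: str) -> int:
    """Count the directories in a URL path, excluding the final page segment.

    Assumption: "/catalog/shoes/red-sneakers.html" and "/catalog/shoes/" both
    have depth 2, and "/" or "/about" have depth 0.
    """
    parts = urlsplit(url).path.split("/")
    # parts[0] is the empty string before the leading "/", and parts[-1] is the
    # page name ("" for a trailing slash); everything in between is a directory.
    return len([p for p in parts[1:-1] if p])


def crawl_frequency_by_depth(bot_hit_urls: list[str]) -> dict[int, float]:
    """Average bot hits per crawled page, keyed by directory depth."""
    hits: Counter = Counter()
    pages: dict[int, set[str]] = defaultdict(set)
    for url in bot_hit_urls:
        depth = directory_depth(url)
        hits[depth] += 1
        pages[depth].add(url)
    return {depth: hits[depth] / len(pages[depth]) for depth in sorted(hits)}


if __name__ == "__main__":
    # Hypothetical URLs requested by Googlebot, one entry per log line.
    log_urls = [
        "https://example.com/",
        "https://example.com/catalog/",
        "https://example.com/catalog/shoes/",
        "https://example.com/catalog/shoes/red-sneakers.html",
        "https://example.com/catalog/shoes/red-sneakers.html",
        "https://example.com/blog/2023/05/crawl-budget.html",
    ]
    print(crawl_frequency_by_depth(log_urls))
    # {0: 1.0, 1: 1.0, 2: 1.5, 3: 1.0}
```

Because the averages only cover pages that bots actually requested, pages that were never crawled do not appear at all; to see which deep pages bots skip, you would need to compare these numbers against a full list of site URLs.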

With the new “Bot Dynamics by directories” report, you can evaluate bot behavior per directory and analyze crawl activity over a chosen period. The report is also useful for running A/B tests aimed at optimizing the crawl budget.

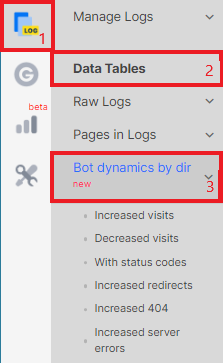

To analyze crawl dynamics by directory, go to “Logs” → “Data tables” → “Bot Dynamics by directories”.

Next, select the desired period, search engine and domain.

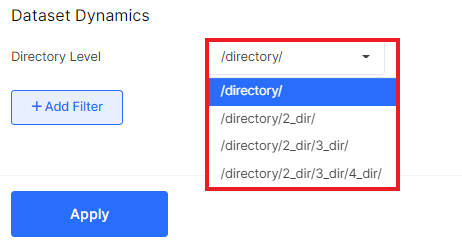

After that, choose the directory level you want to analyze: one segment, two segments, or more. Then add any filters you need.

The results list every directory along with the number of search robot visits during the selected period, the change in visits compared with the previous period, the number of pages visited, the average load time, and the average page size.
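Conceptually, these columns come down to a group-by over bot hits. As a rough illustration only (not JetOctopus's implementation), the Python sketch below assumes each log entry has already been parsed into a hypothetical `BotHit` record with a URL, a load time in milliseconds, and a response size in bytes. It groups hits by the first N directories of the path, which is one plausible reading of the “one segment, two segments, or more” setting, and it skips URLs that have fewer than N directories.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlsplit


@dataclass
class BotHit:
    """One search-bot request parsed from the access log (hypothetical fields)."""
    url: str
    load_time_ms: float
    size_bytes: int


def directory_prefix(url: str, segments: int) -> Optional[str]:
    """Return the first `segments` directories of the path, e.g. "/catalog/shoes/".

    Returns None if the URL has fewer directories than requested.
    """
    parts = urlsplit(url).path.split("/")
    dirs = [p for p in parts[1:-1] if p]  # drop the leading "" and the page name
    if len(dirs) < segments:
        return None
    return "/" + "/".join(dirs[:segments]) + "/"


def summarize(hits: list[BotHit], segments: int) -> dict[str, dict]:
    """Visits, distinct pages, average load time and average size per directory."""
    groups: dict[str, list[BotHit]] = defaultdict(list)
    for hit in hits:
        prefix = directory_prefix(hit.url, segments)
        if prefix is not None:
            groups[prefix].append(hit)
    return {
        directory: {
            "visits": len(g),
            "pages": len({h.url for h in g}),
            "avg_load_ms": sum(h.load_time_ms for h in g) / len(g),
            "avg_size_bytes": sum(h.size_bytes for h in g) / len(g),
        }
        for directory, g in groups.items()
    }


def compare_periods(current: list[BotHit], previous: list[BotHit],
                    segments: int) -> dict[str, dict]:
    """Add the change in visits versus the previous period to each directory.

    Directories crawled only in the previous period are left out of this sketch.
    """
    report = summarize(current, segments)
    before = summarize(previous, segments)
    for directory, row in report.items():
        row["visits_diff"] = row["visits"] - before.get(directory, {}).get("visits", 0)
    return report


if __name__ == "__main__":
    # Hypothetical Googlebot hits for two consecutive periods.
    current = [
        BotHit("https://example.com/catalog/shoes/a.html", 120, 40_000),
        BotHit("https://example.com/catalog/shoes/a.html", 90, 40_000),
        BotHit("https://example.com/catalog/bags/b.html", 150, 55_000),
        BotHit("https://example.com/about", 50, 10_000),  # no directory: skipped
    ]
    previous = [BotHit("https://example.com/catalog/shoes/a.html", 100, 40_000)]
    print(compare_periods(current, previous, segments=1)["/catalog/"])
    # {'visits': 3, 'pages': 2, 'avg_load_ms': 120.0, 'avg_size_bytes': 45000.0, 'visits_diff': 2}
```

With `segments=2`, the same hits would instead be split into `/catalog/shoes/` (2 visits) and `/catalog/bags/` (1 visit), which is how a deeper directory level narrows down where the crawl budget goes.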

If necessary, configure additional columns in the table, such as status codes.

Ready to try the new report for more in-depth log analysis? Then we invite you to use the JetOctopus log analyzer! It offers many useful tools for crawl budget optimization.