“URLs Query in Logs” report: practical tips

Analyzing URLs with query parameters helps you understand how your crawl budget is being spent. Let’s look at what query parameters are and how to analyze them using the “URLs Query in Logs” report.

What are query parameters?

Query parameters, also known as GET parameters or query string parameters, are components of a URL that client browsers use to pass additional data to web servers in an HTTP request. They let the client request specific information or perform a specific action on a page.

For example, a query parameter can sort the results on a page (from newest to oldest or vice versa) or filter them. If you want to see only red t-shirts on a t-shirt category page, the browser sends a request asking the web server to return that category page with only the items that match your filter. Usually you get the same URL, but with a query parameter appended and with sorted or filtered results.

Query parameters are appended to the URL after a question mark (?). Each parameter is a name-value pair joined by an equals sign (=), and multiple pairs are separated by ampersands (&).

Here’s an example of a URL with a query parameter: https://jetoctopus.com/blog?find=logs-analysis
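To make the structure concrete, here is a minimal sketch that splits a URL into its query parameters with Python's standard library. The helper name `query_params` is chosen for illustration only and is not part of JetOctopus.

```python
from urllib.parse import urlsplit, parse_qs


def query_params(url):
    """Return the query parameters of a URL as a name -> list of values mapping."""
    # urlsplit isolates the part after "?"; parse_qs splits it on "&" and "=".
    query = urlsplit(url).query
    return parse_qs(query, keep_blank_values=True)


print(query_params("https://jetoctopus.com/blog?find=logs-analysis"))
# {'find': ['logs-analysis']}
```

Values come back as lists because the same parameter name may appear more than once in a URL.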

Finding query parameters in search engine logs

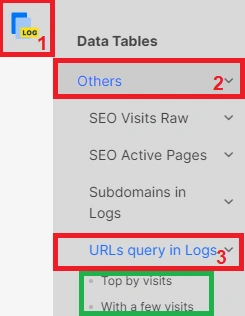

Since search bots can crawl URLs with query parameters, it’s essential to analyze them as they can impact your crawl budget. To access the “URLs Query in Logs” report, you need to integrate logs into JetOctopus. Once you’ve done that, follow these steps.

- Go to the data table and select the “Other” report category.

- Click on the “URLs Query in Logs” report.

- Next, set the desired period for the data and choose the specific bot you want to analyze, such as Googlebot Smartphone, Googlebot Desktop, Bing, or Googlebot Search (which combines data from Googlebot Smartphone and Googlebot Desktop).

- Select the domain for which you want to analyze URLs with query parameters.

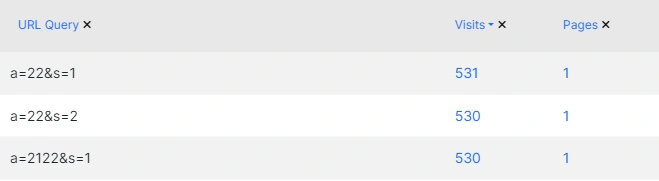

Upon completing the settings, you will see a data table listing the URL queries visited by the search bot.

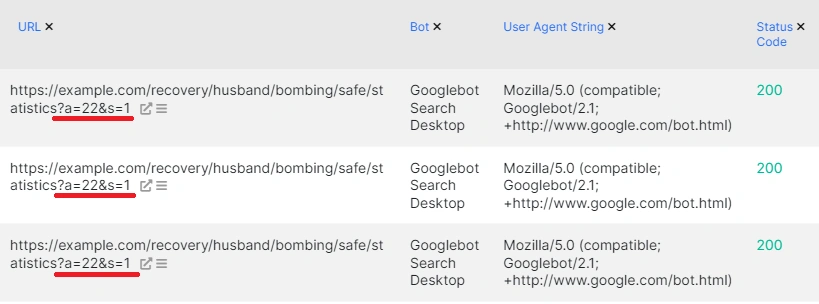

The table displays the number of bot visits to URLs with specific query parameters, along with the number of unique pages visited. Clicking on the numbers in the respective columns will provide a detailed list of URLs with GET parameters visited by the search bot.

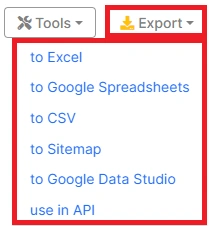
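If you want to reproduce a similar summary outside the interface, for example from exported data, the aggregation can be sketched roughly as follows. This is not how JetOctopus computes the report. The function name, the sample URLs, and the choice to count "unique pages" as distinct full URLs are all assumptions made for illustration.

```python
from collections import defaultdict
from urllib.parse import urlsplit, parse_qsl


def summarize_query_visits(urls):
    """Count bot visits and distinct URLs per query parameter name."""
    visits = defaultdict(int)
    unique_pages = defaultdict(set)
    for url in urls:
        query = urlsplit(url).query
        # Use a set so a parameter repeated within one URL counts as one visit.
        names = {name for name, _ in parse_qsl(query, keep_blank_values=True)}
        for name in names:
            visits[name] += 1
            unique_pages[name].add(url)
    return {
        name: {"visits": visits[name], "unique_pages": len(unique_pages[name])}
        for name in visits
    }


# Hypothetical list of URLs requested by one bot, one entry per log line.
bot_hits = [
    "https://example.com/apples?page=12",
    "https://example.com/apples?page=12",
    "https://example.com/apples?page=13",
    "https://example.com/t-shirts?color=red&sort=newest",
    "https://example.com/apples",
]

for name, stats in sorted(summarize_query_visits(bot_hits).items()):
    print(name, stats)
# color {'visits': 1, 'unique_pages': 1}
# page {'visits': 3, 'unique_pages': 2}
# sort {'visits': 1, 'unique_pages': 1}
```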

You can export the data in formats like Excel, Google Sheets, or CSV files, and it’s also possible to utilize the data via the API.

Why is analyzing query URLs in logs important?

If your robots.txt file does not prohibit the crawling of URLs with query parameters, search engines, including Google, will crawl them and allocate a portion of your crawl budget to these URLs. It is crucial to understand the meaning of these query parameters and whether you want them to appear in search engine results pages (SERPs).

For instance, pagination often uses query parameters, resulting in URLs like https://example.com/apples?page=12. Such URLs should be crawlable, contain appropriate indexing directives (e.g., index, follow), and have self-referencing canonical tags. However, other URLs with query parameters may be duplicates or carry parameters that are irrelevant for search, such as filtering or sorting options, UTM tags, and more. Analyze these URLs and, if they have no search value, make them non-indexable or block them in the robots.txt file to preserve your crawl budget.
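As a starting point for this kind of review, the sketch below groups the parameters of a crawled URL into rough categories. The parameter names in `PAGINATION_PARAMS` and `FILTER_SORT_PARAMS` are hypothetical and will differ from site to site; only the `utm_` prefix is a widely used convention. The labels are a triage aid, not an indexing decision.

```python
from urllib.parse import urlsplit, parse_qsl

# Assumed parameter names; adjust them to the parameters your site really uses.
PAGINATION_PARAMS = {"page"}
FILTER_SORT_PARAMS = {"sort", "order", "color", "filter"}
TRACKING_PREFIXES = ("utm_",)


def classify_param(name):
    """Return a rough category for a single query parameter name."""
    name = name.lower()
    if name in PAGINATION_PARAMS:
        return "pagination"
    if name.startswith(TRACKING_PREFIXES):
        return "tracking"
    if name in FILTER_SORT_PARAMS:
        return "filter_or_sort"
    return "other"


def classify_url(url):
    """Return the sorted list of parameter categories found in a URL."""
    query = urlsplit(url).query
    names = [name for name, _ in parse_qsl(query, keep_blank_values=True)]
    return sorted({classify_param(name) for name in names})


print(classify_url("https://example.com/apples?page=12"))
# ['pagination']
print(classify_url("https://example.com/t-shirts?color=red&utm_source=newsletter"))
# ['filter_or_sort', 'tracking']
```

URLs that land in "tracking" or "filter_or_sort" are the first candidates to check for duplication, while anything labeled "other" needs a manual look.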

Pay attention to the overall number of URLs with query parameters and compare it with the number of crawled indexable URLs without query parameters. If the number of URLs crawled with query parameters is disproportionately high, it may indicate that your internal links point to URLs with query parameters. In such cases, consider replacing those links with links to the corresponding URLs without query parameters.
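The comparison itself is simple arithmetic. A minimal sketch, assuming you have a list of crawled URLs (for instance from an export), could look like this. It only checks whether a query string is present and does not know whether a URL is indexable, so that filtering would have to happen beforehand. The function name and sample data are illustrative.

```python
from urllib.parse import urlsplit


def query_url_share(crawled_urls):
    """Return the fraction of distinct crawled URLs that carry a query string."""
    unique_urls = set(crawled_urls)
    if not unique_urls:
        return 0.0
    with_query = sum(1 for url in unique_urls if urlsplit(url).query)
    return with_query / len(unique_urls)


# Hypothetical sample: three distinct URLs with parameters, one without.
crawled = [
    "https://example.com/apples?page=12",
    "https://example.com/apples?page=13",
    "https://example.com/t-shirts?color=red&sort=newest",
    "https://example.com/apples",
]

print(f"{query_url_share(crawled):.0%} of crawled URLs have query parameters")
# 75% of crawled URLs have query parameters
```

What counts as "disproportionately high" depends on the site, so treat the resulting number as a prompt for investigation, not a pass/fail threshold.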

By thoroughly analyzing and optimizing URLs with query parameters, you can make efficient use of your crawl budget while improving the visibility and relevance of your website’s content.