How to find and check 404 Errors?

In a JetOctopus crawl, you can find pages that return a 404 response code. This code means that the page was not found and its content is not available to your users.

To find all 404 pages on your website, use the JetOctopus crawler.

You can also use the free 7-day trial after a demo to see how quickly our crawler finds 404s on your website. You don’t need to install any software that would use up your computer’s memory: JetOctopus crawls using only its own servers.

How to start crawling your site?

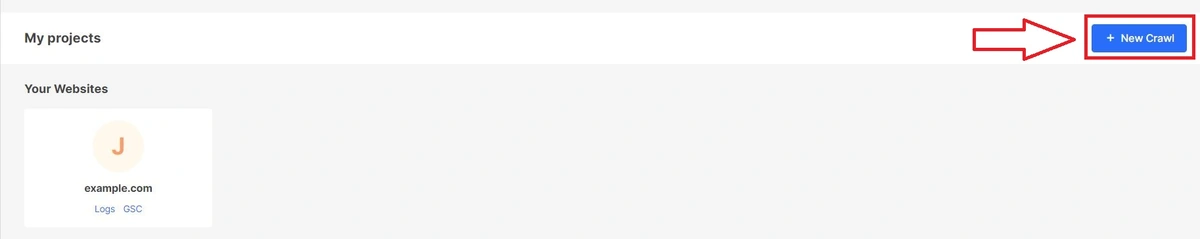

Log in to your account here. Click “New Crawl” in the upper right corner. After configuring the crawl, click “Start crawl”.

If you have already run a crawl and it has completed, select your project and then choose the required crawl from the crawl list.

More information: How to start a new crawl.

How to find all broken 404 links?

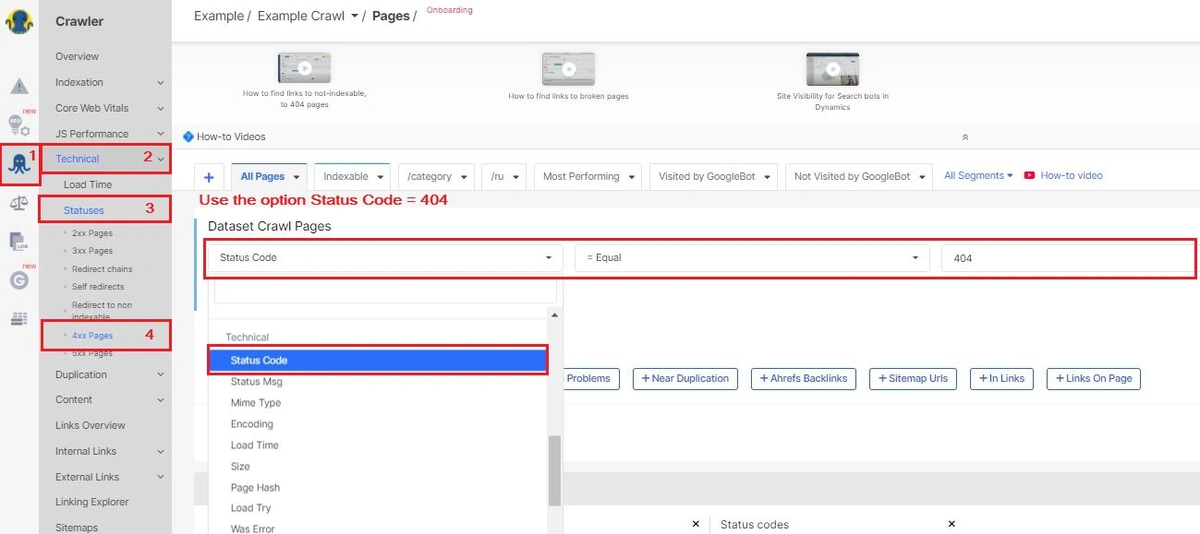

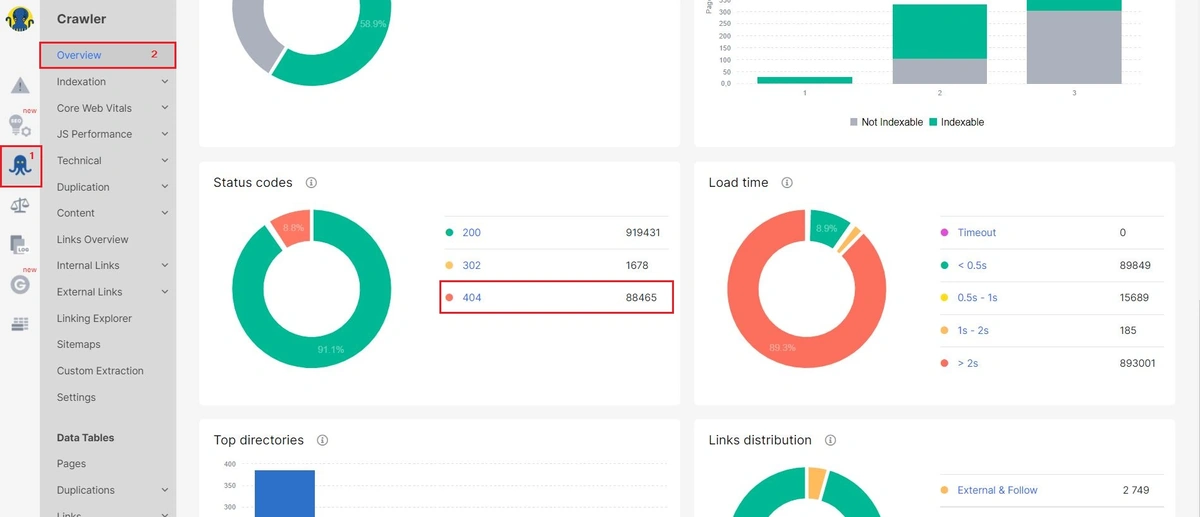

For our customers’ convenience, we have grouped all 4xx response codes into a separate report in the sidebar. To find all 404s, select “Crawler” in the left sidebar. Then go to “Technical” – “Statuses” – “4xx Pages”. Next, filter the dataset: “Status code” – “= Equal” – “404”. Click “Apply”.

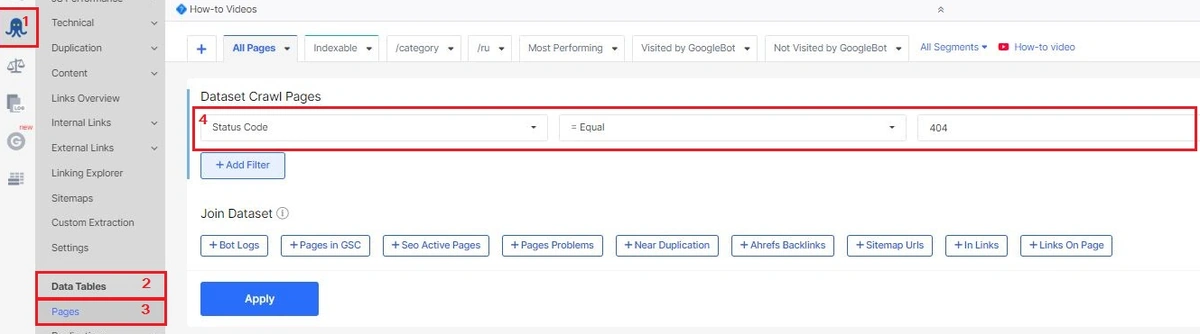

You can also find all 404 pages of your website in the Data Tables. Go to the “Pages” report and filter the dataset to 404 pages: choose “Status code” – “= Equal” – “404”.

Add more filters if necessary, for example, to select a sample of URLs with a regular expression (regex).
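The same idea can be applied to exported data outside the interface. This is a minimal sketch, assuming rows with hypothetical “URL” and “Status code” keys and example.com URLs; `sample_404s` is a hypothetical helper. It uses `re.search`, so the pattern may match anywhere in the URL, which may differ from how the JetOctopus regex filter matches.

```python
import re

# Hypothetical rows, as they might look after exporting the "Pages" report.
# The keys "URL" and "Status code" are assumptions for this sketch.
pages = [
    {"URL": "https://example.com/product/blue-shirt", "Status code": 404},
    {"URL": "https://example.com/blog/old-post", "Status code": 404},
    {"URL": "https://example.com/product/red-shirt", "Status code": 200},
    {"URL": "https://example.com/product/green-hat", "Status code": 404},
]


def sample_404s(rows, pattern):
    """Return URLs with status code 404 whose URL matches the regex pattern."""
    regex = re.compile(pattern)
    return [
        row["URL"]
        for row in rows
        if row["Status code"] == 404 and regex.search(row["URL"])
    ]


print(sample_404s(pages, r"/product/"))
# ['https://example.com/product/blue-shirt', 'https://example.com/product/green-hat']
```

Combining the status filter with the regex in one pass mirrors stacking two filters on the dataset: a row is kept only if both conditions hold.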

To learn how to find pages with 5xx, 4xx and 3xx status codes, read our article here. Below, you can also find out how to identify pages whose code contains links to 404 URLs.

How to fix 404 (Page Not Found Error)?

We recommend removing or fixing all broken 404 URLs on your website. Users will not like landing on broken URLs where they cannot find the content they need or complete a purchase.

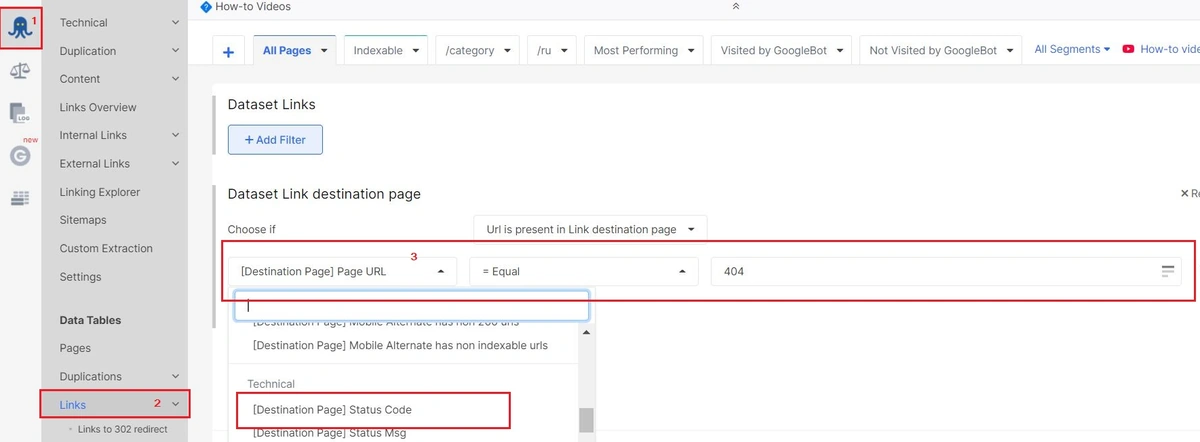

To find the list of internal URLs that return 404, go to “Data Tables” and open the “Links” report. Use the filter “[Destination Page] Status Code” – “= Equal” – “404”.

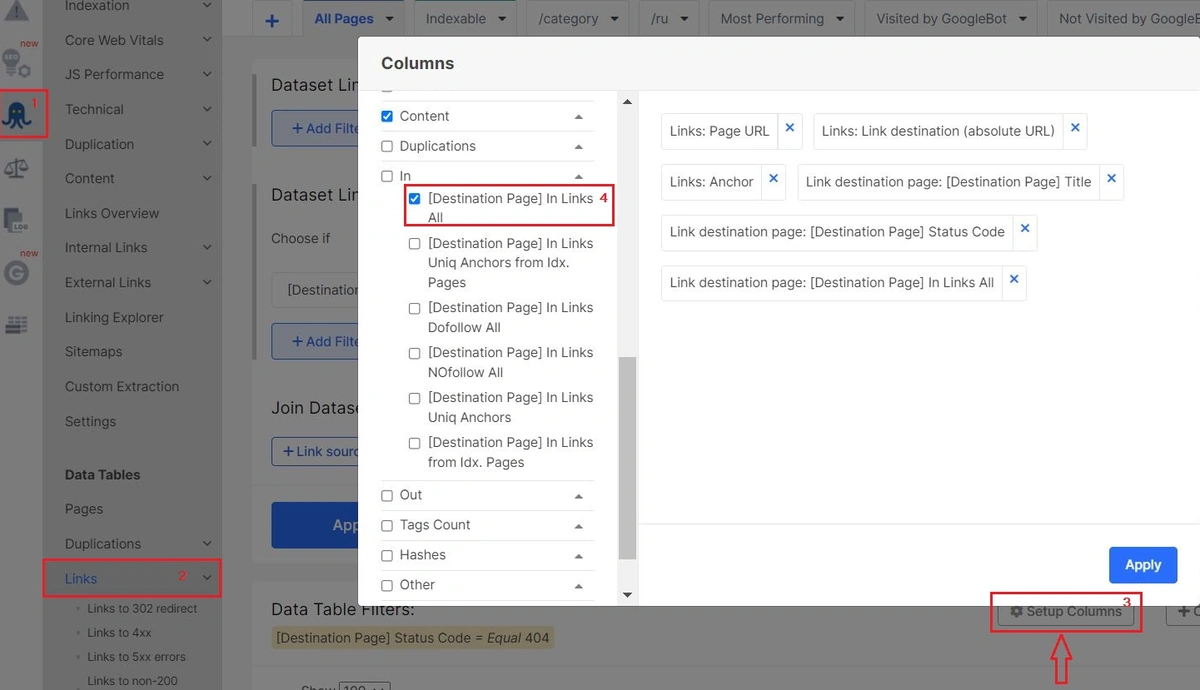

Add the “In Links All” column, which shows the number of pages where the 404 links were found.

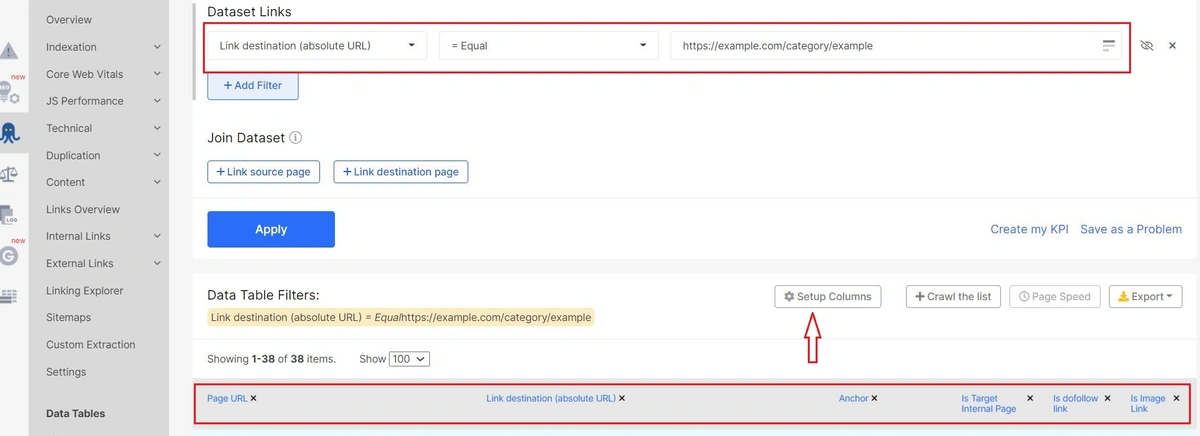

Click the number in this column. A data table with the URLs that contain 404 links in their code opens in a new browser tab. This report lists all the internal links where the broken URLs were found. To simplify the work, add the columns you need, for example, to find out whether a 404 URL is an image.

“Page URL” is the page that contains a 404 link in its code. “Link destination (absolute URL)” is the broken URL itself, and “Anchor” is the anchor text of the link to the broken URL, not of the page URL.

Remove or fix 404 links in the page code. A large number of 404s has a negative effect on user behavior, and search engines do not want to waste their resources crawling broken URLs.

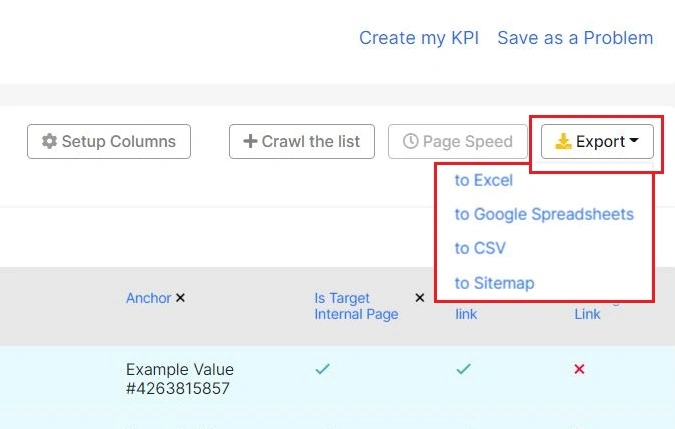

When the number of 404s is very large, it is more convenient to work with them in spreadsheets. Export the data in the format you prefer: CSV, Excel or Google Sheets.
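Once the links are exported to CSV, a short script can group them by broken URL and flag images. This is a minimal sketch, assuming the CSV headers match the column names shown in the report (“Page URL”, “Link destination (absolute URL)”, “Anchor”); the sample data, the image extension list and the helper names are hypothetical. It counts distinct linking pages, which may not match how “In Links All” counts links.

```python
import csv
import io
from collections import defaultdict
from urllib.parse import urlparse

# Hypothetical export of the "Links" report filtered to 404 destinations.
# Assumption: the CSV headers match the column names shown in the UI.
EXPORT = """Page URL,Link destination (absolute URL),Anchor
https://example.com/,https://example.com/old-page,Old page
https://example.com/blog,https://example.com/old-page,Read more
https://example.com/blog,https://example.com/img/banner.PNG,
"""

# File extensions treated as images (an assumption; extend as needed).
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png", ".gif", ".webp", ".svg")


def load_rows(text):
    """Parse CSV text into a list of dicts keyed by header name."""
    return list(csv.DictReader(io.StringIO(text)))


def group_broken_links(rows):
    """Map each broken destination URL to the sorted pages that link to it."""
    sources = defaultdict(set)
    for row in rows:
        sources[row["Link destination (absolute URL)"]].add(row["Page URL"])
    return {dest: sorted(pages) for dest, pages in sources.items()}


def is_image(url):
    """Guess whether a URL is an image from the extension of its path."""
    return urlparse(url).path.lower().endswith(IMAGE_EXTENSIONS)


grouped = group_broken_links(load_rows(EXPORT))
for dest, pages in grouped.items():
    kind = "image" if is_image(dest) else "page"
    print(f"{dest} ({kind}): linked from {len(pages)} page(s)")
```

For a real export, read the file with `open(path, newline="", encoding="utf-8")` and pass the file object to `csv.DictReader`; if the first header arrives with a byte-order mark, `encoding="utf-8-sig"` strips it.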

How to recheck 404 Errors on your website?

After you’ve fixed or removed all 404 URLs, check the result. To recheck 404s, use the list of all internal links where 404s were found. You can find this list in Data Tables – Links – Links to 4xx. Set the status code of the destination page: select “Status code” – “= Equal” – “404” – “Apply”.

Then click “Crawl the list”. JetOctopus will not crawl your whole website; it will only recheck the inlinks that pointed to broken URLs. The results of this crawl are saved in a separate dataset, and you can find the crawl in the general list of crawls on the project’s page.
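If you also want a quick spot check of the broken URLs themselves, a script can request each one and report which still return 404. This is a minimal sketch, assuming Python 3.9+ and a plain list of URLs (for example, the “Link destination (absolute URL)” column of an export); `fetch_status` and `still_broken` are hypothetical helpers. It checks the destination URLs, not the pages that link to them, so it complements “Crawl the list” rather than reproducing it.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url, using a HEAD request."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses are raised as HTTPError; keep their code.
        return error.code


def still_broken(urls: Iterable[str], fetch: Callable[[str], int] = fetch_status) -> list[str]:
    """Return the URLs that still respond with 404."""
    return [url for url in urls if fetch(url) == 404]


# Usage with a fake fetcher, so the example runs without network access.
fake_statuses = {"https://example.com/old-page": 404, "https://example.com/fixed": 200}
print(still_broken(fake_statuses, fetch=fake_statuses.get))
```

`urlopen` follows redirects, so a URL that now redirects to a working page reports the final status. Some servers reject HEAD (for example with 405); switching to a GET request is an option there. Connection failures raise `urllib.error.URLError`, which this sketch does not catch.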

JetOctopus also provides a useful visualization of all status codes. Go to “Technical” – “Statuses” in the Crawler data. The charts there show all the status codes that JetOctopus found on your website. If there are no 404s in this report, congratulations, you’re doing great!
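The chart groups codes by class. The same tally can be reproduced from a list of exported status codes. This is a minimal sketch with hypothetical data and helper names, not the logic behind the JetOctopus charts.

```python
from collections import Counter


def status_class(code):
    """Return the class label ("2xx", "3xx", ...) of an HTTP status code."""
    if not 100 <= code <= 599:
        raise ValueError(f"not an HTTP status code: {code}")
    return f"{code // 100}xx"


def summarize(codes):
    """Count status codes per class, similar to a per-class chart."""
    return Counter(status_class(code) for code in codes)


# Hypothetical status codes collected from a crawl export.
codes = [200, 200, 301, 404, 404, 410, 500]
print(sorted(summarize(codes).items()))
# [('2xx', 2), ('3xx', 1), ('4xx', 3), ('5xx', 1)]
```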

Why do you need to focus on 404 errors?

4xx codes indicate a client-side error. During a crawl, 4xx status codes usually point to deleted pages, pages that were not found, or pages that require authorization. There are also proxy and timeout errors.
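For reference, Python’s standard `http.HTTPStatus` enum carries the official reason phrase of each code. This short sketch lists the 4xx codes that correspond to the cases above; the selection of codes and the `is_client_error` helper are our own, not a list published by JetOctopus.

```python
from http import HTTPStatus

# 401/403: authorization required, 404: not found, 407: proxy,
# 408: timeout, 410: deleted (Gone). Our own selection for illustration.
CLIENT_ERRORS = [401, 403, 404, 407, 408, 410]


def is_client_error(code):
    """True for 4xx status codes (errors attributed to the client side)."""
    return 400 <= code <= 499


for code in CLIENT_ERRORS:
    print(code, HTTPStatus(code).phrase)
```

Many sites return 404 for deleted pages as well; 410 Gone states explicitly that the removal is intentional.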

During a crawl, JetOctopus collects all links from your site. Therefore, the 4xx pages our crawler finds come from internal links (unlike users, JetOctopus cannot type invalid URLs into the browser bar :)). Search engines will also crawl 4xx pages linked internally, but is it worth wasting crawl budget on deleted pages? If the number of 4xx response codes is too large, search robots will reduce how often they crawl your site and how many pages they crawl.

If pages with useful content wrongly return 4xx response codes, search engines will deindex them after a while. No pages in the SERP means no organic traffic. Be careful with paid traffic too: check your paid ads for landing pages that return 4xx, because users will not see the content of those pages.

Also, 4xx pages negatively affect the user experience.

4xx response codes may also be caused by errors in how URLs are generated. Pay close attention to 4xx pages to detect problems in your code early.

Watch our video: How to find links to broken pages.