How to find non-indexable pages in XML sitemaps

XML sitemaps are a great way to show Google and other search engines which pages matter to you. With a sitemap, you can point search engines to pages you’ve recently added to your website or to pages whose content has been updated. We believe sitemaps can significantly improve your website’s visibility on Google. However, a faulty sitemap, particularly one that links to non-indexable pages, can have negative effects.

Why do you need to identify non-indexable links in sitemaps?

Non-indexable pages in your sitemaps can hurt your search rankings. Search engines spend crawl budget on these pages, which leaves fewer resources for discovering new and relevant content. In other words, Google and other search engines visit pages that can never appear in the SERP instead of crawling the pages that can be shown in search results.

How do you detect non-indexable pages in XML sitemaps?

To find every non-indexable page in your sitemaps, we recommend using our SEO crawler, JetOctopus. Here’s how.

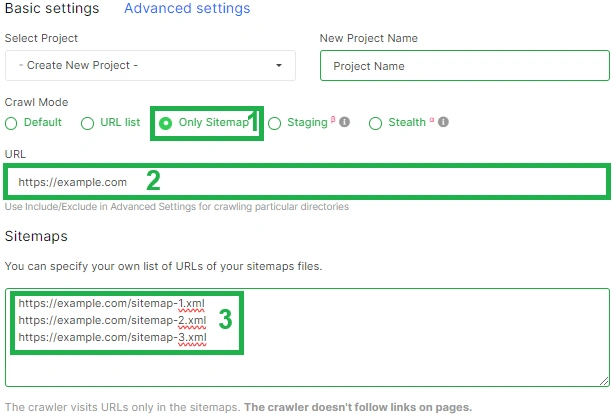

Launch a new crawl by clicking the “New crawl” button and select the “Sitemaps only” mode.

Next, enter the URL of your home page in the “URL” field. Don’t confuse the two fields: the “URL” field takes the home page of the site whose sitemaps you want to analyze, not the sitemap links. Add the links to your sitemaps in the “Sitemaps” field below it.
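The “Sitemaps only” mode analyzes the URLs listed in the sitemaps you supply. Purely as an illustration (not how JetOctopus is implemented), here is a minimal Python sketch that reads those URLs from a sitemap file with the standard library. It assumes the sitemaps.org 0.9 namespace, and `extract_sitemap_urls` is a hypothetical helper. Because a sitemap index lists child sitemaps rather than pages, the sketch returns the two kinds of URLs separately.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol (version 0.9)
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def extract_sitemap_urls(xml_text):
    """Hypothetical helper: return (page_urls, child_sitemap_urls).

    A <urlset> root lists pages; a <sitemapindex> root lists child sitemaps.
    """
    root = ET.fromstring(xml_text)
    # Collect every namespaced <loc> value, trimming surrounding whitespace
    locs = [loc.text.strip() for loc in root.iter(f"{{{SITEMAP_NS}}}loc") if loc.text]
    if root.tag == f"{{{SITEMAP_NS}}}sitemapindex":
        return [], locs
    return locs, []


sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-page</loc></url>
</urlset>"""

pages, children = extract_sitemap_urls(sample)
print(pages)  # ['https://example.com/', 'https://example.com/old-page']
```

For a sitemap index, you would fetch each child sitemap and call the helper again on its content.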

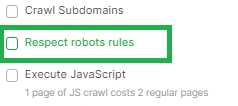

Adjust any additional crawl settings. Because the goal is to find non-indexable pages, clear the “Respect robot rules” checkbox so that pages excluded by robots rules are still crawled and reported.
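A crawler that obeys robots.txt never fetches disallowed URLs, so it cannot check their status codes or robots directives. Assuming the “Respect robot rules” setting covers robots.txt, the Python sketch below uses the standard `urllib.robotparser` module to show which sitemap URLs such a crawler would skip. The robots.txt content and URLs are made-up examples, and `blocked_by_robots` is a hypothetical helper.

```python
from urllib.robotparser import RobotFileParser


def blocked_by_robots(robots_txt, urls, user_agent="*"):
    """Hypothetical helper: return the URLs that robots.txt disallows."""
    parser = RobotFileParser()
    # parse() takes the robots.txt content as a list of lines
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


robots_txt = """User-agent: *
Disallow: /private/
"""
sitemap_urls = ["https://example.com/", "https://example.com/private/report"]
print(blocked_by_robots(robots_txt, sitemap_urls))
# ['https://example.com/private/report']
```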

Start the crawl.

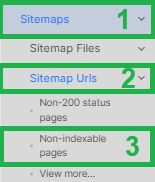

When the crawl finishes, open the crawl results and go to the “Sitemap URLs” data table. There you will find a separate “Non-indexable pages” data table. Click it to see which non-indexable pages your sitemaps contain.

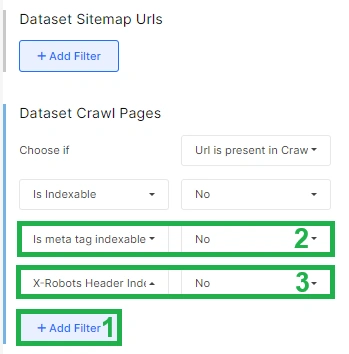

Note that this data table applies the filter “Is indexable” – “No”, so it lists every page that search engines cannot crawl or index: for example, pages that return a 404 status code or are canonicalized to another URL.
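To make these two criteria concrete, here is a simplified Python sketch that flags a page as non-indexable from its status code and canonical URL. It is not JetOctopus’s actual rule set: it assumes that any status other than 200 makes a page non-indexable and compares canonical URLs as exact strings. `non_indexable_reason` is a hypothetical helper.

```python
from typing import Optional
from urllib.parse import urljoin


def non_indexable_reason(url: str, status_code: int, canonical: Optional[str]) -> Optional[str]:
    """Hypothetical helper: explain why a page is non-indexable, or return None.

    Covers only two reasons: a non-200 status code and a canonical tag
    that points to a different URL.
    """
    if status_code != 200:
        return f"status code {status_code}"
    if canonical:
        # Resolve a relative canonical URL against the page URL
        target = urljoin(url, canonical.strip())
        # Exact string comparison; a real crawler may normalize URLs further
        if target != url:
            return f"canonicalized to {target}"
    return None


print(non_indexable_reason("https://example.com/a", 404, None))            # status code 404
print(non_indexable_reason("https://example.com/a?sort=price", 200, "/a"))  # canonicalized to https://example.com/a
print(non_indexable_reason("https://example.com/a", 200, "/a"))           # None
```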

To find pages that are non-indexable specifically because of a meta robots tag or an X-Robots-Tag HTTP header, apply the filter “Is meta robots indexable” – “No” or “Is X-robots tag indexable” – “No”.

The result is a detailed list of pages whose meta robots tag or X-Robots-Tag header contains a noindex directive.
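The two noindex sources can be illustrated with a short Python sketch that checks a page’s HTML for `<meta name="robots">` and its response headers for `X-Robots-Tag`. This is a simplified illustration, not JetOctopus’s logic: `noindex_sources` and `contains_noindex` are hypothetical helpers, crawler-specific meta tags such as `googlebot` are ignored, and a user-agent prefix in the header (for example `googlebot: noindex`) is stripped without checking which crawler it targets. The `none` directive is treated as noindex because it is equivalent to `noindex, nofollow`.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects the content of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values may be None
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            self.directives.append(attr_map.get("content", ""))


def contains_noindex(values):
    """True if any value contains noindex (or none, i.e. noindex, nofollow)."""
    for value in values:
        for token in value.lower().split(","):
            # Drop an optional user-agent prefix such as "googlebot: noindex"
            directive = token.split(":")[-1].strip()
            if directive in ("noindex", "none"):
                return True
    return False


def noindex_sources(html, headers):
    """Hypothetical helper: list where a noindex directive was found."""
    parser = MetaRobotsParser()
    parser.feed(html)
    parser.close()
    sources = []
    if contains_noindex(parser.directives):
        sources.append("meta robots")
    # HTTP header names are case-insensitive
    header_values = [v for k, v in headers.items() if k.lower() == "x-robots-tag"]
    if contains_noindex(header_values):
        sources.append("X-Robots-Tag")
    return sources


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(noindex_sources(page, {"X-Robots-Tag": "googlebot: noindex"}))
# ['meta robots', 'X-Robots-Tag']
```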

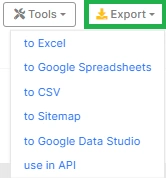

To export the data table, click the “Export” button and choose a format: Excel, CSV, or a direct export to Google Sheets.

You can also select only the indexable URLs from the current sitemap with the filter “Is indexable” – “Yes” and export them as a sitemap. Then upload the updated sitemap to the root of your website in place of the old sitemap that contains non-indexable links.
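If you prefer to build the cleaned-up sitemap yourself, a minimal Python sketch could look like the one below. It assumes you already have the list of URLs that passed the “Is indexable” – “Yes” filter (for example, from an export), and `build_sitemap` is a hypothetical helper. ElementTree escapes characters such as `&` in URLs, which the sitemap protocol requires, and the sketch refuses lists longer than the protocol’s limit of 50,000 URLs per sitemap.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # limit set by the sitemaps.org protocol


def build_sitemap(urls):
    """Hypothetical helper: serialize indexable URLs as a <urlset> sitemap."""
    if len(urls) > MAX_URLS_PER_SITEMAP:
        raise ValueError("split the URLs across several sitemaps and a sitemap index")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes "&" and other special characters in text
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


indexable_urls = ["https://example.com/", "https://example.com/catalog?page=2&sort=new"]
print(build_sitemap(indexable_urls))
```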

Managing non-indexable links in XML sitemaps

Non-indexable URLs should be removed from your sitemaps. You can either work with your developers to remove them or generate a new sitemap with JetOctopus. Then submit the updated sitemap to Google so that indexing works more effectively.

In short, XML sitemaps play an important role in technical SEO. Removing non-indexable links from them can improve your website’s overall search visibility and make crawling and indexing more efficient.