How to measure the real crawl budget of Googlebot if you have a JavaScript website – Insights from Serge Bezborodov

Understanding how Googlebot interacts with JavaScript-based websites matters for web indexing and search engine optimization (SEO). Because Googlebot executes JavaScript when it renders pages, the requests it makes no longer map neatly onto page fetches, and that makes crawl budget harder to track and analyze correctly. In a recent Twitter thread, Serge Bezborodov explains how to measure Googlebot's real crawl budget on JavaScript-based sites.

Googlebot's ability to execute JavaScript cuts both ways. On one hand, it lets Google render pages more accurately, which improves the indexing of dynamic content. On the other hand, it means Googlebot can also run a site's own tracking code. If the endpoints that code calls are not blocked in robots.txt, the resulting requests come from Googlebot and are counted alongside real page fetches, which can inflate the metrics used to measure crawl budget.
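One way to check whether a site's tracking endpoints are exposed like this is to test their URLs against robots.txt. The sketch below uses Python's standard `urllib.robotparser`; the robots.txt content and the `/collect` and `/api/track` URLs are hypothetical placeholders, not endpoints from the site in the example. It only reports which URLs are not disallowed for Googlebot; whether to block them is a separate decision.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: /api/ is blocked, but the tracking endpoint
# /collect is not mentioned, so it remains crawlable.
ROBOTS_TXT = """\
User-agent: *
Disallow: /api/
"""

# Hypothetical URLs that a page's tracking JavaScript might request.
TRACKING_URLS = [
    "https://www.example.com/collect?event=pageview",
    "https://www.example.com/api/track?event=scroll",
]


def unblocked_for_googlebot(robots_txt: str, urls: list[str]) -> list[str]:
    """Return the URLs that robots.txt does NOT disallow for Googlebot."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch("Googlebot", url)]


if __name__ == "__main__":
    for url in unblocked_for_googlebot(ROBOTS_TXT, TRACKING_URLS):
        print("Not blocked:", url)
```

With these placeholder rules, only the `/collect` URL is reported as not blocked. Note that `urllib.robotparser` applies simple prefix matching in file order and does not implement Google's `*` and `$` wildcard handling, so treat its answers as an approximation of how Googlebot reads the file.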

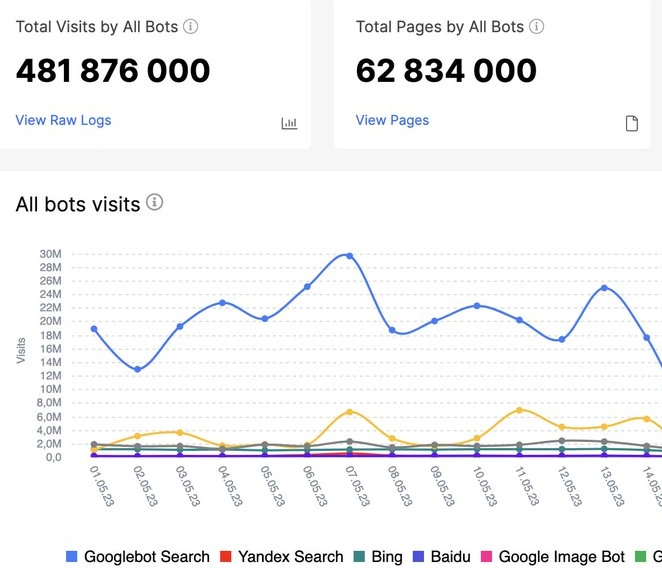

The example provided by Serge Bezborodov highlights this concern. Initially, a website seemed to possess a substantial crawl budget, with around 500 million visits attributed to Googlebot every month.

However, a closer look at the data showed that 259 million of those hits were requests fired by the site's tracking code. In other words, more than half of the apparent crawl budget went to requests that had nothing to do with fetching pages.
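Using the figures from the example (the 500 million total is approximate, so these results are too), the share of tracking hits and the remainder work out as:

$$
\frac{259\,\text{M}}{500\,\text{M}} \approx 0.52,
\qquad
500\,\text{M} - 259\,\text{M} = 241\,\text{M}
$$

So roughly 241 million monthly hits are left once the tracking requests are set aside, and even that figure may still include other requests that are not page fetches.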

At its core, crawl budget is about how many requests Googlebot needs to fetch a given number of pages on a website. JavaScript-based pages complicate this, because rendering them can trigger additional requests such as AJAX calls. Even so, these supplementary requests should not eat into the crawl budget reserved for Googlebot's genuine page fetches.
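To make the distinction concrete, here is a minimal sketch that sorts a requested URL into one of three buckets: a page fetch, a supplementary request (static resources and AJAX/JSON calls), or a tracking request. The path roots (`/collect`, `/track`, `/analytics`, `/api`) and the extension list are hypothetical and would need to match the endpoints a given site actually uses.

```python
from urllib.parse import urlsplit

# Hypothetical path roots for the site's own tracking endpoints.
TRACKING_ROOTS = ("/collect", "/track", "/analytics")

# Hypothetical path roots for JSON/AJAX endpoints called by page JavaScript.
AJAX_ROOTS = ("/api",)

# Extensions treated as static resources fetched while rendering, not pages.
RESOURCE_EXTENSIONS = (".js", ".css", ".json", ".png", ".jpg", ".svg", ".woff2")


def _under(path: str, roots: tuple[str, ...]) -> bool:
    """True if path equals a root or lies below it (whole segments only)."""
    return any(path == root or path.startswith(root + "/") for root in roots)


def classify_request(url: str) -> str:
    """Label a requested URL as 'tracking', 'supplementary' or 'page'."""
    path = urlsplit(url).path.lower()
    if _under(path, TRACKING_ROOTS):
        return "tracking"
    if _under(path, AJAX_ROOTS) or path.endswith(RESOURCE_EXTENSIONS):
        return "supplementary"
    return "page"
```

With these placeholders, `/collect?v=2` is labeled `tracking`, `/api/products?page=2` and `/static/app.js` are `supplementary`, and `/collections/summer` is a `page`. Matching whole path segments rather than raw string prefixes keeps a page like `/collections/summer` from being mistaken for the `/collect` tracking endpoint.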

The key takeaway from Serge Bezborodov's analysis is the importance of examining Googlebot's requests at the URL level. To assess crawl budget accurately, you have to separate Googlebot's genuine page fetch requests from requests triggered by tracking code. Looking only at the total number of visits can substantially overestimate the crawl budget.
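A URL-level breakdown of this kind can be sketched in Python, assuming access logs in the Apache/Nginx "combined" format. The sketch keeps requests whose user-agent contains `Googlebot` (it trusts that string and does not verify it), counts hits per URL path, and splits the total into tracking and non-tracking hits. The tracking roots are hypothetical placeholders.

```python
import re
from collections import Counter
from collections.abc import Iterable

# Request line, status, size, referer and user-agent of a "combined" log line.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical tracking endpoints; replace with the ones your site calls.
TRACKING_ROOTS = ("/collect", "/track")


def is_tracking(path: str) -> bool:
    """True if the path (query string ignored) is a tracking endpoint or below one."""
    base = path.split("?", 1)[0]
    return any(base == root or base.startswith(root + "/") for root in TRACKING_ROOTS)


def googlebot_breakdown(lines: Iterable[str]) -> tuple[Counter, int, int]:
    """Count Googlebot hits per path and split them into tracking vs. other hits."""
    per_path: Counter = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        # Skip unparseable lines and anything not claiming to be Googlebot.
        if match is None or "Googlebot" not in match.group("agent"):
            continue
        per_path[match.group("path").split("?", 1)[0]] += 1
    tracking = sum(n for path, n in per_path.items() if is_tracking(path))
    total = sum(per_path.values())
    return per_path, tracking, total - tracking
```

Passing an open log file (for example `with open("access.log") as f: googlebot_breakdown(f)`, where `access.log` is a placeholder name) returns the per-path counter plus the tracking and non-tracking totals. Calling `per_path.most_common(20)` lists the paths that receive the most Googlebot hits, which is a quick way to spot a tracking endpoint that accounts for a large share of them, as in the example above.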

In conclusion, measuring Googlebot's genuine crawl budget on a JavaScript website requires a deep dive into the data and close attention to detail. By accounting for JavaScript execution and the tracking requests it can generate, webmasters and SEO professionals get a more accurate picture of how Googlebot actually interacts with their sites. A crawl budget that looks substantial at first may turn out to be considerably smaller, which is why precision matters in crawl budget analysis.