How to set up GSC Inspection Alerts: step-by-step guide

One invaluable tool for proactive website management is JetOctopus’ GSC Inspection Alerts. In this article, we’ll explore the significance of these alerts, delve into the process of setting them up, and illustrate their impact on maintaining SEO health.

Understanding GSC inspection alerts

GSC Inspection Alerts, an essential feature of JetOctopus, give website owners and SEOs real-time notifications about critical issues that might affect their site’s indexing. JetOctopus has four types of alerts: log, Core Web Vitals, crawl, and GSC. Unlike alerts that depend on crawl data, GSC alerts do not require additional crawls: JetOctopus pulls the data through the Google Search Console API, so the GSC data is continuously updated. This live feed of information enables users to identify problems promptly, potentially mitigating negative impacts on search engine visibility.

Set up GSC Inspection Alerts

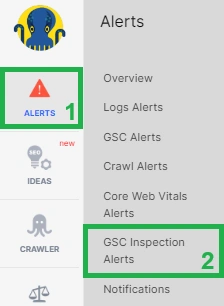

To initiate the setup process, navigate to the “Alerts” section within your desired project and website in JetOctopus. Within this section, locate and click on “GSC Inspection Alerts.”

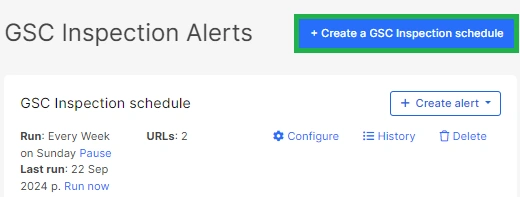

If your team hasn’t previously configured alerts in this section, the initial step involves establishing a page inspection schedule within Google Search Console (GSC). Click the relevant button to proceed.

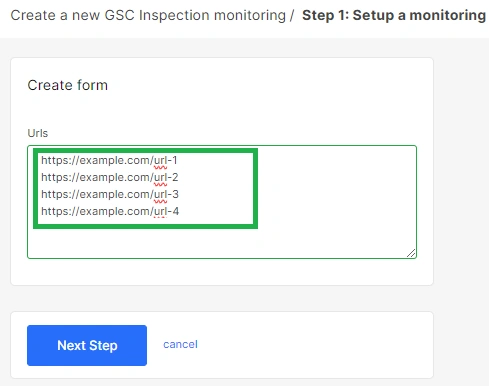

Following this, input the list of pages you wish to monitor. Focus on crucial pages whose status changes could significantly impact your website’s traffic from Google’s search results.

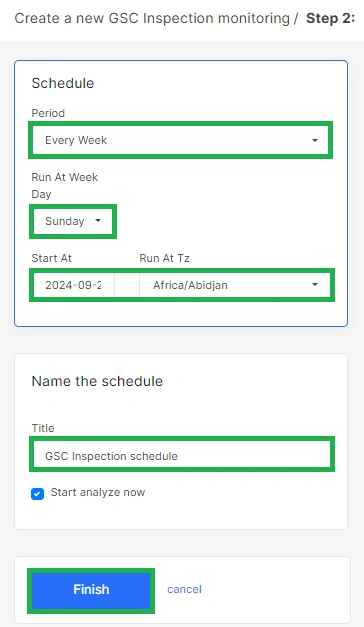

Tailor the inspection schedule to your needs, selecting from options like several times a day, weekly, or monthly. This flexibility allows you to align monitoring with your website’s update frequency and content changes.

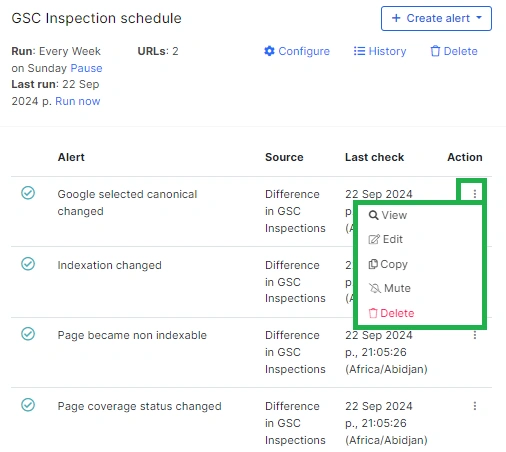

By default, JetOctopus includes alerts for changes to canonical URLs, indexing status, and coverage status. You have the flexibility to deactivate any of these built-in alerts by utilizing the “Mute” button.

Moreover, you can create your own alerts tailored to your specific requirements. By selecting “+Create alert,” you can define conditions based on data tables. For example, “Difference in GSC Inspections” compares the previous and current Google status, while “GSC Inspect” monitors the real-time status.

Examples of GSC Inspection Alerts

GSC Inspection Alerts encompass the entire scope of insights available in the “URL Inspection” section of Google Search Console. This includes crucial information such as:

- last page crawl date;

- crawling allowance (blocked by robots.txt or not);

- fetch status (successful or not);

- indexing allowance;

- canonical state, and so on.

You can also find information about coverage status, structured data and mobile usability.

And, of course, you can get alerts when any of these values change!
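To make the list above more concrete, here is a minimal sketch of how one monitored URL could be represented in code. The dictionary keys are assumed to mirror the `indexStatusResult` object returned by Google's URL Inspection API; the `InspectionRow` class and `row_from_index_status` helper are hypothetical names used only for illustration and say nothing about how JetOctopus stores this data internally.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InspectionRow:
    """One monitored URL with the fields listed above (hypothetical shape)."""
    url: str
    last_crawl_time: Optional[str]   # last page crawl date, as a timestamp string
    robots_txt_state: str            # crawling allowance
    page_fetch_state: str            # fetch status
    indexing_state: str              # indexing allowance
    coverage_state: str              # coverage status
    user_canonical: Optional[str]    # canonical declared on the page
    google_canonical: Optional[str]  # canonical selected by Google


def row_from_index_status(url: str, index_status: dict) -> InspectionRow:
    """Build a row from an indexStatusResult-like dict; missing keys get a default."""
    return InspectionRow(
        url=url,
        last_crawl_time=index_status.get("lastCrawlTime"),
        robots_txt_state=index_status.get("robotsTxtState", "UNSPECIFIED"),
        page_fetch_state=index_status.get("pageFetchState", "UNSPECIFIED"),
        indexing_state=index_status.get("indexingState", "UNSPECIFIED"),
        coverage_state=index_status.get("coverageState", "UNSPECIFIED"),
        user_canonical=index_status.get("userCanonical"),
        google_canonical=index_status.get("googleCanonical"),
    )


# Illustrative data only, not a real API response.
sample = {
    "lastCrawlTime": "2024-01-10T08:00:00Z",
    "robotsTxtState": "ALLOWED",
    "pageFetchState": "SUCCESSFUL",
    "indexingState": "INDEXING_ALLOWED",
    "coverageState": "Submitted and indexed",
    "userCanonical": "https://example.com/product/1",
    "googleCanonical": "https://example.com/product/1",
}
row = row_from_index_status("https://example.com/product/1", sample)
canonicals_match = row.user_canonical == row.google_canonical
```

Keeping the declared and Google-selected canonicals side by side makes a canonical mismatch a simple equality check.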

Let’s consider specific scenarios where GSC Inspection Alerts prove invaluable.

Monitoring Robots.txt state (Crawl is allowed?)

You have the option to choose from three types of alerts.

- Receive notifications when the URLs you’ve entered have the status “Crawl allowed by robots.txt” or “Crawl blocked by robots.txt.”

- Obtain information about the previous robots.txt status (status during the last Googlebot scan).

- Get information about status changes relative to the previous Google Search Console inspection check initiated by JetOctopus.

Let’s delve into all three alert types.

How to receive a notification if at least one page from the list becomes blocked by the robots.txt file

To receive notifications if any page from your list is blocked by the robots.txt file, so that Google cannot access it, follow these steps.

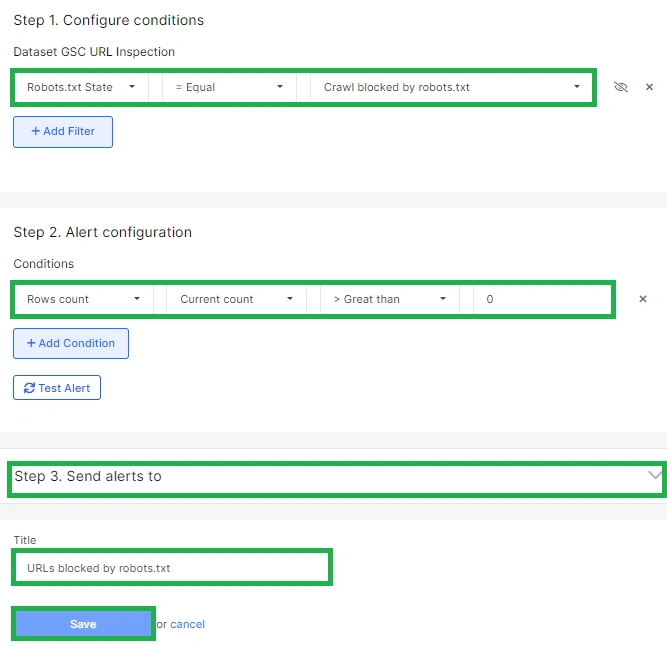

- Click the “Create Alert” button and choose “Differences in Google Search Console.”

- Select “Robots.txt State” and pick “Crawl blocked by robots.txt” under “Step 1. Configure Conditions.”

- In “Step 2. Alert Configuration,” select “Rows Count,” then “Greater than,” and set the value to 0.

- In “Step 3. Send Alerts To,” provide your email, phone number, or Slack channel for receiving notifications.

By following these steps, you’ll receive a notification if any URL you entered in the Google Search Console inspection schedule is blocked from crawling by the robots.txt file. This alert is crucial because it ensures that all significant pages on your website remain accessible to search engines. It also safeguards against unintended or unauthorized modifications to the robots.txt file. For example, if developers or many other people can edit this file, a single mistake can block the entire website from crawling; search engines will then be unable to access your pages, and, as a result, the pages can be deindexed. With this alert in place, you will be notified of such changes in time to prevent negative consequences.
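The logic of this alert (filter the monitored URLs by a condition, then fire when the row count is greater than 0) can be approximated locally. The sketch below is not how JetOctopus works; it is an independent check that uses Python's standard `urllib.robotparser`, and the function names, sample robots.txt, and URLs are assumptions. Python's parser does not handle wildcard rules the way Google does, so treat the result as a rough pre-check only.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt content disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


def should_alert(rows: list[str], threshold: int = 0) -> bool:
    """Mirror the "Rows Count" > "Greater than" > 0 condition from Step 2."""
    return len(rows) > threshold


# Hypothetical robots.txt content and monitored URLs.
robots_txt = "User-agent: *\nDisallow: /checkout/\n"
monitored = [
    "https://example.com/",
    "https://example.com/checkout/cart",
]

blocked = blocked_urls(robots_txt, monitored)
if should_alert(blocked):
    print(f"{len(blocked)} monitored URL(s) blocked by robots.txt: {blocked}")
```

Running a check like this before deploying a robots.txt change can catch an accidental site-wide `Disallow` earlier than the next scheduled inspection.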

Comparing previous “Crawl is allowed?” status

This alert lets you check the crawling status recorded during Google’s previous inspection of the page (based on the last Google crawl data, not real-time results). With this alert, you can identify any past issues accessing the website. If the “Crawl allowed?” status during the last Googlebot visit matches the value you selected (Yes or No), you’ll receive a notification without a real-time check. This is vital for tracking a page’s crawling and indexing progress.

To set up this alert, follow these steps.

- Create a new alert and choose “Robots.txt State Previous” – “Crawl blocked by robots.txt” under “Step 1. Configuration of Conditions.”

- In “Step 2. Alert Configuration,” choose “Rows Count,” then “Greater than,” and set the value to 0.

This way, you’ll receive a notification if any pages you entered when configuring the Google Search Console inspection schedule were blocked by the robots.txt file during Google’s last visit.

Tracking changes in crawling status

This alert informs you about changes in the search engine crawl status. You’ll be notified if pages that were previously allowed to be crawled in the robots.txt file are now prohibited, or vice versa.

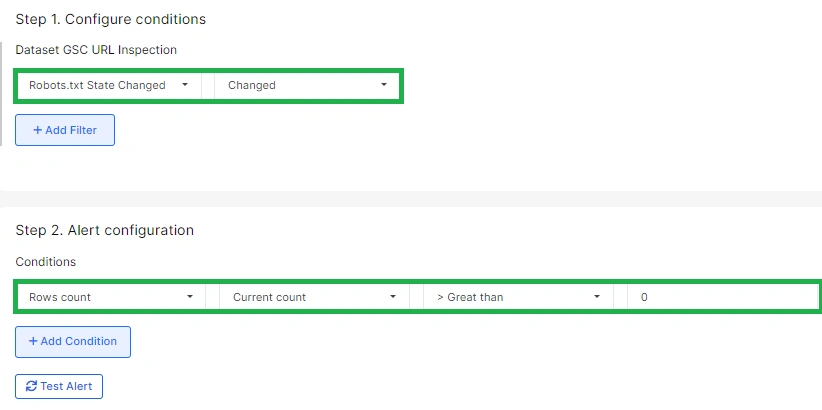

To set up this alert, follow these steps.

- Choose “Robots.txt State Changed” – “Changed” under “Step 1. Configuration of Conditions.”

- In “Step 2. Alert Configuration,” select “Rows Count,” then “Greater than,” and set the value to 0.

- Configure email, phone number, or Slack notifications in “Step 3.”

Similarly, you can use these three alert types to monitor indexing status: check the current indexing status, compare it with the status during the last Googlebot check, and get alerts for indexing status changes since the previous check.
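A "changed" alert boils down to comparing two snapshots of the same URLs. The following minimal sketch illustrates that comparison; the `changed_states` function, the state labels, and the sample paths are hypothetical, and the same function works for robots.txt state or indexing status alike.

```python
def changed_states(previous: dict[str, str], current: dict[str, str]) -> list[tuple[str, str, str]]:
    """Return (url, old_state, new_state) for URLs whose state differs between checks."""
    changes = []
    for url, new_state in current.items():
        old_state = previous.get(url)
        # URLs without an earlier inspection have nothing to compare against.
        if old_state is not None and old_state != new_state:
            changes.append((url, old_state, new_state))
    return changes


# Hypothetical snapshots from two consecutive inspection runs.
previous_check = {"/": "ALLOWED", "/blog/": "ALLOWED", "/cart/": "DISALLOWED"}
current_check = {"/": "ALLOWED", "/blog/": "DISALLOWED", "/cart/": "ALLOWED", "/new/": "ALLOWED"}

changes = changed_states(previous_check, current_check)
alert = len(changes) > 0  # same "Rows Count greater than 0" condition
```

Note that the comparison reports changes in both directions, so a page that becomes crawlable again is flagged as well, matching the "or vice versa" behaviour described above.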

Rich results alerts

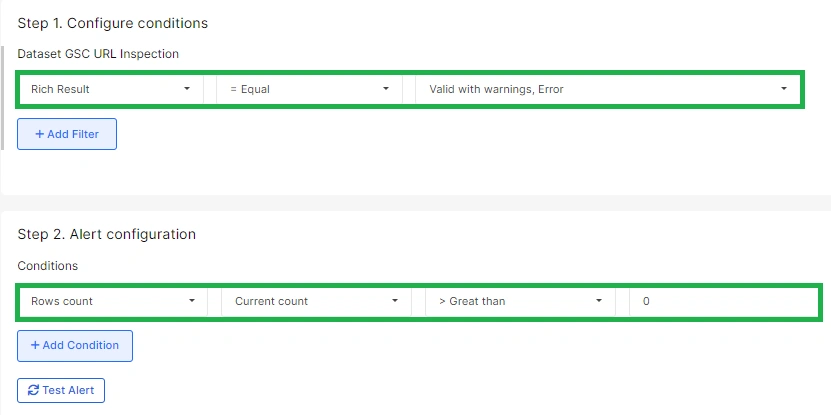

We also recommend giving special attention to the “Rich Result” aspect. You can receive notifications regarding any page on your website that contains errors in Rich results. These errors indicate issues with structured data, potentially resulting in an incorrect snippet display. To enable these notifications, set up a new alert.

- In “Step 1. Configuration of Conditions,” choose “Rich Result,” then select “= Equal” and pick “Valid with warnings” or “Error.”

- In “Step 2. Alert Configuration,” opt for “Rows count,” then “Greater than,” and set the value to 0.

- For notifications, configure email, phone, or Slack details in step 3.

Upon encountering structured data errors in URLs you’ve entered, you’ll be notified. This alert is particularly valuable for e-commerce sites with numerous products, where showcasing prices, reviews, and other data in Google snippets significantly enhances clickability.
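As a rough illustration of the condition in Step 1, the sketch below groups monitored URLs by rich result status so that a notification can list the affected pages. The status labels are taken from the alert settings described above; the function name and sample URLs are assumptions made for the example.

```python
from collections import defaultdict

# Statuses that should trigger the alert, as selected in Step 1.
ALERT_STATUSES = {"Valid with warnings", "Error"}


def rich_result_problems(rows: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs by rich result status, keeping only the alerting statuses."""
    problems = defaultdict(list)
    for url, status in rows.items():
        if status in ALERT_STATUSES:
            problems[status].append(url)
    return dict(problems)


# Hypothetical inspection results for three product pages.
inspected = {
    "https://example.com/product/1": "Valid",
    "https://example.com/product/2": "Error",
    "https://example.com/product/3": "Valid with warnings",
}

problems = rich_result_problems(inspected)
flagged_count = sum(len(urls) for urls in problems.values())
if flagged_count > 0:
    print(f"{flagged_count} page(s) with rich result issues: {problems}")
```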

Monitoring Google last crawl date

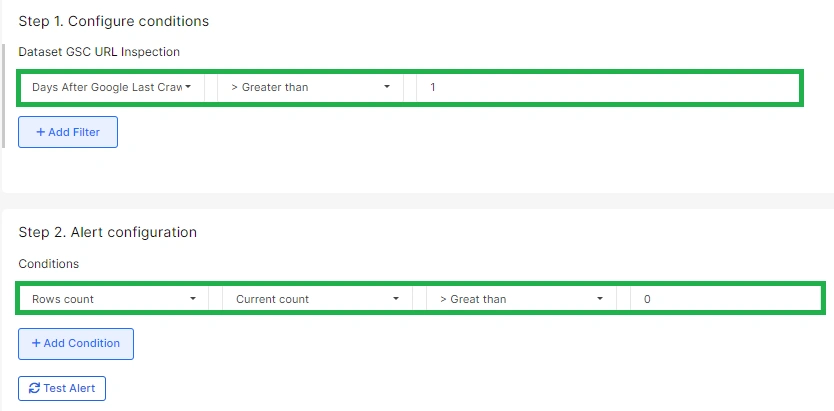

Now, let’s move on to another alert example. This technical alert informs you about long intervals between Googlebot crawls of a page. Follow these steps to set it up:

- Click the “Create Alert” button.

- In “Step 1. Configuration of Conditions,” choose “Days After Google Last Crawl.”

- Then, depending on your preference, select “Equal to” or “Greater than,” and specify the desired number (you can start with seven days).

- In step 2, choose “Rows count,” then “Greater than,” and set the value to 0.

- Configure email, phone, or Slack notifications in step 3.

Whenever Google hasn’t scanned a page for more than seven days, you’ll receive a notification. If this occurs, and the page’s content has been updated, you can consider requesting reindexing to ensure the latest content is reflected in search results.
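The "Days After Google Last Crawl" condition is simple date arithmetic. Here is a minimal sketch, assuming the last crawl time is available as an ISO 8601 timestamp string in UTC; the function names and sample data are hypothetical.

```python
from datetime import datetime, timezone


def days_since_last_crawl(last_crawl_time: str, now: datetime) -> int:
    """Whole days elapsed between the last crawl timestamp and `now`."""
    # Replace a trailing "Z" so older Python versions can parse the timestamp.
    crawled = datetime.fromisoformat(last_crawl_time.replace("Z", "+00:00"))
    return (now - crawled).days


def stale_urls(last_crawls: dict[str, str], now: datetime, max_days: int = 7) -> list[str]:
    """URLs whose last crawl is more than max_days old ("Greater than" condition)."""
    return [
        url for url, crawled_at in last_crawls.items()
        if days_since_last_crawl(crawled_at, now) > max_days
    ]


# Hypothetical last crawl times for two monitored pages.
last_crawls = {
    "https://example.com/": "2024-01-15T08:00:00Z",
    "https://example.com/old-page": "2024-01-10T08:00:00Z",
}
now = datetime(2024, 1, 20, tzinfo=timezone.utc)

stale = stale_urls(last_crawls, now)
```

Passing `now` in explicitly keeps the check reproducible; in a scheduled job it would typically be `datetime.now(timezone.utc)`.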

GSC Inspection Alerts are a powerful tool for staying ahead of critical issues that could impact your website’s indexing and search engine visibility. By configuring alerts, you can receive real-time notifications about changes in crawling status, errors in structured data, and extended periods between Google search engine scans. These alerts enable timely identification and resolution of issues, ultimately helping you maintain a healthy and effective SEO strategy.