I scanned the site with another crawler. Why doesn’t the number of pages match the results in JetOctopus crawl?

In this article, we explain why different crawlers may report different numbers of pages. We also recommend reading the article on why there are missing pages in the crawl results if you cannot find all the URLs in the full crawl results.

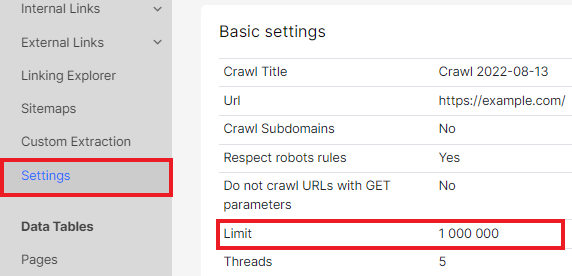

The first thing we recommend is to check your crawler settings. All crawlers have different default settings. Therefore, the number of pages found may vary significantly. To check the JetOctopus crawl settings, go to the desired crawl results and select the “Settings” menu. There you can find all the information about the activated crawling mode.

1. Check the page limit you set in the corresponding field when you started the crawl.

You may have set a page limit lower than the number of pages on your website, while the other crawler ran without a limit.

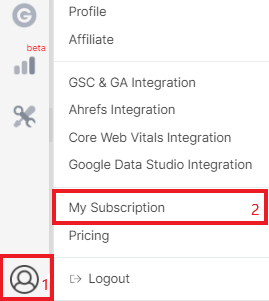

2. Your scan limits have run out. To check your limits, go to “My subscriptions”.

3. Check the DFI (Click Distance from Index) limit. By default, JetOctopus sets it to 1000. If your website has a very deep structure, pages deeper than the limit will not appear in the results. Some crawlers do not consider crawl depth at all.

DFI is the number of clicks from the home or start page to the current one. For search engines, this indicator is of great importance. Read more information in the article: What is DFI (distance from index) and how to analyze it.
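To illustrate how a depth limit changes the page count, here is a minimal sketch that computes click distance with a breadth-first search. The link graph, the `click_depths` function, and the `max_depth` parameter are hypothetical and only model the idea; this is not how JetOctopus is implemented.

```python
from collections import deque

def click_depths(links, start, max_depth=None):
    """Return {url: clicks from start} using breadth-first search.

    links: dict mapping a page to the pages it links to (hypothetical data).
    max_depth: pages at this depth are recorded but their links are not followed.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        if max_depth is not None and depths[page] >= max_depth:
            continue  # depth limit reached, do not go deeper
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical site structure used only for this example.
site = {
    "/": ["/category", "/about"],
    "/category": ["/category/page-2", "/product-a"],
    "/category/page-2": ["/product-b"],
}

unlimited = click_depths(site, "/")
limited = click_depths(site, "/", max_depth=2)
print(len(unlimited), len(limited))
```

With the limit of 2, `/product-b` (three clicks from the start page) is never discovered, so the two runs report different page counts for the same website.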

4. Scanning non-indexable pages and links with nofollow. By default, JetOctopus respects the scanning and indexing rules set for search robots, or specifically for Googlebot (the “Respect robots rules” checkbox is activated). What does this mean? First, when scanning, JetOctopus follows the rules in the robots.txt file (the group of rules for all user agents or, if there is none, the group for Googlebot). Second, JetOctopus does not process non-indexable pages.

In addition, there are two more rules. Usually, JetOctopus does not scan pages with GET parameters (the “Do not crawl URLs with GET parameters” checkbox). Also, by default, the crawler uses the “Follow links with rel="nofollow"” option. All of these settings can affect the number of pages in the crawl results.
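The effect of the robots.txt and GET-parameter rules can be sketched with Python's standard `urllib.robotparser` module. The robots.txt content, the URLs, and the `would_crawl` helper with its two flags are assumptions made for this example; they only mimic the idea behind the checkboxes and do not reproduce JetOctopus's actual logic.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with a single group for all user agents.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def would_crawl(url, respect_robots=True, skip_get_params=True):
    """Decide whether a crawler with the given settings would fetch the URL."""
    if respect_robots and not parser.can_fetch("*", url):
        return False  # blocked by robots.txt
    if skip_get_params and urlsplit(url).query:
        return False  # URL has GET parameters
    return True

urls = [
    "https://example.com/shoes",
    "https://example.com/shoes?color=red",
    "https://example.com/cart/checkout",
]

strict = [u for u in urls if would_crawl(u)]
permissive = [u for u in urls if would_crawl(u, respect_robots=False, skip_get_params=False)]
print(len(strict), len(permissive))
```

A crawler that ignores both rules reports all three URLs, while the stricter configuration reports only one.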

5. JavaScript processing. If you chose to scan a website without JavaScript and the website has no prerender, the number of internal links found in the static HTML code may be lower. By default, JetOctopus does not execute JavaScript. Check whether the other crawlers you used rendered JavaScript.

For an explanation, see the article “Why do JavaScript and HTML versions differ in crawl results?”.
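As a rough illustration of why a crawl without JavaScript can find fewer links, the sketch below extracts links from static HTML with Python's standard `html.parser`. The HTML snippet and the `LinkCollector` class are hypothetical.

```python
from html.parser import HTMLParser

class LinkCollector(HTMLParser):
    """Collect href values of <a> tags present in the static HTML."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

# Hypothetical page: one link in the markup, one added by a script at runtime.
STATIC_HTML = """
<html><body>
<a href="/static-page">Static link</a>
<script>
  var a = document.createElement("a");
  a.href = "/js-only-page";
  document.body.appendChild(a);
</script>
</body></html>
"""

collector = LinkCollector()
collector.feed(STATIC_HTML)
static_links = collector.links
print(static_links)
```

The parser never runs the script, so `/js-only-page` is not discovered. A crawler that renders JavaScript would see both links.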

6. Crawling subdomains. When starting the crawl, you can activate the “Crawl Subdomains” checkbox to scan all the subdomains that JetOctopus finds in your website’s internal links. Different crawlers handle subdomains differently, so the number of pages in the results may vary.
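The sketch below shows one possible way a crawler might decide whether a link is in scope. The `in_scope` function and its `crawl_subdomains` flag are hypothetical, and treating every host that ends with the start domain (including `www`) as a subdomain is an assumption; real crawlers may apply different rules.

```python
from urllib.parse import urlsplit

def in_scope(url, start_host, crawl_subdomains):
    """Return True if the URL belongs to the start host or, optionally, its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    if host == start_host:
        return True
    # Assumption: any host ending with ".<start_host>" counts as a subdomain.
    return crawl_subdomains and host.endswith("." + start_host)

# Hypothetical internal links found during a crawl.
links = [
    "https://example.com/",
    "https://blog.example.com/post",
    "https://shop.example.com/item",
    "https://notexample.com/",
]

without_subdomains = [u for u in links if in_scope(u, "example.com", False)]
with_subdomains = [u for u in links if in_scope(u, "example.com", True)]
print(len(without_subdomains), len(with_subdomains))
```

The same set of links yields one page in scope without subdomains and three with them.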

7. JetOctopus scans sitemaps by default, while many other crawlers do not. Sitemap scanning helps to find orphaned pages.
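To show how sitemap scanning surfaces orphaned pages, here is a minimal sketch using Python's standard `xml.etree.ElementTree`. The sitemap content and the set of pages reached through internal links are made-up example data.

```python
import xml.etree.ElementTree as ET

# Hypothetical sitemap content.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/shoes</loc></url>
  <url><loc>https://example.com/old-landing</loc></url>
</urlset>"""

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

root = ET.fromstring(SITEMAP_XML)
sitemap_urls = {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)}

# Hypothetical set of pages reached by following internal links only.
linked_urls = {"https://example.com/", "https://example.com/shoes"}

# Pages listed in the sitemap that no internal link points to.
orphans = sitemap_urls - linked_urls
print(sorted(orphans))
```

A crawler that reads the sitemap reports three pages here, while one that only follows links reports two.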

Other settings may also matter, for example the user agent, mobile or desktop browser, and crawl speed. Also check the other options in Advanced settings, such as deny and allow patterns.

JetOctopus always recommends scanning the entire website to get accurate data about the number of pages. To do this, configure the settings accordingly and run a full crawl.