403 pages in crawl results

During website scanning, you may come across pages that return a 403 status code, even though the same pages return a 200 status code when you check them manually or with tools such as Google Search Console or the Mobile-Friendly Test. In this article, we explain why this happens and how to fix it.

What is the 403 Forbidden status code

As discussed in our previous article “Status codes: what they are and what they mean”, 4xx status codes indicate a client error that prevents the web server from returning the content of the page. The 403 Forbidden status code in particular is often returned when the requesting user agent is blocked, either by security software or by server-side rules. For instance, Cloudflare may return a 403 status code to unverified or fake bots that try to access the website.

Pages that show a captcha may also return a 403 status code.

Therefore, if you come across 403 pages in your crawl results, it may indicate that JetOctopus is blocked from accessing those pages. To resolve this issue, you may need to whitelist JetOctopus or adjust the security settings of your web server to allow access to the restricted pages.
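One quick way to check whether a 403 depends on the user agent rather than on the page itself is to request the same URL twice, once with a browser user agent and once with a Googlebot user agent, and compare the status codes. The Python sketch below uses only the standard library; the `status_for` and `compare_user_agents` helpers and both user-agent strings are illustrative assumptions, not the exact strings JetOctopus sends. Because the requests come from your own IP, this check will not reproduce blocking that is based on the crawler's IP.

```python
import urllib.error
import urllib.request

# Illustrative user-agent strings (assumption: not the exact ones JetOctopus uses).
USER_AGENTS = {
    "browser": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
               "(KHTML, like Gecko) Chrome/120.0 Safari/537.36",
    "googlebot": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
}


def status_for(url: str, user_agent: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code that `url` returns for the given User-Agent."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urlopen raises HTTPError for 4xx/5xx responses; the status code is kept on it.
        return error.code


def compare_user_agents(url: str) -> dict[str, int]:
    """Fetch `url` once per user agent and map each label to its status code."""
    return {label: status_for(url, agent) for label, agent in USER_AGENTS.items()}


if __name__ == "__main__":
    print(compare_user_agents("https://example.com/"))
```

A result where the browser gets 200 and the Googlebot user agent gets 403 suggests the block is tied to the user agent.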

How to fix 403 pages

Note that 403 pages cannot be fixed retroactively once they appear in the crawl results: JetOctopus was already denied access to them, so data such as their titles and meta descriptions may be missing from those results.

To address this issue, we recommend taking the following steps:

1. Whitelist JetOctopus user agents.

Make sure JetOctopus is whitelisted at the web server level or in your website’s security software, such as Cloudflare. This allows the crawler to access your website without receiving a 403 error.

2. Whitelist JetOctopus IPs.

In addition to whitelisting user agents, you should whitelist JetOctopus IPs. You may want to crawl your website with a Googlebot user agent, and many security systems verify such requests with a reverse DNS lookup. JetOctopus only emulates Googlebot, and its requests do not come from Google’s IP addresses, so they fail this verification. Adding the JetOctopus IPs to the whitelist lets you crawl your website with the Googlebot Desktop or Googlebot Smartphone user agents.
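The verification mentioned above is usually the check Google documents for confirming Googlebot: a reverse DNS lookup on the requesting IP, a check that the hostname belongs to Google, and a forward DNS lookup confirming that the hostname resolves back to the same IP. The Python sketch below shows that logic under those assumptions; the helper names are hypothetical, and the domain list reflects Google's Googlebot guidance as we understand it, so check Google's current documentation before relying on it. A request from a JetOctopus IP fails at the hostname check, which is why its IPs need to be whitelisted.

```python
import ipaddress
import socket
from typing import Callable, Iterable

# Hostname suffixes for Googlebot's reverse DNS records (assumption: verify
# against Google's current documentation).
GOOGLEBOT_SUFFIXES = (".googlebot.com", ".google.com")


def reverse_lookup(ip: str) -> str:
    """Return the PTR hostname for `ip`; raises OSError if there is none."""
    return socket.gethostbyaddr(ip)[0]


def forward_lookup(hostname: str) -> list[str]:
    """Return all IPv4 and IPv6 addresses that `hostname` resolves to."""
    return [info[4][0] for info in socket.getaddrinfo(hostname, None)]


def is_verified_googlebot(
    ip: str,
    reverse: Callable[[str], str] = reverse_lookup,
    forward: Callable[[str], Iterable[str]] = forward_lookup,
) -> bool:
    """Reverse-resolve `ip`, check the domain, then forward-confirm the IP."""
    try:
        target = ipaddress.ip_address(ip)
        hostname = reverse(ip).rstrip(".").lower()
        if not hostname.endswith(GOOGLEBOT_SUFFIXES):
            return False  # e.g. a crawler that only borrows Googlebot's user agent
        # The hostname must resolve back to the same IP, otherwise the PTR record
        # could have been set up by anyone.
        return any(ipaddress.ip_address(addr) == target for addr in forward(hostname))
    except (OSError, ValueError):
        # No PTR record, failed resolution, or a malformed IP string.
        return False
```

In practice you would call `is_verified_googlebot(ip)` with the default resolvers; the `reverse` and `forward` parameters exist only so the logic can be tested without DNS.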

More information: How do I crawl with the Googlebot user-agent for sites that use Cloudflare?

A complete list of user agents used by JetOctopus

If your website uses a captcha, set up an exception for JetOctopus.

Once JetOctopus is whitelisted, you can recrawl the affected pages. If the crawl has already finished, the easiest option is to start a new crawl of only the 403 pages. First, open the “Pages” data table and add the filter “Status code” – “=Equals” – “403”, then apply it.

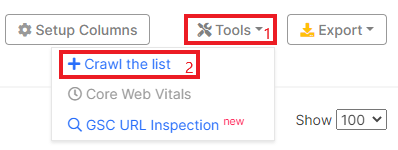

Next, click on the “Tools” menu and select “Crawl the list”.

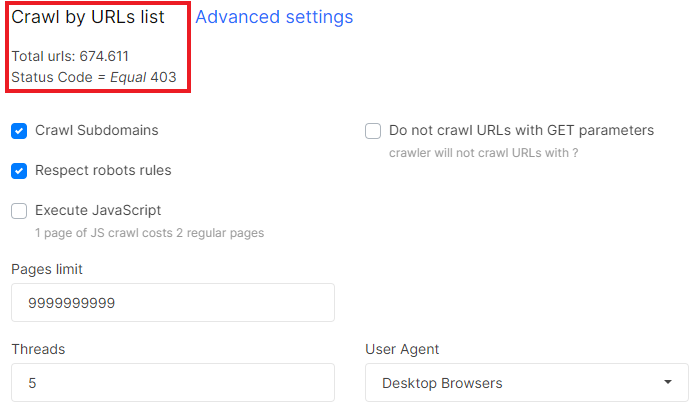

The crawl settings menu opens with the list of 403 pages already filled in, so you only need to configure settings such as the number of threads, the page limit, and any advanced settings.

Start the crawl and wait for it to finish. You can then combine the data from both crawls (the original crawl with 403 pages and the recrawl without them) using the crawl comparison tool.
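The crawl comparison tool is the intended way to combine the two crawls. If you also export both crawls and want a single table offline, the idea is to overlay the recrawl on the original crawl by URL. The Python sketch below assumes CSV exports with a `URL` column; the column name and the `merge_crawls` helper are hypothetical, so adjust them to your actual export.

```python
import csv
from typing import Iterable


def merge_crawls(
    original_rows: Iterable[str],
    recrawl_rows: Iterable[str],
    url_field: str = "URL",
) -> list[dict[str, str]]:
    """Overlay recrawl results on the original crawl, keyed by URL.

    Both arguments are CSV text lines; an open file object works too.
    """
    merged: dict[str, dict[str, str]] = {}
    for row in csv.DictReader(original_rows):
        merged[row[url_field]] = row
    for row in csv.DictReader(recrawl_rows):
        # A recrawled URL replaces its stale 403 row; existing URLs keep their position.
        merged[row[url_field]] = row
    return list(merged.values())
```

For example, `merge_crawls(open("original.csv", newline=""), open("recrawl.csv", newline=""))` returns one row per URL, taking the recrawl's data wherever it exists (file names are placeholders).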

If you notice 403 status codes while the crawl is still running (the crawl progress view includes a chart of status codes), click the “Pause” button, whitelist JetOctopus, and then resume the crawl.

If you are not sure whether JetOctopus is whitelisted, run a test crawl of 1,000-2,000 pages with the Googlebot user agent before starting a full crawl.

A few tips for the test crawl:

- use a Googlebot user agent, because protection systems often block bots that use a search engine’s user agent string but do not come from a verified IP;

- include pages of all types, because a captcha may appear only on certain page types, such as product or category pages.

These steps will help you verify that JetOctopus has access to all pages.
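To make sure the test crawl covers every page type, you can build its URL list by sampling evenly across sections of the site. The Python sketch below treats the first path segment (for example `/product/` or `/category/`) as the page type, which is an assumption that fits many but not all URL structures. The 1,500-URL default simply sits inside the 1,000-2,000 range suggested above, and the helper names are hypothetical.

```python
import random
from collections import defaultdict
from urllib.parse import urlsplit


def page_type(url: str) -> str:
    """Rough page-type label: the first path segment, or "home" for the root."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    return segments[0] if segments else "home"


def sample_test_urls(urls: list[str], limit: int = 1500, seed: int = 0) -> list[str]:
    """Pick up to `limit` URLs, spreading them evenly across page types."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        groups[page_type(url)].append(url)
    rng = random.Random(seed)
    for members in groups.values():
        rng.shuffle(members)  # random pick within each type, reproducible via `seed`
    sample: list[str] = []
    # Round-robin over page types so that no type is crowded out by a larger one.
    while len(sample) < limit and any(groups.values()):
        for members in groups.values():
            if members and len(sample) < limit:
                sample.append(members.pop())
    return sample
```

The resulting list can be pasted into a list crawl; if a page type you expect is missing from the sample, the path-segment assumption probably does not match your URL structure.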

If no 403 pages were returned in the test crawl, you can run a full crawl of your website.

What to pay attention to if you found 403 pages in the crawl results

Logs. Check whether search engine bots (and any advertising bots you use) consistently have access to your website. If your logs contain 403 responses, make sure you are not blocking Googlebot or other verified search engine bots.

Users. Check the user logs. Real users should not receive a 403 status code.
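For the log checks above, a first pass can be as simple as counting 403 responses by who received them. The Python sketch below assumes access logs in the common Apache/Nginx combined format and buckets requests by user-agent substrings. This is a rough heuristic: user agents can be faked, so a Googlebot hit should still be confirmed with a reverse DNS lookup before you conclude that Google is being blocked. The function and bucket names are hypothetical.

```python
import re
from collections import Counter
from typing import Iterable

# Combined log format: IP, identity, user, [time], "request", status, size, "referer", "user agent".
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def classify_agent(agent: str) -> str:
    """Very rough user-agent bucket (a heuristic, not bot verification)."""
    agent = agent.lower()
    if "googlebot" in agent:
        return "googlebot"
    if "bingbot" in agent:
        return "bingbot"
    if any(token in agent for token in ("bot", "crawler", "spider")):
        return "other bots"
    return "users"


def count_403s(lines: Iterable[str]) -> Counter:
    """Count 403 responses per user-agent bucket; unparseable lines are skipped."""
    counts: Counter = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and match.group("status") == "403":
            counts[classify_agent(match.group("agent"))] += 1
    return counts
```

For example, `count_403s(open("access.log"))` gives per-bucket totals; any 403s under `googlebot` or `bingbot` deserve a closer look, and 403s under `users` may point to real visitors being blocked.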

Exclude 403 pages when analyzing crawl results. Because blocked URLs typically return the same block or captcha page, they may share the same title, content, loading time, and so on. Leave them out when analyzing your website’s content and duplication, or they will distort the results.
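As an illustration of why 403 pages should be left out, the Python sketch below groups crawled URLs by title to find duplicates and skips 403 rows first; otherwise every blocked URL that serves the same block page would be reported as a duplicate. It assumes each page is a dict with `url`, `status_code`, and `title` keys, which are hypothetical field names rather than JetOctopus's export format.

```python
from collections import defaultdict


def duplicate_titles(pages: list[dict[str, str]]) -> dict[str, list[str]]:
    """Group URLs by title, skipping 403 pages, and keep titles shared by 2+ URLs."""
    by_title: dict[str, list[str]] = defaultdict(list)
    for page in pages:
        if str(page["status_code"]) == "403":
            continue  # block or captcha pages would otherwise show up as duplicates
        by_title[page["title"].strip()].append(page["url"])
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}
```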

Important: to avoid using up your crawl limits, always check that JetOctopus can access your website before starting a large crawl.