Internet of Bots: A Quick Study of Bot Traffic Share and Fake Googlebots

Have you ever had a chance to look into the live tail of your website’s access log?

You’d expect to see lots of different user agents from your visitors’ browsers. But more likely, you’ll find a variety of bots.

What is bot traffic?

In simple words, when a person uses a web browser to visit websites, that counts as traffic from real humans. Everything else is bot traffic.

Of course, you know that the most important bots for any SEO specialist are search engine bots: Googlebot, Bingbot, and even Applebot. But bots are everywhere. They can be as simple as Python scripts written by students, and they also arrive on websites from powerful marketing tools like Ahrefs, which crawl billions of pages each day.

I was always curious: what is the proportion between search engine bots and all other types of bots? Over time, JetOctopus has gathered huge datasets of access logs from many websites across various countries, which enabled me to conduct a small study of the state of bot traffic on the Internet.

Let’s dive in!

Methodology of the research

I used part of the JetOctopus log dataset (about 50 billion log lines) to calculate the total number of visits and pages, and the number of legitimate and fake bots.
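If you want to run a similar count on your own logs, here is a minimal Python sketch. It assumes the access log uses the common Combined Log Format; the `BOT_TOKENS` mapping, the function names, and the sample lines are hypothetical illustrations, not JetOctopus’s actual pipeline. Note that it only groups requests by the bot a user agent *claims* to be; separating legitimate from fake bots needs an extra verification step.

```python
import re
from collections import Counter, defaultdict

# Combined Log Format (assumed):
# ip ident user [time] "METHOD path PROTOCOL" status bytes "referer" "user-agent"
LOG_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

# Hypothetical mapping from lowercase user-agent substrings to bot names.
# This reflects the *claimed* identity only; anyone can fake a user agent.
BOT_TOKENS = {
    "googlebot": "Googlebot",
    "bingbot": "Bingbot",
    "yandexbot": "YandexBot",
    "applebot": "Applebot",
    "ahrefsbot": "AhrefsBot",
    "semrushbot": "SemrushBot",
}


def classify(user_agent: str) -> str:
    """Return the bot a user agent claims to be, or 'other' (browsers, unknown bots)."""
    ua = user_agent.lower()
    for token, name in BOT_TOKENS.items():
        if token in ua:
            return name
    return "other"


def summarize(lines):
    """Count visits and unique pages per claimed bot; malformed lines are skipped."""
    visits = Counter()
    pages = defaultdict(set)
    for line in lines:
        match = LOG_RE.match(line)
        if match is None:
            continue
        bot = classify(match["ua"])
        visits[bot] += 1
        pages[bot].add(match["path"])
    return visits, {bot: len(paths) for bot, paths in pages.items()}


# Hypothetical sample lines for illustration.
SAMPLE_LINES = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /shoes HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Oct/2023:13:55:37 +0000] "GET /shoes HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [10/Oct/2023:13:55:38 +0000] "GET /bags HTTP/1.1" 200 4096 "-" '
    '"Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)"',
    "not a log line",
]

if __name__ == "__main__":
    visits, pages = summarize(SAMPLE_LINES)
    for bot, count in visits.most_common():
        print(f"{bot}: {count} visits, {pages[bot]} unique pages")
```

On a dataset of billions of lines you would stream the file line by line (the function already accepts any iterable) rather than load it into memory.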

Most of our user base consists of huge e-commerce websites, catalogs, and aggregators, so this research portrays the bot traffic ratio on such big websites.

Here’s what I found:

Bot traffic from Googlebot and Google’s crawlers

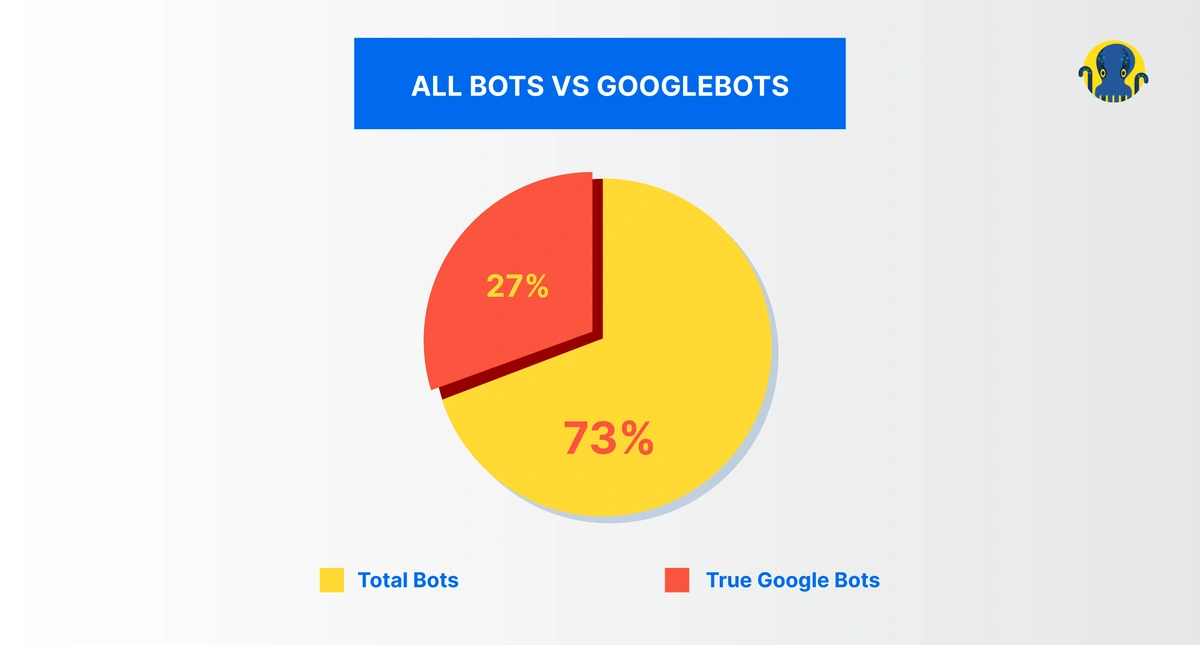

As you’d expect, in sheer numbers Googlebot is the most powerful crawler on the web: no other bot produces more visits. But look at the share of true Googlebot requests among all bot requests:

In simple words, only one in three bot requests is made by a true Googlebot.

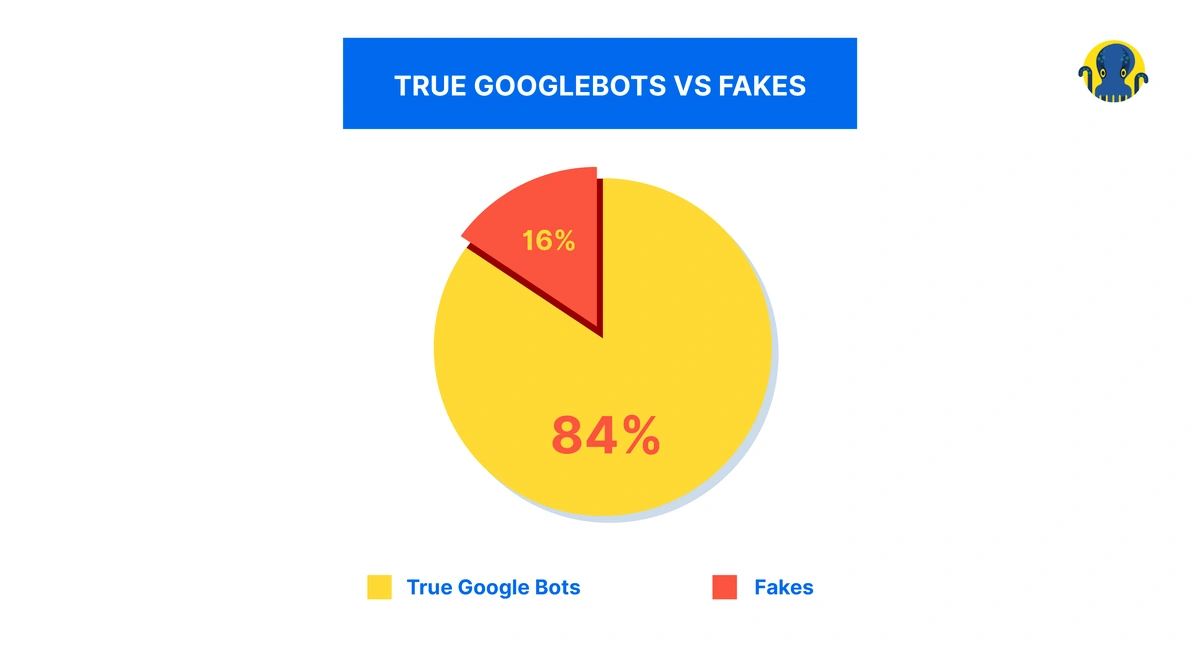

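Anyone can use a Googlebot user agent to crawl your website, so the user-agent string alone proves nothing. A reliable way to verify a true Googlebot is a reverse DNS lookup on the requesting IP address (the hostname should belong to googlebot.com or google.com), followed by a forward DNS lookup to confirm that this hostname resolves back to the same IP.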
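Here is a minimal Python sketch of that two-step check, using only the standard `socket` module. The function names and the injectable `reverse`/`forward` parameters are hypothetical conveniences that let you test the logic without network access. `socket.gethostbyname_ex` only returns IPv4 addresses, so IPv6 crawler IPs would need `socket.getaddrinfo` instead.

```python
import socket
from typing import Callable, List, Optional

# Domains used in the reverse-DNS hostnames of Googlebot and Google's crawlers.
GOOGLE_DOMAINS = (".googlebot.com", ".google.com")


def reverse_lookup(ip: str) -> Optional[str]:
    """Return the PTR hostname for ip, or None if the lookup fails."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except OSError:  # socket.herror and socket.gaierror are OSError subclasses
        return None


def forward_lookup(hostname: str) -> List[str]:
    """Return the IPv4 addresses hostname resolves to (empty list on failure)."""
    try:
        return socket.gethostbyname_ex(hostname)[2]
    except OSError:
        return []


def is_verified_googlebot(
    ip: str,
    reverse: Callable[[str], Optional[str]] = reverse_lookup,
    forward: Callable[[str], List[str]] = forward_lookup,
) -> bool:
    # Step 1: reverse DNS. The IP must map to a Google-owned hostname.
    hostname = reverse(ip)
    if not hostname:
        return False
    hostname = hostname.rstrip(".").lower()
    if not hostname.endswith(GOOGLE_DOMAINS):
        return False
    # Step 2: forward DNS. The hostname must resolve back to the same IP;
    # otherwise anyone who controls their own PTR record could pass step 1.
    return ip in forward(hostname)
```

In production you would call `is_verified_googlebot(ip)` with the default resolvers and cache the result per IP, since a DNS round trip is far slower than reading a log line.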

The pie chart below shows that fake bots are indeed quite common:

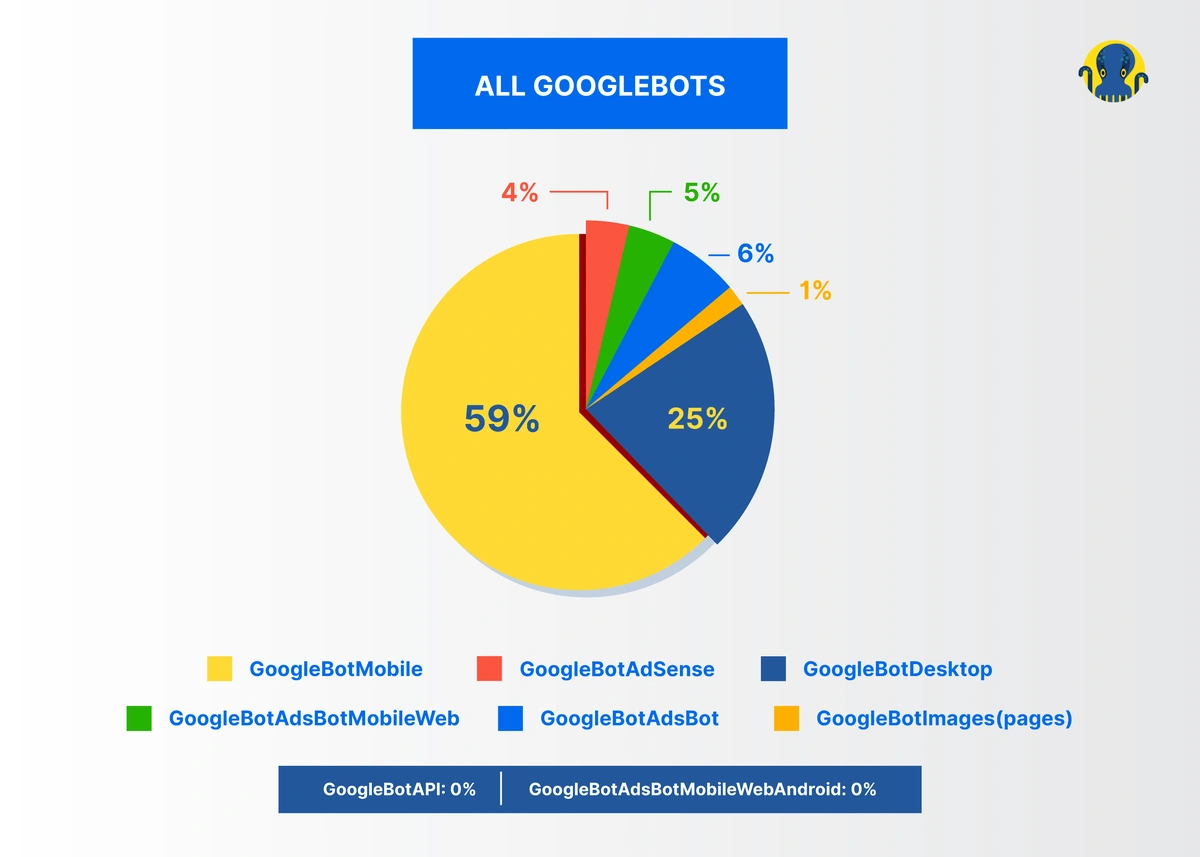

Google itself also runs several other crawlers, such as AdsBot, Googlebot Image, and more.

Even so, 84% of the traffic from Google’s crawlers comes from its main search crawler, Googlebot.
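A breakdown like this can be computed by matching Google’s crawler product tokens in the user-agent string. The sketch below rests on simplifying assumptions: the token list covers only a few crawlers, the names are hypothetical, and it trusts the user agent, so in practice you would first filter out fake bots with the reverse DNS check.

```python
from collections import Counter
from typing import Dict, Iterable, Optional

# A few Google crawler product tokens (illustrative, not exhaustive).
# More specific tokens come first: "Googlebot-Image/1.0" also contains "Googlebot".
GOOGLE_CRAWLERS = [
    ("AdsBot-Google", "AdsBot"),
    ("Googlebot-Image", "Googlebot Image"),
    ("Googlebot-Video", "Googlebot Video"),
    ("Googlebot", "Googlebot"),
]


def google_crawler(user_agent: str) -> Optional[str]:
    """Return the Google crawler a user agent claims to be, or None."""
    for token, name in GOOGLE_CRAWLERS:
        if token in user_agent:
            return name
    return None


def crawler_shares(user_agents: Iterable[str]) -> Dict[str, float]:
    """Percentage of Google-crawler requests per crawler; other requests are ignored."""
    counts: Counter = Counter()
    for ua in user_agents:
        name = google_crawler(ua)
        if name is not None:
            counts[name] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {name: 100 * n / total for name, n in counts.items()}
```

The ordering of `GOOGLE_CRAWLERS` is the main design choice: a plain substring match on "Googlebot" alone would silently count image-crawler requests as main-crawler requests.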

Bot traffic from other search engines

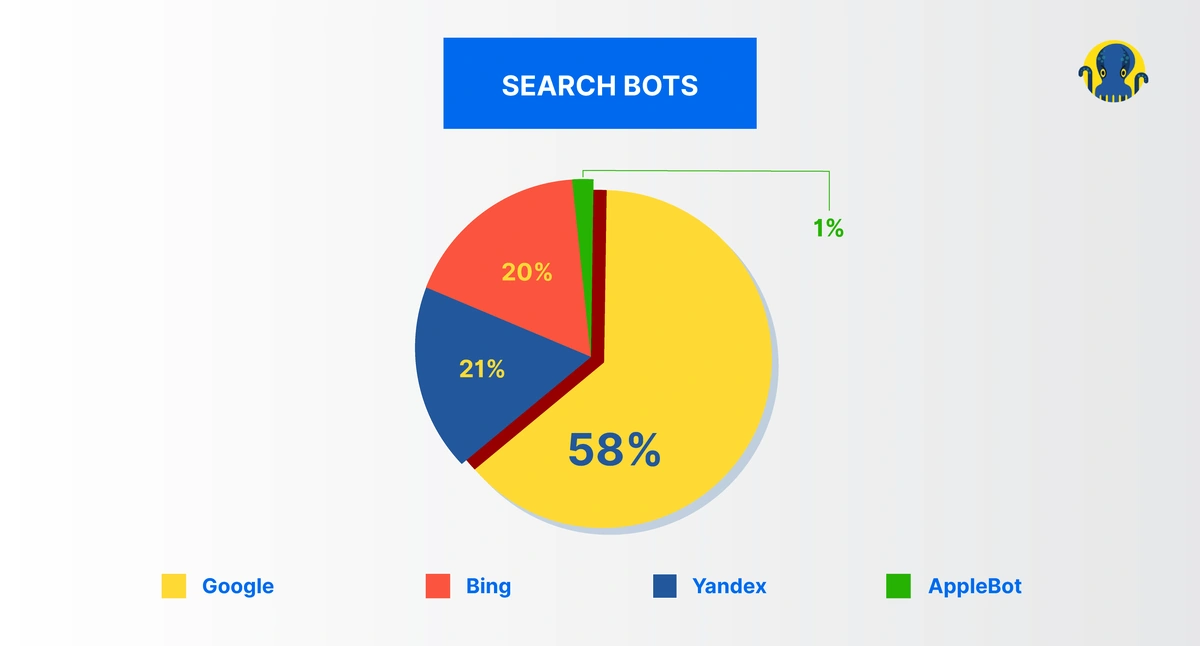

Besides Google, the search giant, websites receive traffic from other search engine bots, such as those of Bing, Yandex, and even Apple.

Did you hear the speculation last year about Apple planning to launch its own full-fledged search engine? Apple already has an in-house web crawler, Applebot, which is used to help power services like Siri and Spotlight on iOS and OS X.

But the current data shows a very small share of traffic from Applebot, insignificant even compared to less popular search engines like Yandex.

Bot traffic from marketing tools

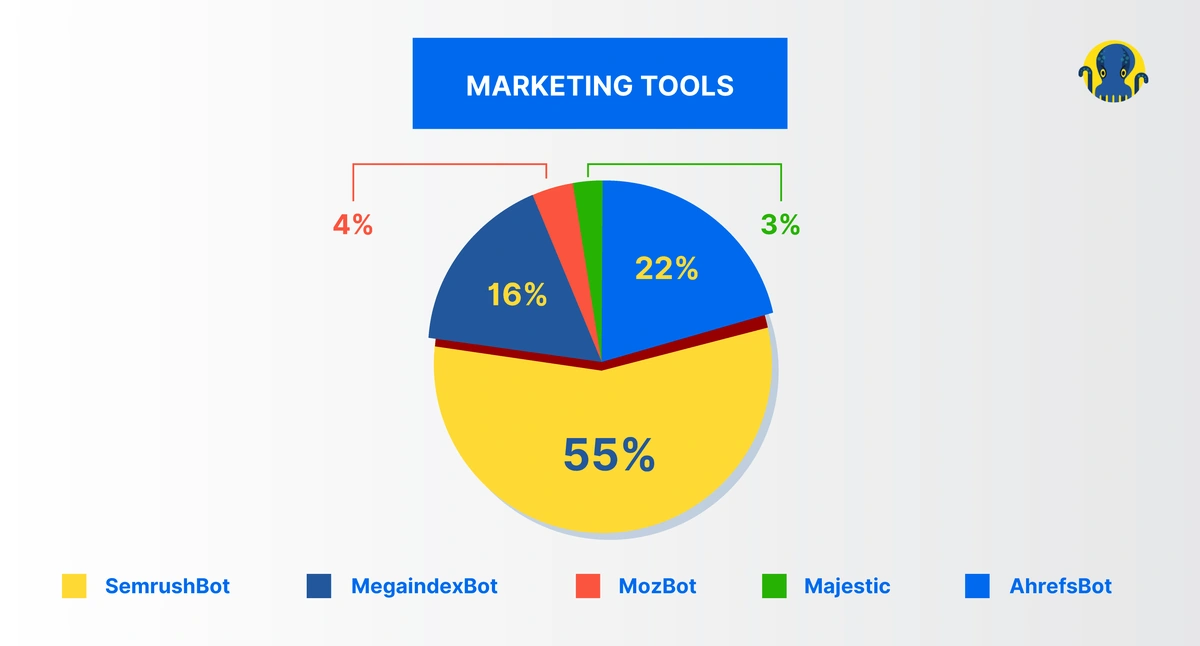

Ahrefs, Semrush, and Moz: tools like these are part of any SEO or marketing professional’s toolkit. They have very powerful crawling capabilities and produce hundreds of millions of crawl requests every day.

By the numbers, Semrush and Ahrefs are the leaders, while other tools like Majestic crawl far fewer websites. Does that affect the quality of their data?

Key takeaways

Bots are a norm on the internet, and bot traffic is bound to increase as servers become cheaper and new technologies make crawling faster. The main problem with bots is the load they put on your web server, which can slow down your pages. This, in turn, can affect Googlebot’s perception of your website and hurt your rankings.

So, how do you get a complete picture of bot traffic on your website? The easiest way is log file analysis, which lets you identify the fake bots that just scrape your website and eat into your server’s capacity.
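As a rough sketch of one part of that analysis in Python: the function below counts how many requests claiming to be Googlebot come from verified versus fake IPs, and which fake IPs are the busiest. `verify` is a hypothetical callable you supply (for example, the reverse DNS check mentioned above). Wrapping it in `functools.lru_cache` means each distinct IP is checked only once, which matters when a log has billions of lines but far fewer distinct IPs.

```python
from collections import Counter
from functools import lru_cache
from typing import Callable, Iterable, Tuple


def fake_googlebot_report(
    requests: Iterable[Tuple[str, str]],
    verify: Callable[[str], bool],
) -> Tuple[Counter, Counter]:
    """Split requests that claim to be Googlebot into verified and fake.

    requests: (ip, user_agent) pairs taken from the access log.
    verify:   caller-supplied check returning True for a genuine Googlebot IP.
    """
    cached_verify = lru_cache(maxsize=None)(verify)  # one check per distinct IP
    totals: Counter = Counter()
    fake_ips: Counter = Counter()
    for ip, user_agent in requests:
        if "googlebot" not in user_agent.lower():
            continue  # only look at requests that claim to be Googlebot
        if cached_verify(ip):
            totals["verified"] += 1
        else:
            totals["fake"] += 1
            fake_ips[ip] += 1  # the busiest fake IPs are blocking candidates
    return totals, fake_ips
```

From the result, `totals["fake"] / sum(totals.values())` gives the share of fake Googlebot requests, and `fake_ips.most_common(10)` lists the heaviest offenders.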

A common question you might have: should you block all bots except Googlebot? Well, if your website is small and performs well, it’s best to do nothing.

But if you have a huge website with a lot of pages, constant problems with performance, or heavy scraping of your content, it makes sense to work on optimizing your bot traffic.

When blocking bots, a general best practice is not to be too aggressive. I’ve seen cases where even Googlebot was accidentally blocked, and you can imagine the impact of such a mistake on a big business. Here’s a quick three-step process to consider:

- As a first step, consider using a proxy service like Cloudflare or Incapsula. These services can filter out much of the traffic from fake bots.

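- The second step is to block unnecessary bots in robots.txt. Keep in mind that robots.txt is advisory: well-behaved crawlers follow it, but fake bots may simply ignore it.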

- The third step is targeted blocking by user agent, IP subnet, or even country.
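Steps two and three can be sketched with Python’s standard library. The robots.txt rules, blocked networks, and user-agent tokens below are hypothetical examples (the networks are reserved documentation ranges), not recommendations. `urllib.robotparser` matches rules against a crawler’s product token such as `MJ12bot`, not the full user-agent string. Because only polite crawlers honor robots.txt, a check like `should_block` is what would catch bots that ignore it; in a real setup this logic usually lives in the web server, CDN, or firewall rather than in application code. Country blocking is left out because it needs an IP geolocation database.

```python
import ipaddress
from urllib.robotparser import RobotFileParser

# Step 2 (example): ask a couple of SEO-tool crawlers to stay away entirely,
# while allowing everyone else. Which bots are "unnecessary" is site-specific.
ROBOTS_TXT = """\
User-agent: MJ12bot
User-agent: SemrushBot
Disallow: /

User-agent: *
Disallow:
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

# Step 3 (example): server-side rules for bots that ignore robots.txt.
# These networks are reserved documentation ranges standing in for real offenders.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]
BLOCKED_UA_TOKENS = ("python-requests", "scrapy")  # hypothetical scraper signatures


def should_block(ip: str, user_agent: str) -> bool:
    """Return True if the request matches a blocked subnet or user-agent token."""
    addr = ipaddress.ip_address(ip)
    if any(addr in network for network in BLOCKED_NETWORKS):
        return True
    ua = user_agent.lower()
    return any(token in ua for token in BLOCKED_UA_TOKENS)


if __name__ == "__main__":
    googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
    print(robots.can_fetch("MJ12bot", "https://example.com/catalog/"))
    print(robots.can_fetch("Googlebot", "https://example.com/catalog/"))
    print(should_block("66.249.66.1", googlebot_ua))
```

The last call is a cheap guard against the Googlebot mistake described above: before deploying new rules, confirm that a genuine Googlebot request is not blocked.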

During each step, keep a constant eye on errors, Googlebot traffic, and user traffic. If everything is done properly, you’ll see less unwanted bot traffic and better performance, and in some cases you could even save some money by getting rid of redundant servers.
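One way to keep that check systematic is to compare per-segment statistics before and after each change. The sketch below assumes you have already labeled each log entry with a segment such as `"googlebot"` or `"users"`; the segment names, the 20% threshold, and the choice to watch 403 responses (a typical sign of accidentally blocking Googlebot) are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Stats = Dict[str, Dict[str, float]]


def segment_stats(entries: Iterable[Tuple[str, int]]) -> Stats:
    """Summarize (segment, HTTP status) pairs, e.g. ("googlebot", 200)."""
    requests: Counter = Counter()
    server_errors: Counter = Counter()
    forbidden: Counter = Counter()
    for segment, status in entries:
        requests[segment] += 1
        if status >= 500:
            server_errors[segment] += 1
        elif status == 403:
            forbidden[segment] += 1
    return {
        seg: {
            "requests": n,
            "error_rate": server_errors[seg] / n,
            "forbidden_rate": forbidden[seg] / n,
        }
        for seg, n in requests.items()
    }


def googlebot_alerts(before: Stats, after: Stats, max_drop: float = 0.2) -> List[str]:
    """Flag a large drop in Googlebot requests or any 403s served to Googlebot."""
    alerts = []
    base = before.get("googlebot", {}).get("requests", 0)
    now = after.get("googlebot", {}).get("requests", 0)
    if base and (base - now) / base > max_drop:
        alerts.append("googlebot requests dropped")
    if after.get("googlebot", {}).get("forbidden_rate", 0) > 0:
        alerts.append("googlebot received 403 responses")
    return alerts
```

Comparing like-for-like periods (for example, the same weekday before and after a change) keeps normal fluctuations in crawl rate from triggering false alarms.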