The Ultimate Guide to Log Analysis – a 21 Point Checklist

This checklist is designed for SEOs of all kinds who want to improve their website’s visibility, increase its indexation, and ultimately boost organic traffic. It covers accessibility for proper indexation, optimized site structure, beneficial interlinking, elimination of technical errors, and other aspects of on-site SEO. It gives you an easy-to-follow action plan that shows what to change to rank higher in the SERPs.

This guide covers what a log file is, how to analyze log files, and which log file analysis tools you should be using.


What is log file analysis and why do you need it

Log files provide SEOs with invaluable data that is extremely handy for your daily SEO routine as well as for more experimental tasks. They not only reveal technical issues and show your crawl capacity but also provide valuable SEO insights.

Most importantly, the data obtained from log file analysis provides a bulletproof argument that supports your SEO strategy and experiments. When you have facts to back up your theory, it becomes credible in web developers’ eyes (especially when their agenda doesn’t initially align with yours).

Furthermore, log files could be helpful for conversion optimization.

You can read more on it in our article Logs Analysis For SEO Boost.

Caution! Log file analysis opens the black box. You’ll be amazed by the secrets your website holds.
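To make the idea of a log file concrete, here is a minimal Python sketch that parses a single access-log line. It assumes the widely used Apache/Nginx "combined" log format. The sample line, the `LOG_PATTERN` regex, and the `parse_line` helper are illustrative names rather than part of any particular tool, so the pattern may need adjusting to your server’s actual format.

```python
import re
from datetime import datetime

# Assumption: the server writes the Apache/Nginx "combined" log format.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)

def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["status"] = int(record["status"])
    record["time"] = datetime.strptime(record["time"], "%d/%b/%Y:%H:%M:%S %z")
    return record

# Illustrative sample line, not taken from a real server.
sample = (
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] '
    '"GET /category/shoes HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
record = parse_line(sample)
print(record["url"], record["status"], "Googlebot" in record["agent"])
```

Each parsed record carries the fields that log analysis builds on: the requested URL, the status code, the timestamp, and the user agent that identifies the bot.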

What is Crawl Budget and Why Bother?

Crawl Budget is the number of pages Googlebot crawls and indexes on a website within a given period.

In other words, the crawl budget is the number of URLs Googlebot can and wants to crawl. The crawl budget is limited and defined individually for each website. If your website has more pages than its crawl budget covers, Google will miss the extra pages, which in turn will hurt your site’s performance in search results.

According to Google, several major factors negatively affect a site’s crawlability and indexability. Here they are in order of significance:

1. Faceted navigation and session identifiers

2. On-site duplicate content

3. Soft error pages

4. Hacked pages

5. Infinite spaces and proxies

6. Low quality and spam content

Wasting server resources on pages like these will drain crawl activity from high-value pages, significantly delaying the discoverability of great content on the site.

But the good news is that the crawl budget can be extended.

Define Your Crawl Budget

The very first step in optimizing your crawl budget is checking the site’s crawlability. Let’s start with a quick overview.

The ‘Log Overview’ tab provides you with the general crawl data:

- The Interactive Dynamics Of Bots Visits chart shows how often each bot visits your website and the number of pages it crawls each day

- The Interactive Dynamics Of Subdomains chart demonstrates how subdomains are crawled.

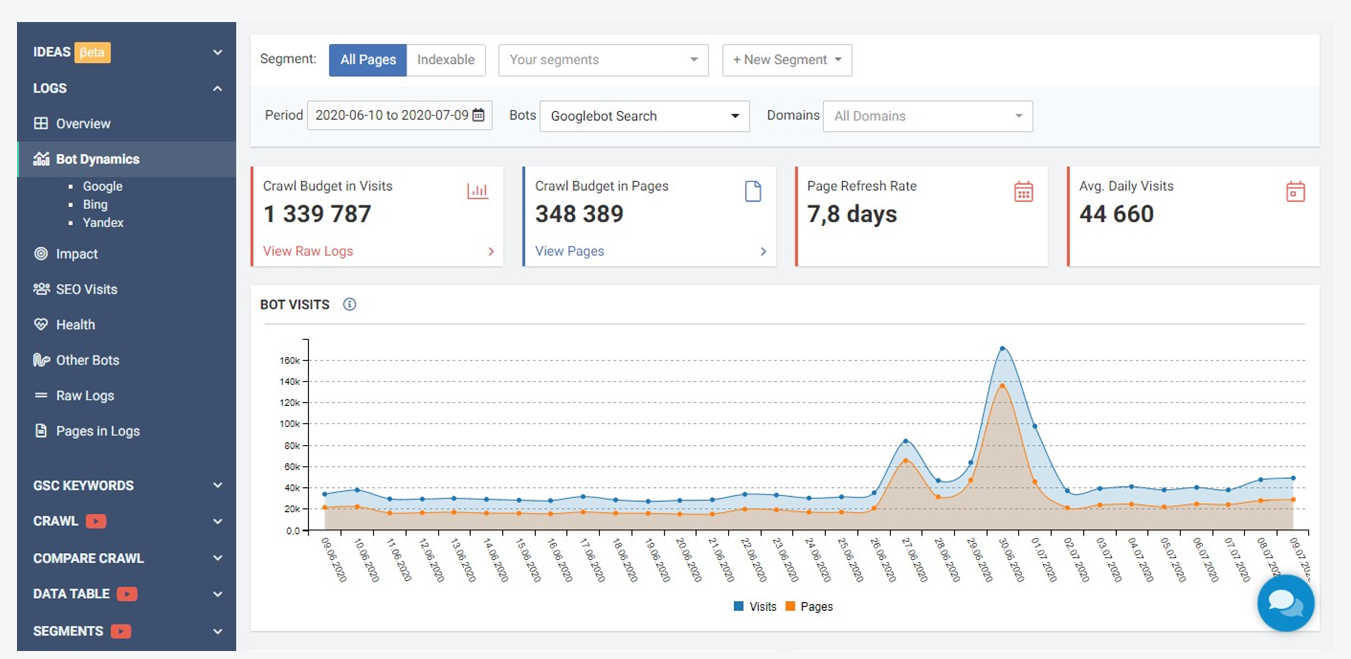

Click on the Bot Dynamics tab for a more detailed review:

This dashboard showcases your crawl budget for each search bot. It can be extremely useful to quickly identify anomalies. You should check for deviations like spikes or declines.

However, the insights derived from this dashboard cannot be viewed independently. So, it’s time to go deeper for an eye-opening experience.
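If you want to reproduce the spike-and-decline check on raw logs, the sketch below shows one possible approach. It counts requests per day for one bot and flags days that deviate strongly from the median. The `hits` data, the function names, and the 50% `tolerance` threshold are assumptions chosen for illustration, not values used by the dashboard.

```python
from collections import Counter
from statistics import median

# Hypothetical pre-parsed log records: one (date, bot name) pair per request.
hits = (
    [("2023-10-01", "Googlebot")] * 100
    + [("2023-10-02", "Googlebot")] * 110
    + [("2023-10-03", "Googlebot")] * 95
    + [("2023-10-04", "Googlebot")] * 30   # sudden decline
    + [("2023-10-05", "Googlebot")] * 105
)

def daily_counts(records, bot):
    """Number of requests per day for the given bot, ordered by date."""
    counts = Counter(day for day, name in records if name == bot)
    return dict(sorted(counts.items()))

def find_anomalies(counts, tolerance=0.5):
    """Days whose count differs from the median by more than `tolerance` (a fraction)."""
    typical = median(counts.values())
    return [day for day, n in counts.items() if abs(n - typical) > tolerance * typical]

googlebot_daily = daily_counts(hits, "Googlebot")
print(find_anomalies(googlebot_daily))
```

A median is used instead of a mean so that a single extreme day does not shift the baseline it is compared against.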

Identify Crawl Budget Waste

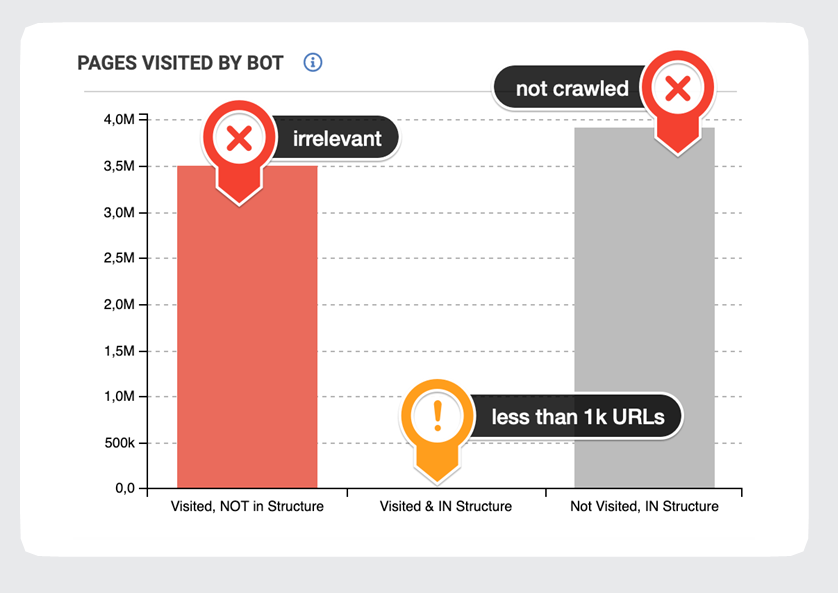

Crawl budget waste is a significant issue. Instead of valuable and profitable pages, Googlebot often crawls irrelevant and outdated pages.

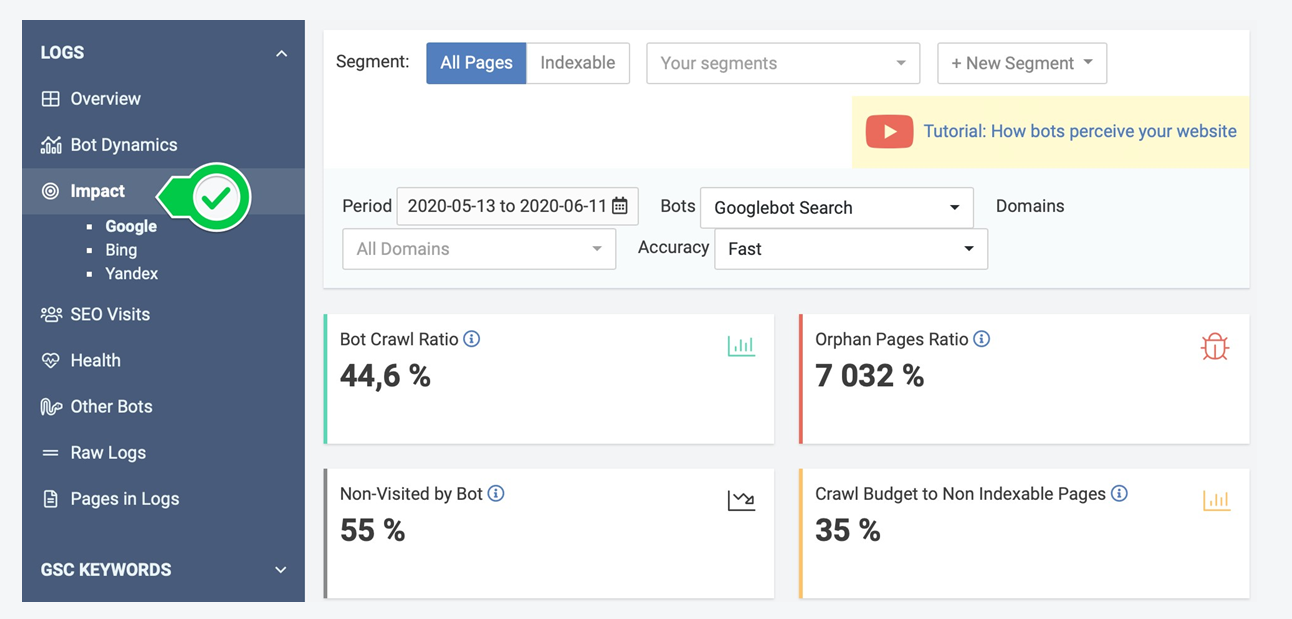

Go to the ‘Impact’ section to evaluate the Crawl Ratio and missed pages:

Once the crawl budget waste is obvious, it’s time for action. Each column is clickable and leads to the list of problematic URLs. JetOctopus crawl data provides you with insights into what problems exist on these pages. This tells you what to change to get all your business pages visited by search bots.

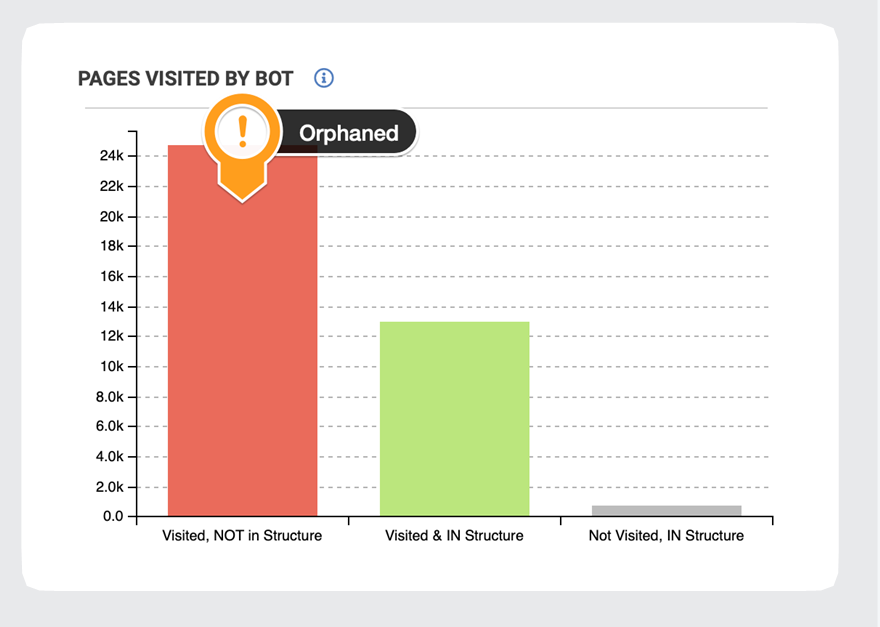

Orphaned Pages

Orphaned pages are pages that have no inlinks pointing to them. They can be irrelevant, for example, leftovers from previous site structures. But they can also be valuable and profitable.

First and foremost, exclude irrelevant orphaned pages from crawling and indexation:

- Look at these pages closer

- Segment this data into categories, directories, etc.

- Identify the most problematic places

- If there are normal pages among them, bring them back into the site structure (link to them)

- Make the trash pages either noindex or delete them.

If these pages are orphaned by mistake, you should include them in the site structure. This would improve their performance.
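The segmentation step can be sketched in a few lines of Python. The example assumes you already have two URL sets: one from a site crawl (pages reachable through links) and one from the logs (pages bots requested). URLs that appear only in the logs are treated as orphaned here, and they are grouped by their first path segment. The URL sets and the `top_directory` helper are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical inputs: URLs found by a crawler vs. URLs requested by bots in the logs.
crawled_urls = {"/", "/shop/shoes", "/shop/bags", "/blog/seo-tips"}
bot_visited_urls = {"/", "/shop/shoes", "/old/promo-2015", "/old/promo-2016", "/tmp/test"}

# Visited by bots but not reachable through the site structure.
orphaned = bot_visited_urls - crawled_urls

def top_directory(url):
    """Return the first path segment of a URL, e.g. '/old' for '/old/promo-2015'."""
    parts = [part for part in urlparse(url).path.split("/") if part]
    return "/" + parts[0] if parts else "/"

# Group orphaned URLs by directory to see where the problem concentrates.
by_directory = Counter(top_directory(url) for url in orphaned)
for directory, count in by_directory.most_common():
    print(directory, count)
```

Directories with the most orphaned URLs are a natural place to start deciding what to relink and what to noindex or delete.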

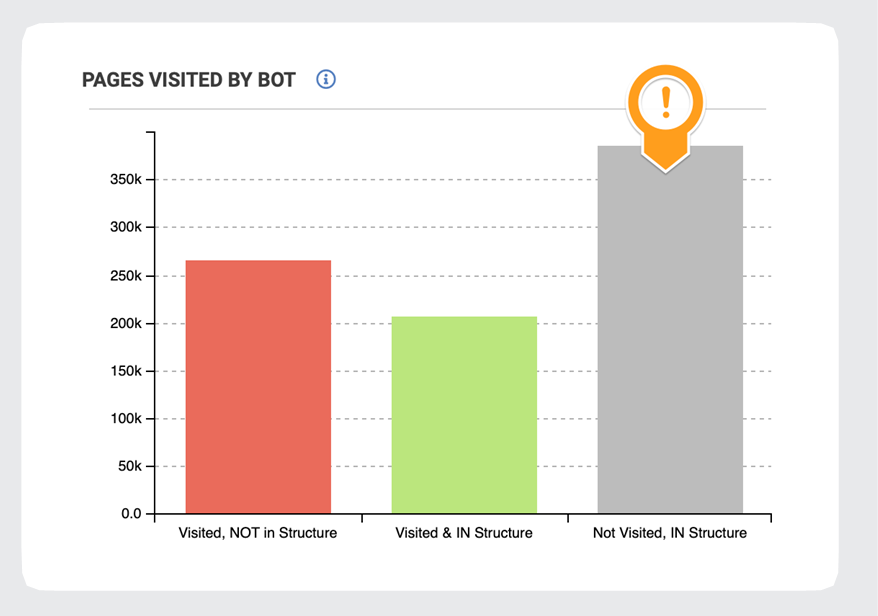

Not visited pages

Crawl budget waste inevitably results in some pages not being visited by Googlebot.

These pages could be ignored for a myriad of reasons, the most common ones being:

- Distance from index (DFI)

- Inlinks

- Content size

- Duplications

- Technical issues.

A closer look at crawl data will help you improve their crawlability, indexability, and rankings.

Read the Case Study to see how TemplateMonster has handled both (orphan and not visited) types of pages.

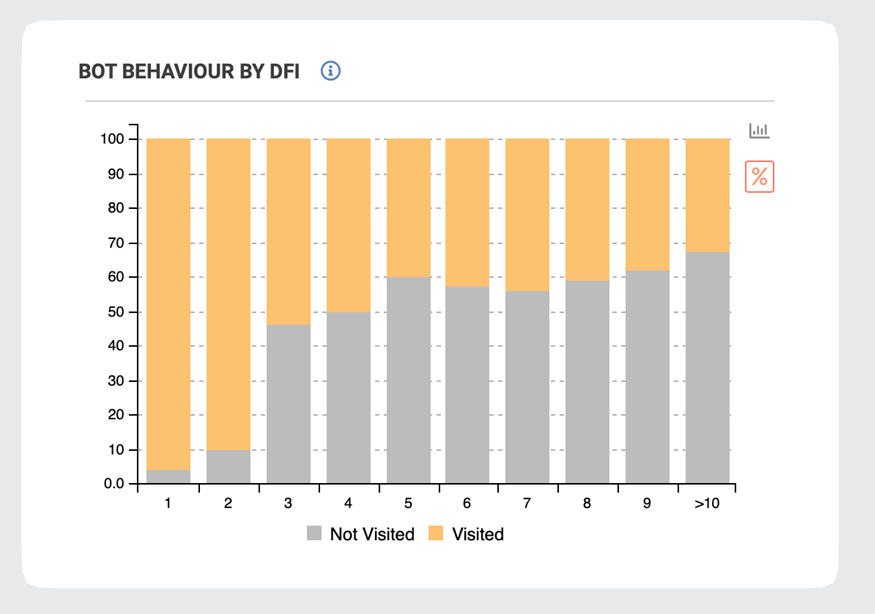

Bot Behaviour by DFI

Distance from index (DFI) is the number of clicks it takes to reach a page from the homepage. It’s an essential aspect that impacts the frequency of Googlebot’s visits.

You can access the dashboard from the ‘Impact’ tab.

The following chart shows that if DFI is deeper than 4, Googlebot crawls only half of the web pages or even less. The deeper the page, the lower the percentage of processed pages:

Indeed, distance from the homepage is very important. Ideally, high-value pages should be 2-3 clicks away from your homepage. Anything that is 4 or more clicks away will be seen by Googlebot as less important.

What to do

Check if these not-visited pages are valuable and profitable. If yes, improve their DFI by linking them from pages with smaller DFI.
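As a rough illustration of how DFI relates to crawl ratio, the sketch below computes click depth with a breadth-first search over an internal link graph and then calculates the share of pages visited by bots at each depth. The `links` graph and `bot_visited` set are made-up data, and the homepage is given a depth of 0 here, which is an assumption (some tools start counting at 1).

```python
from collections import defaultdict, deque

# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/a", "/b"],
    "/a": ["/a/1"],
    "/b": [],
    "/a/1": ["/a/1/x"],
    "/a/1/x": ["/a/1/x/deep"],
    "/a/1/x/deep": [],
}
# Hypothetical set of URLs that bots requested according to the logs.
bot_visited = {"/", "/a", "/b", "/a/1"}

def compute_dfi(graph, home="/"):
    """Breadth-first search from the homepage; depth = minimum number of clicks."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in graph.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth

def crawl_ratio_by_dfi(depth, visited):
    """Share of pages at each depth that appear in the bot logs."""
    total = defaultdict(int)
    seen = defaultdict(int)
    for page, d in depth.items():
        total[d] += 1
        if page in visited:
            seen[d] += 1
    return {d: seen[d] / total[d] for d in sorted(total)}

dfi = compute_dfi(links)
print(crawl_ratio_by_dfi(dfi, bot_visited))
```

Breadth-first search is used because it reaches every page by the shortest click path first, which matches the definition of DFI.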

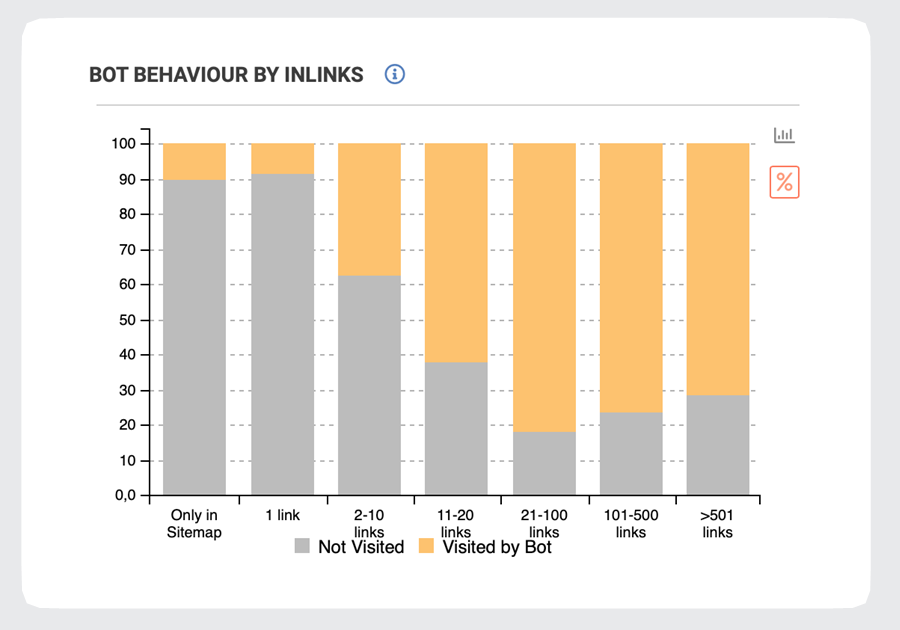

Bot Behavior by Inlinks

Googlebot prioritizes pages that have lots of external and internal links pointing to them. This means that the number of inlinks is a significant factor impacting crawlability and rankings.

With JetOctopus data you can optimize your interlinking structure and empower your most valuable pages.

You can find this chart on the Impact page:

As you can see, there’s a correlation between the number of inlinks and crawled pages.

What to do

Firstly, check whether these not-visited pages are high-quality and profitable. If yes, increase the number of inlinks, especially from relevant pages with better crawl frequency.
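A simple way to prioritize this work on your own data is to count internal inlinks per page and list the not-visited pages with the fewest. The sketch below assumes a list of (source, target) link pairs exported from a crawl; the `edges`, `pages`, and `bot_visited` values are invented for the example.

```python
from collections import Counter

# Hypothetical crawl export: one (source page, target page) pair per internal link.
edges = [
    ("/", "/a"), ("/", "/b"), ("/a", "/b"),
    ("/b", "/a"), ("/a", "/c"), ("/c", "/b"),
]
pages = {"/", "/a", "/b", "/c", "/d"}
bot_visited = {"/", "/a", "/b"}

# Count unique inlinks per target, ignoring self-links.
inlinks = Counter(target for source, target in set(edges) if source != target)

# Not-visited pages, weakest (fewest inlinks) first.
weakest = sorted((inlinks[page], page) for page in pages - bot_visited)
print(weakest)
```

Pages at the top of this list that are also valuable are the first candidates for additional internal links.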

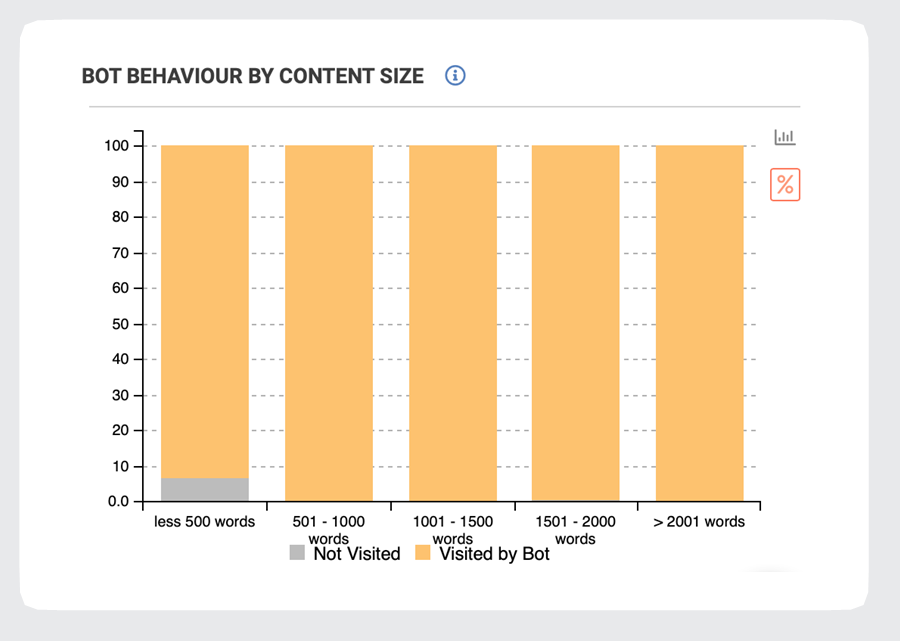

Content Size

Content plays a significant role in SEO. Google’s algorithms are constantly evolving to deliver “…useful and relevant results in a fraction of a second.” The more relevant and engaging your content, the better your site’s performance.

This chart from the Impact tab demonstrates the correlation between content size and bot visits:

Pages with fewer than 500 words have the worst crawl ratio and are often considered low-quality pages.

What to do

Firstly, decide whether you need these pages. If yes, enhance the page content using Google’s Guidelines.

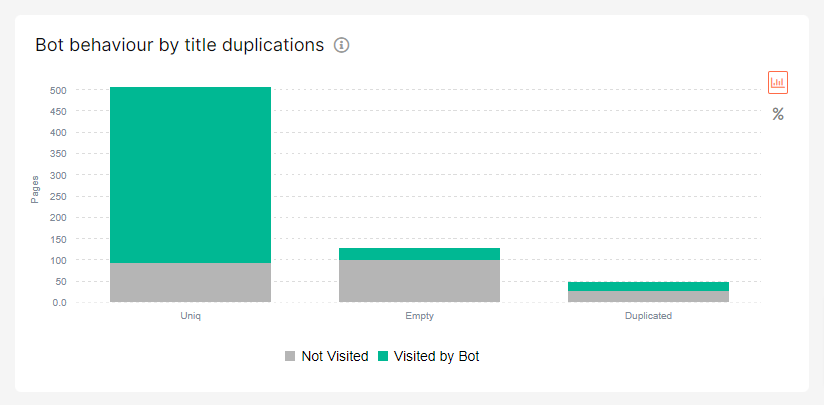

Title tag

Titles are critical to giving both users and search bots a quick insight into the content of a result and its relevance to the keyword. High-quality titles on your web pages ensure a healthy crawl rate, indexation, and rankings.

There are two major crawlability issues associated with the title tags:

- Duplicated titles

- Empty titles.

These errors most likely cause insufficient crawlability. Check this chart on Bot Behaviour by Title Duplications:

What to do

Firstly, decide whether you need these pages with empty or duplicate titles. Optimize title tags using Google’s Guidelines.
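Both title issues are easy to detect once you have a URL-to-title mapping from a crawl. The following sketch is one possible check, with made-up `pages` data and a hypothetical `audit_titles` helper. Titles are compared case-insensitively after trimming whitespace, which is a design choice rather than a rule.

```python
from collections import defaultdict

# Hypothetical crawl export: URL -> contents of the <title> tag.
pages = {
    "/shoes": "Buy Shoes Online",
    "/shoes?page=2": "Buy Shoes Online",
    "/bags": "Bags",
    "/tmp": "",
    "/new": "   ",
}

def audit_titles(titles):
    """Return (URLs with empty titles, {normalized title: URLs sharing it})."""
    empty = sorted(url for url, title in titles.items() if not title.strip())
    groups = defaultdict(list)
    for url, title in titles.items():
        key = title.strip().lower()
        if key:
            groups[key].append(url)
    duplicates = {key: sorted(urls) for key, urls in groups.items() if len(urls) > 1}
    return empty, duplicates

empty_titles, duplicate_titles = audit_titles(pages)
print(empty_titles)
print(duplicate_titles)
```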

Define most visited pages

“URLs that are more popular on the Internet tend to be crawled more often to keep them fresher in our index.” – Google.

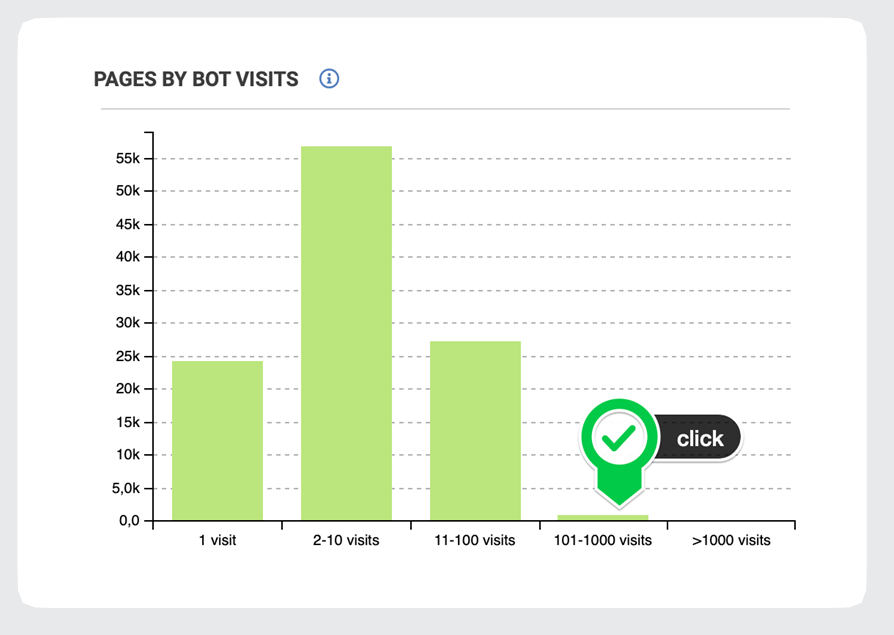

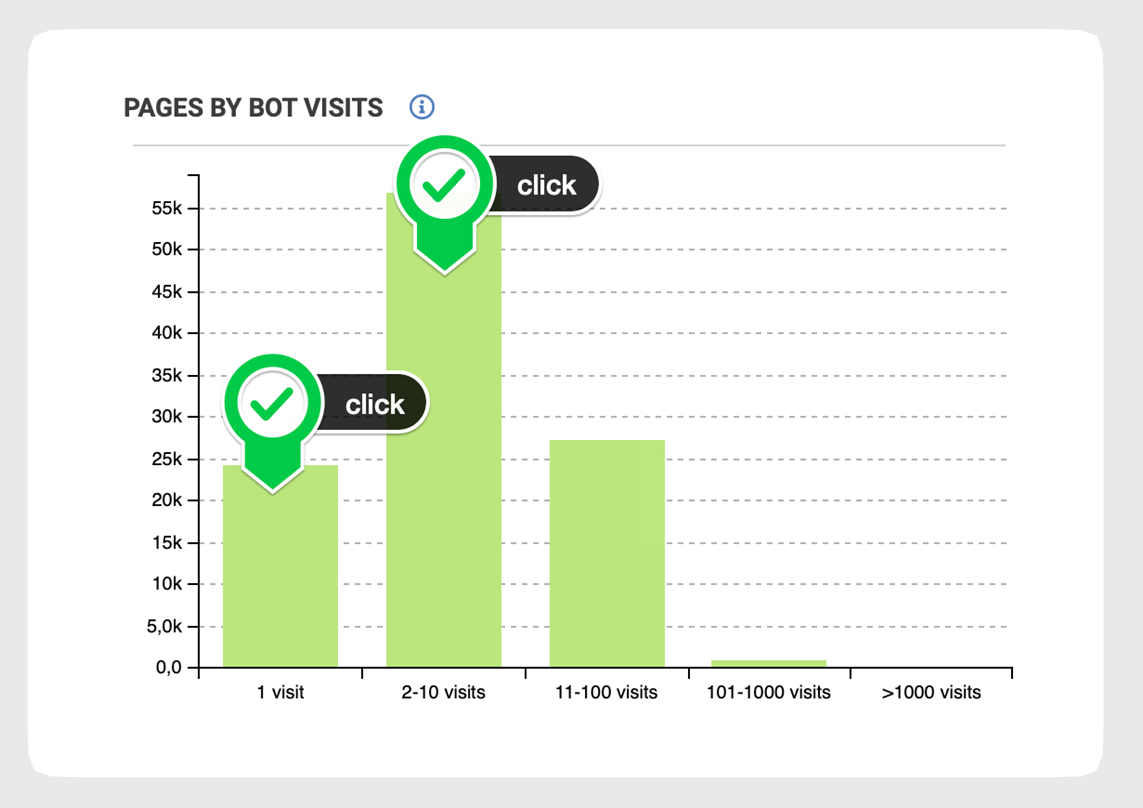

Pages that are most visited by Googlebot are considered as the most important ones. You should pay the closest attention to such URLs, keeping them evergreen and accessible. You can find the most visited URLs by clicking on charts in the ‘Pages by Bot Visits’ report:

You can add links from these pages to weaker but relevant ones to improve their crawlability and rankings.

Define least visited pages

By clicking on ‘Pages by Bot Visits’ you can evaluate the least visited pages.

Even though the frequency of Googlebot’s visits doesn’t necessarily correlate with good rankings, it’s a good practice to look for high-value pages among those with only a couple of visits. If there are some profitable pages, you can analyze our data and devise a data-driven strategy to improve these pages’ overall performance:

- Decrease page’s DFI

- Revise interlinking

- Optimize page’s content

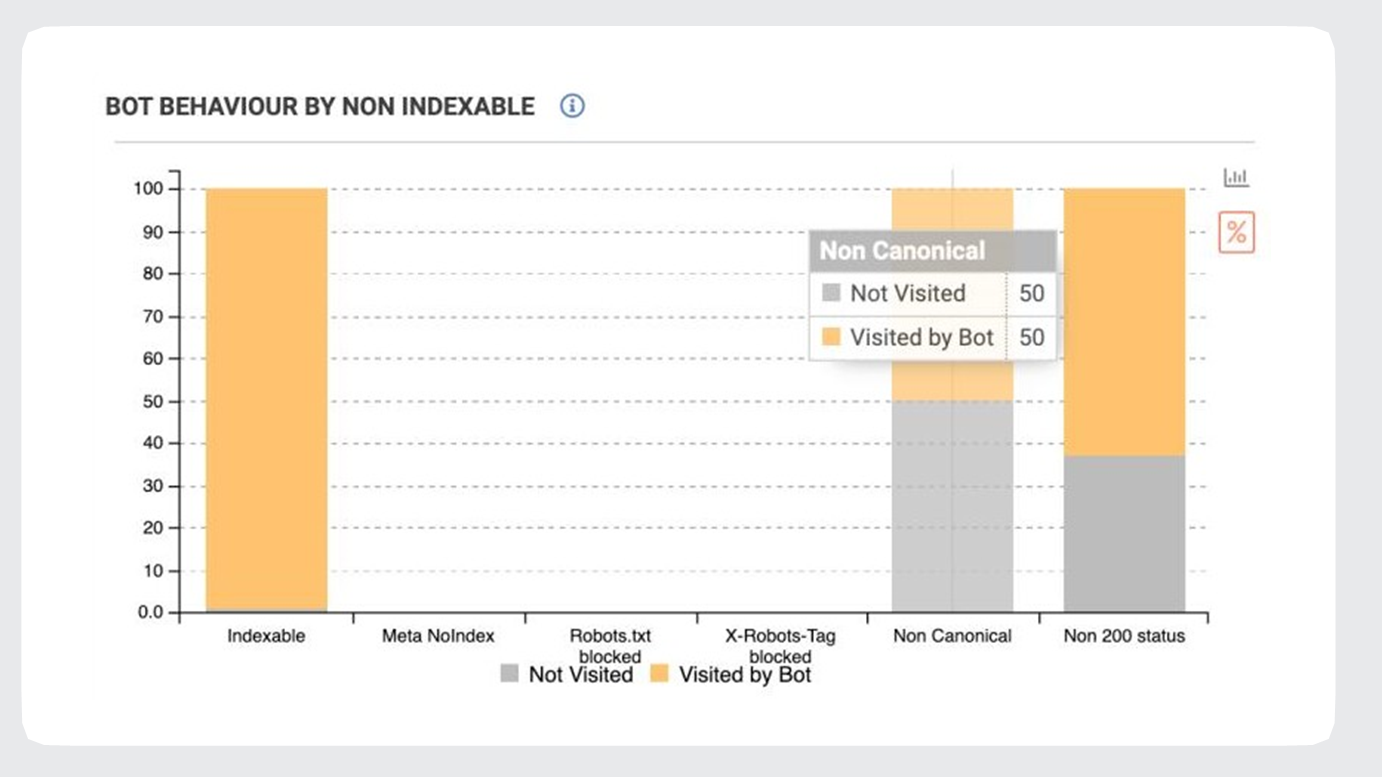

Non-indexable pages with Googlebot’s visits

Googlebot visits non-indexable pages, such as:

- Non-200 status code pages

- Non-canonical pages

- Pages blocked in robots meta tag and X-Robots-Tag

To ensure Googlebot isn’t visiting non-indexable pages, go to the Impact Dashboard:

The most common reason for such pages being crawled is internal links pointing to them. Another possibility is that some other page references the non-indexable page as its canonical or as an alternate language version (hreflang tags). This, in turn, causes crawl budget waste.

What to do

Check the non-indexable pages and do your best to make sure they:

- Don’t have any inlinks

- Are not referenced as the canonical URL by other pages

- Are not used in hreflang tags
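To see how much bot activity goes to non-indexable pages, you can combine crawl data with hit counts from the logs. The sketch below applies the three criteria listed earlier in this section (non-200 status, non-canonical, blocked by robots directives). The `Page` structure, the sample pages, and the `bot_hits` counts are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Page:
    url: str
    status: int
    canonical: str  # URL declared in rel=canonical, "" if none
    robots: str     # combined robots meta tag / X-Robots-Tag directives

def is_indexable(page):
    """Apply the three non-indexability criteria to one crawled page."""
    if page.status != 200:
        return False
    if page.canonical and page.canonical != page.url:
        return False
    if "noindex" in page.robots.lower():
        return False
    return True

# Hypothetical crawl data and bot hit counts from the logs.
crawl = [
    Page("/shoes", 200, "/shoes", "index,follow"),
    Page("/shoes?sort=price", 200, "/shoes", ""),
    Page("/old", 301, "", ""),
    Page("/cart", 200, "", "noindex"),
]
bot_hits = {"/shoes": 50, "/shoes?sort=price": 20, "/old": 5}

# Non-indexable pages that still receive bot visits, most visited first.
wasted = sorted(
    ((bot_hits[p.url], p.url) for p in crawl
     if not is_indexable(p) and bot_hits.get(p.url, 0) > 0),
    reverse=True,
)
print(wasted)
```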

For more details: Wanna know which pages bots visit/ignore and why? Use logs analyzer

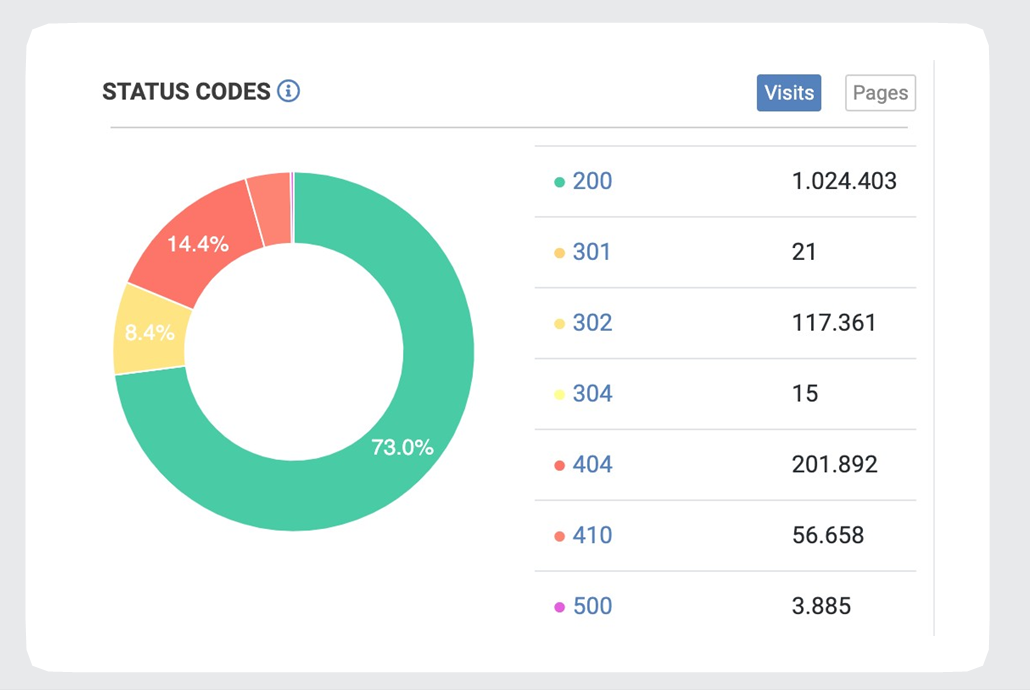

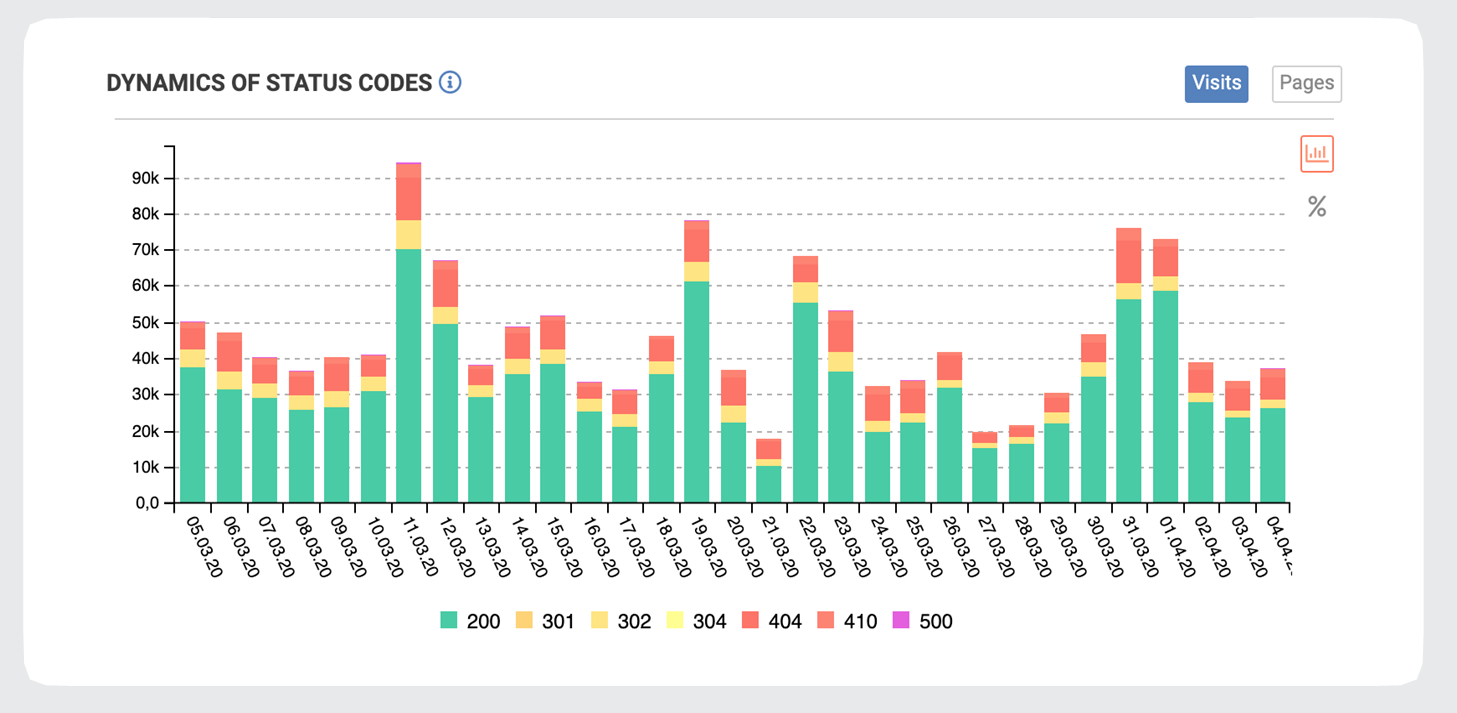

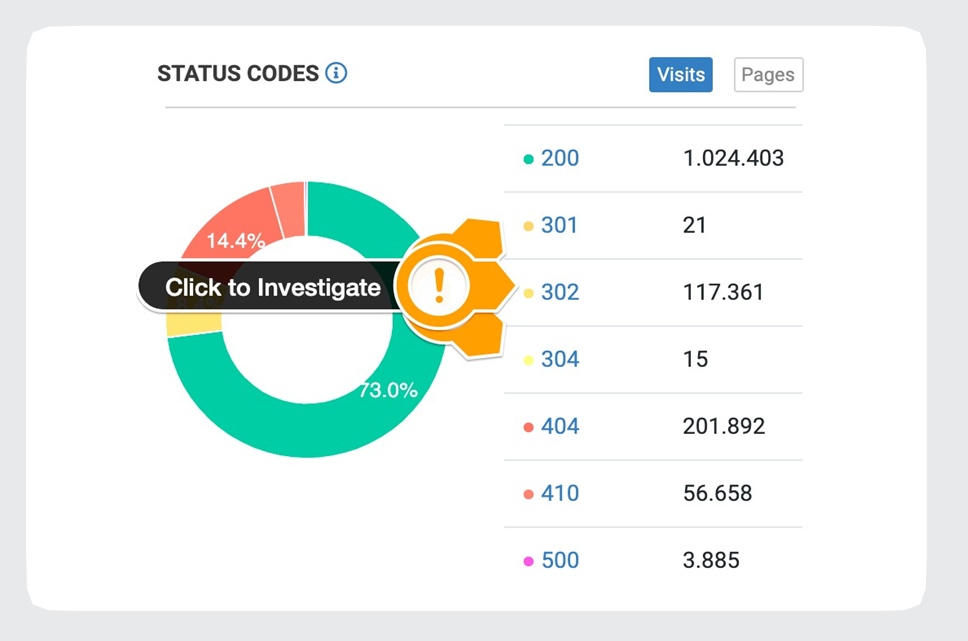

HTTP status codes

HTTP status codes have a major impact on SEO. Make sure your crawl budget is not spent on 3xx-4xx pages, and keep an eye on 5xx errors.

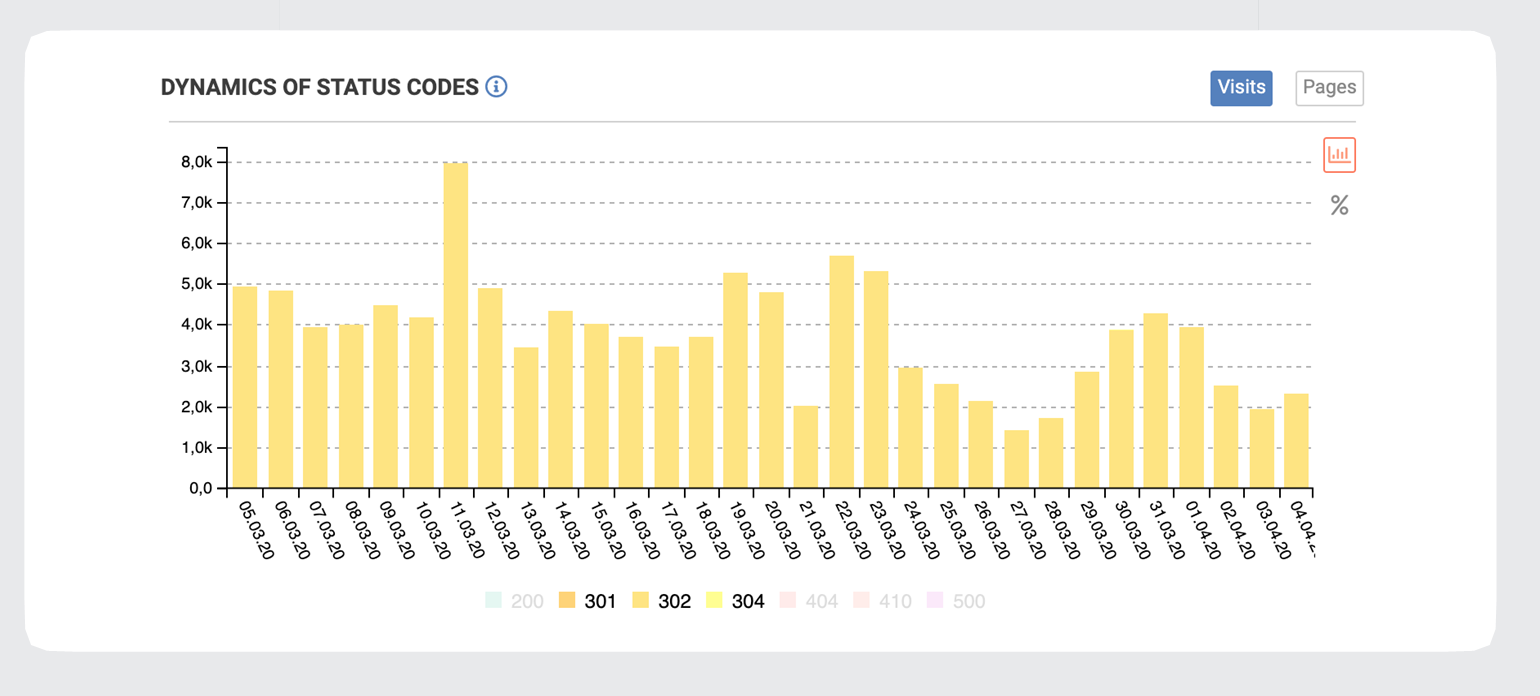

You can find the breakdown of status codes on the Bot Dynamics dashboard:

We will discuss what you should do with:

- 5xx status codes

- 3xx status codes

- 4xx status codes
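If you work with raw logs, a status code breakdown takes only a few lines. This sketch groups the status codes returned to a bot into classes (2xx, 3xx, 4xx, 5xx) and reports each class as a percentage. The `bot_statuses` list is sample data, not real measurements.

```python
from collections import Counter

# Hypothetical status codes returned to Googlebot, one per logged request.
bot_statuses = [200, 200, 301, 404, 200, 500, 302, 404, 200, 503]

def status_breakdown(statuses):
    """Percentage of requests per status class, e.g. {'2xx': 40.0, ...}."""
    classes = Counter(f"{code // 100}xx" for code in statuses)
    total = sum(classes.values())
    return {cls: round(100 * n / total, 1) for cls, n in sorted(classes.items())}

breakdown = status_breakdown(bot_statuses)
print(breakdown)
```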

5xx Errors

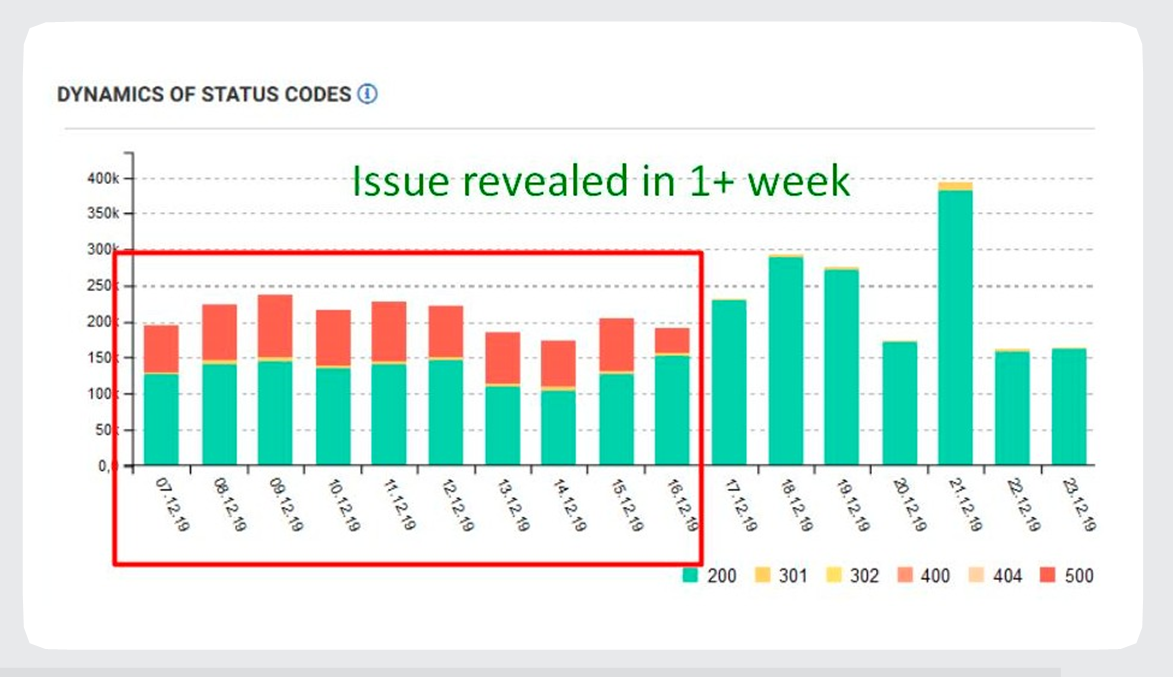

500 server errors and server response time are crucial because they affect access to your site: search engine bots and human visitors alike will be lost. A significant number of 5xx errors or connection timeouts slows down crawling.

You can quickly spot critical issues via the Health and Bot Dynamics Dashboards. If something goes wrong for Googlebot, you’ll be able to notice it in real-time and act accordingly.

What to do

Investigate and fix those issues as soon as you encounter them. If those errors are frequent, consider optimizing your server’s capacity.

3xx Status Codes

3xx status codes are used for redirection. The most common are permanent redirects (301 status codes) and temporary redirects (302 and 307 status codes).

Bot Dynamics and Health dashboards contain such data:

What to do

Investigate 3XXs. Why are they being crawled?

- Check internal links to these pages

- Check if other pages reference them as canonical

- Check if they are used in hreflang tags

- Check if they are still included in XML Sitemaps

- Check chains of redirects
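Checking redirect chains can be sketched as follows, assuming you have a mapping from each redirecting URL to its target (for example, built from crawl data). The `redirects` map, the `follow` and `problem_redirects` helpers, and the `max_hops` limit are all illustrative assumptions.

```python
# Hypothetical redirect map: redirecting URL -> redirect target.
redirects = {
    "/a": "/b",
    "/b": "/c",
    "/x": "/y",
    "/loop1": "/loop2",
    "/loop2": "/loop1",
}

def follow(url, redirect_map, max_hops=10):
    """Follow redirects from `url`; return (chain of URLs, True if a loop was hit)."""
    chain = [url]
    while chain[-1] in redirect_map and len(chain) <= max_hops:
        target = redirect_map[chain[-1]]
        if target in chain:
            return chain + [target], True
        chain.append(target)
    return chain, False

def problem_redirects(redirect_map):
    """Starting URLs whose redirects loop or take more than one hop."""
    report = {}
    for url in redirect_map:
        chain, is_loop = follow(url, redirect_map)
        if is_loop or len(chain) > 2:
            report[url] = (chain, is_loop)
    return report

for url, (chain, is_loop) in problem_redirects(redirects).items():
    print(url, " -> ".join(chain), "(loop)" if is_loop else "")
```

Chains found this way can usually be shortened by pointing the first URL directly at the final destination.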

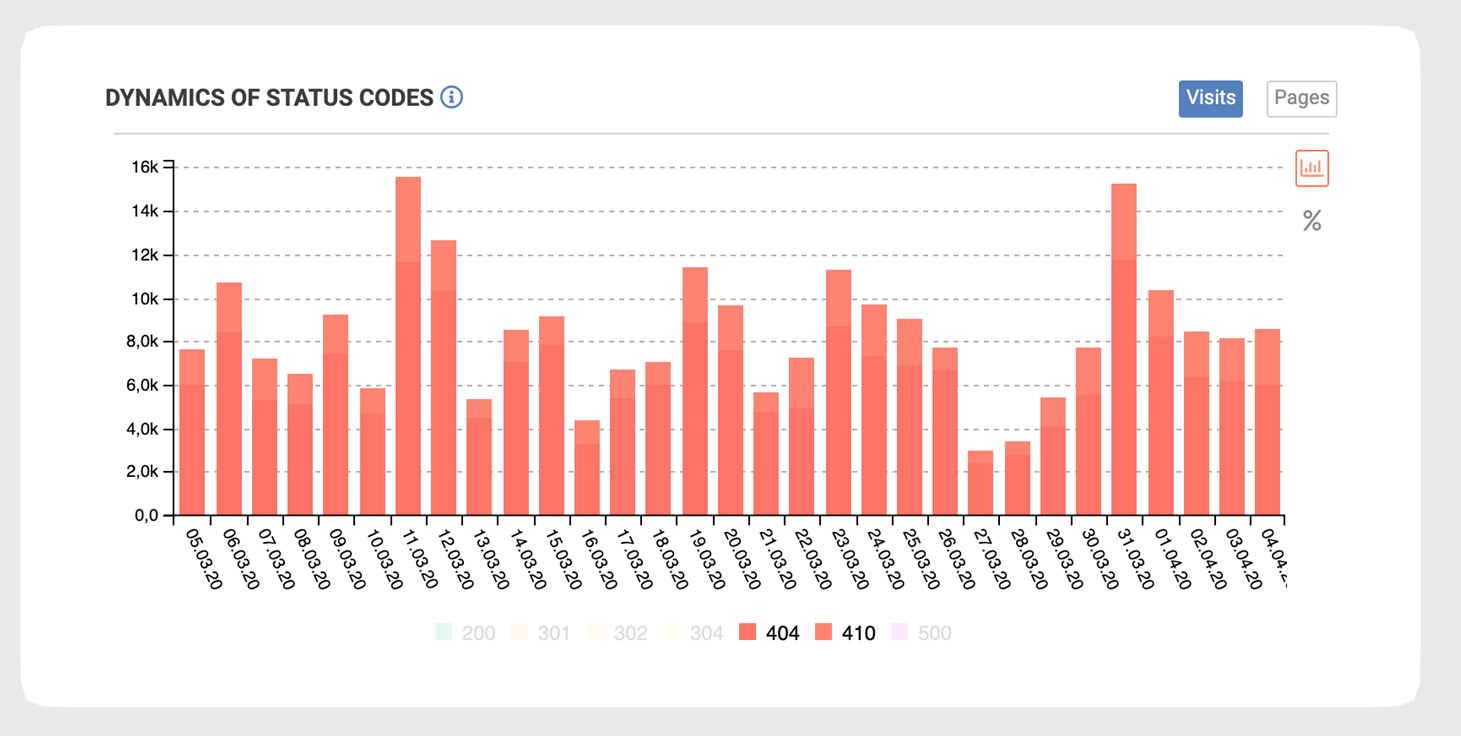

4xx Status Codes

The most common 4XXs are:

- 404 Not Found – the file or page wasn’t found by the server. It doesn’t indicate whether it is missing permanently or only temporarily.

- 410 Gone – more permanent than a 404; it means that the page has been removed for good.

Google spends crawl budget on these pages:

What to do

Investigate 4XXs. Why are they being crawled?

- Check internal links to these pages

- Check if other pages reference them as canonical

- Check if they are used in hreflang tags

- Check if they are still included in XML Sitemaps

- Check chains of redirects

If the pages returning 404 codes are high-authority pages with lots of traffic or Googlebot’s visits, you should employ 301 redirects to the most relevant page possible.

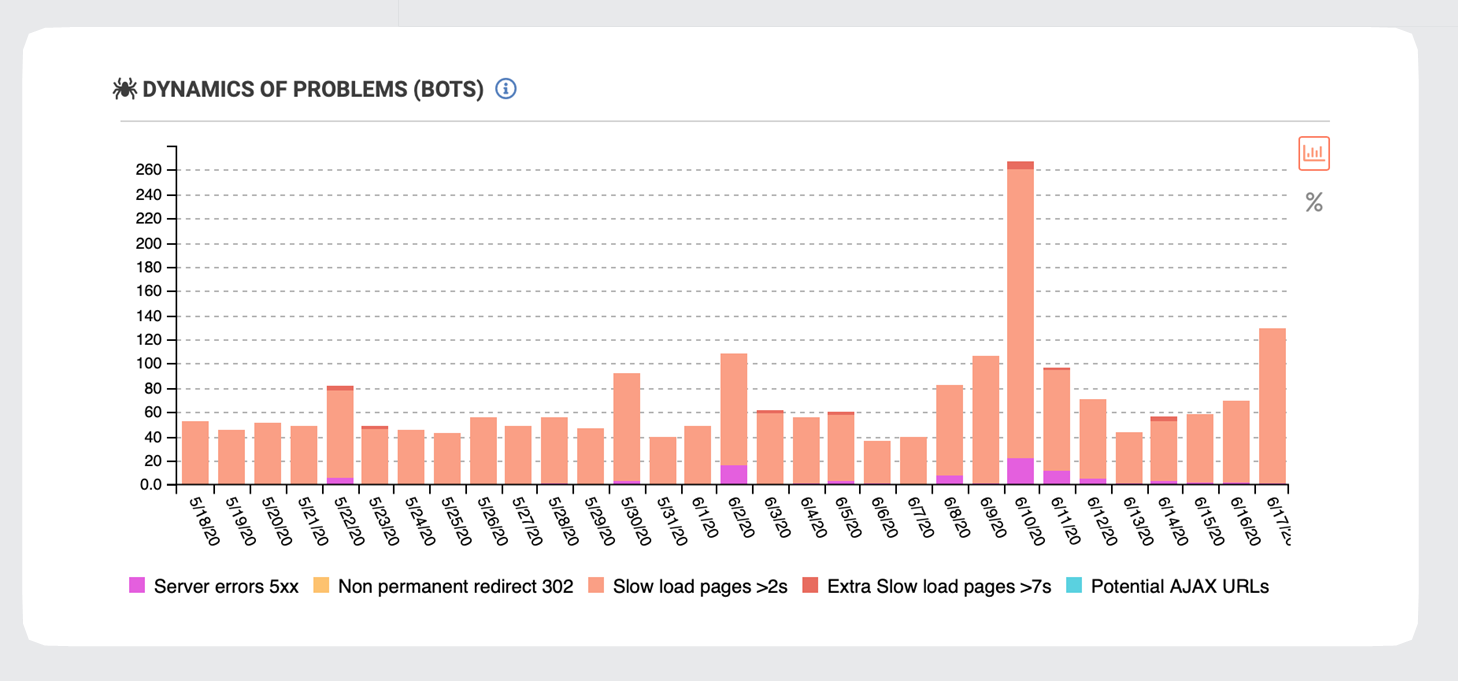

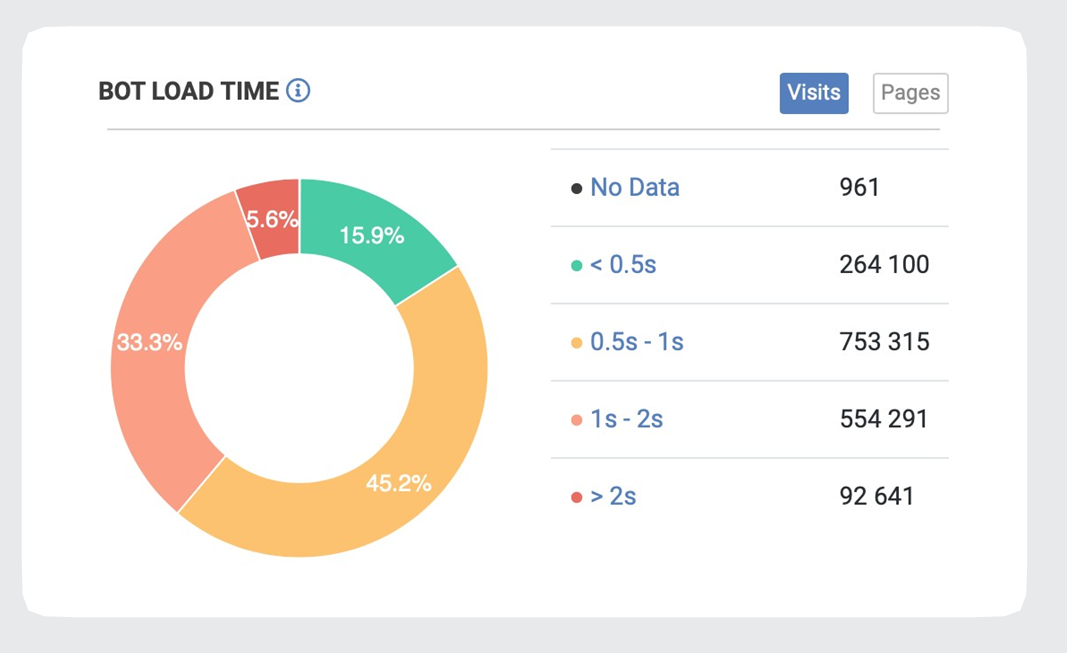

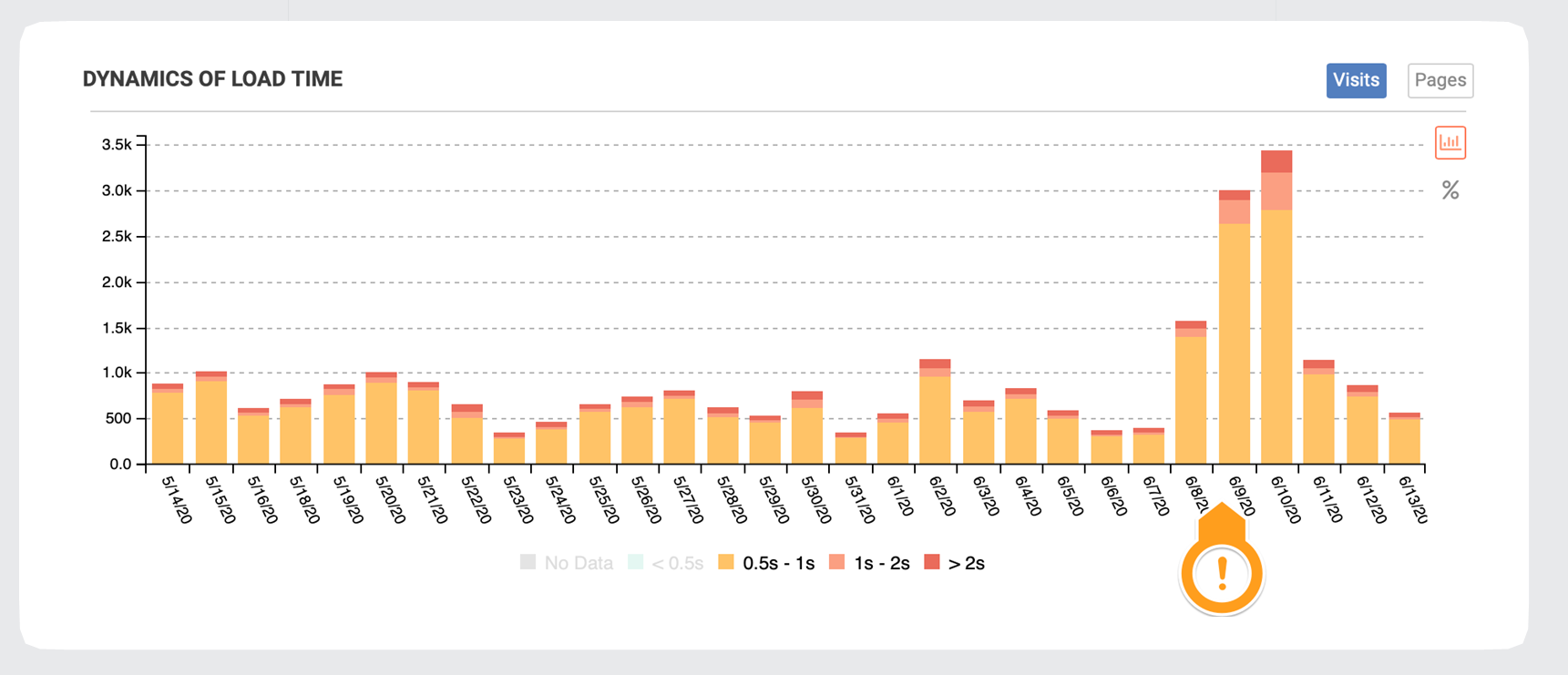

Load time

Site speed significantly affects your crawl budget. For Googlebot, a speedy site is a sign of healthy servers, so it can get more content over the same number of connections.

Check the HTML load time for search bots and how it changes over time on the Bot Dynamics dashboard:

What to do

Improving site speed is a rather complex task that usually has to be handled by your web development team.
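Before handing the problem to developers, it helps to quantify it. The sketch below summarizes response times per bot with a median and a 95th percentile. It assumes your log format records a response time for each request (many servers can be configured to do so). The `samples` data and helper names are hypothetical.

```python
import math
from collections import defaultdict
from statistics import median

# Hypothetical (bot, response time in milliseconds) pairs taken from parsed logs.
samples = [
    ("Googlebot", 120), ("Googlebot", 150), ("Googlebot", 180),
    ("Googlebot", 200), ("Googlebot", 2400),
    ("bingbot", 90), ("bingbot", 110),
]

def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def load_time_summary(records):
    """Median and 95th percentile response time per bot."""
    by_bot = defaultdict(list)
    for bot, ms in records:
        by_bot[bot].append(ms)
    return {
        bot: {"median_ms": median(times), "p95_ms": percentile(times, 95)}
        for bot, times in by_bot.items()
    }

summary = load_time_summary(samples)
print(summary)
```

Reporting the 95th percentile alongside the median exposes occasional very slow responses that an average would hide.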

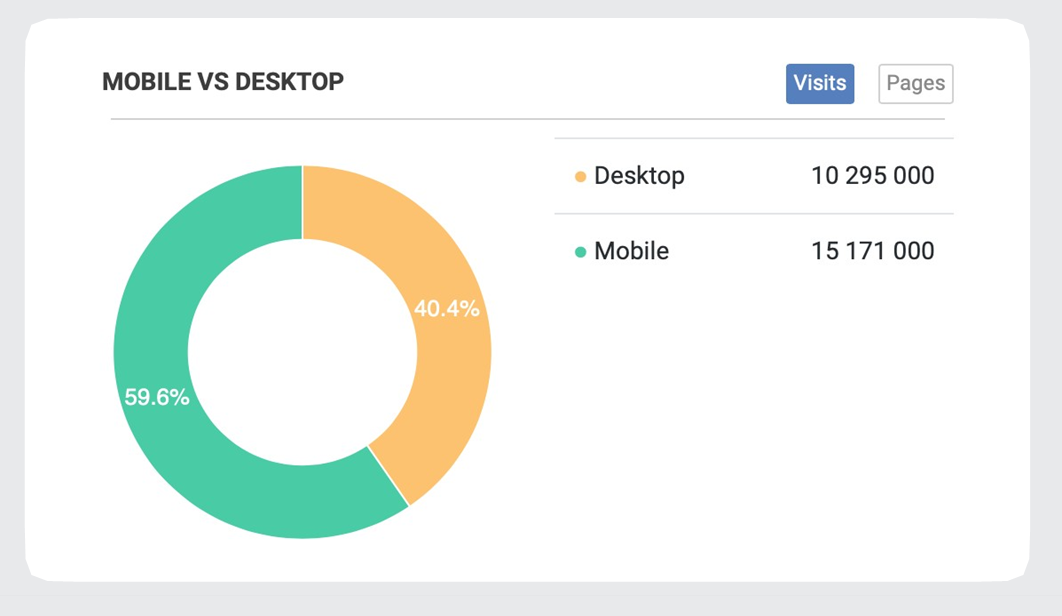

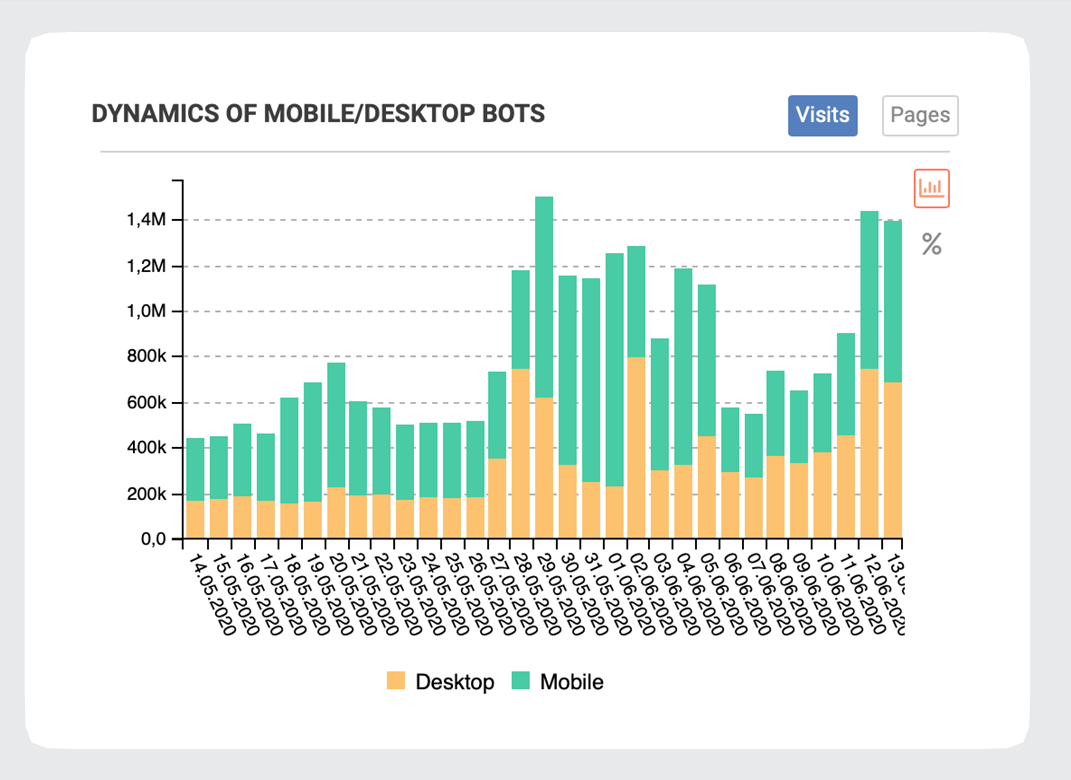

Mobile-First Indexing

Google announced that it would switch to mobile-first indexing for all websites starting in September 2020, a deadline it later postponed. In the meantime, it continued moving sites to mobile-first indexing once its systems recognized that a site was ready.

If your website has not been switched to mobile-first indexing yet, keep an eye on Google’s mobile bot and its behavior.

What to do

Make sure your website is mobile-friendly. Check Google’s Guidelines.
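One way to keep an eye on Google’s mobile bot in raw logs is to compare the share of requests from the smartphone and desktop Googlebot user agents. The sketch below is a simplified heuristic: it treats any user agent containing `Googlebot/` and `Mobile` as the smartphone crawler. The sample user agent strings (including the Chrome version) are illustrative, and since user agents can be spoofed, this check alone does not verify that a request really came from Google.

```python
from collections import Counter

# Illustrative user agent strings; the Chrome version number is a placeholder.
DESKTOP_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SMARTPHONE_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Mobile Safari/537.36 "
    "(compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)

def googlebot_type(user_agent):
    """Return 'smartphone', 'desktop', or None if the agent is not Googlebot."""
    if "Googlebot/" not in user_agent:
        return None
    return "smartphone" if "Mobile" in user_agent else "desktop"

# Hypothetical user agents from ten logged requests.
agents = [DESKTOP_UA] * 2 + [SMARTPHONE_UA] * 6 + [BROWSER_UA] * 2

counts = Counter(kind for kind in map(googlebot_type, agents) if kind)
smartphone_share = counts["smartphone"] / sum(counts.values())
print(counts, smartphone_share)
```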

User Experience

A detailed log file analysis also showcases how organic visitors perceive your site and the technical issues they are facing. This section is especially important for optimizing conversions.

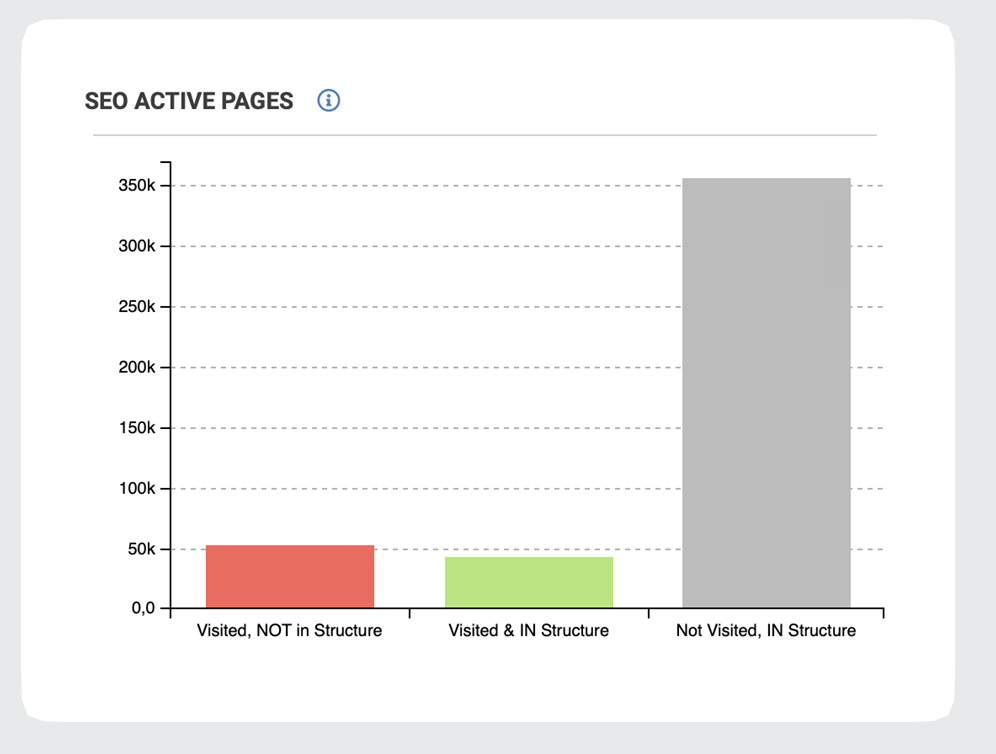

1. Evaluate how organic visitors explore your website. Refer to this bar chart in JetOctopus’ log file analyzer.

- The red bar represents the orphaned pages visited by organic users. By including these pages in the site’s structure, you can improve their rankings and receive even more organic visitors.

- The grey bar shows the pages within your site structure that had no organic visitors. You should review these pages and devise a strategy to improve their SEO performance.

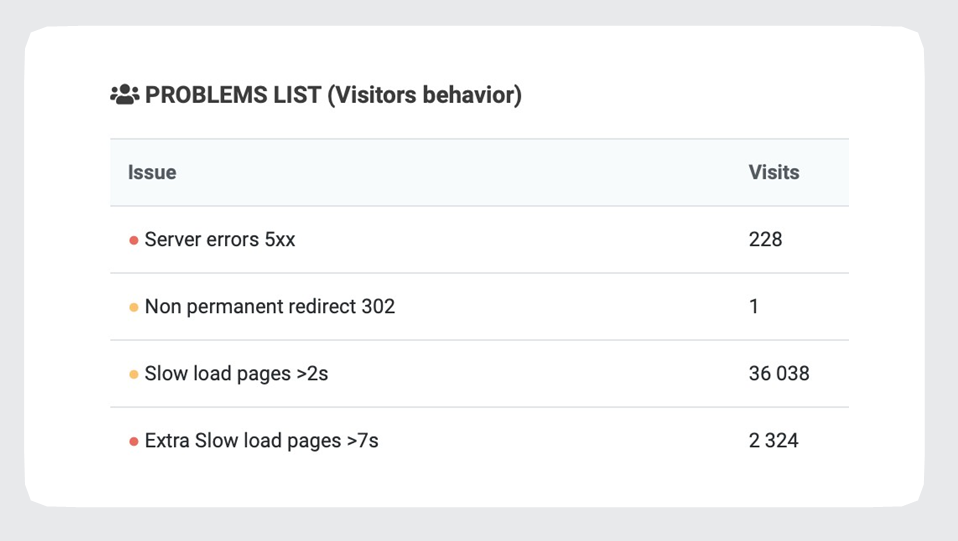

2. Identify technical issues encountered by your visitors:

Resolving these errors will improve your site’s user experience and, in turn, your conversion rate.

SEO Efficiency

JetOctopus offers critical data on a site’s SEO efficiency through charts that connect three datasets, namely logs, crawl data, and GSC data. This shows how efficient your site is in terms of SEO and whether the crawl budget is being spent optimally.

Thus, by integrating these three datasets you can analyze the pages visited by search bots, the pages in site structure, and the pages getting impressions.

Here are a few SEO insights you can check for optimizing your pages.

- Crawl budget waste – This is the part of the orange circle that doesn’t overlap with the blue circle. These pages are visited by bots but are not present in the site structure. Hence, they are potentially wasting your crawl budget. You can either remove these pages or add them to your site structure.

- The not visible part of your website – This is the part of the blue circle that doesn’t overlap with the orange circle. These are the pages that are present in your site structure but aren’t visited by bots.

- The pages visited by bots that aren’t getting impressions – The intersection of the orange and blue circles shows the pages that are visited by bots and present in the site structure but aren’t getting impressions.
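These segments map naturally onto set operations. In the sketch below, `in_logs` stands for the orange circle (URLs visited by bots), `in_crawl` for the blue circle (URLs in the site structure), and `in_gsc` for URLs with impressions in Google Search Console. All three sets are made-up sample data, and the variable names are assumptions for illustration.

```python
# Hypothetical URL sets from the three data sources.
in_logs = {"/", "/shoes", "/bags", "/old/promo", "/tmp/test"}   # visited by bots
in_crawl = {"/", "/shoes", "/bags", "/hats", "/scarves"}        # in site structure
in_gsc = {"/", "/shoes"}                                        # getting impressions

# Visited by bots but absent from the site structure: potential crawl budget waste.
crawl_budget_waste = in_logs - in_crawl

# In the site structure but never visited by bots: the not visible part of the site.
not_visited = in_crawl - in_logs

# Visited by bots and in the site structure, yet without impressions.
visited_no_impressions = (in_logs & in_crawl) - in_gsc

print(sorted(crawl_budget_waste))
print(sorted(not_visited))
print(sorted(visited_no_impressions))
```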

Conclusion:

To sum up, a log file analyzer is a must-have tool to boost your site’s crawlability, indexability, and rankings.

In combination with crawl data and Google Search Console data, log file analysis will give you powerful insights to increase your organic traffic and conversions.

With in-depth log file analysis using the JetOctopus Log tool, you’ll be able to:

- Monitor your server health.

- Optimize the site’s crawl budget.

- Discover technical issues.

- Eliminate useless and orphaned pages.

- Optimize the site’s structure and interlinking.

- Define non-active SEO pages.

- Find pages with thin or duplicated content.

Choose the way of log file integration: 3 ways of log file integration with JetOctopus.

Tech SEO experts share their wins with Log Files Analysis

Co-founder and technical SEO lead at Snippet Digital

Unlike the standard web crawlers and tools, Log files will tell you the full story. They can tell you how search engines are behaving on your site. Which pages they frequent, which pages they encounter problems with, how fast your server is responding, and many other essential things. Now, you take this information and overlay it on top of the web crawler data, and it will open up a whole different world. What if you can verify errors reported by a Web crawler? How about overlaying your website conversion data on top of the crawled URLs? How about seeing how a specific page is crawled over time by search engines as it goes through various updates? How about finding orphaned pages? You can do this a lot more accurately than using conventional methods. There are many use cases for log file analysis and it’s often more powerful when you combine it with a Web crawler.

Log File analysis is a good way to better understand Google’s algorithms, including which pages are important (with intensive crawling and hundreds of hits per day) and which are not (with minimal crawling activity). It is also a great way to receive instant feedback from Google since when we analyze SERP positions, there is a huge delay between what was done and seeing the results of such. The biggest win for me has been optimizing my site’s structure after analyzing orphan pages and crawl depth, which has led to significant results.

COO of tranio.com

It doesn’t matter how clever your internal linking system is – Google will always have its own view on how to crawl your website. Google’s behavior on different language versions of our website (which are almost identical) was surprisingly rather different.

Nowadays SEO requires you to push as many experiments as your dev team can. Even at the cost of extensive testing which sometimes leads to unexpected results.

With fast and highly customizable JetOctopus filtering and analytics tools, we were able to find errors and even whole problematic page clusters (50k+ 404 pages still under crawl).

Using the JetOctopus log analysis, we’ve reviewed our indexing policy. After about a year that helped us achieve 80–90% crawled pages by Google of all known, and the number of active pages increased by 60%.

Implementing improvements from JetOctopus reports on a regular basis, we have prevented a few (possibly big) indexing issues in our tests and now it is a vital part of our day-to-day routine.

Well, one thing that comes to my mind, hasn’t been covered that much and what many don’t necessarily understand is that the data in logs is exact. Not like in GA where blockers, the timing of the data push, sampling, etc. can affect the data. In most cases, logs are 100% accurate. So when you analyze the data it is trustworthy by default. Ofc it is also unfiltered by default so might need to clean it up a bit. Like internal traffic etc.

But from that data whatever analysis you do after proper filtering is pretty much something you can be confident to make decisions on.

The biggest challenge in log analysis – is to understand how all this data is connected to your website. What it all means. Without it, you can’t make the right conclusion, and all the insights can be inaccurate.

I am glad that I can analyze millions of log data lines overlaying them with crawl for 3 Mln urls and GSC data for a month. Because it gives you really interesting data joins: how many noindex pages are crawled by Googlebot; what are the most effective pages by clicks in logs and a lot of other questions can be answered with the help of logs overlapped with other datasets.

My last colossal finding was a segment of the pages which got bot’s visits over the given period of time. We’ve found millions of trash pages crawled by Googlebot. We closed all these pages in robots.txt and saved the crawl budget for the profitable pages. I know that it will lead to better indexability shortly.

Log analysis is one of the most important parts of the field of technical SEO. Don’t be afraid to jump into it! It allows you to have an exact and unlimited trace of the paths from robots. Some technical problems are only visible thanks to the logs, which makes the importance of an analysis primordial in a global SEO strategy.

The other great strength of the logs is to be able to cross this set of data with a crawl to easily and quickly identify orphaned URLs or hit pages that should not be. In constantly changing sites, the logs provide a historical trace of the evolution.

All this data allows you to demystify the notions of Crawl Budget and optimize the path of robots to important pages.

Bot activity check is my everyday task while I am drinking my morning coffee.

Website log analysis, together with convenient merging of data tables, also gave me an opportunity to shape interlinking effectively and get rid of unnecessary pages. For example, we identified that Googlebot had been knocking on our API, making requests hundreds of times per day. So, we optimized the crawl budget and reduced the load on our servers.

Doing SEO on the top level with large or huge websites without analyzing logs is just impossible.

For example, without logs on an e-commerce site you won’t be able to know:

which page type is most crawlable, which categories can cause problems, is your internal linking strategy correct, does your filter navigation work properly? Or some really basic stuff like if there are new problems after a recent dev release? Are you dealing with pages with 5XX status? Do you properly handle 404 and 301 pages?

Also, keep in mind that with tools like JetOctopus you can combine your logs with GSC or crawl data. Imagine you can easily see the correlation between content quality and crawl rate or from a business value perspective – the number of products in a specific category and crawl rate. By combining logs with custom extraction, the only thing that is limiting you is your own imagination.

Elena Bespalova, SEO Strategist at Rank Media Agency

Log analysis has become a part of my SEO routine. It’s really helpful not only for regular health checks but also for more advanced tasks.

Log analysis showcases weak spots and provides useful insights. To me, the greatest challenge was a migration of a storied website with 300k+ indexed URLs and even more URLs inside the black box. With the log analysis, we discovered tons of orphaned pages, inconsistency in interlinking structure, and technical issues.

With this data, the migration became less challenging. We could successfully map all the required redirects, optimize the site’s structure, prevent migration of technical errors, and optimize the interlinking structure. Long story short: the migration proved to be successful and organic traffic increased.

Crawl budget is one of the main components of effective website scanning. The garbage technical pages appear on websites quite often (especially on the old ones), and it’s difficult to identify those pages without proper analysis.

With the help of log analysis of our site, we have found tens of thousands of junk pages that should not have been visited by Googlebot. We have managed to significantly optimize the crawlability of important pages after we’ve worked with the website structure, tags, etc.

Logfile analysis allowed us to identify many unacceptable server responses (503, 302 in particular), which were received by Googlebot in large numbers during the crawling process. These problems were solved on a server-side in a short time.

Having a lot of design templates on our site, the log analysis showed us a clear picture of the bots’ behavior on pagination pages. Our goal is to index tens of thousands of such templates, hence analyzing logs is extremely insightful for our website.

Also, the log files allowed us to identify the pages most visited by bots. In one click, we received a huge amount of information and loaded the backlog for months ahead.

When I performed my first log analysis with JetOctopus… I was shocked! I realized that Googlebot keeps attending hundreds of thousands of outdated, junk, and “gone forever” pages, even out of the Google index. They were consuming our website’s crawling budget dramatically. Another insight – now we know the minimum number of internal links that guarantee that your target page will be visited by Googlebot. And what’s exciting – there are dozens of new SEO insights ahead.