Sitemap as a True Damager

While most SEOs agree that submitting a sitemap to Google Search Console is important, they may not know the intricacies of implementing one in a way that drives SEO KPIs. Here is how to find and fix problems in a website's structure and optimize a sitemap for both Googlebot and users.

About the e-commerce website:

- E-commerce website with 22+ years on the market

- 1 million pages

- 1.3 million monthly visits

The main challenge:

To find and fix problems in the website’s structure and optimize a sitemap both for Googlebot and for users.

What was done?

- The website was crawled with JetOctopus to find all technical issues in its structure.

- The log analyzer was used to understand how Googlebot crawls the sitemap.
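
Log analysis boils down to filtering server access logs for Googlebot requests. The sketch below is minimal and assumes logs in the common Apache/Nginx "combined" format. It treats any user agent containing "Googlebot" as Googlebot, which can be spoofed, so verify crawler IPs via reverse DNS in production. The function names and the sitemap file naming are hypothetical, and this is not how JetOctopus's log analyzer works internally.

```python
import re
from collections import Counter

# Assumption: Apache/Nginx "combined" log format:
# ip ident user [time] "METHOD path PROTOCOL" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Yield (path, status) for requests whose user agent claims to be Googlebot.

    The user-agent check alone can be spoofed; verify IPs via reverse DNS in production.
    """
    for line in lines:
        m = LOG_LINE.match(line)
        if m and "Googlebot" in m.group("agent"):
            yield m.group("path"), int(m.group("status"))


def sitemap_hits(lines):
    """Count Googlebot requests per sitemap file.

    Hypothetical naming rule: a path containing 'sitemap' and ending in .xml or .xml.gz.
    """
    counts = Counter()
    for path, _status in googlebot_hits(lines):
        if "sitemap" in path and path.endswith((".xml", ".xml.gz")):
            counts[path] += 1
    return counts
```

Running `sitemap_hits` over several weeks of logs shows how often each sitemap file is actually fetched. Extending it to group by status code shows whether those fetches succeed.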

What problems were detected?

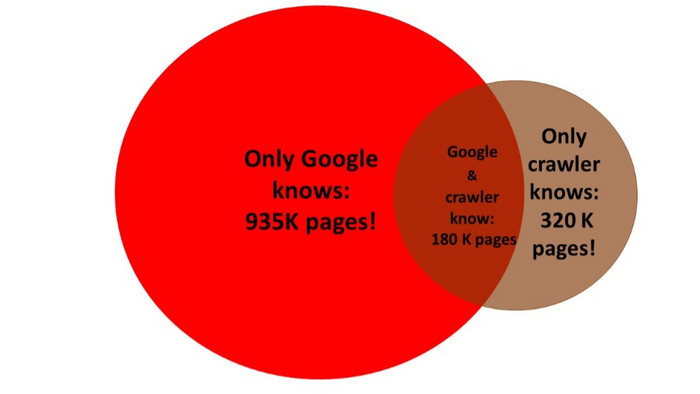

- 1 million pages that aren't part of the website's structure (orphan pages) but are regularly visited by Googlebot. Crawl budget is wasted on this unknown content.

- 320K pages that are valuable for the website aren't indexed by Google. Only 180K pages are crawled effectively by Googlebot.

- Around 320K links in the sitemap point to out-of-stock products.

- Flawed logic for adding new products: it used to take up to 2 weeks for new products to get indexed.
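
Most of the findings above come down to differences between URL sets:

- the pages a crawler reaches through internal links
- the pages Googlebot requests in the logs
- the sitemap entries
- stock status

The sketch below is minimal and assumes each source has been exported as a list of normalized URLs. The function and key names are hypothetical, and this is not how JetOctopus computes its reports.

```python
def audit_url_sets(structure_urls, googlebot_urls, sitemap_urls, out_of_stock_urls):
    """Compare URL sets; all inputs are assumed to be normalized URLs or paths."""
    structure = set(structure_urls)        # found by crawling internal links
    crawled = set(googlebot_urls)          # requested by Googlebot in the log window
    sitemap = set(sitemap_urls)            # listed in the submitted sitemap(s)
    out_of_stock = set(out_of_stock_urls)  # from the product database (hypothetical export)
    return {
        # Googlebot spends crawl budget here, but nothing on the site links to these pages
        "orphans_crawled": crawled - structure,
        # Linked from the site, yet never requested by Googlebot in the log window
        "not_crawled": structure - crawled,
        # Sitemap entries that point at products that can't be bought
        "out_of_stock_in_sitemap": sitemap & out_of_stock,
    }
```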

What recommendations did we give?

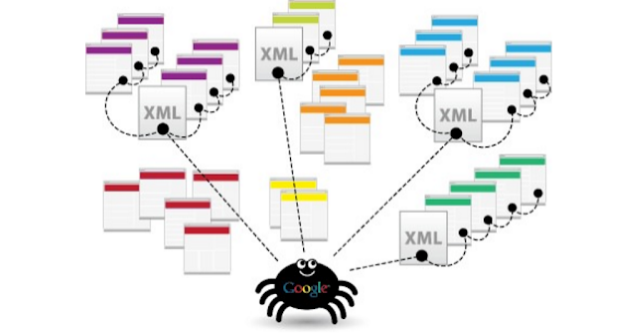

1. Change the logic of sitemap generation. For e-commerce websites, it's crucial to show new products in search results as soon as they are added or updated on the website. Googlebot regularly reads the website's sitemap to discover fresh content, so the sitemap should be regenerated regularly to reflect the current catalog (we recommend doing it every week).
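
Weekly regeneration can be sketched as a small job over the product feed. This is a minimal sketch, assuming a feed with a URL, a stock flag, and a last-updated date. The `Product` fields and the file names are hypothetical. The job does three things:

- it keeps only in-stock products
- it writes `<lastmod>` so fresh items stand out
- it splits the output into files of at most 50,000 URLs, listed in a sitemap index, as the sitemaps.org protocol requires for a catalog of this size

It does not check the protocol's separate 50 MB per-file size limit.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file URL limit in the sitemaps.org protocol


@dataclass
class Product:  # hypothetical shape of a product feed row
    url: str
    in_stock: bool
    updated: date


def build_urlset(products):
    """Build one <urlset> with <loc> and <lastmod> for each product."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for p in products:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = p.url
        ET.SubElement(url, "lastmod").text = p.updated.isoformat()
    return urlset


def build_sitemaps(products, base_url):
    """Return {filename: xml_bytes}: chunked product sitemaps plus a sitemap index."""
    # Only in-stock products go into the sitemap, newest first
    live = sorted((p for p in products if p.in_stock),
                  key=lambda p: p.updated, reverse=True)
    files = {}
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for start in range(0, len(live), MAX_URLS_PER_SITEMAP):
        chunk = live[start:start + MAX_URLS_PER_SITEMAP]
        name = f"sitemap-products-{start // MAX_URLS_PER_SITEMAP + 1}.xml"
        files[name] = ET.tostring(build_urlset(chunk), encoding="utf-8",
                                  xml_declaration=True)
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = f"{base_url}/{name}"
        ET.SubElement(entry, "lastmod").text = max(p.updated for p in chunk).isoformat()
    files["sitemap-index.xml"] = ET.tostring(index, encoding="utf-8",
                                             xml_declaration=True)
    return files
```

Only the index file needs to be submitted. It points Googlebot at every product sitemap, and its `<lastmod>` values signal which files changed since the last run.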

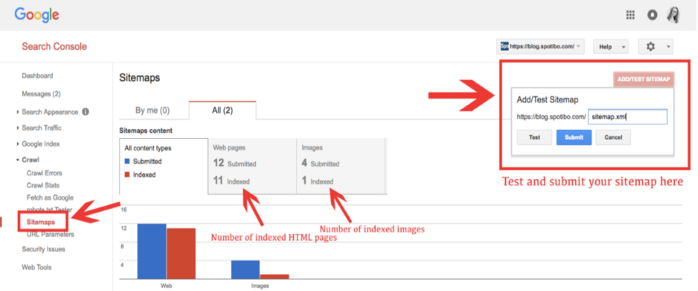

2. Resubmit the new sitemaps through Google Search Console. Decide which pages on the website should be crawled by Google, and determine the canonical version of each page. You can create your sitemap manually or use one of the many third-party tools to generate it for you.
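
One way to apply the canonical rule is to list only self-canonical URLs, meaning pages whose `<link rel="canonical">` points back at themselves. The sketch below is minimal and uses only the standard library. It assumes that a page with no canonical tag is its own canonical, and it compares URLs as exact strings, so any normalization (trailing slashes, letter case) would need to happen first. The class and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> in a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        a = dict(attrs)
        rel_tokens = (a.get("rel") or "").lower().split()
        if "canonical" in rel_tokens and a.get("href"):
            self.canonical = a["href"]


def canonical_of(page_url, html):
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    # Assumption: a page without a canonical tag is treated as its own canonical
    return urljoin(page_url, finder.canonical) if finder.canonical else page_url


def sitemap_candidates(pages):
    """Keep only self-canonical URLs; `pages` yields (url, html) pairs."""
    return [url for url, html in pages if canonical_of(url, html) == url]
```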

3. Remove out-of-stock products from the sitemap.

Former Google engineer Matt Cutts said that e-commerce sites with hundreds of thousands of pages should set the date a page will expire using the `unavailable_after` robots meta tag. This way, when a product is added, you can immediately set when its page will expire, based on an auction date or a go-stale date.
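
Google documents `unavailable_after` as a value of the robots meta tag (and of the `X-Robots-Tag` HTTP header). It accepts dates in widely adopted formats such as ISO 8601. The sketch below is minimal and renders the tag from a product's expiry date. It assumes the shop already computes that date, for example from an auction end or a go-stale date. The function name is hypothetical.

```python
from datetime import datetime, timezone


def unavailable_after_meta(expires_at: datetime) -> str:
    """Render a robots meta tag asking Google not to show the page after expires_at."""
    if expires_at.tzinfo is None:
        # A naive datetime has no time zone, so the cut-off would be ambiguous
        raise ValueError("expires_at must be timezone-aware")
    # ISO 8601 timestamp in UTC, e.g. 2024-06-30T23:59:59+00:00
    stamp = expires_at.astimezone(timezone.utc).isoformat(timespec="seconds")
    return f'<meta name="robots" content="unavailable_after: {stamp}">'
```

The returned string goes into the product page's `<head>` when the product is published.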

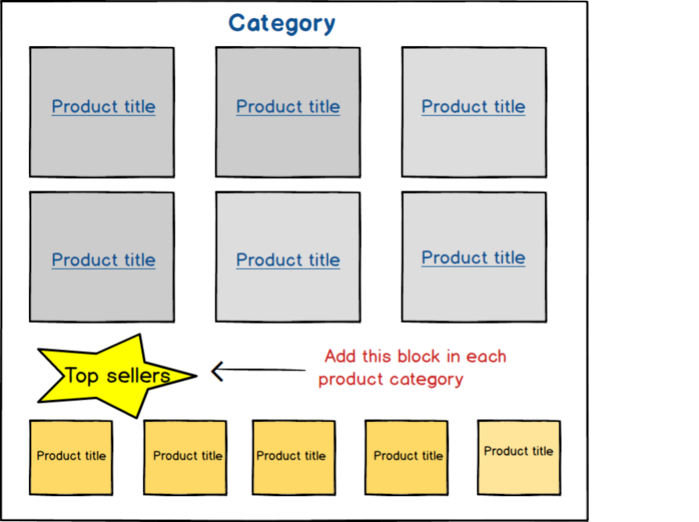

4. Add a block with the most popular and priority products to each product category. Proper internal linking makes it easier for Googlebot to discover and crawl your pages.
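
The category block can be prototyped as a per-category selection of products to link. This is a minimal sketch, assuming each product record carries a category, a stock flag, a popularity score, and a manual priority flag (all hypothetical fields). The ranking rule puts priority products first, then sorts by popularity. That is one reasonable choice, not necessarily the one used on this site.

```python
from collections import defaultdict
from typing import NamedTuple


class Item(NamedTuple):  # hypothetical product record
    url: str
    category: str
    in_stock: bool
    popularity: int  # e.g. orders in the last 30 days (assumed metric)
    priority: bool   # manually flagged priority product (assumed flag)


def category_blocks(items, top_n=10):
    """Map each category to up to top_n in-stock product URLs to link from its page."""
    by_category = defaultdict(list)
    for item in items:
        if item.in_stock:  # don't spend internal links on unavailable products
            by_category[item.category].append(item)
    return {
        category: [
            it.url
            # priority products first, then by popularity, highest first
            for it in sorted(group, key=lambda it: (not it.priority, -it.popularity))[:top_n]
        ]
        for category, group in by_category.items()
    }
```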

The JetOctopus team recommends reviewing Google Search Console's guide on how to submit a new sitemap for crawling. It also contains useful information on solving common sitemap problems.

Conclusion:

- For e-commerce sites, correct sitemap generation is one of the most crucial things: new products should be added immediately, and out-of-stock products should be removed from the current sitemap. It is a real opportunity to increase sales.

- Careful handling of canonical tags (canonical vs. non-canonical pages) is your silver bullet.

- Your internal linking structure is your rocket booster. Don't underestimate it.

Read more: 2 Different Realities: Your Site Structure & How Google Perceives It