Sum up for 2024: an innovative year

2024 has been a landmark year for JetOctopus! We’ve made waves at top industry conferences worldwide, including BrightonSEO (UK), E-commerce Berlin Expo (Germany), and TechSEOConnect (US), connecting with leaders and innovators in the SEO and e-commerce space.

We’re proud to welcome big-name brands like Delivery Hero, HubSpot, Trendyol, Bukalapak, Wallapop, and many more to our growing list of clients. These partnerships showcase the trust and value companies place in JetOctopus to elevate their digital presence.

On top of that, we’ve taken our platform to the next level—technically more advanced, robust, and ready to deliver even greater results for our users. The future looks bright, and we can’t wait to see what’s next!

Here is a technical recap of all the platform updates.

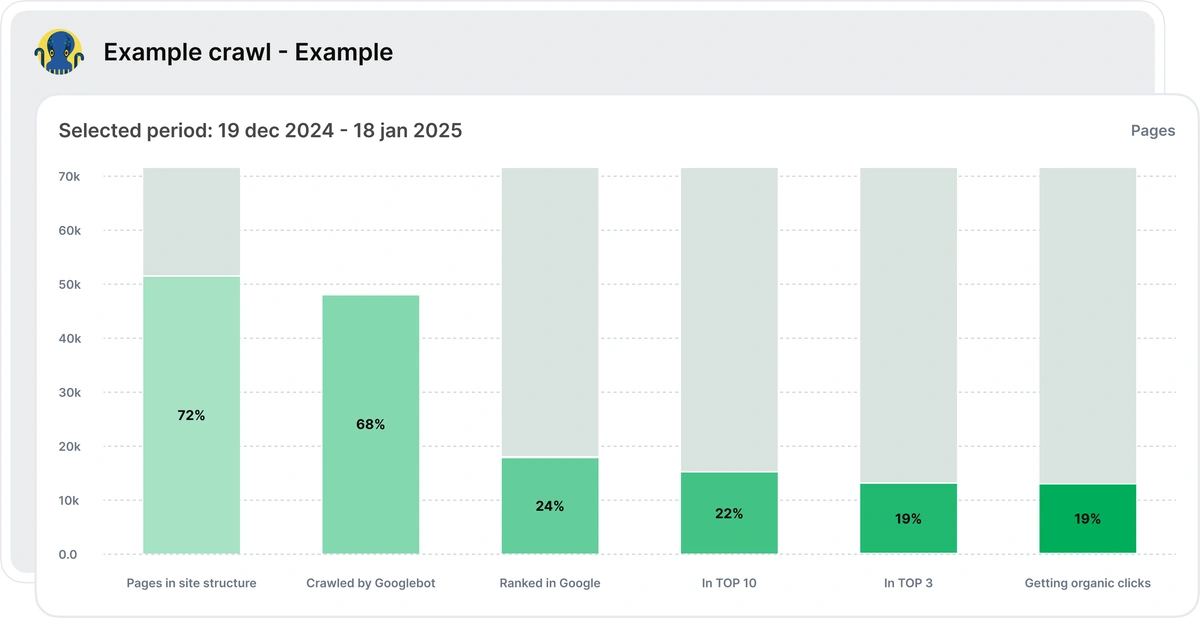

SEO Funnel (January 2024)

A consolidating report and the best start to 2024 for all our clients. It helps you understand where your main problem is.

📎 The SEO funnel reflects the state of a website at the main stages of organic traffic growth (a page is created on the site → it is visited by Googlebot → it gets ranked → it starts bringing organic traffic).

📎 The SEO funnel shows which critical tasks you should start with to get positive SEO dynamics.

📎 Now you will know which pages are “dead” in terms of SEO and what to change so they get ranked.

All of this saves you a loooooot of time. Most importantly, you will spend SEO resources on impactful optimizations that bring new organic traffic, not on SEO work for the sake of SEO work.
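The funnel logic can be illustrated with a minimal Python sketch. It is not JetOctopus’s implementation: the `Page` record and its fields are hypothetical, and the sketch assumes a page counts as “ranked” once it has at least one impression in GSC.

```python
from dataclasses import dataclass


@dataclass
class Page:
    # Hypothetical per-page record joining crawl, log and GSC data.
    url: str
    googlebot_visits: int  # from server logs
    impressions: int  # from Google Search Console
    clicks: int  # from Google Search Console


def seo_funnel(pages: list[Page]) -> dict[str, int]:
    """Count how many pages reach each stage: created -> crawled -> ranked -> traffic."""
    crawled = [p for p in pages if p.googlebot_visits > 0]
    # Assumption: a page counts as "ranked" once it has at least one impression.
    ranked = [p for p in crawled if p.impressions > 0]
    bringing_traffic = [p for p in ranked if p.clicks > 0]
    return {
        "created": len(pages),
        "crawled": len(crawled),
        "ranked": len(ranked),
        "traffic": len(bringing_traffic),
    }


def dead_pages(pages: list[Page]) -> list[str]:
    """Pages that exist on the site but never appeared in search results."""
    return [p.url for p in pages if p.impressions == 0]
```

The biggest drop between two adjacent stages is where the sketch suggests looking first.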

Impact Hub

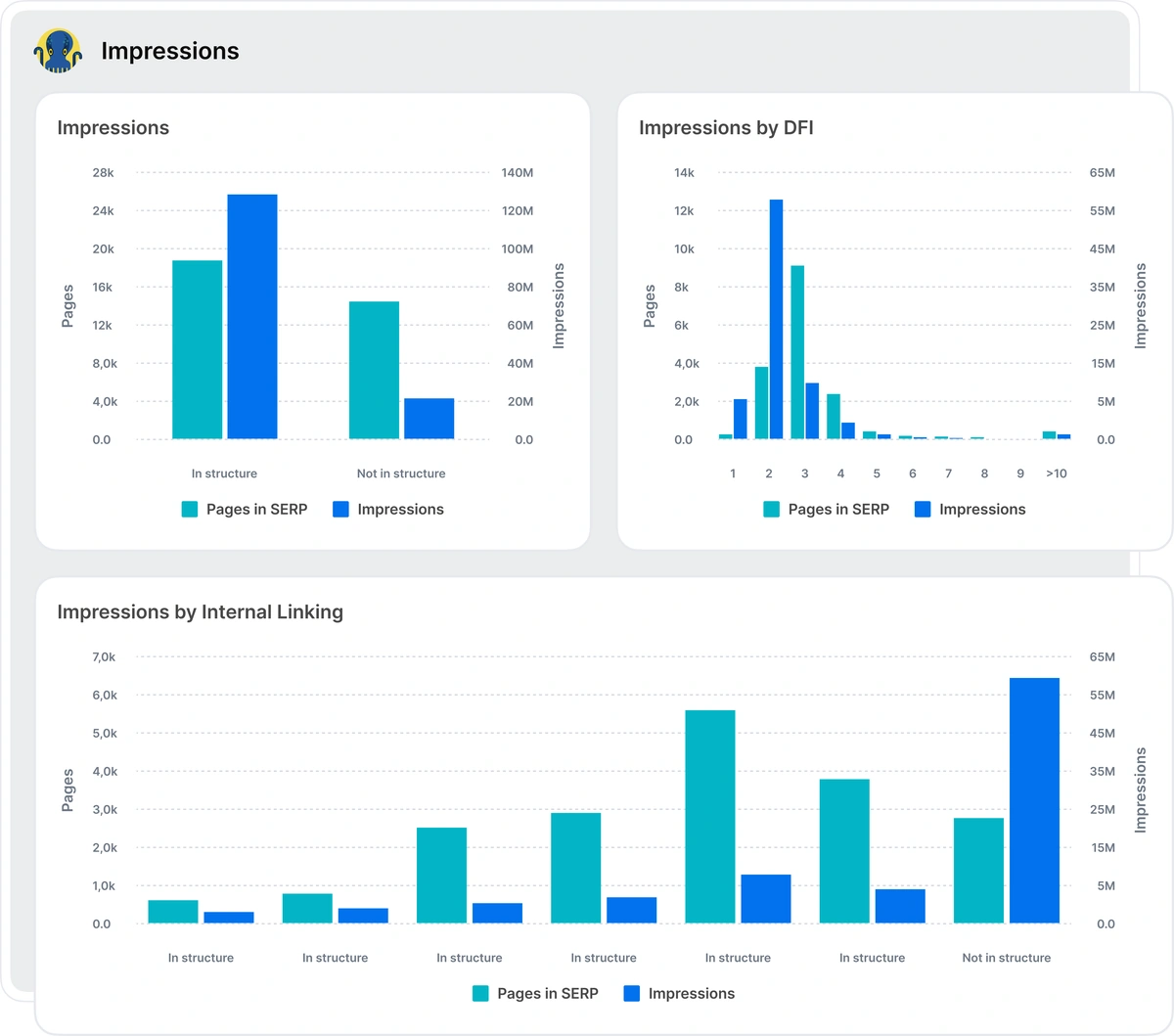

Impressions (January 2024)

A big inspiration to the SEO community!

With the help of this tool, users can analyze on-page SEO efficiency using Impressions as the main analysis criterion.

Another inspirational tool that brings satisfaction, not frustration. SEO data can be both visual and informative!

📍 Pages in the site structure that get impressions

📍 Pages NOT in the site structure that get impressions

📍 Impressions by page depth

📍 Impressions by content size

📍 Impressions by number of internal links

📍 Impressions by load time

An additional angle for analyzing content length, load time, efficiency by country, page depth, and more.
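The first two breakdowns come down to comparing the URLs found by the crawler (the site structure) with the URLs that get impressions in GSC. A minimal sketch; the function name and input shapes are assumptions for illustration. Pages that get impressions but are missing from the crawl are likely orphaned pages.

```python
def impressions_by_structure(
    crawled_urls: set[str], impressions: dict[str, int]
) -> dict[str, list[str]]:
    """Split URLs with impressions by whether the crawler found them in the site structure."""
    # `impressions` maps a URL to its GSC impressions (hypothetical input shape).
    getting_impressions = [url for url, count in impressions.items() if count > 0]
    return {
        "in_structure": sorted(u for u in getting_impressions if u in crawled_urls),
        "not_in_structure": sorted(u for u in getting_impressions if u not in crawled_urls),
    }
```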

Impact HUB: Metric Impact tool (February)

You can explore any SEO metric endlessly, getting valuable insights on the fly 🌪️

See how on-page SEO metrics impact:

– Impressions

– Googlebot visits

– Rankings

– Clicks

Is interlinking important? Does the number of words on a page, or its load time, have any impact?

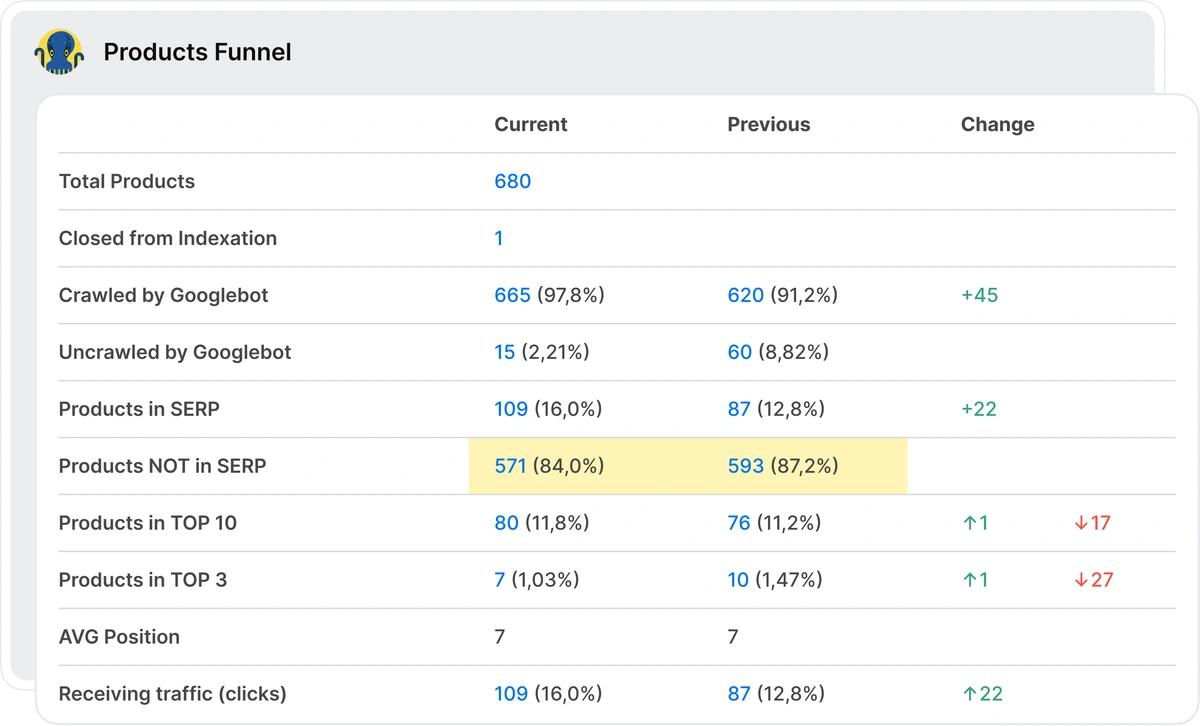

Impact HUB: E-commerce Performance Tools (February)

As you know, JetOctopus.com was initially built for the SEO needs of big websites, and we keep adding tools to increase the efficiency of e-commerce websites.

We are proud to present outstanding new tools for analyzing the SEO efficiency of products: the best insights for e-commerce, with the potential to dramatically increase SEO traffic.

Get preset, detailed information about products by category:

– indexation management of products,

– products crawled and NOT crawled by Googlebot,

– products ranked in SERP/not ranked,

– products ranked in TOP 10, in TOP 3,

– the average position of ranked products,

– the products bringing organic traffic.

Usually, there is no time to gather such detailed information. But if you work in e-commerce, you should review this data regularly and try to rank as many product pages as possible, because they generate sales. Now it is all preset for you!
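To make the per-category metrics concrete, here is a hedged Python sketch that derives them from product-level records. The `Product` fields are assumptions (in practice such data would come from crawl, log and GSC data), and “ranked” here simply means the product has an average position.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Product:
    # Hypothetical product record combining crawl, log and GSC data.
    category: str
    crawled_by_googlebot: bool
    position: Optional[float]  # average SERP position, None if not ranked
    clicks: int


def category_report(products: list[Product]) -> dict[str, dict]:
    """Per-category counts mirroring the list above."""
    report = {}
    for category in sorted({p.category for p in products}):
        items = [p for p in products if p.category == category]
        ranked = [p for p in items if p.position is not None]
        report[category] = {
            "products": len(items),
            "crawled": sum(p.crawled_by_googlebot for p in items),
            "ranked": len(ranked),
            "top10": sum(p.position <= 10 for p in ranked),
            "top3": sum(p.position <= 3 for p in ranked),
            "avg_position": round(mean(p.position for p in ranked), 1) if ranked else None,
            "with_traffic": sum(p.clicks > 0 for p in items),
        }
    return report
```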

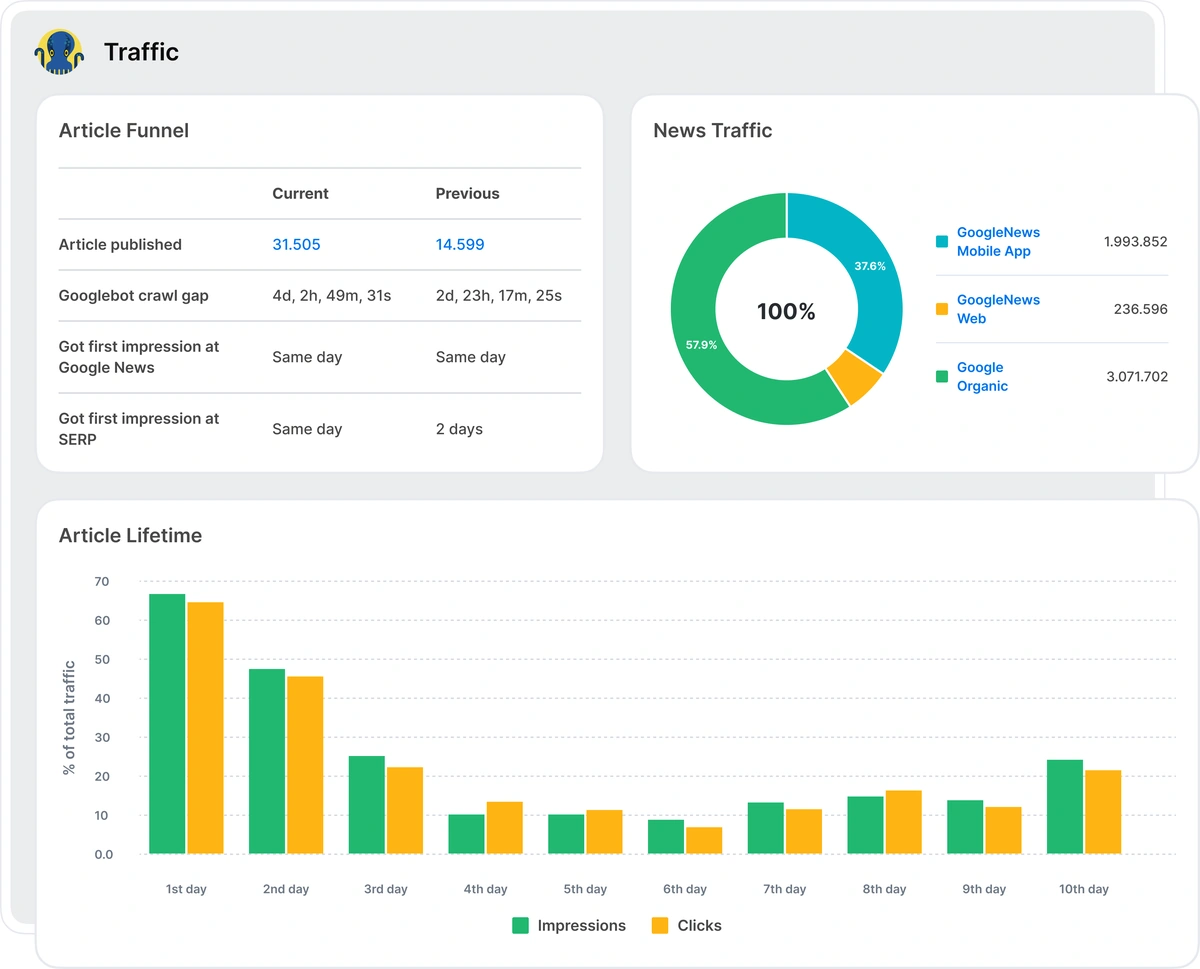

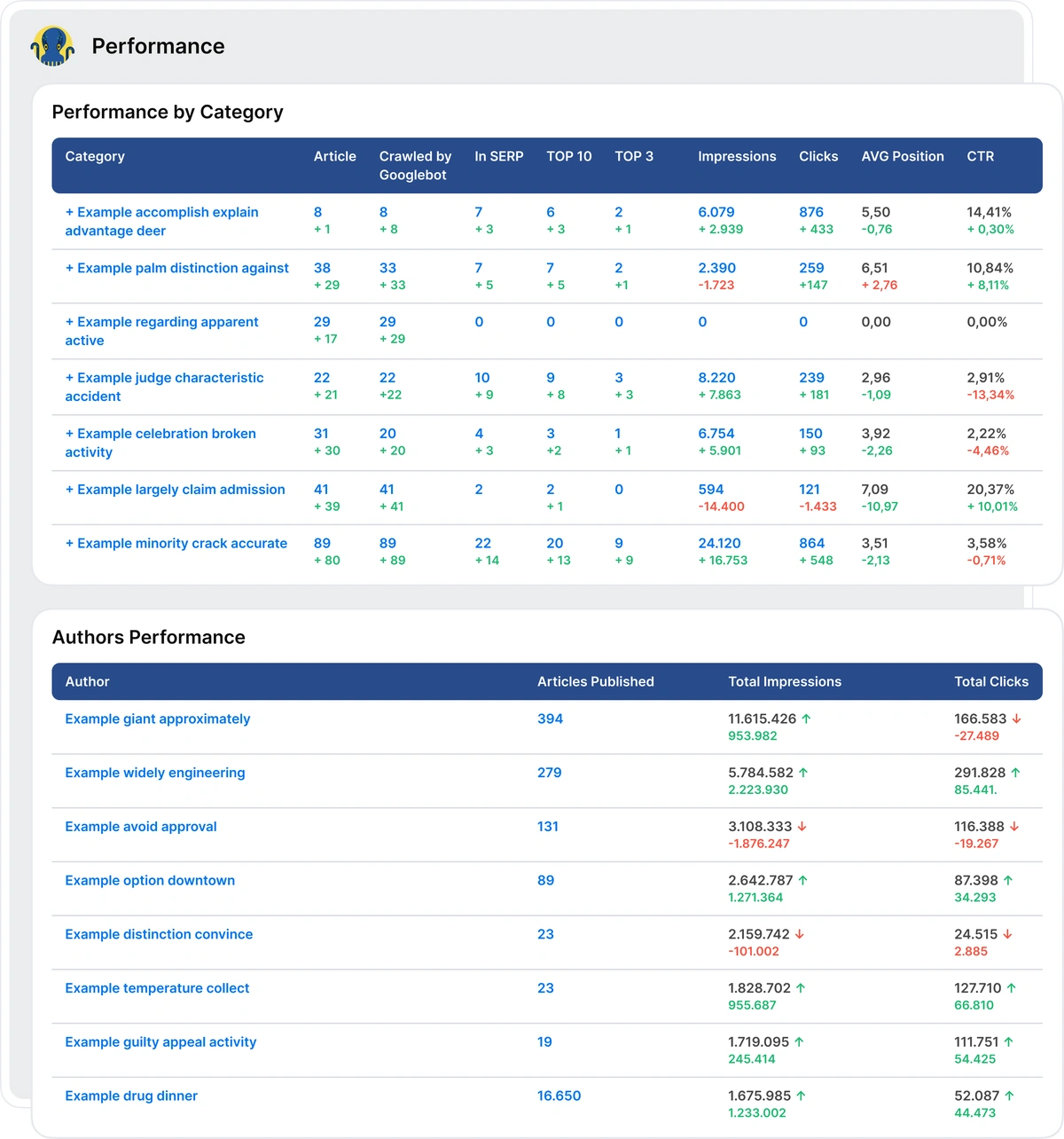

Impact HUB: News Performance Tools (March)

Media websites, publishers, and anyone who cares about quick article indexation and a long article lifecycle: grab these tools to make your news work super effectively.

Inside, you will work with:

– Crawl gap by Googlebot after the article is published

– Article lifecycle (impressions, clicks)

– SEO efficiency by category (Googlebot visits, impressions, clicks, positions, CTR)

– SEO efficiency by article (the same metrics)

– SEO efficiency by author!

🏆It is outstanding, isn’t it?
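The crawl gap is the time between publication and Googlebot’s first visit. A minimal sketch, assuming you already have the publication time and the Googlebot hit timestamps for each article URL (both input shapes are hypothetical):

```python
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


def crawl_gap(published_at: datetime, googlebot_hits: list[datetime]) -> Optional[timedelta]:
    """Time from publication to the first Googlebot visit, or None if not crawled yet."""
    # Ignore hits logged before publication (e.g. a reused URL).
    after_publication = [hit for hit in googlebot_hits if hit >= published_at]
    return min(after_publication) - published_at if after_publication else None


def median_gap_minutes(articles: list[tuple[datetime, list[datetime]]]) -> Optional[float]:
    """Median crawl gap in minutes across articles Googlebot has already visited."""
    gaps = [crawl_gap(published, hits) for published, hits in articles]
    minutes = [gap.total_seconds() / 60 for gap in gaps if gap is not None]
    return median(minutes) if minutes else None
```

The median is used rather than the mean so that a single article Googlebot ignored for days does not distort the picture.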

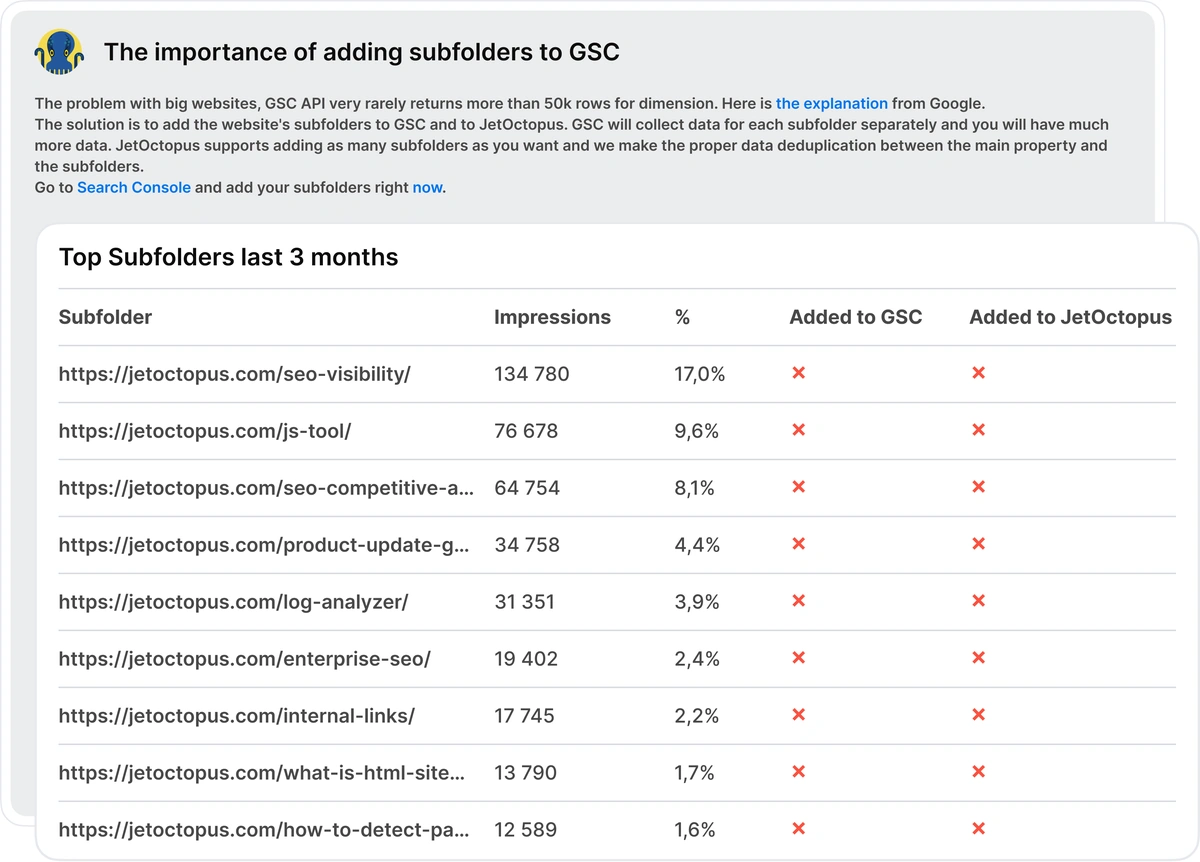

GSC Subfolders Report (April)

The GSC Subfolders Report provides an in-depth analysis of how Google Search Console (GSC) anonymizes data for your website. You can identify which subfolders generate significant traffic and consider adding them as separate properties in GSC. This gives you access to more detailed data and minimizes query anonymization, making it easier to monitor and optimize your site’s performance.
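One way to estimate anonymization per subfolder is to compare total clicks with the sum of clicks across query-level rows, since anonymized queries are left out of query tables. The sketch below assumes you have both numbers per subfolder; the function names and default thresholds are hypothetical placeholders, not JetOctopus settings.

```python
def anonymized_share(total_clicks: int, query_clicks: int) -> float:
    """Share of clicks missing from query-level rows, i.e. hidden by anonymization."""
    if total_clicks == 0:
        return 0.0
    return (total_clicks - query_clicks) / total_clicks


def property_candidates(
    stats: dict[str, tuple[int, int]],
    min_clicks: int = 1000,  # hypothetical threshold
    min_anonymized: float = 0.2,  # hypothetical threshold
) -> list[str]:
    """Subfolders with significant traffic and a high anonymized share, busiest first.

    `stats` maps a subfolder to (total clicks, sum of clicks across query rows).
    """
    candidates = [
        (total, folder)
        for folder, (total, query_clicks) in stats.items()
        if total >= min_clicks and anonymized_share(total, query_clicks) >= min_anonymized
    ]
    return [folder for _, folder in sorted(candidates, reverse=True)]
```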

Crawl Configuration Extension (April)

We’ve introduced new crawl options, including more precise controls for crawl speed and timeout settings, advanced JavaScript rendering configurations, and additional customization features to better tailor crawls to your specific website needs.

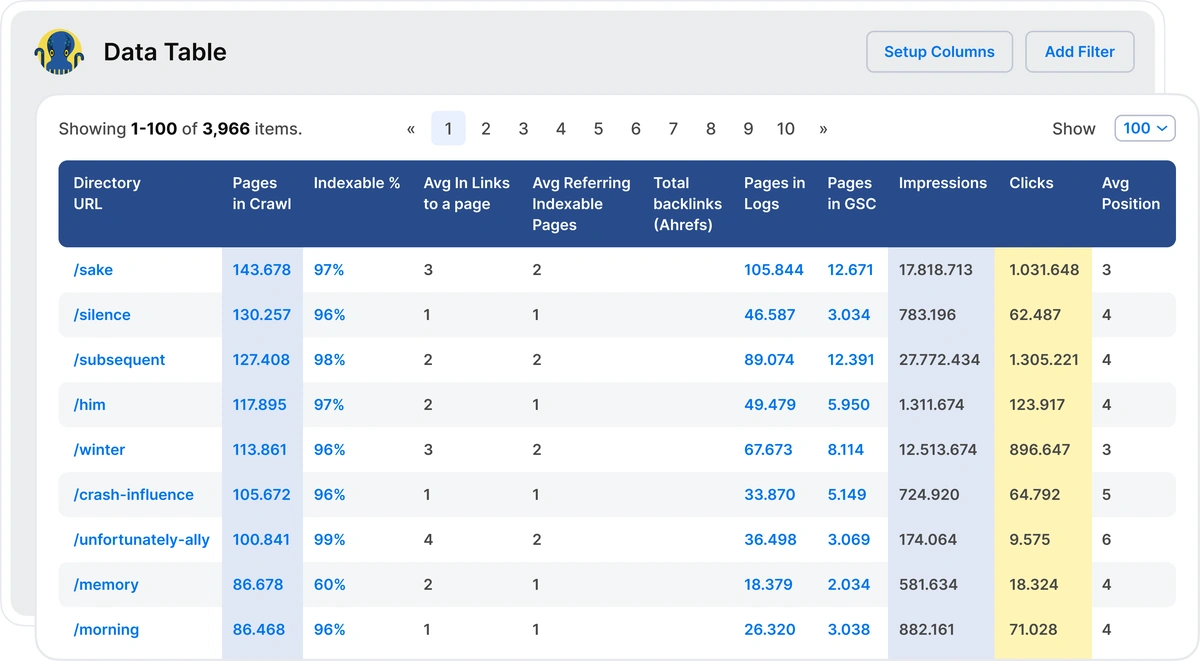

Structure Efficiency (May): by directories, by segments, by categories

When you work with big websites, the first step is to slice the data into logical parts. Then you can work with each segment, directory, or category separately: analyze its efficiency, run experiments, and monitor the dynamics.

You already know our Site Structure Efficiency tool, which has become a favourite among our users. Now we’ve upgraded it: Site Structure Efficiency data is now divided into logical parts:

– By directories

– By segments

– By categories
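Slicing by directory usually starts with mapping each URL to its first path segment. A minimal sketch, assuming `(url, clicks)` pairs as input and using “share of pages with at least one organic click” as a stand-in efficiency metric (the tool’s own metrics may differ):

```python
from collections import defaultdict
from urllib.parse import urlsplit


def first_directory(url: str) -> str:
    """Return the first path segment as '/segment/', or '/' for root-level pages."""
    path = urlsplit(url).path
    parts = [part for part in path.split("/") if part]
    # '/shoes/red' and '/shoes/' belong to '/shoes/'; '/about' sits at the root.
    if len(parts) > 1 or (parts and path.endswith("/")):
        return f"/{parts[0]}/"
    return "/"


def active_share_by_directory(pages: list[tuple[str, int]]) -> dict[str, float]:
    """Share of pages with at least one organic click, per first-level directory."""
    counts = defaultdict(lambda: [0, 0])  # directory -> [pages, pages with clicks]
    for url, clicks in pages:
        bucket = counts[first_directory(url)]
        bucket[0] += 1
        if clicks > 0:
            bucket[1] += 1
    return {d: active / total for d, (total, active) in sorted(counts.items())}
```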

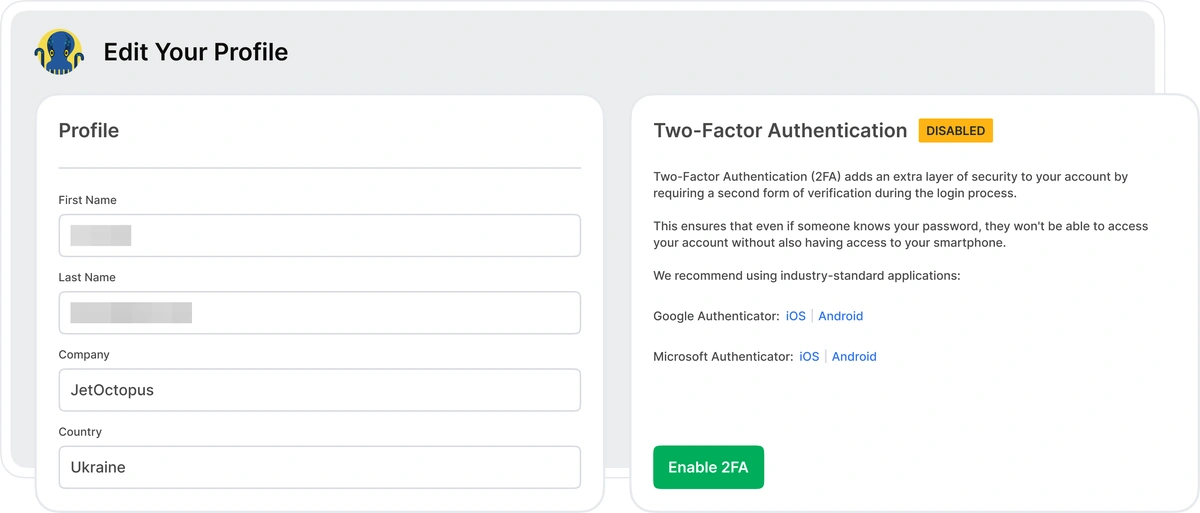

Multi-factor authentication (June)

We’ve enhanced account security by introducing the option to use Google Authenticator for an extra layer of protection.
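Google Authenticator generates time-based one-time passwords as defined in RFC 6238 (TOTP with HMAC-SHA1, 30-second steps and 6 digits by default). The sketch below shows the standard algorithm for illustration only; it is not JetOctopus’s server code, and the ±1 step drift window in `verify` is a common choice rather than a documented JetOctopus setting.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 time-based one-time password using HMAC-SHA1."""
    timestamp = time.time() if at is None else at
    counter = int(timestamp // step)
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation from RFC 4226: the last nibble selects a 4-byte window.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, code: str, at: Optional[float] = None, window: int = 1, step: int = 30) -> bool:
    """Accept codes from the current step and `window` steps either side (clock drift)."""
    timestamp = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, timestamp + drift * step, step), code)
        for drift in range(-window, window + 1)
    )
```

Authenticator apps exchange the secret as a base32 string, so a real secret would first be decoded with `base64.b32decode`.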

URL Explorer improvements: Structured data (July)

Structured Data Extraction in URL Explorer – Dive deep into URL-level investigations with structured data extraction now integrated directly into the URL Explorer for more detailed insights.

XPath Custom Extraction (July)

One of the most anticipated features in JetOctopus! You can now leverage your existing XPath rules from other tools for custom extraction, streamlining your data collection processes.

JavaScript Custom Extraction (August)

Unlock limitless data extraction capabilities with the option to write custom JavaScript code executed against the fully rendered page in a browser. This is an invaluable feature when traditional methods like XPath or CSS rules fall short.

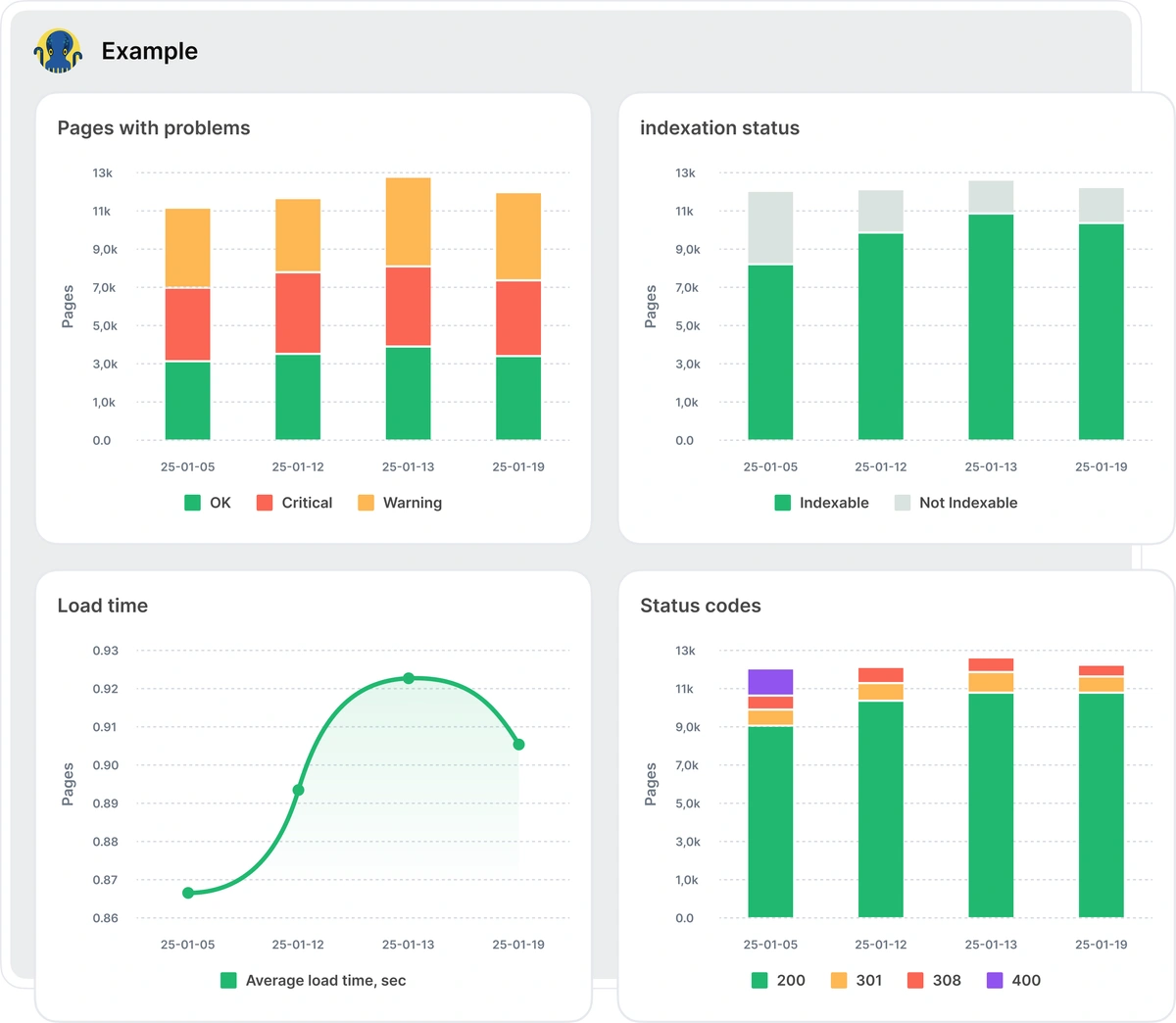

SEO Trends Reports (August)

We are glad that this roadmap item is finally live: the Manager’s Dashboard. There are a ton of reports with SEO-specific insights and opportunities, and SEOs love working with them. But we all know that managers also use these tools.

Now managers can analyze the big picture of SEO in the SEO Trends section:

🔖 Traffic trends: total vs SEO

🔖 SERP efficiency details in dynamics

🔖 Crawl Budget dynamics

New Alerts Types (September)

One of the most significant updates is robots.txt tracking, which notifies you of sudden changes to your robots.txt file, helping prevent potential traffic disruptions caused by misconfigurations. Our alert system has also expanded to help users monitor critical changes in Google News and Google Discover.

DataTable Performance Improvements (September)

The DataTable is at the heart of JetOctopus, where users spend the majority of their time. We’ve made substantial improvements to ensure it operates faster and more efficiently, delivering a seamless user experience.

Robots.txt report and alerts (September)

Robots.txt file monitoring. Always fresh, always at hand. As always :)

Welcome a new section in Crawler – Robots.txt:

📍 all robots.txt files with their status code, file size, and load time.

And New Alerts on Robots.txt:

📍 if the content of your robots.txt has changed

📍 if the status code of robots.txt has changed

📍 if the status code of the robots.txt file is not 200
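The three alert conditions translate directly into a comparison of two consecutive fetches. A minimal sketch, assuming a hypothetical `RobotsFetch` record per fetch; it uses `difflib` to show which lines changed:

```python
import difflib
from dataclasses import dataclass


@dataclass(frozen=True)
class RobotsFetch:
    # Hypothetical record of one robots.txt fetch; not a JetOctopus data structure.
    status_code: int
    body: str


def robots_alerts(previous: RobotsFetch, current: RobotsFetch) -> list[str]:
    """Mirror the three alert conditions listed above."""
    alerts = []
    if current.body != previous.body:
        diff = difflib.unified_diff(
            previous.body.splitlines(), current.body.splitlines(),
            fromfile="previous", tofile="current", lineterm="",
        )
        alerts.append("content changed:\n" + "\n".join(diff))
    if current.status_code != previous.status_code:
        alerts.append(f"status code changed: {previous.status_code} -> {current.status_code}")
    if current.status_code != 200:
        alerts.append(f"status code is not 200: {current.status_code}")
    return alerts
```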

DataTable Presets (October)

Boring work should be done by algorithms, and strategic SEO decisions should be made by a person. We are super excited to save a ton of your time by introducing Preset Datasets for you.

1. All possible lists of problematic URLs from Crawl data:

- non-canonicals

- non-indexable pages

- slow pages

- heavy pages

- orphaned pages

- very long titles

- 3xx, 4xx, 5xx status codes

- links to 302 redirects

- and many, many more

2. Everything you need to know about Googlebot’s behavior on your website, since it is your main client at the end of the day:

- blinking status codes

- heavy pages

- pages with 4xx, 5xx

- pages with only 1 Googlebot visit

- slow requests

3. SERP efficiency (GSC data):

- Keywords gone from TOP 10

- Keywords back to TOP 10

- Pages gone from TOP 10

- Pages back to TOP 10
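Several of these presets boil down to simple comparisons over log and GSC data. A hedged sketch of three of them, assuming that “blinking” means the status code changed between consecutive Googlebot visits and that “back to TOP 10” is approximated by a keyword entering TOP 10 between two periods. Input shapes and names are hypothetical.

```python
from collections import Counter


def blinking_status_codes(hits: list[tuple[str, int]]) -> set[str]:
    """URLs whose status code changed between consecutive Googlebot visits.

    `hits` holds (url, status_code) log entries in chronological order.
    """
    last_status: dict[str, int] = {}
    blinking: set[str] = set()
    for url, status in hits:
        if url in last_status and last_status[url] != status:
            blinking.add(url)
        last_status[url] = status
    return blinking


def single_visit_pages(hits: list[tuple[str, int]]) -> set[str]:
    """URLs that Googlebot requested exactly once in the log period."""
    visits = Counter(url for url, _ in hits)
    return {url for url, count in visits.items() if count == 1}


def top10_movements(before: dict[str, float], after: dict[str, float]) -> tuple[set[str], set[str]]:
    """Keywords that left TOP 10 and keywords that (re)entered it between two periods."""
    def in_top10(positions: dict[str, float], keyword: str) -> bool:
        return keyword in positions and positions[keyword] <= 10

    keywords = before.keys() | after.keys()
    gone = {k for k in keywords if in_top10(before, k) and not in_top10(after, k)}
    back = {k for k in keywords if in_top10(after, k) and not in_top10(before, k)}
    return gone, back
```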

External Links crawling (November)

This feature is designed specifically for websites driving affiliate traffic and for publishers. JetOctopus crawls external links to check the target page’s status code, title, and other key details. By identifying and fixing broken external links, you can ensure a seamless user experience and maximize affiliate revenue by avoiding the loss of users to non-functioning pages.
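The first step, finding external links on a page, can be sketched with Python’s standard library. This is a minimal illustration rather than JetOctopus’s crawler: it treats any link to a different host as external (so subdomains count as external), and the hypothetical `status_code` helper sends a HEAD request, which some servers reject, and follows redirects, so it reports the final status.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.error import HTTPError
from urllib.parse import urljoin, urlsplit
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collect absolute URLs from <a href> attributes."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.base_url, href))


def external_links(page_url: str, html: str) -> list[str]:
    """HTTP(S) links that point to a different host than the page itself."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    host = urlsplit(page_url).hostname
    return [
        link for link in collector.links
        if urlsplit(link).scheme in ("http", "https") and urlsplit(link).hostname != host
    ]


def status_code(url: str, timeout: float = 10.0) -> Optional[int]:
    """Final HTTP status after redirects, or None if the host is unreachable."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as response:
            return response.status
    except HTTPError as error:
        return error.code  # 4xx/5xx responses arrive as HTTPError
    except OSError:
        return None  # URLError, timeouts, connection resets
```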

Significant JS Crawler Optimisation (November)

As JavaScript-heavy websites remain prevalent, we’ve dedicated significant resources in late 2024 to enhancing our JS crawler. With a next-generation crawler core now deployed, crawl speed has increased by over 40%, ensuring faster, more reliable analysis of JavaScript-based websites.

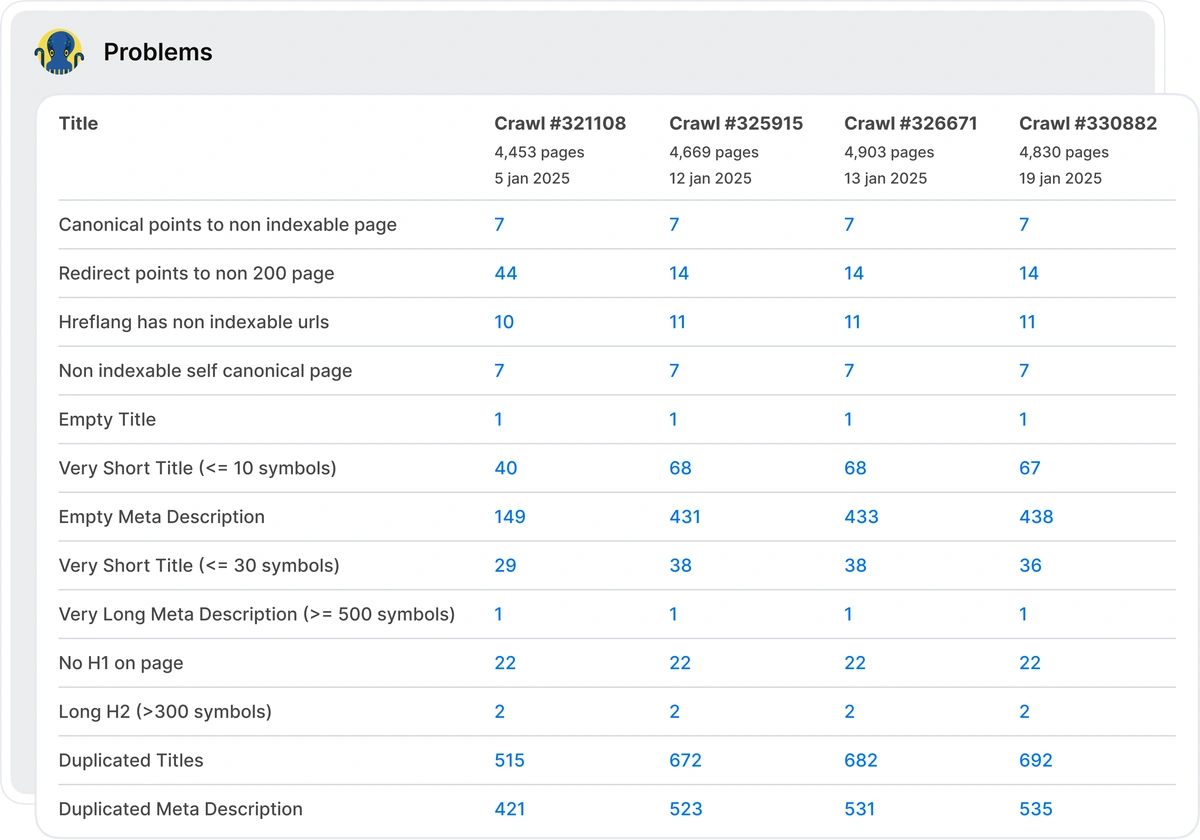

Crawl Compare 3.0

🔥 A dramatic update of the Compare Crawl module 🔥

Have your SEO tasks been implemented, and do you want to be sure they all launched correctly? The Compare Crawl tools are super handy here. Here is what’s new in the JetOctopus Compare Crawl module:

🧷 Now you can compare not just 2 crawls but 3, 5, and even 10 crawls in a row and see which On-page SEO criteria have changed.

🧷 The system will not let you select a partial crawl and compare it with a full one, since the conclusions would be invalid.

🧷 We have added a big table of all Problems and On-page SEO criteria from the selected crawls so you can compare their dynamics. You can clearly see all the crucial criteria and how they changed, keeping the whole situation under control over 3 months or even a whole year, in just one table.

🧷 All is clickable and leads to the filtered URLs with the selected parameter to dive deeper.
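The comparison table and the partial-crawl guard can be sketched as follows. `CrawlSummary` and its fields are hypothetical; metrics missing from a crawl are shown as 0 so every row has one value per crawl.

```python
from dataclasses import dataclass, field


@dataclass
class CrawlSummary:
    # Hypothetical per-crawl summary; names are illustrative assumptions.
    name: str
    complete: bool
    metrics: dict[str, int] = field(default_factory=dict)


def compare_crawls(crawls: list[CrawlSummary]) -> dict[str, list[int]]:
    """Return metric -> [value in each crawl], refusing partial crawls."""
    partial = [c.name for c in crawls if not c.complete]
    if partial:
        # A partial crawl would make the comparison misleading.
        raise ValueError(f"partial crawls cannot be compared: {', '.join(partial)}")
    metric_names = sorted({name for c in crawls for name in c.metrics})
    # Metrics missing from a crawl are reported as 0 so every row lines up.
    return {name: [c.metrics.get(name, 0) for c in crawls] for name in metric_names}
```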

We’re definitely aiming for even bigger things in 2025! With the momentum we’ve built this year, the sky’s the limit. More groundbreaking partnerships, even more powerful features, and exciting collaborations are on the horizon. We’re ready to push boundaries and deliver exceptional results—stay tuned!
