What causes the difference between crawled URLs and indexed pages in Google or pages in Search Console?

In this article, we will explain the reasons behind the disparity between the number of indexable pages in crawl results, and the number of pages shown in Google. Understanding these reasons will help you identify crucial issues that may affect the indexing of your website.

Previously, we touched upon this topic briefly, but in this article, we will delve into more practical aspects and identify problems that can hinder the crawling and indexing of your pages.

Issue 1. The number of pages in Google search results is greater than the number of indexable pages in crawl results

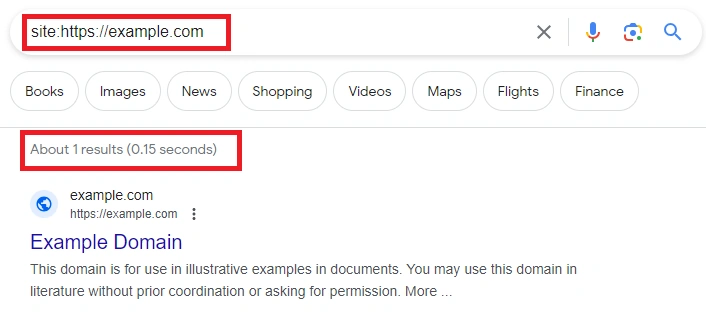

When we want to determine the number of pages indexed by Google, we often use the “site:https://example.com” operator. The results show the estimated number of pages known to Google.

However, it’s important to note that this figure is an approximation, especially for larger websites. The gap between the actual number of indexed pages and the number Google displays for the “site:https://example.com” operator can be significant, often in the thousands.

Furthermore, there may be old pages in the Google index that you may not be aware of because they haven’t been displayed on your site for a long time. However, if the direct URL of these pages returns a 200 status code, Google may continue to crawl and index them. Google utilizes not only sitemaps and internal links on your website for indexing and crawling but also its own indexes and external resources that link to your site. This is particularly common for e-commerce websites that employ product archiving.

Another possible reason could be related to your crawl budget. If you have made changes to your indexing rules, Google may still display certain pages in the search engine results pages (SERPs) until it recrawls them. Due to the limited crawl budget, Google and other search engines may retain non-indexable pages in the index for an extended period, even if they return a 404 status code. If you have encountered this issue, we recommend optimizing your crawl budget by analyzing log files to understand which pages Google is scanning.

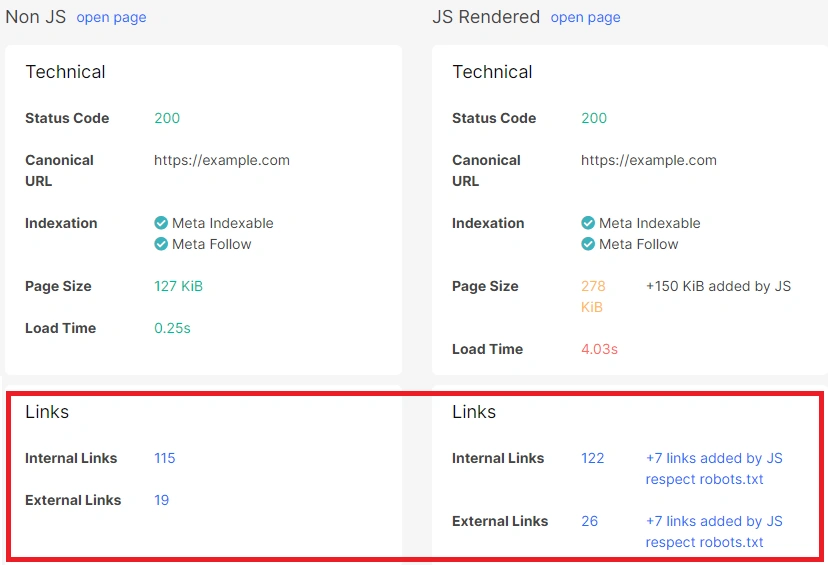
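To make the log analysis step more concrete, here is a minimal sketch of counting Googlebot requests per URL and status code. It assumes the common "combined" access log format and uses made-up sample lines; the regular expression and the `googlebot_hits` helper are illustrative, not part of JetOctopus. Matching on the user agent string alone is only a first pass, because that string can be spoofed, so genuine Googlebot traffic should additionally be verified (for example, by reverse DNS lookup).

```python
import re
from collections import Counter

# Assumed "combined" access log layout; adjust the pattern to your server's format.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_hits(lines):
    """Count (path, status) pairs for requests whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[(match.group("path"), int(match.group("status")))] += 1
    return hits

# Hypothetical sample lines, for illustration only.
sample_log = [
    '66.249.66.1 - - [10/May/2023:10:00:00 +0000] "GET /old-product HTTP/1.1" 404 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/May/2023:10:00:05 +0000] "GET /category/shoes HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/May/2023:10:00:09 +0000] "GET /category/shoes HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
]

for (path, status), count in googlebot_hits(sample_log).most_common():
    print(path, status, count)
```

URLs that Googlebot keeps requesting even though they return 404, or pages it visits often although they are not indexable, are typical places where crawl budget is being spent without benefit.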

JavaScript on your website can also be a contributing factor. By default, JetOctopus does not render JavaScript. If you don’t have server-side rendering and internal links appear in the HTML code only after JavaScript is executed, JetOctopus may not discover these links and, consequently, may not crawl the pages they point to. Googlebot, on the other hand, renders JS pages and can identify your internal JavaScript links. To test this hypothesis, you can use a JS and non-JS version comparison tool or conduct a JavaScript crawl.

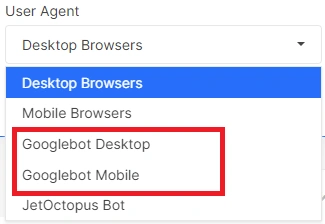

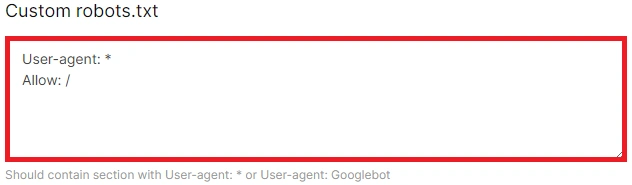
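The idea behind a JS versus non-JS comparison can be sketched in a few lines: extract the links from the raw HTML and from the rendered HTML, then look at the difference. In the sketch below, both HTML snippets are hard-coded, hypothetical examples; in practice, the rendered version would come from a headless browser or a JavaScript crawl. The `LinkCollector` class and `extract_links` function are illustrative names.

```python
from html.parser import HTMLParser

class LinkCollector(HTMLParser):
    """Collect href values of <a> tags from an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.add(href)

def extract_links(html):
    collector = LinkCollector()
    collector.feed(html)
    return collector.links

# Hypothetical page: the raw HTML has an empty app container,
# while the rendered HTML contains links injected by JavaScript.
raw_html = '<nav><a href="/">Home</a></nav><div id="app"></div>'
rendered_html = (
    '<nav><a href="/">Home</a></nav>'
    '<div id="app"><a href="/catalog">Catalog</a><a href="/sale">Sale</a></div>'
)

# Links that exist only after JavaScript execution.
js_only_links = extract_links(rendered_html) - extract_links(raw_html)
print(sorted(js_only_links))  # ['/catalog', '/sale']
```

If this difference is large, a crawl without JavaScript rendering will likely miss a noticeable share of the pages that Googlebot can reach.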

There are two other potential reasons why the number of pages in Google search results may exceed the crawl results. These causes are typically associated with crawler settings or your website configuration. First, consider the robots.txt file. If it contains different rules for different bots, the crawl results may differ from what Google sees. To ensure that JetOctopus crawls your website the same way Googlebot does, select the user agent “Googlebot Desktop” or “Googlebot Mobile” during the crawl configuration.

Alternatively, add a custom robots.txt file that contains the same rules that apply to Googlebot.

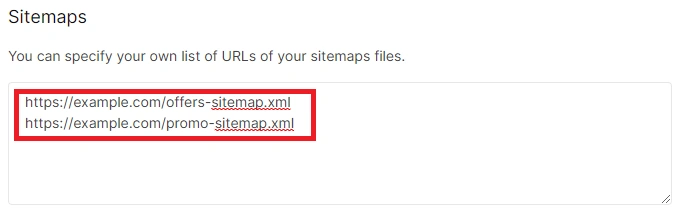
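To check whether your robots.txt treats bots differently, you can evaluate the same URLs for several user agents. The sketch below uses Python's standard `urllib.robotparser` with a hypothetical robots.txt and a hypothetical crawler name, `ExampleCrawler`. Note that this parser does not replicate Google's matching logic exactly (for instance, it has no wildcard support), so treat the output as a rough check rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with stricter rules for all bots except Googlebot.
robots_txt = """\
User-agent: Googlebot
Disallow: /cart/

User-agent: *
Disallow: /cart/
Disallow: /catalog/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

urls = [
    "https://example.com/catalog/shoes",
    "https://example.com/cart/",
]

# Compare what each user agent is allowed to fetch.
for agent in ("Googlebot", "ExampleCrawler"):
    for url in urls:
        print(agent, url, parser.can_fetch(agent, url))
```

In this example, `/catalog/shoes` is open to Googlebot but closed to every other bot, so a crawl with a non-Googlebot user agent would report fewer pages than Google can actually reach.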

The second reason may be that sitemap scanning is disabled for JetOctopus. If you have orphan pages on your website (i.e., pages that are present in the sitemap but not linked within the internal structure), Google may index them, while JetOctopus will not discover them without scanning the sitemap. To allow sitemap scanning, activate the “Process sitemaps” checkbox and add links to your sitemaps in the “Sitemaps” field in the “Advanced Settings”.
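The orphan page check itself boils down to a set difference: URLs listed in the sitemap minus URLs reached through internal links. Here is a minimal sketch using only the standard library; the sitemap content and the `crawled` set are hypothetical, and `sitemap_urls` is an illustrative helper name.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(xml_text):
    """Return the set of <loc> URLs from a urlset sitemap."""
    root = ET.fromstring(xml_text)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }

# Hypothetical sitemap and crawl results, for illustration only.
sitemap_xml = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/landing/spring-sale</loc></url>
</urlset>"""

crawled = {"https://example.com/", "https://example.com/catalog"}

# Pages that exist in the sitemap but were not reached via internal links.
orphans = sitemap_urls(sitemap_xml) - crawled
print(sorted(orphans))
```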

Enabling the “Do not crawl URLs with GET parameters” checkbox or disallowing the crawler from following links with the “rel="nofollow"” attribute can also account for the disparity. Google typically crawls these URLs and indexes them if relevant tags are present.

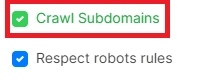

Lastly, the scope of the “site:https://example.com” operator can cause differences in the results. If you have crawled the website without its subdomains, the crawl results will contain only pages from the start domain. However, if you enter “site:https://example.com” in the Google search bar, Google will display results for both the domain and all its subdomains.

To crawl a website with subdomains, ensure that the “Crawl Subdomains” checkbox is activated.

Issue 2. The number of pages in Google search results is less than the number of indexable pages in crawl results

The primary reason for this disparity is the crawling and rendering budget. If your website has a large number of indexable pages, but they have slow loading times or contain numerous slow resources, or if they are not included in the internal linking structure, Google may not crawl all of these pages within a given timeframe. Consequently, not all pages will be indexed by Google. We recommend combining data from Google Search Console inspections and crawl results to identify pages that are not indexed. Then, analyze the technical issues associated with these pages to determine why Google is not crawling and indexing them. By incorporating log data, you can determine if Google managed to discover indexable pages that appeared in the crawl results but were not included in Google’s output. Therefore, optimizing the crawl budget should be a top priority if you notice a significant discrepancy between the number of pages in the Google index and the crawl results.
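Combining the three data sources can be sketched as simple set operations. The example below assumes you have already exported three sets of URLs: indexable pages from the crawl, pages reported as indexed in Google Search Console, and pages that Googlebot requested according to the logs. All names and sample URLs are hypothetical.

```python
def classify_unindexed(indexable_crawled, indexed_in_gsc, visited_by_googlebot):
    """Split indexable but unindexed pages by whether Googlebot ever visited them."""
    not_indexed = indexable_crawled - indexed_in_gsc
    return {
        # Likely a discovery or crawl budget problem.
        "never_visited": sorted(not_indexed - visited_by_googlebot),
        # Crawled but not indexed: check content quality, duplication, rendering.
        "visited_not_indexed": sorted(not_indexed & visited_by_googlebot),
    }

# Hypothetical exports, for illustration only.
indexable_crawled = {"/a", "/b", "/c", "/d"}
indexed_in_gsc = {"/a"}
visited_by_googlebot = {"/a", "/b"}

report = classify_unindexed(indexable_crawled, indexed_in_gsc, visited_by_googlebot)
for bucket, urls in report.items():
    print(bucket, urls)
```

The two buckets call for different actions: pages Googlebot never visited usually need better internal linking or faster responses, while pages that were visited but not indexed point to issues with the page itself.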

Duplicate content or pages with similar content can also contribute to poorer search engine performance and inadequate crawling by Google, resulting in less frequent index updates. Analyze the “Content” and “Duplications” dashboards to determine if duplicate content is a problem on your website and whether the number of unique words per page is low.

Another possible reason could be related to JavaScript. If you have performed a JS execution crawl and found numerous indexable pages that are not present in the Google index, we recommend verifying that Google renders the JavaScript correctly and that no required resources are blocked for Googlebot. Additionally, Google may need more time to index JS content. Therefore, after checking JS rendering, monitor the logs to ensure that Google is visiting your website, and keep track of the number of indexed pages over time.

In general, if the number of indexable pages in crawl results differs greatly from the number of pages in Google SERPs, we recommend devoting significant effort to optimizing your crawl and rendering budget.

You will find many interesting details and insights about the number of pages visited by the bot, the number of indexable pages and the number of pages in SERPs on the “SEO efficiency” chart.