Why are there missing images during crawling?

If you have configured image crawling but the results do not include every image, there are several possible causes. This article explains how to find out which one applies. Understanding why images are missing from the results is important, because search engines may not have access to those images either.

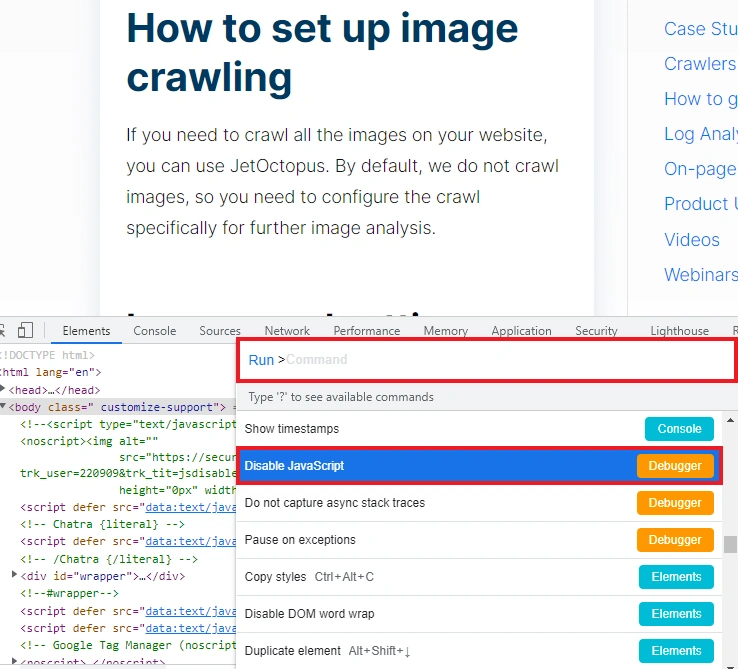

Reason 1. Images are loaded on the client side with JavaScript. To check whether this is the case, disable JavaScript. Open the desired page in Chrome, open Chrome DevTools (F12 or Ctrl + Shift + I), open the Command Menu (Control + Shift + P), and run the “Disable JavaScript” command. Then reload the page.

If the images are missing from the HTML code once JavaScript is disabled, your website uses JavaScript to load them. In that case, you need to start a JS crawl to analyze all images on your website.
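The same check can be scripted. The sketch below is only an illustration and is not part of JetOctopus: it lists the `<img>` sources found in raw HTML, which is what a crawler sees when JavaScript is not executed. The names `ImgCollector`, `images_in_raw_html` and `fetch_raw_html` are hypothetical helpers, and the User-Agent string is an arbitrary assumption.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class ImgCollector(HTMLParser):
    """Collects the src attribute of every <img> tag in raw HTML."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        # Self-closing tags such as <img ... /> also end up here.
        if tag != "img":
            return
        src = dict(attrs).get("src")
        if src:
            self.sources.append(src)


def images_in_raw_html(html):
    """Return the image sources present in the HTML before any JS runs."""
    parser = ImgCollector()
    parser.feed(html)
    return parser.sources


def fetch_raw_html(url):
    """Download a page without executing JavaScript (hypothetical helper)."""
    request = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

For example, `images_in_raw_html(fetch_raw_html("https://example.com/"))` returns the images available without JavaScript. If the list is empty or much shorter than what you see in the browser, the page most likely loads its images with JavaScript.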

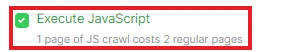

To crawl images loaded with JavaScript, run a JS crawl. Remember that in addition to enabling JS, you need to activate the “Process images data” checkbox.

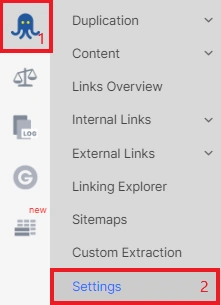

Reason 2. You used “Include/Exclude URLs”, so not all pages were crawled. Check these rules in the crawl settings. You may also have set a page limit or otherwise restricted the crawl. In any of these cases, images on pages that were not crawled will not appear in the results, which is expected.

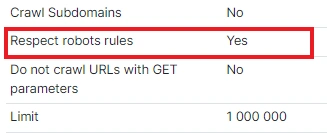

Reason 3. Images, or the pages that contain them, are blocked by the robots.txt file. Check your robots.txt and whether the “Respect robots rules” checkbox was activated during the crawl (“Settings” in the “Crawl” section). If the checkbox was activated and the images are blocked by this file, JetOctopus was not able to scan them. To crawl these images, deactivate the checkbox or add a custom robots.txt.

If the images are blocked by the robots.txt file, search engines will not be able to access them either.
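To see which image URLs a given robots.txt blocks, you can test them with Python's standard `urllib.robotparser` module. This is a minimal sketch and not a JetOctopus feature. The function name `blocked_urls` and the example URLs are assumptions made for illustration. Note that this module matches paths by simple prefix and does not interpret `*` or `$` wildcards the way some search engines do, so treat the result as a first approximation.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="*"):
    """Return the URLs that the given robots.txt text disallows."""
    parser = RobotFileParser()
    # parse() takes the file content as a list of lines; no network access.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt content and image URLs.
robots_txt = """\
User-agent: *
Disallow: /images/private/
"""

image_urls = [
    "https://example.com/images/private/banner.png",
    "https://example.com/images/public/logo.png",
]

for url in blocked_urls(robots_txt, image_urls):
    print("Blocked by robots.txt:", url)
```

Pass a specific crawler name as `user_agent` to check the rules that apply to that bot instead of the default `*` group.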

You may also be interested in: Why are there missing pages in the crawl results?