Why does a page render differently in JetOctopus during a JS crawl than during manual inspection?

Using JetOctopus, you can configure a JS crawl and see how search bots render a page. When reviewing a JS crawl, it is important to compare the static HTML with the rendered (JS) version of the page. In particular, the indexing rules and metadata should match. However, when you compare the results of a JS crawl with a manual inspection of a particular page, you may notice discrepancies. In this article, we explain why this happens and what problems it may indicate.
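As a rough illustration of comparing the static HTML with the rendered version, the sketch below pulls the title, meta robots, meta description and canonical URL out of two HTML strings and reports the ones that differ. It uses only the Python standard library. The names `IndexingSignals`, `extract_signals` and `diff_signals` and the sample markup are assumptions made for this example; they are not part of JetOctopus.

```python
from html.parser import HTMLParser


class IndexingSignals(HTMLParser):
    """Collects the title, meta robots, meta description and canonical URL."""

    def __init__(self):
        super().__init__()
        self.signals = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        rel = (attrs.get("rel") or "").lower()
        if tag == "title" and "title" not in self.signals:
            self._in_title = True
        elif tag == "meta" and name in ("robots", "description"):
            self.signals[name] = (attrs.get("content") or "").strip()
        elif tag == "link" and rel == "canonical":
            self.signals["canonical"] = (attrs.get("href") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Title text may arrive in several chunks, so append rather than overwrite.
        if self._in_title:
            self.signals["title"] = self.signals.get("title", "") + data


def extract_signals(html):
    parser = IndexingSignals()
    parser.feed(html)
    parser.close()
    return {key: value.strip() for key, value in parser.signals.items()}


def diff_signals(static_html, rendered_html):
    """Returns {signal: (static_value, rendered_value)} for every mismatch."""
    static = extract_signals(static_html)
    rendered = extract_signals(rendered_html)
    return {
        key: (static.get(key), rendered.get(key))
        for key in sorted(set(static) | set(rendered))
        if static.get(key) != rendered.get(key)
    }


# Hypothetical sample pages: JavaScript changes the robots directive.
static_html = (
    '<html><head><title>Shoes</title>'
    '<meta name="robots" content="index, follow">'
    '<link rel="canonical" href="https://example.com/shoes"></head></html>'
)
rendered_html = static_html.replace("index, follow", "noindex, follow")

print(diff_signals(static_html, rendered_html))
```

An empty result means the checked signals match. Any entry, such as a robots directive that changes after rendering, is worth investigating.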

How JS is rendered in JetOctopus

You can choose to emulate Googlebot Desktop or Googlebot Smartphone when selecting the user agent for the crawl. However, even if you choose a desktop or mobile browser user agent without the Googlebot specification, JetOctopus will still crawl your website the way Googlebot does. After receiving data from your web server, the JetOctopus crawler renders the page in a headless version of the Chrome browser, as Googlebot does. In most cases, the crawl results reflect the real issues that Googlebot encounters on your website. However, rendering by a verified Googlebot may still produce different results, because they depend on the load on your web server at a given time of day and on many other factors.

Why do the results of the JS crawl and manual inspection data differ?

This can happen for various reasons.

JS crawl settings

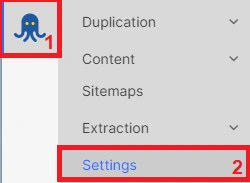

If the results of manual inspection are different, check the JS crawl settings in the “Crawler” – “Settings” section.

Pay attention to the following points.

“Block JS files by robots.txt” – “Yes” – search engines cannot load resources and JavaScript files that are blocked by the robots.txt file. Your browser, by contrast, ignores the robots.txt file and loads all resources. Accordingly, if the site has blocked resources and you enable this option to see the page as the bot does, the page you see during a manual check may differ from the JS crawl results. Analyze the blocked resources to identify the important ones that should be available to Google.

“Disable cache resources” – “Yes” – with this option enabled, the crawler does not reuse cached resources while processing a page, whereas a regular browser does. The cached version in your browser may be out of date, so clear the cache before comparing.

“JS Execution max time” – Googlebot usually waits for all JS to load, just as your browser does (except when loading is extremely slow). If you set a low value in the crawl settings, the results may differ because the crawler did not wait for slow scripts to finish loading.

“JS Execution render viewport size” – if you use a non-standard browser viewport size when manually checking, results may vary.
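To get a quick idea of which resources the “Block JS files by robots.txt” option would affect, you can test resource URLs against your robots.txt rules. The sketch below uses Python's standard `urllib.robotparser`; the function name `blocked_resources`, the sample rules and the URLs are assumptions for illustration. Note that `urllib.robotparser` matches plain path prefixes and does not implement Google's `*` and `$` wildcard extensions, so treat the output as an approximation.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt, resource_urls, user_agent="Googlebot"):
    """Returns the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt and resource list.
robots_txt = """\
User-agent: *
Disallow: /static/js/
"""

resources = [
    "https://example.com/static/js/app.js",
    "https://example.com/static/css/main.css",
]

print(blocked_resources(robots_txt, resources))
```

Any script or stylesheet in the returned list is loaded by your browser during a manual check but would be unavailable to a bot that respects robots.txt.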

Changes on the website

JetOctopus saves the data as it was when the page was crawled. Make sure there have been no releases or other changes to the website since then.

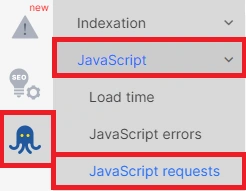

Non-200 resources during the JS crawl

If some resources returned a non-200 status code during the JS crawl, the page could be rendered incorrectly. Check the resources in the “JavaScript requests” section.
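If you have a list of the resource requests made for a page, grouping the failures by status code makes them easier to review. The sketch below is a minimal example; the record format (dictionaries with `url` and `status` keys), the function name `failed_resources` and the sample data are hypothetical and do not reflect an actual JetOctopus export format.

```python
from collections import defaultdict


def failed_resources(requests):
    """Groups the URLs of non-200 resource requests by status code."""
    by_status = defaultdict(list)
    for request in requests:
        if request["status"] != 200:
            by_status[request["status"]].append(request["url"])
    return dict(by_status)


# Hypothetical resource requests recorded while rendering one page.
requests = [
    {"url": "https://example.com/static/js/app.js", "status": 200},
    {"url": "https://example.com/static/js/reviews.js", "status": 404},
    {"url": "https://example.com/api/prices", "status": 503},
    {"url": "https://example.com/static/js/menu.js", "status": 404},
]

for status, urls in sorted(failed_resources(requests).items()):
    print(status, urls)
```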

Using dynamic rendering

If you use dynamic rendering for different user agents or bots, show some blocks only to returning or registered users, or treat first-time visitors differently, the rendering results may vary. Check the page in an incognito (private) window after clearing cookies and cache.
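One simple way to check whether the server responds differently to bots is to request the same URL with a browser user agent and a Googlebot user agent and compare the responses. The sketch below does this with the Python standard library. The function names and the `USER_AGENTS` mapping are assumptions for this example. It compares only the raw HTML, not the rendered page, and pages that embed per-request values (tokens, timestamps) will differ even without dynamic rendering. Servers that verify Googlebot by IP address or reverse DNS will not treat this request as a real bot.

```python
import hashlib
import urllib.request

USER_AGENTS = {
    "browser": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    ),
    "googlebot": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
}


def fetch_html(url, user_agent):
    """Downloads the raw HTML of url while sending the given User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8", errors="replace")


def serves_different_html(url, fetch=fetch_html):
    """Returns True if the raw HTML differs between the user agents above."""
    digests = {
        name: hashlib.sha256(fetch(url, user_agent).encode("utf-8")).hexdigest()
        for name, user_agent in USER_AGENTS.items()
    }
    return len(set(digests.values())) > 1


# Offline demonstration with a stand-in fetcher that imitates dynamic rendering.
def fake_fetch(url, user_agent):
    return "<p>prerendered</p>" if "Googlebot" in user_agent else "<div id='app'></div>"


print(serves_different_html("https://example.com/", fetch=fake_fetch))
```

To run the check against a live page, call `serves_different_html` with the page URL and the default `fetch` argument.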

In any case, treat any difference as a cause for concern. Ideally, the page should be displayed the same way to both users and bots. If you find non-200 or blocked resources during the check, resolve them so that Googlebot can render your JavaScript website correctly.