Why the “Page indexing” report in Google Search Console differs from crawl data

In this article, we explore why the number of pages in the “Page indexing” report of Google Search Console can be higher or lower than the number of pages found in a crawl. Understanding these discrepancies helps you detect and fix indexing problems promptly.

Why were fewer pages found in the crawl than in Google Search Console?

Several factors might contribute to this situation.

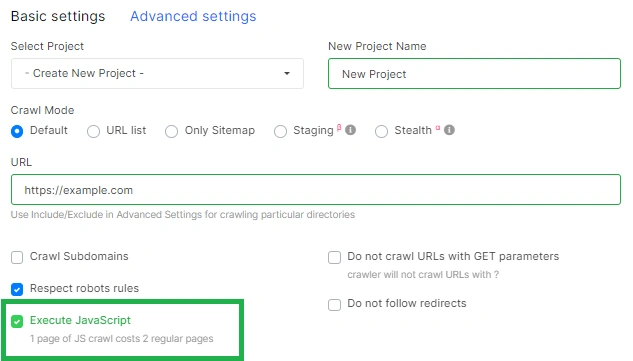

JavaScript usage. One potential reason relates to your crawl setup. If your website uses JavaScript without server-side rendering, internal links may appear only after the JavaScript is executed. By default, JetOctopus doesn’t process JavaScript, so it can miss internal links that are added by JS. To get an accurate page count, set up a JavaScript crawl. Google does process JavaScript, but discovering and indexing links from JavaScript-rendered pages can take it longer.

To run a JS crawl and see the actual number of indexable pages on your website, start a new crawl and tick the “Execute JavaScript” checkbox.
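To illustrate why a crawl without JavaScript can miss pages, here is a minimal Python sketch that compares the links in the raw HTML of a page with the links in its rendered HTML. It is not how JetOctopus works internally; the function names, the sample markup, and the `example.com` URLs are hypothetical, and the rendered HTML is assumed to come from a headless browser or a similar tool.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects absolute URLs from the href attribute of <a> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links against the page URL.
            self.links.add(urljoin(self.base_url, href))


def extract_links(html, base_url):
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links


def links_added_by_js(raw_html, rendered_html, base_url):
    """Links that exist only after JavaScript has been executed."""
    return extract_links(rendered_html, base_url) - extract_links(raw_html, base_url)


# Hypothetical sample: the menu is empty in the raw HTML and filled in by JS.
raw_html = '<a href="/about">About</a><div id="menu"></div>'
rendered_html = (
    '<a href="/about">About</a>'
    '<div id="menu"><a href="/catalog">Catalog</a></div>'
)

print(links_added_by_js(raw_html, rendered_html, "https://example.com/"))
```

Any URL returned by `links_added_by_js` is one that a crawl without JavaScript execution would not discover from this page.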

Weak internal linking. Inadequate internal linking can lead to orphaned pages, and running a sitemap scan helps identify them. If you don’t scan sitemaps, JetOctopus only detects pages that are linked in the HTML code. Search engines, however, also use sitemaps as an additional source of indexable pages.

In other words, if you ran a crawl without sitemaps, the results contain only the pages that the crawler found in the HTML code of your website. The “Page indexing” report of Google Search Console, on the other hand, includes both pages from sitemaps and pages from the HTML code of your website.
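The difference between the two sources can be sketched in Python as a simple set comparison: URLs listed in a sitemap minus URLs found by following links in the HTML. This is only an illustration under assumptions; the function names and sample URLs are hypothetical, and the sitemap is assumed to be a plain `urlset` file in the standard sitemaps.org namespace (not a sitemap index).

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the set of <loc> URLs from a urlset sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def sitemap_only_urls(sitemap_xml, crawled_urls):
    """URLs present in the sitemap but not found via links in the HTML."""
    return sitemap_urls(sitemap_xml) - set(crawled_urls)


# Hypothetical sample data.
sample_sitemap = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/</loc></url>"
    "<url><loc>https://example.com/landing-page</loc></url>"
    "</urlset>"
)
crawled = ["https://example.com/"]

print(sitemap_only_urls(sample_sitemap, crawled))
```

URLs returned by `sitemap_only_urls` are candidates for orphaned pages: a search engine can learn about them from the sitemap, but a crawl that follows only HTML links will not.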

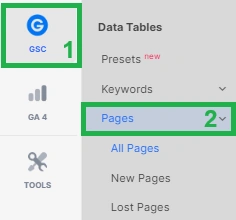

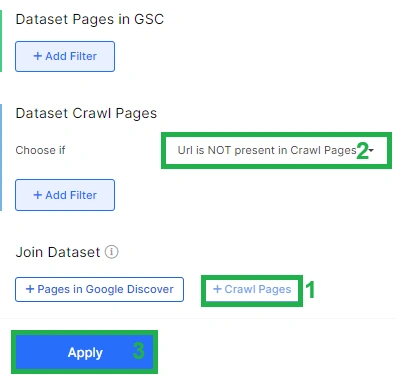

Old pages in the Google index. Older pages might remain in the Google index even though the crawler no longer finds them. To check this, cross-reference Google’s indexed pages with the crawl results. Merge the datasets by going to “Google Search Console” and selecting “Pages”.

Set the time frame. After that, add the dataset filter “Crawl Pages”: “Is not present in dataset Crawl Pages”.

Analyze the resulting pages. These are exactly the pages that exist in Google’s index but are not present in the crawl. You can also start a separate crawl of just these pages to review their content and check whether they return a 200 status code. Google often does not recrawl outdated pages frequently because of crawl budget constraints, so the index can retain both obsolete pages and pages that now redirect. If you find such pages in the list, you can manually request their removal from the index in Google Search Console.
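The “is not present in dataset” comparison described above is, in essence, a set difference. The Python sketch below shows the idea outside of any tool, assuming you have two plain lists of URLs (for example, from exports). The function names, the sample URLs, and the light normalization rules are assumptions made for this illustration, not a description of how JetOctopus merges datasets.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize(url):
    """Lowercase scheme and host, drop the fragment, use "/" for an empty path."""
    parts = urlsplit(url.strip())
    path = parts.path or "/"
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, parts.query, "")
    )


def indexed_but_not_crawled(gsc_urls, crawl_urls):
    """URLs reported by Google Search Console that are absent from the crawl."""
    crawled = {normalize(u) for u in crawl_urls}
    return sorted({normalize(u) for u in gsc_urls} - crawled)


# Hypothetical sample data.
gsc_urls = [
    "https://Example.com/old-page",
    "https://example.com/",
    "https://example.com/shop#top",
]
crawl_urls = ["https://example.com", "https://example.com/shop"]

print(indexed_but_not_crawled(gsc_urls, crawl_urls))
```

Without normalization, trivial differences such as host casing or a fragment would show up as false mismatches. Whether to also unify trailing slashes or query parameters depends on how your site treats them, so this sketch leaves them untouched.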

Why are there more indexable pages in the crawl than in the “Page indexing” report?

This scenario is critical and warrants attention, as it might signify indexing issues. Follow these steps to assess if you have indexing problems.

- Verify that the robots.txt file allows search engines to crawl all of these pages.

- Analyze search engine log data. If your site returned 5xx status codes for specific pages for more than 2 days, Google could have deindexed them, and it might not reindex them promptly even after the 5xx issues are resolved. By the time you started the crawl, the problem may already have been fixed, which is why it does not appear in the crawl results.

- Check your sitemaps. The disparity in page count could stem from Google not discovering all of your site’s pages because the sitemaps are incorrect or incomplete (it often happens that not all indexable pages are included in the sitemaps).

- Weak internal linking might also contribute to this inconsistency.
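The first two checks in the list above can be sketched in Python. This is a rough illustration under several assumptions: the function names and sample data are hypothetical, the log is assumed to be in the common “combined” access log format, Googlebot is identified only by its user agent string (which can be spoofed), and the 2-day rule is approximated by the time between the first and last 5xx response for a path. Note also that Python’s `urllib.robotparser` does not implement every matching rule Google applies (for example, wildcard patterns), so treat its verdicts as a first pass.

```python
import re
from collections import defaultdict
from datetime import datetime, timedelta
from urllib.robotparser import RobotFileParser

# Timestamp, request path, and status code from a combined-format log line.
LOG_LINE = re.compile(
    r'\[(?P<ts>[^\]]+)\] "(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3})'
)


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """URLs that the given robots.txt content disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


def long_running_5xx(log_lines, min_span=timedelta(days=2)):
    """Paths whose first and last 5xx responses to Googlebot are more than min_span apart."""
    seen = defaultdict(list)
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match or "Googlebot" not in line:
            continue
        if match["status"].startswith("5"):
            ts = datetime.strptime(match["ts"], "%d/%b/%Y:%H:%M:%S %z")
            seen[match["path"]].append(ts)
    return sorted(
        path for path, stamps in seen.items()
        if max(stamps) - min(stamps) > min_span
    )


# Hypothetical sample data.
robots_txt = "User-agent: *\nDisallow: /private/\n"
urls = ["https://example.com/private/page", "https://example.com/public"]
bot = '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
log_lines = [
    f'66.249.66.1 - - [01/Mar/2024:10:00:00 +0000] "GET /a HTTP/1.1" 503 0 {bot}',
    f'66.249.66.1 - - [04/Mar/2024:10:00:00 +0000] "GET /a HTTP/1.1" 500 0 {bot}',
    f'66.249.66.1 - - [01/Mar/2024:11:00:00 +0000] "GET /b HTTP/1.1" 503 0 {bot}',
]

print(blocked_urls(robots_txt, urls))
print(long_running_5xx(log_lines))
```

Pages flagged by either function are worth checking first: they are indexable according to the crawl, yet Google may have been unable to crawl them or may have dropped them during a period of server errors.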

By examining these scenarios, you can enhance your website’s indexing accuracy and address any issues that may hinder your content’s visibility in search engines.