How to analyze pagination with JetOctopus

Pagination makes your site more user-friendly. When products are spread across several pages, it is easier to browse the entire range, and users can always see how many products are left to view. Paginated pages also load faster than a single page that lists every product. However, when you set up pagination, you also need to make sure it is configured correctly for search robots. Below, we explain how to check pagination using JetOctopus.

How pagination works

Pagination is a way to split large amounts of content across several pages. For example, you can split a long article into several pages to organize the content and make it more readable.

Many e-commerce sites also use pagination to separate product ranges.

Whichever way you implement pagination, make sure that search bots have access to these pages. This allows them to fully crawl the additional pages of your website and use all of their content when ranking pages.

Using JetOctopus, you can check your pagination.

Checking crawlability of pagination pages

Pagination pages must be crawlable by search engines. A large part of an article may be placed on paginated pages, and pagination URLs may contain internal links to many products on your website. If you disallow search engines from crawling pagination pages, that content cannot be used for ranking, and the products linked only from those pages become harder for bots to discover.

To find out whether your website has non-crawlable paginated URLs, start a new crawl and disable the “Respect robots rules” checkbox so that the crawler also visits pages blocked by robots.txt.

More information: How to run a new crawl.

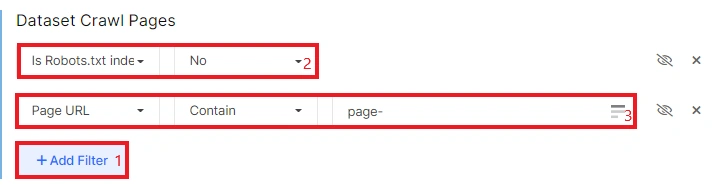

After the crawl is complete, go to the crawl results – “Pages” data table. Then configure the following filters:

- Is Robots.txt indexable – No;

- Page URL Contain – the part of the URL indicating the page number, for example “page=” or “?p=”.

Next, click the “Apply” button and check whether any pagination pages appear in the list of pages blocked by the robots.txt file. If they do, update the robots.txt file so that it no longer disallows them.
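If you want to double-check a robots.txt change locally before deploying it, a quick script can help. The sketch below uses Python's standard `urllib.robotparser` module to list pagination URLs that a robots.txt file disallows. The `page=` and `?p=` markers, the `Googlebot` user agent, and the sample rules are assumptions; replace them with your own. Note that `urllib.robotparser` only does simple prefix matching (the first matching rule wins, and `*` or `$` wildcards inside paths are not supported), so its answers can differ from Google's for rules that rely on wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical markers: use whatever string your URLs use for the page number.
PAGINATION_MARKERS = ("page=", "?p=")


def is_pagination_url(url: str) -> bool:
    """Mirrors the 'Page URL Contain' filter: True if any marker occurs in the URL."""
    return any(marker in url for marker in PAGINATION_MARKERS)


def blocked_pagination_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the pagination URLs that robots.txt disallows for the given user agent."""
    parser = RobotFileParser()
    # parse() takes the robots.txt content as a sequence of lines.
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if is_pagination_url(u) and not parser.can_fetch(user_agent, u)]


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /category-1/page=\n"
    urls = [
        "https://example.com/category-1/",
        "https://example.com/category-1/page=2",
        "https://example.com/category-2/page=3",
    ]
    print(blocked_pagination_urls(robots, urls))  # ['https://example.com/category-1/page=2']
```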

Important! If a crawl finds no pagination pages even though your website has them, there are most likely no internal links to the paginated pages. In other words, neither search bots nor the JetOctopus crawler can find links to your pagination. Add links to pagination pages using <a href="…"> tags.
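To see why a crawl might miss pagination, it helps to look at the page HTML the way a crawler does. The minimal sketch below (Python standard library only) collects `href` values from `<a>` tags and keeps those that contain a page marker. The markers and the sample HTML are hypothetical. A page number rendered only as a `<span>` with an `onclick` handler is not an `<a href>` link, so it does not appear in the result. The sketch parses raw HTML and does not execute JavaScript.

```python
from html.parser import HTMLParser

# Hypothetical markers for the page-number part of pagination URLs.
PAGINATION_MARKERS = ("page=", "?p=")


class AnchorCollector(HTMLParser):
    """Collects href values of <a> tags, the kind of link crawlers follow."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def pagination_links(html: str) -> list[str]:
    """Return <a href> targets that look like pagination URLs."""
    collector = AnchorCollector()
    collector.feed(html)
    collector.close()
    return [h for h in collector.hrefs if any(m in h for m in PAGINATION_MARKERS)]


if __name__ == "__main__":
    html = """
    <nav class="pagination">
      <a href="/category-1/page=2">2</a>
      <span onclick="loadPage(3)">3</span>
      <a href="/category-1/">Back to category</a>
    </nav>
    """
    print(pagination_links(html))  # ['/category-1/page=2']
```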

How to check whether links on pagination pages are followed

Pagination URLs can contain many internal links. That is why pagination pages should allow “follow” in meta robots (follow is the default when a page has no meta robots tag), so that search engines can follow links on these URLs and discover new pages on your website.

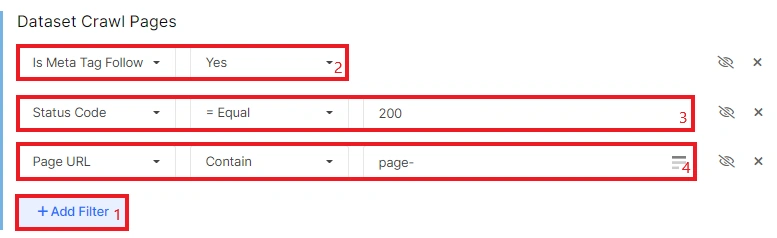

To check this, start a new crawl (or use a recent one). Once it is done, go to the data table and set the following filters:

- Is Meta Tag Follow – No;

- Status Code – = Equal – 200;

- Page URL Contain – the part of the URL indicating the page number, for example “page=” or “?p=”.

The results show pagination pages with nofollow in meta robots. We recommend using follow in meta robots on such pages instead.
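The same check can be sketched for a single page's HTML. The example below uses Python's `html.parser` to read `<meta name="robots">` directives and report whether links are followed. It treats `nofollow` and `none` as blocking link-following, and a page without a meta robots tag counts as followed, since follow is the default. It does not look at the `X-Robots-Tag` HTTP header or at crawler-specific tags such as `<meta name="googlebot">`, which can also carry nofollow.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots"> tags (case-insensitive)."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            # content is a comma-separated list such as "index, nofollow"
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def links_are_followed(html: str) -> bool:
    """True unless meta robots contains nofollow or none."""
    parser = MetaRobotsParser()
    parser.feed(html)
    parser.close()
    return not ({"nofollow", "none"} & parser.directives)


if __name__ == "__main__":
    page = '<head><meta name="robots" content="index, nofollow"></head>'
    print(links_are_followed(page))  # False
```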

How to check how much crawl budget is spent on pagination pages

Sometimes most of the crawl budget is spent on pagination pages rather than on the main URLs. Yet the main page of a listing usually contains the most recent content: for example, e-commerce sites place new products, promotional offers, and similar items on the first page. Therefore, it is better if search engines crawl the main page more often. The more pagination pages you have, the greater the risk that they take up the largest share of the crawl budget.

To check the crawl frequency and how much crawl budget is spent on pagination pages, you need to integrate your logs with JetOctopus.

More information: How to find out the frequency of scanning by search robots.

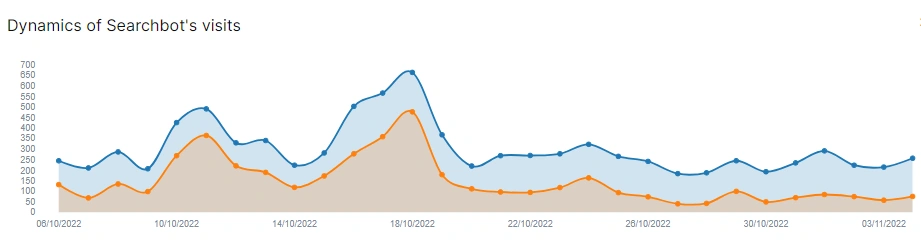

After the logs are integrated, go to the “Logs” report. Select “Raw Logs”, then choose the search engine and the period. After that, filter for pagination pages.

The chart shows how search engine crawling of pagination pages changes over time.

The data table shows which pagination pages search engines visited.

Compare this data with the data for the entire website to see what share of crawls goes to pagination pages.
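To illustrate what this comparison measures, here is a minimal sketch that computes the share of a search bot's requests that hit pagination URLs in a raw access log. It assumes the Apache/Nginx "combined" log format, identifies the bot only by a `Googlebot` substring in the user agent, and treats URLs containing `page=` or `?p=` as pagination; all three are assumptions. Matching the user-agent string alone does not prove a request came from a real search bot, so treat the result as an approximation. The sample lines are made up.

```python
import re
from collections import Counter

# Assumed "combined" log layout:
# IP - - [time] "METHOD URL PROTOCOL" STATUS SIZE "REFERER" "USER-AGENT"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<url>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)
PAGINATION_MARKERS = ("page=", "?p=")  # hypothetical markers


def pagination_crawl_share(lines, bot_token="Googlebot"):
    """Return (pagination_hits, total_hits, share) for requests whose user agent contains bot_token."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or bot_token not in match["agent"]:
            continue  # unparsable line or not the bot we are measuring
        counts["total"] += 1
        if any(m in match["url"] for m in PAGINATION_MARKERS):
            counts["pagination"] += 1
    total = counts["total"]
    share = counts["pagination"] / total if total else 0.0
    return counts["pagination"], total, share


if __name__ == "__main__":
    ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
    sample = [
        f'192.0.2.10 - - [10/Mar/2024:10:00:00 +0000] "GET /category-1/ HTTP/1.1" 200 5120 "-" "{ua}"',
        f'192.0.2.10 - - [10/Mar/2024:10:00:05 +0000] "GET /category-1/page=2 HTTP/1.1" 200 4800 "-" "{ua}"',
        '192.0.2.99 - - [10/Mar/2024:10:01:00 +0000] "GET /category-1/page=3 HTTP/1.1" 200 4700 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    ]
    print(pagination_crawl_share(sample))  # (1, 2, 0.5)
```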

How to check <link rel="next" href="…"> and <link rel="prev" href="…">

Google previously used <link rel="next" href="…"> and <link rel="prev" href="…"> to identify pagination pages, but it no longer supports these tags. However, other search engines still support them, so it is worth checking that the prev and next tags are used correctly. You can do this with JetOctopus.

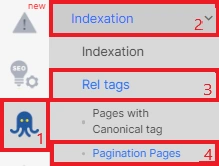

Go to the crawl results and select the “Indexing” – “Rel tags” report. Next, select “Pagination Pages”.

You will be taken to a data table that contains all pages with prev and next tags.

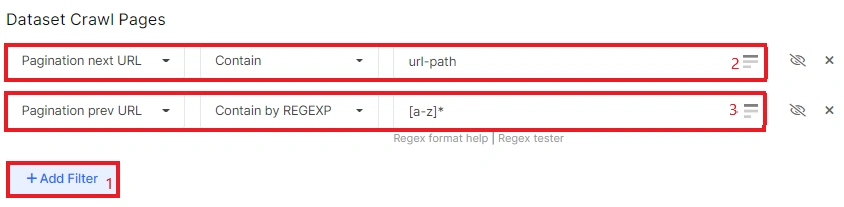

You can also filter for pages that contain prev or next tags using the corresponding filters: “Pagination next URL” and “Pagination prev URL”.
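If you want to spot-check a single page outside the report, the sketch below parses the `prev` and `next` `<link>` tags with Python's `html.parser`, resolves them to absolute URLs, and compares them with the URLs you expect. The `expected_neighbors` helper and its URL scheme (page 1 is the category URL itself, page N is the category URL followed by `page=N`) are hypothetical, modeled on the example URLs in this article; adapt them to your site.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class RelLinkParser(HTMLParser):
    """Collects the href of <link rel="prev"> and <link rel="next"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.found: dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = {name: (value or "") for name, value in attrs}
        # rel can contain several space-separated tokens.
        for token in attributes.get("rel", "").lower().split():
            if token in ("prev", "next") and attributes.get("href"):
                self.found[token] = attributes["href"]


def expected_neighbors(category_url, page, last_page):
    """Hypothetical scheme: page 1 is category_url, page N is category_url + 'page=N'."""
    def url_for(n):
        return category_url if n == 1 else f"{category_url}page={n}"

    prev_url = url_for(page - 1) if page > 1 else None
    next_url = url_for(page + 1) if page < last_page else None
    return prev_url, next_url


def check_rel_tags(page_url, html, expected_prev, expected_next):
    """Compare the page's prev/next tags (as absolute URLs) with the expected URLs."""
    parser = RelLinkParser()
    parser.feed(html)
    parser.close()
    found = {rel: urljoin(page_url, href) for rel, href in parser.found.items()}
    problems = []
    for rel, expected in (("prev", expected_prev), ("next", expected_next)):
        if found.get(rel) != expected:
            problems.append(f"{rel}: expected {expected}, found {found.get(rel)}")
    return problems


if __name__ == "__main__":
    category = "https://example.com/category-1/"
    html = '<head><link rel="prev" href="/category-1/"><link rel="next" href="/category-1/page=3"></head>'
    prev_url, next_url = expected_neighbors(category, page=2, last_page=5)
    print(check_rel_tags(category + "page=2", html, prev_url, next_url))  # []
```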

How to check status codes of pagination URLs

It is important that pagination pages return a 200 status code both to the JetOctopus crawler and to search engines.

This applies only to pagination pages that actually exist. For example, if a user mistakenly enters https://example.com/category-1/page=111111 instead of https://example.com/category-1/page=11, the server should not return a 200 status code for that nonexistent page.

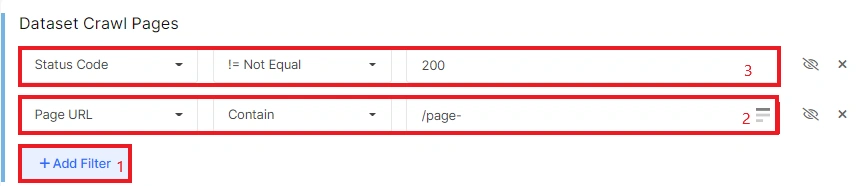

To check the status codes of pagination pages, go to the crawl results and filter for pagination URLs. Then add the filter “Status code” – “= Not Equal” – “200”.

You can perform the same check in the logs.

If you find pagination pages that return non-200 status codes, identify the cause and fix it.
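The two rules above (existing pagination pages return 200, nonexistent ones must not) can be sketched as a simple check over exported pairs of URL and status code, for example from a crawl or log export. The `page=(\d+)` pattern, the `last_page_number` helper, and deriving the last page from the total number of products and products per page are assumptions for illustration. Page 1 is skipped because this article recommends redirecting it to the main URL.

```python
import math
import re

# Assumed pagination pattern, as in https://example.com/category-1/page=11
PAGE_NUMBER = re.compile(r"page=(\d+)")


def last_page_number(total_items, per_page):
    """Last existing page when total_items are shown per_page at a time (at least 1)."""
    return max(1, math.ceil(total_items / per_page))


def status_problems(records, last_page):
    """records: iterable of (url, status_code) pairs.

    Flags existing pagination pages that do not return 200 and
    nonexistent pagination pages (beyond last_page) that do return 200.
    """
    problems = []
    for url, status in records:
        match = PAGE_NUMBER.search(url)
        if not match:
            continue  # not a pagination URL
        page = int(match.group(1))
        if page == 1:
            continue  # page 1 should redirect to the main URL instead
        exists = 2 <= page <= last_page
        if exists and status != 200:
            problems.append((url, status, "existing page does not return 200"))
        elif not exists and status == 200:
            problems.append((url, status, "nonexistent page returns 200"))
    return problems


if __name__ == "__main__":
    last = last_page_number(total_items=215, per_page=20)  # 11
    records = [
        ("https://example.com/category-1/page=11", 200),
        ("https://example.com/category-1/page=111111", 200),
        ("https://example.com/category-1/page=5", 500),
        ("https://example.com/category-1/", 200),
    ]
    for problem in status_problems(records, last):
        print(problem)
```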

What else to check on pagination pages

Pagination pages should be subject to most of the same checks as all other pages on your website.

For a list of things to check, see: Health of your website. Quick check.

You can also create a segment with pagination URLs. You will then be able to view data about pagination pages in all reports, which makes it easy to see the data visualized.

Below are some more ideas for checks on pagination pages.

- URL of the first page. Check the crawl results and logs for page=1. Such a page is a complete copy of the main page, so set up a 301 redirect from https://example.com/category-1/page=1/ to https://example.com/category-1/.

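- Canonical. Check whether the pagination page contains a canonical tag and which type it is: self-canonical or main-page canonical. Google recommends giving each paginated page its own canonical URL rather than pointing all of them to the first page.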

- Number of internal links. Check how many internal links each pagination page contains. Keep this number below 1,000; otherwise, search bots may not process all of the links.

- Pagination pages in SERP. Make sure that pagination pages do not appear in search results. You can check this by connecting Google Search Console to JetOctopus.
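Some of the checks in this list can be scripted for a quick spot check. The minimal sketch below covers the first three: it computes the 301 target for a `page=1` URL, classifies the canonical tag as self-canonical, main-page canonical, other, or missing, and counts internal links against the 1,000 threshold. It uses only Python's standard library; the `page=1/` URL pattern and the function names are hypothetical, and a link counts as internal when it resolves to the same host as the page.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Assumed URL pattern for the first page, e.g. https://example.com/category-1/page=1/
FIRST_PAGE = re.compile(r"page=1/?$")
MAX_INTERNAL_LINKS = 1000  # threshold from the checklist above


def first_page_redirect_target(url):
    """Return the URL a page=1 URL should 301-redirect to, or None for other URLs."""
    if not FIRST_PAGE.search(url):
        return None
    return FIRST_PAGE.sub("", url)


class PageParser(HTMLParser):
    """Collects the canonical URL and the href of every <a> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical = None
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "link" and "canonical" in attributes.get("rel", "").lower().split():
            self.canonical = attributes.get("href") or None
        elif tag == "a" and attributes.get("href"):
            self.hrefs.append(attributes["href"])


def audit_pagination_page(page_url, main_url, html):
    parser = PageParser()
    parser.feed(html)
    parser.close()

    if parser.canonical is None:
        canonical_type = "missing"
    else:
        canonical = urljoin(page_url, parser.canonical)
        if canonical == page_url:
            canonical_type = "self-canonical"
        elif canonical == main_url:
            canonical_type = "main-page canonical"
        else:
            canonical_type = "other"

    host = urlparse(page_url).netloc
    internal = [h for h in parser.hrefs if urlparse(urljoin(page_url, h)).netloc == host]
    return {
        "canonical": canonical_type,
        "internal_links": len(internal),
        "too_many_links": len(internal) > MAX_INTERNAL_LINKS,
    }


if __name__ == "__main__":
    print(first_page_redirect_target("https://example.com/category-1/page=1/"))
    html = (
        '<link rel="canonical" href="/category-1/page=2">'
        '<a href="/product-1">A</a><a href="https://other.example/">B</a>'
    )
    print(audit_pagination_page("https://example.com/category-1/page=2", "https://example.com/category-1/", html))
```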