How to detect pages with multiple meta robots

Meta robots tags tell search engines how to treat a webpage: whether to index it and whether to display it in search results. Implementing them correctly is essential for effective search engine optimization. In this article, we explain what meta robots tags do, what happens when a page contains several of them, and how to find and fix the affected pages. Following these steps helps ensure that your website is indexed the way you intend.

What are meta robots?

Meta robots are HTML tags placed in the <head> section of a webpage that inform search robots how to treat the page in terms of indexing and displaying it in search results.

Meta robots can be defined for all search robots or for a specific bot such as Googlebot. For example, consider the following rule:

<meta name="robots" content="index,follow" />

This rule instructs all search engines, including Google and Bing, to index the page and display it in search results (and to follow links on the page).

However, it is also possible to allow or prohibit individual bots from indexing a page. Here’s an example:

<meta name="googlebot" content="noindex">

This rule specifically prevents Googlebot from indexing the page.

Multiple rules can be set for multiple bots. For instance, you can allow indexing for Googlebot and restrict other bots from creating a snippet, or allow indexing for Googlebot-News and prohibit it for others. Other rules, such as nosnippet or max-snippet, can also be specified in the meta robots tag.

How many meta robots can be on a page?

A page can have multiple meta robots tags: tags for all bots, tags for individual bots, and tags that carry different instructions. You can spread the rules across several meta tags or put them in a single tag as a comma-separated list.

What does this mean in practice? For example, the following pair of meta robots tags is valid:

<meta name="robots" content="index">

<meta name="robots" content="follow">

Together, these two tags allow search engines to index the page and to follow the links on it.

Another commonly used option is:

<meta name="robots" content="index,follow">

Both approaches are acceptable: you can use a single meta robots tag with a comma-separated list or several meta robots tags, as needed.
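The equivalence of the two forms can be checked with a minimal sketch that uses Python's standard `html.parser` module. The class and function names (`RobotsMetaParser`, `robots_directives`) are hypothetical helpers written for this illustration; they are not part of any crawler or search engine.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        # Attribute values can be None, so fall back to an empty string.
        if (attributes.get("name") or "").lower() != "robots":
            return
        content = attributes.get("content") or ""
        for directive in content.split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def robots_directives(html):
    """Return the set of directives found in all generic meta robots tags."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


two_tags = '<head><meta name="robots" content="index"><meta name="robots" content="follow"></head>'
one_tag = '<head><meta name="robots" content="index,follow"></head>'

# Both forms yield the same set of directives.
print(robots_directives(two_tags) == robots_directives(one_tag))  # True
```

The sketch only looks at tags named `robots`; tags aimed at a specific bot are ignored here.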

However, note that search bots can only access these rules if page crawling is not disallowed in the robots.txt file.
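As a rough illustration of this dependency, the sketch below uses Python's standard `urllib.robotparser` module to test whether a URL may be crawled at all. The robots.txt content, the example.com URLs, and the helper name `can_read_meta_robots` are assumptions made for the example. Python's parser also does not necessarily match every detail of how Googlebot interprets robots.txt, so treat the result as an approximation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
robots_txt = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())


def can_read_meta_robots(url, user_agent="Googlebot"):
    """A bot can only see meta robots tags on pages it is allowed to crawl."""
    return parser.can_fetch(user_agent, url)


# Crawling is disallowed, so meta robots tags on this page are never read.
print(can_read_meta_robots("https://example.com/private/page.html"))  # False
# Crawling is allowed, so the bot can read the tags on this page.
print(can_read_meta_robots("https://example.com/blog/post.html"))  # True
```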

Why is it important to check the number of meta robots on a page?

If a page contains multiple meta robots tags with conflicting rules, Google applies the most restrictive one. Consequently, if the correct meta robots tag with “index, follow” is present alongside an incorrect one with “noindex, nofollow”, Google treats the page as non-indexable and excludes it from the search index.

Similarly, if one meta robots tag is defined for all bots and another is specified for Google, Googlebot will combine all the prohibition rules and apply them. For example, suppose the following meta robots tags exist on a page:

<meta name="robots" content="noindex,follow">

<meta name="googlebot" content="index,nofollow">

With these rules, you want to allow Googlebot to index the page, right?

But in fact, Googlebot will consider the most restrictive rule from the general specification and the most restrictive rule from its own specification, ultimately using “noindex, nofollow” for that page.
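The combination described above can be modelled with a short sketch. It is a simplified illustration of the "most restrictive rule wins" behavior as this article describes it, limited to the index/follow directives; it is not Google's actual implementation. The function name `effective_googlebot_rules` is hypothetical.

```python
def effective_googlebot_rules(tags):
    """Combine meta robots tags the way this article describes for Googlebot.

    `tags` is a list of (name, content) pairs, one per meta tag on the page.
    Only the index/follow directives are modelled in this simplified sketch.
    """
    directives = set()
    for name, content in tags:
        if name.lower() not in ("robots", "googlebot"):
            continue  # rules aimed at other bots do not apply to Googlebot
        for directive in content.split(","):
            directive = directive.strip().lower()
            if directive:
                directives.add(directive)

    # "none" is shorthand for "noindex, nofollow".
    if "none" in directives:
        directives.update({"noindex", "nofollow"})

    # A restrictive directive overrides a permissive one.
    indexing = "noindex" if "noindex" in directives else "index"
    following = "nofollow" if "nofollow" in directives else "follow"
    return f"{indexing}, {following}"


tags = [("robots", "noindex,follow"), ("googlebot", "index,nofollow")]
print(effective_googlebot_rules(tags))  # noindex, nofollow
```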

Therefore, it is strongly recommended to check the number of meta robots tags on a page and review the instructions specified for each bot to ensure accurate and desired behavior.

How to find pages with multiple meta robots tags

If you typically use a single meta robots tag and list all the rules separated by commas, it’s important to check whether any additional meta robots tags have appeared by mistake. You can use Chrome DevTools to inspect each page manually, but checking an entire website this way is time-consuming. Alternatively, you can rely on the JetOctopus crawler to scan your whole website.

Here’s a step-by-step guide on how to find pages with multiple meta robots tags.
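For a handful of pages, the same check can also be scripted. The sketch below counts `robots` and `googlebot` meta tags in HTML you have already downloaded, then lists the pages with more than one generic tag. The names `MetaRobotsCounter`, `count_meta_robots`, and `find_pages_with_multiple`, and the example.com pages, are hypothetical; this is not how JetOctopus works internally.

```python
from collections import Counter
from html.parser import HTMLParser


class MetaRobotsCounter(HTMLParser):
    """Counts <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            name = (dict(attrs).get("name") or "").lower()
            if name in ("robots", "googlebot"):
                self.counts[name] += 1


def count_meta_robots(html):
    """Return a Counter with the number of tags per bot name."""
    counter = MetaRobotsCounter()
    counter.feed(html)
    return counter.counts


def find_pages_with_multiple(pages):
    """Return (url, count) pairs with more than one generic tag, highest first."""
    results = [(url, count_meta_robots(html)["robots"]) for url, html in pages.items()]
    flagged = [(url, count) for url, count in results if count > 1]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical pages, keyed by URL, with their already-downloaded HTML.
pages = {
    "https://example.com/a": '<head><meta name="robots" content="index,follow"></head>',
    "https://example.com/b": (
        '<head><meta name="robots" content="index">'
        '<meta name="robots" content="noindex">'
        '<meta name="googlebot" content="nofollow"></head>'
    ),
}

print(find_pages_with_multiple(pages))  # [('https://example.com/b', 2)]
```

Summing the `robots` and `googlebot` counts gives the combined total of indexing rules that apply to Googlebot on a page.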

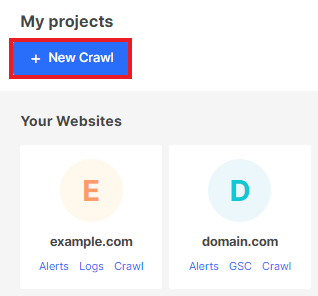

Step 1. Start the crawl.

You can find more information on configuring crawls in the article: How to configure a crawl of your website.

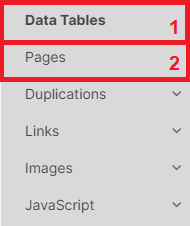

Step 2. Navigate to the crawl results and select the “Pages” data table.

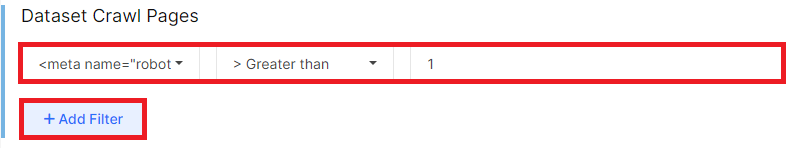

Next, click on the “+Add filter” button and choose “<meta name="robots"> count” – “> Greater than” – “1”.

You can also count the number of meta robots specific to Googlebot or the total number of indexing rules for all bots and Googlebot combined. To do this, select “<meta name="googlebot"> count” or “<meta name="googlebot|robots"> count” in the filters, respectively.

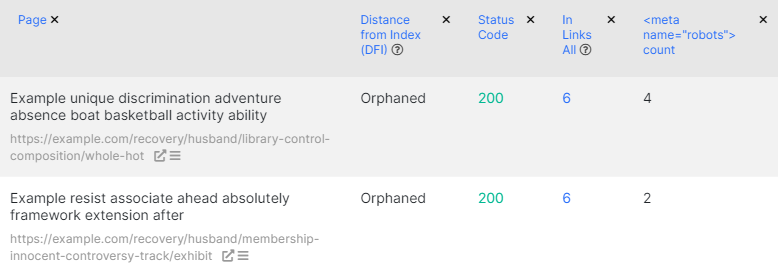

Step 3. Analyze the resulting data table to identify pages with multiple meta robots tags.

The URL column displays the pages where two or more meta robots tags were found.

In the “<meta name="robots"> count” column, you can find the total number of meta robots on each page. By clicking on the column name, you can sort the numbers in either ascending or descending order.

Step 4. Fix any incorrect meta robots tags. This is the crucial step. If you intend to have only one meta robots tag with the rules separated by commas, make sure that any additional meta robots tags are removed.

If you use multiple meta robots tags, double-check that you don’t have more than necessary. Also, verify that using multiple rules for different bots does not prevent Googlebot from indexing the page. Remember, Googlebot combines all the restrictive rules that apply to it.

By following these steps, you can identify and fix issues caused by multiple meta robots tags and make sure your pages are indexed as intended.

Conclusion

Keeping meta robots tags under control is essential for proper indexing. The steps above let you identify pages with multiple meta robots tags and correct them. Remember that Googlebot applies the most restrictive rule it finds, so a single stray tag can remove a page from search results.